url (string, length 58-61) | repository_url (string, 1 class) | labels_url (string, length 72-75) | comments_url (string, length 67-70) | events_url (string, length 65-68) | html_url (string, length 46-51) | id (int64, 599M-1.83B) | node_id (string, length 18-32) | number (int64, 1-6.09k) | title (string, length 1-290) | labels (list) | state (string, 2 classes) | locked (bool, 1 class) | milestone (dict) | comments (int64, 0-54) | created_at (string, length 20) | updated_at (string, length 20) | closed_at (string, length 20, nullable) | active_lock_reason (null) | body (string, length 0-228k, nullable) | reactions (dict) | timeline_url (string, length 67-70) | performed_via_github_app (null) | state_reason (string, 3 classes) | draft (bool, 2 classes) | pull_request (dict) | is_pull_request (bool, 2 classes) | comments_text (sequence) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/4553 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4553/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4553/comments | https://api.github.com/repos/huggingface/datasets/issues/4553/events | https://github.com/huggingface/datasets/pull/4553 | 1,282,779,560 | PR_kwDODunzps46Q1q7 | 4,553 | Stop dropping columns in to_tf_dataset() before we load batches | [] | closed | false | null | 4 | 2022-06-23T18:21:05Z | 2022-07-04T19:00:13Z | 2022-07-04T18:49:01Z | null | `to_tf_dataset()` dropped unnecessary columns before loading batches from the dataset, but this is causing problems when using a transform, because the dropped columns might be needed to compute the transform. Since there's no real way to check which columns the transform might need, we skip dropping columns and instead drop keys from the batch after we load it.

cc @amyeroberts and https://github.com/huggingface/notebooks/pull/202 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 1,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4553/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4553/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4553.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4553",

"merged_at": "2022-07-04T18:49:01Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4553.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4553"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._",

"@lhoestq Rebasing fixed the test failures, so this should be ready to review now! There's still a failure on Win but it seems unrelated.",

"Gentle ping @lhoestq ! This is a simple fix (dropping columns after loading a batch from the dataset rather than with `.remove_columns()` to make sure we don't break transforms), and tests are green so we're ready for review!",

"@lhoestq Test is in!"

] |

https://api.github.com/repos/huggingface/datasets/issues/5024 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5024/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5024/comments | https://api.github.com/repos/huggingface/datasets/issues/5024/events | https://github.com/huggingface/datasets/pull/5024 | 1,385,947,624 | PR_kwDODunzps4_mZ3J | 5,024 | Fix string features of xcsr dataset | [

{

"color": "0e8a16",

"default": false,

"description": "Contribution to a dataset script",

"id": 4564477500,

"name": "dataset contribution",

"node_id": "LA_kwDODunzps8AAAABEBBmPA",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20contribution"

}

] | closed | false | null | 1 | 2022-09-26T11:55:36Z | 2022-09-28T07:56:18Z | 2022-09-28T07:54:19Z | null | This PR fixes string features of `xcsr` dataset to avoid character splitting.

Fix #5023.

CC: @yangxqiao, @yuchenlin | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5024/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5024/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5024.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5024",

"merged_at": "2022-09-28T07:54:19Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5024.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5024"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._"

] |

https://api.github.com/repos/huggingface/datasets/issues/5804 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5804/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5804/comments | https://api.github.com/repos/huggingface/datasets/issues/5804/events | https://github.com/huggingface/datasets/pull/5804 | 1,688,285,666 | PR_kwDODunzps5PX0Dk | 5,804 | Set dev version | [] | closed | false | null | 3 | 2023-04-28T10:10:01Z | 2023-04-28T10:18:51Z | 2023-04-28T10:10:29Z | null | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5804/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5804/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5804.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5804",

"merged_at": "2023-04-28T10:10:29Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5804.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5804"

} | true | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_5804). All of your documentation changes will be reflected on that endpoint.",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006448 / 0.011353 (-0.004905) | 0.004440 / 0.011008 (-0.006568) | 0.097837 / 0.038508 (0.059328) | 0.027754 / 0.023109 (0.004645) | 0.306462 / 0.275898 (0.030564) | 0.332454 / 0.323480 (0.008975) | 0.004984 / 0.007986 (-0.003001) | 0.004703 / 0.004328 (0.000375) | 0.075213 / 0.004250 (0.070962) | 0.036524 / 0.037052 (-0.000529) | 0.310149 / 0.258489 (0.051659) | 0.346392 / 0.293841 (0.052552) | 0.031012 / 0.128546 (-0.097534) | 0.011598 / 0.075646 (-0.064049) | 0.323066 / 0.419271 (-0.096206) | 0.042945 / 0.043533 (-0.000588) | 0.302286 / 0.255139 (0.047147) | 0.327813 / 0.283200 (0.044614) | 0.092540 / 0.141683 (-0.049143) | 1.532893 / 1.452155 (0.080739) | 1.556676 / 1.492716 (0.063960) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.195126 / 0.018006 (0.177120) | 0.399623 / 0.000490 (0.399133) | 0.003176 / 0.000200 (0.002976) | 0.000068 / 0.000054 (0.000014) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.023612 / 0.037411 (-0.013799) | 0.097794 / 0.014526 (0.083268) | 0.104665 / 0.176557 (-0.071891) | 0.167145 / 0.737135 (-0.569990) | 0.108769 / 0.296338 (-0.187570) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.437818 / 0.215209 (0.222608) | 4.354896 / 2.077655 (2.277242) | 2.092832 / 1.504120 (0.588712) | 1.957630 / 1.541195 (0.416435) | 2.033135 / 1.468490 (0.564645) | 0.702316 / 4.584777 (-3.882461) | 3.448035 / 3.745712 (-0.297678) | 1.906762 / 5.269862 (-3.363100) | 1.253274 / 4.565676 (-3.312402) | 0.082486 / 0.424275 (-0.341789) | 0.012442 / 0.007607 (0.004835) | 0.532096 / 0.226044 (0.306052) | 5.366580 / 2.268929 (3.097652) | 2.441904 / 55.444624 (-53.002720) | 2.112116 / 6.876477 (-4.764361) | 2.185471 / 2.142072 (0.043398) | 0.797905 / 4.805227 (-4.007322) | 0.149811 / 6.500664 
(-6.350853) | 0.066507 / 0.075469 (-0.008962) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.206300 / 1.841788 (-0.635487) | 13.620851 / 8.074308 (5.546543) | 14.190666 / 10.191392 (3.999274) | 0.142343 / 0.680424 (-0.538081) | 0.016867 / 0.534201 (-0.517334) | 0.381557 / 0.579283 (-0.197726) | 0.373935 / 0.434364 (-0.060429) | 0.437856 / 0.540337 (-0.102481) | 0.525235 / 1.386936 (-0.861701) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006598 / 0.011353 (-0.004755) | 0.004487 / 
0.011008 (-0.006522) | 0.077582 / 0.038508 (0.039073) | 0.028008 / 0.023109 (0.004899) | 0.341602 / 0.275898 (0.065704) | 0.377105 / 0.323480 (0.053625) | 0.004999 / 0.007986 (-0.002986) | 0.004791 / 0.004328 (0.000462) | 0.076418 / 0.004250 (0.072167) | 0.038347 / 0.037052 (0.001295) | 0.343196 / 0.258489 (0.084707) | 0.382459 / 0.293841 (0.088618) | 0.030597 / 0.128546 (-0.097950) | 0.011579 / 0.075646 (-0.064067) | 0.085876 / 0.419271 (-0.333396) | 0.043241 / 0.043533 (-0.000292) | 0.343754 / 0.255139 (0.088615) | 0.380689 / 0.283200 (0.097489) | 0.096015 / 0.141683 (-0.045668) | 1.464419 / 1.452155 (0.012264) | 1.574010 / 1.492716 (0.081294) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.156433 / 0.018006 (0.138427) | 0.403179 / 0.000490 (0.402690) | 0.002415 / 0.000200 (0.002215) | 0.000082 / 0.000054 (0.000027) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.024946 / 0.037411 (-0.012465) | 0.100568 / 0.014526 (0.086042) | 0.106440 / 0.176557 (-0.070117) | 0.158457 / 0.737135 (-0.578678) | 0.110774 / 0.296338 (-0.185564) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.434734 / 0.215209 (0.219525) | 4.343874 / 2.077655 (2.266220) | 2.059759 / 1.504120 (0.555639) | 1.855124 / 1.541195 (0.313930) | 1.908567 / 1.468490 (0.440077) | 0.695283 / 4.584777 (-3.889494) | 3.347724 / 3.745712 (-0.397988) | 2.979498 / 5.269862 (-2.290364) | 1.532040 / 4.565676 (-3.033636) | 0.083021 / 0.424275 (-0.341254) | 0.012522 / 0.007607 (0.004915) | 0.540934 / 0.226044 (0.314890) | 5.385690 / 2.268929 (3.116762) | 2.507409 / 55.444624 (-52.937216) | 2.160537 / 6.876477 (-4.715939) | 2.269195 / 2.142072 (0.127123) | 0.804718 / 4.805227 (-4.000509) | 0.152432 / 6.500664 (-6.348232) | 0.068783 / 0.075469 (-0.006686) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.294698 / 1.841788 (-0.547090) | 14.152792 / 8.074308 (6.078484) | 14.233132 / 10.191392 (4.041740) | 0.143655 / 0.680424 (-0.536768) | 0.016844 / 0.534201 (-0.517357) | 0.380246 / 0.579283 (-0.199037) | 0.381730 / 0.434364 (-0.052633) | 0.456838 / 0.540337 (-0.083499) | 0.543677 / 1.386936 (-0.843259) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008586 / 0.011353 (-0.002767) | 0.005886 / 0.011008 (-0.005122) | 0.114522 / 0.038508 (0.076014) | 0.040966 / 0.023109 (0.017857) | 0.366655 / 0.275898 (0.090757) | 0.408765 / 0.323480 (0.085285) | 0.006822 / 0.007986 (-0.001164) | 0.004508 / 0.004328 (0.000180) | 0.084715 / 0.004250 (0.080465) | 0.054007 / 0.037052 (0.016954) | 0.380500 / 0.258489 (0.122011) | 0.410377 / 0.293841 (0.116536) | 0.041040 / 0.128546 (-0.087507) | 0.013940 / 0.075646 (-0.061707) | 0.398456 / 0.419271 (-0.020816) | 0.059315 / 0.043533 (0.015782) | 0.353640 / 0.255139 (0.098501) | 0.388682 / 0.283200 (0.105482) | 0.121744 / 0.141683 (-0.019939) | 1.729306 / 1.452155 (0.277151) | 1.824768 / 1.492716 (0.332052) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.228806 / 0.018006 (0.210800) | 0.492790 / 0.000490 (0.492300) | 0.010815 / 0.000200 (0.010615) | 0.000372 / 0.000054 (0.000318) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.031750 / 0.037411 (-0.005662) | 0.127160 / 0.014526 (0.112635) | 0.136717 / 0.176557 (-0.039839) | 0.205590 / 0.737135 (-0.531545) | 0.142596 / 0.296338 (-0.153742) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.486419 / 0.215209 (0.271210) | 4.858572 / 2.077655 (2.780918) | 2.173867 / 1.504120 (0.669747) | 1.934619 / 1.541195 (0.393424) | 2.104185 / 1.468490 (0.635695) | 0.837913 / 4.584777 (-3.746864) | 4.552192 / 3.745712 (0.806480) | 2.565040 / 5.269862 (-2.704822) | 1.808499 / 4.565676 (-2.757178) | 0.103283 / 0.424275 (-0.320993) | 0.015040 / 0.007607 (0.007433) | 0.602325 / 0.226044 (0.376281) | 6.038655 / 2.268929 (3.769727) | 2.759789 / 55.444624 (-52.684835) | 2.330990 / 6.876477 (-4.545487) | 2.404111 / 2.142072 (0.262038) | 1.011637 / 4.805227 (-3.793590) | 0.202142 / 6.500664 
(-6.298522) | 0.079496 / 0.075469 (0.004026) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.429543 / 1.841788 (-0.412245) | 18.052409 / 8.074308 (9.978101) | 16.989154 / 10.191392 (6.797762) | 0.208981 / 0.680424 (-0.471443) | 0.020490 / 0.534201 (-0.513711) | 0.502746 / 0.579283 (-0.076537) | 0.491769 / 0.434364 (0.057405) | 0.581970 / 0.540337 (0.041632) | 0.695816 / 1.386936 (-0.691120) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008449 / 0.011353 (-0.002904) | 0.006633 / 
0.011008 (-0.004375) | 0.088638 / 0.038508 (0.050130) | 0.040013 / 0.023109 (0.016904) | 0.413108 / 0.275898 (0.137210) | 0.446310 / 0.323480 (0.122830) | 0.006515 / 0.007986 (-0.001471) | 0.006223 / 0.004328 (0.001894) | 0.089823 / 0.004250 (0.085573) | 0.052029 / 0.037052 (0.014977) | 0.407263 / 0.258489 (0.148774) | 0.449416 / 0.293841 (0.155576) | 0.041810 / 0.128546 (-0.086736) | 0.014604 / 0.075646 (-0.061042) | 0.103728 / 0.419271 (-0.315543) | 0.058212 / 0.043533 (0.014679) | 0.408936 / 0.255139 (0.153797) | 0.436727 / 0.283200 (0.153528) | 0.124344 / 0.141683 (-0.017339) | 1.752112 / 1.452155 (0.299957) | 1.859104 / 1.492716 (0.366387) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.231172 / 0.018006 (0.213166) | 0.502974 / 0.000490 (0.502485) | 0.005586 / 0.000200 (0.005386) | 0.000137 / 0.000054 (0.000082) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.034097 / 0.037411 (-0.003314) | 0.133780 / 0.014526 (0.119254) | 0.142321 / 0.176557 (-0.034236) | 0.199807 / 0.737135 (-0.537329) | 0.150073 / 0.296338 (-0.146266) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.515658 / 0.215209 (0.300449) | 5.129783 / 2.077655 (3.052129) | 2.534767 / 1.504120 (1.030648) | 2.352468 / 1.541195 (0.811274) | 2.430708 / 1.468490 (0.962218) | 0.850087 / 4.584777 (-3.734690) | 4.529622 / 3.745712 (0.783910) | 2.451986 / 5.269862 (-2.817876) | 1.569568 / 4.565676 (-2.996109) | 0.102907 / 0.424275 (-0.321368) | 0.014420 / 0.007607 (0.006813) | 0.635124 / 0.226044 (0.409080) | 6.260496 / 2.268929 (3.991568) | 3.094984 / 55.444624 (-52.349640) | 2.780629 / 6.876477 (-4.095847) | 2.947620 / 2.142072 (0.805548) | 1.002397 / 4.805227 (-3.802830) | 0.200502 / 6.500664 (-6.300162) | 0.076577 / 0.075469 (0.001107) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.505958 / 1.841788 (-0.335829) | 18.364986 / 8.074308 (10.290678) | 16.707214 / 10.191392 (6.515822) | 0.210976 / 0.680424 (-0.469447) | 0.022077 / 0.534201 (-0.512124) | 0.516174 / 0.579283 (-0.063109) | 0.502469 / 0.434364 (0.068105) | 0.626790 / 0.540337 (0.086453) | 0.747230 / 1.386936 (-0.639706) |\n\n</details>\n</details>\n\n\n"

] |

https://api.github.com/repos/huggingface/datasets/issues/1124 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1124/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1124/comments | https://api.github.com/repos/huggingface/datasets/issues/1124/events | https://github.com/huggingface/datasets/pull/1124 | 757,186,983 | MDExOlB1bGxSZXF1ZXN0NTMyNjA0NzY3 | 1,124 | Add Xitsonga Ner | [] | closed | false | null | 1 | 2020-12-04T15:27:44Z | 2020-12-06T18:31:35Z | 2020-12-06T18:31:35Z | null | Clean Xitsonga Ner PR | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1124/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1124/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1124.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1124",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/1124.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1124"

} | true | [

"It looks like this PR includes changes to many files other than the ones related to Xitsonga NER.\r\n\r\nCould you create another branch and another PR, please?"

] |

https://api.github.com/repos/huggingface/datasets/issues/952 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/952/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/952/comments | https://api.github.com/repos/huggingface/datasets/issues/952/events | https://github.com/huggingface/datasets/pull/952 | 754,357,270 | MDExOlB1bGxSZXF1ZXN0NTMwMjcxMTQz | 952 | Add orange sum | [] | closed | false | null | 0 | 2020-12-01T12:33:34Z | 2020-12-01T15:44:00Z | 2020-12-01T15:44:00Z | null | Add OrangeSum, a French abstractive summarization dataset.

Paper: [BARThez: a Skilled Pretrained French Sequence-to-Sequence Model](https://arxiv.org/abs/2010.12321) | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/952/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/952/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/952.diff",

"html_url": "https://github.com/huggingface/datasets/pull/952",

"merged_at": "2020-12-01T15:44:00Z",

"patch_url": "https://github.com/huggingface/datasets/pull/952.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/952"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/4597 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4597/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4597/comments | https://api.github.com/repos/huggingface/datasets/issues/4597/events | https://github.com/huggingface/datasets/issues/4597 | 1,288,672,007 | I_kwDODunzps5Mz5MH | 4,597 | Streaming issue for financial_phrasebank | [

{

"color": "8B51EF",

"default": false,

"description": "",

"id": 4069435429,

"name": "hosted-on-google-drive",

"node_id": "LA_kwDODunzps7yjqgl",

"url": "https://api.github.com/repos/huggingface/datasets/labels/hosted-on-google-drive"

}

] | closed | false | null | 3 | 2022-06-29T12:45:43Z | 2022-07-01T09:29:36Z | 2022-07-01T09:29:36Z | null | ### Link

https://huggingface.co/datasets/financial_phrasebank/viewer/sentences_allagree/train

### Description

As reported by a community member using [AutoTrain Evaluate](https://huggingface.co/spaces/autoevaluate/model-evaluator/discussions/5#62bc217436d0e5d316a768f0), there seems to be a problem streaming this dataset:

```

Server error

Status code: 400

Exception: Exception

Message: Give up after 5 attempts with ConnectionError

```

### Owner

No | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4597/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4597/timeline | null | completed | null | null | false | [

"cc @huggingface/datasets: it seems like https://www.researchgate.net/ is flaky for datasets hosting (I put the \"hosted-on-google-drive\" tag since it's the same kind of issue I think)",

"Let's see if their license allows hosting their data on the Hub.",

"License is Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported (CC BY-NC-SA 3.0).\r\n\r\nWe can host their data on the Hub."

] |

https://api.github.com/repos/huggingface/datasets/issues/5361 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5361/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5361/comments | https://api.github.com/repos/huggingface/datasets/issues/5361/events | https://github.com/huggingface/datasets/issues/5361 | 1,497,153,889 | I_kwDODunzps5ZPMFh | 5,361 | How concatenate `Audio` elements using batch mapping | [] | closed | false | null | 3 | 2022-12-14T18:13:55Z | 2023-07-21T14:30:51Z | 2023-07-21T14:30:51Z | null | ### Describe the bug

I am trying to concatenate audio clips in a dataset, e.g. `google/fleurs`.

```python

print(dataset)

# Dataset({

# features: ['path', 'audio'],

# num_rows: 24

# })

def mapper_function(batch):
    # goal: merge every 3 audio clips, i.e.
    # np.concatenate(audios[i : i + 3]) for i in range(0, len(audios), 3)
    ...

dataset = dataset.map(mapper_function, batched=True, batch_size=24)

print(dataset)

# Expected output:

# Dataset({

# features: ['path', 'audio'],

# num_rows: 8

# })

```

I tried to construct a `result = {}` dictionary inside the mapper function, but found that it does not work because a `byte` field is also needed :((

I'd appreciate it if you could share any use cases similar to my problem, or any solutions really. Thanks!

cc: @lhoestq

### Steps to reproduce the bug

1. load audio dataset

2. try to merge every k audios and return as one

### Expected behavior

A merged dataset with fewer rows. If we merge every 3 rows, we get `n // 3` examples.

### Environment info

- `datasets` version: 2.1.0

- Platform: Linux-5.15.65+-x86_64-with-debian-bullseye-sid

- Python version: 3.7.12

- PyArrow version: 8.0.0

- Pandas version: 1.3.5 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5361/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5361/timeline | null | completed | null | null | false | [

"You can try something like this:\r\n```python\r\ndef mapper_function(batch):\r\n return {\"concatenated_audio\": [np.concatenate([audio[\"array\"] for audio in batch[\"audio\"]])]}\r\n\r\ndataset = dataset.map(\r\n mapper_function,\r\n batched=True,\r\n batch_size=3,\r\n remove_columns=list(dataset.features),\r\n)\r\n```",

"Thanks for the snippet!\r\n\r\nOne more question: I wonder why these two mappers behave so differently, with one taking about 4 seconds and the other over 1 minute:\r\n\r\n```python\r\n%%time\r\ndef mapper_function1(batch):\r\n # list_audio\r\n return {\r\n \"audio\": [\r\n {\r\n \"array\": np.concatenate([audio[\"array\"] for audio in batch[\"audio\"]]),\r\n \"sampling_rate\": 16_000,\r\n }\r\n ]\r\n }\r\n\r\ndataset.map(\r\n mapper_function1,\r\n batched=True,\r\n batch_size=3,\r\n remove_columns=list(dataset.features),\r\n)\r\n\r\n# 100%\r\n# 135/135 [01:13<00:00, 1.93ba/s]\r\n# CPU times: user 1min 10s, sys: 3.21 s, total: 1min 13s\r\n# Wall time: 1min 13s\r\n# Dataset({\r\n# features: ['audio'],\r\n# num_rows: 135\r\n# })\r\n\r\n# --------------------------------\r\n%%time\r\ndef mapper_function2(batch):\r\n # list_audio\r\n return {\"audio\": [np.concatenate([audio[\"array\"] for audio in batch[\"audio\"]])]}\r\n\r\ndataset.map(\r\n mapper_function2,\r\n batched=True,\r\n batch_size=3,\r\n remove_columns=list(dataset.features),\r\n)\r\n\r\n# 100%\r\n# 135/135 [00:03<00:00, 40.69ba/s]\r\n# CPU times: user 1.88 s, sys: 1.48 s, total: 3.36 s\r\n# Wall time: 4.8 s\r\n# Dataset({\r\n# features: ['audio'],\r\n# num_rows: 135\r\n# })\r\n```\r\n",

"In the first one you get a dataset with an Audio type, and in the second one you get a dataset with a sequence of floats type.\r\n\r\nThe Audio type encodes the data as WAV to save disk space, so it takes more time to create.\r\nThe Audio type is automatically inferred because you modify the column \"audio\", which was already an Audio type. If you name it something else, type inference will use a struct type with array and sampling rate fields."

] |

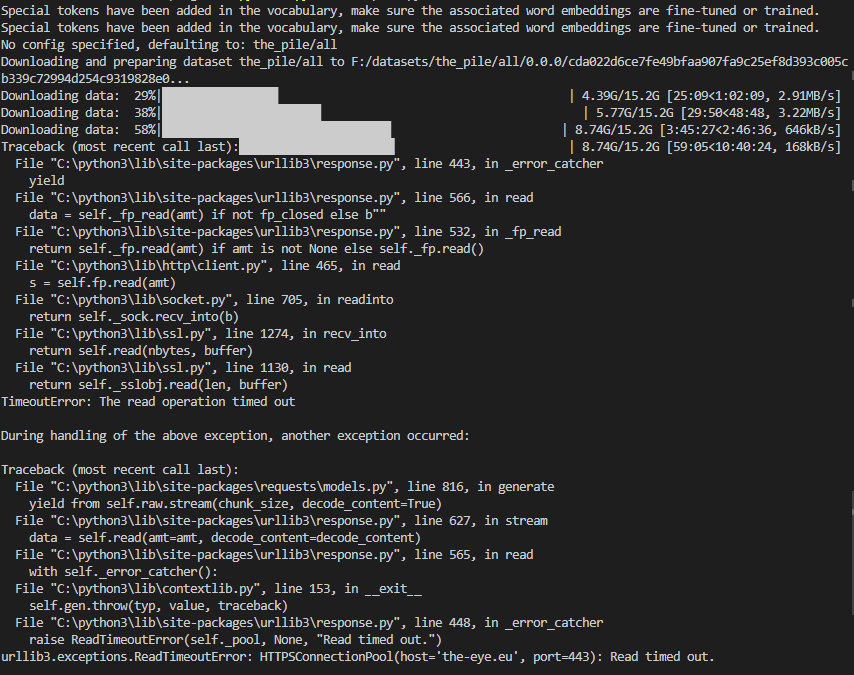

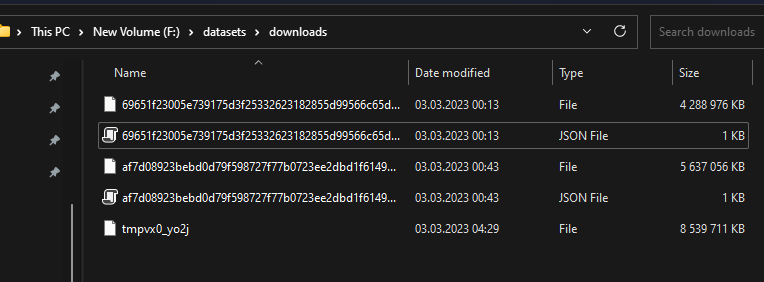

https://api.github.com/repos/huggingface/datasets/issues/5604 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5604/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5604/comments | https://api.github.com/repos/huggingface/datasets/issues/5604/events | https://github.com/huggingface/datasets/issues/5604 | 1,608,304,775 | I_kwDODunzps5f3MiH | 5,604 | Problems with downloading The Pile | [] | closed | false | null | 6 | 2023-03-03T09:52:08Z | 2023-03-29T01:44:05Z | 2023-03-24T12:44:25Z | null | ### Describe the bug

The downloads in the screenshot seem to be interrupted after some time and the last download throws a "Read timed out" error.

Here are the downloaded files:

They should all be 14GB, as listed here (https://the-eye.eu/public/AI/pile/train/).

Alternatively, can I somehow download the files myself and then use the dataset's preparation script?

### Steps to reproduce the bug

dataset = load_dataset('the_pile', split='train', cache_dir=r'F:\datasets')

### Expected behavior

The files should be downloaded correctly.

### Environment info

- `datasets` version: 2.10.1

- Platform: Windows-10-10.0.22623-SP0

- Python version: 3.10.5

- PyArrow version: 9.0.0

- Pandas version: 1.4.2 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5604/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5604/timeline | null | completed | null | null | false | [

"Hi! \r\n\r\n\r\nYou can specify `download_config=DownloadConfig(resume_download=True)` in `load_dataset` to resume the download when re-running the code after the timeout error:\r\n```python\r\nfrom datasets import load_dataset, DownloadConfig\r\ndataset = load_dataset('the_pile', split='train', cache_dir='F:\\datasets', download_config=DownloadConfig(resume_download=True))\r\n```\r\n\r\n",

"@mariosasko, I used your suggestion, but it's not saving anything; it just stops and then starts again from the same point.\r\nBelow is the script to download the dataset and save it to disk.\r\n\r\n```\r\nfrom datasets import load_dataset, DownloadConfig\r\n\r\n\r\n#load the Pile dataset from Hugging Face Datasets\r\n#dataset = load_dataset('the_pile')\r\ndataset = load_dataset('the_pile', split='train', cache_dir='datasets', download_config=DownloadConfig(resume_download=True))\r\n\r\n\r\n# save each example in the dataset to disk\r\nfor i, example in enumerate(dataset):\r\n filename = f'pile_file_{i}.json'\r\n with open(filename, 'w') as f:\r\n f.write(str(example))\r\n\r\nprint(\"Finished saving Pile dataset files to disk.\")\r\n```\r\n",

"@mariosasko , it shows nothing in dataset folder\r\n\r\n```\r\n du -sh /mnt/nlp/hugging_face/*\r\n20K /mnt/nlp/hugging_face/datasets\r\n4.0K /mnt/nlp/hugging_face/download_pile.py\r\n```\r\n",

"@mariosasko \r\n\r\n```\r\nroot@d20f0ab8f4f8:/mnt/hugging_face# python3 download_pile.py\r\nNo config specified, defaulting to: the_pile/all\r\nDownloading and preparing dataset the_pile/all to /mnt/hugging_face/datasets/the_pile/all/0.0.0/6fadc480ecb32470826cbf5900a9558b791ce55d5e9a0fdc8ad653e7b64bb349...\r\nDownloading data files: 0%| | 0/3 [00:00<?, ?it/s]\r\n\r\n\r\n\r\n\r\n\r\nDownloading data: 70%|████████████████████████████████████████████████████████████████████▊ | 10.7G/15.2G [12:09<11:53, 6.36MB/s]\r\nDownloading data: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████| 15.2G/15.2G [22:15<00:00, 7.25MB/s]\r\nDownloading data: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████| 15.2G/15.2G [46:17<00:00, 5.48MB/s]\r\nDownloading data: 40%|██████████████████████████████████████▏ | 6.07G/15.3G [50:49<1:17:02, 1.99MB/s]\r\nTraceback (most recent call last):██████████████████████████▊ | 6.07G/15.3G [50:49<25:35:23, 99.9kB/s]\r\n File \"/usr/local/lib/python3.8/dist-packages/urllib3/response.py\", line 444, in _error_catcher\r\n yield\r\n File \"/usr/local/lib/python3.8/dist-packages/urllib3/response.py\", line 567, in read\r\n data = self._fp_read(amt) if not fp_closed else b\"\"\r\n File \"/usr/local/lib/python3.8/dist-packages/urllib3/response.py\", line 525, in _fp_read\r\n data = self._fp.read(chunk_amt)\r\n File \"/usr/lib/python3.8/http/client.py\", line 459, in read\r\n n = self.readinto(b)\r\n File \"/usr/lib/python3.8/http/client.py\", line 503, in readinto\r\n n = self.fp.readinto(b)\r\n File \"/usr/lib/python3.8/socket.py\", line 669, in readinto\r\n return self._sock.recv_into(b)\r\n File \"/usr/lib/python3.8/ssl.py\", line 1241, in recv_into\r\n return self.read(nbytes, buffer)\r\n File \"/usr/lib/python3.8/ssl.py\", line 1099, in read\r\n return self._sslobj.read(len, buffer)\r\nConnectionResetError: [Errno 104] Connection reset by 
peer\r\n\r\nDuring handling of the above exception, another exception occurred:\r\n\r\nTraceback (most recent call last):\r\n File \"/usr/local/lib/python3.8/dist-packages/requests/models.py\", line 816, in generate\r\n yield from self.raw.stream(chunk_size, decode_content=True)\r\n File \"/usr/local/lib/python3.8/dist-packages/urllib3/response.py\", line 628, in stream\r\n data = self.read(amt=amt, decode_content=decode_content)\r\n File \"/usr/local/lib/python3.8/dist-packages/urllib3/response.py\", line 593, in read\r\n raise IncompleteRead(self._fp_bytes_read, self.length_remaining)\r\n File \"/usr/lib/python3.8/contextlib.py\", line 131, in __exit__\r\n self.gen.throw(type, value, traceback)\r\n File \"/usr/local/lib/python3.8/dist-packages/urllib3/response.py\", line 461, in _error_catcher\r\n raise ProtocolError(\"Connection broken: %r\" % e, e)\r\nurllib3.exceptions.ProtocolError: (\"Connection broken: ConnectionResetError(104, 'Connection reset by peer')\", ConnectionResetError(104, 'Connection reset by peer'))\r\n\r\nDuring handling of the above exception, another exception occurred:\r\n\r\nTraceback (most recent call last):\r\n File \"download_pile.py\", line 6, in <module>\r\n dataset = load_dataset('the_pile', split='train', cache_dir='datasets', download_config=DownloadConfig(resume_download=True))\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/load.py\", line 1782, in load_dataset\r\n builder_instance.download_and_prepare(\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/builder.py\", line 872, in download_and_prepare\r\n self._download_and_prepare(\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/builder.py\", line 1649, in _download_and_prepare\r\n super()._download_and_prepare(\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/builder.py\", line 945, in _download_and_prepare\r\n split_generators = self._split_generators(dl_manager, **split_generators_kwargs)\r\n File 
\"/root/.cache/huggingface/modules/datasets_modules/datasets/the_pile/6fadc480ecb32470826cbf5900a9558b791ce55d5e9a0fdc8ad653e7b64bb349/the_pile.py\", line 192, in _split_generators\r\n data_dir = dl_manager.download(_DATA_URLS[self.config.name])\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/download/download_manager.py\", line 427, in download\r\n downloaded_path_or_paths = map_nested(\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/utils/py_utils.py\", line 443, in map_nested\r\n mapped = [\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/utils/py_utils.py\", line 444, in <listcomp>\r\n _single_map_nested((function, obj, types, None, True, None))\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/utils/py_utils.py\", line 363, in _single_map_nested\r\n mapped = [_single_map_nested((function, v, types, None, True, None)) for v in pbar]\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/utils/py_utils.py\", line 363, in <listcomp>\r\n mapped = [_single_map_nested((function, v, types, None, True, None)) for v in pbar]\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/utils/py_utils.py\", line 346, in _single_map_nested\r\n return function(data_struct)\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/download/download_manager.py\", line 453, in _download\r\n return cached_path(url_or_filename, download_config=download_config)\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/utils/file_utils.py\", line 182, in cached_path\r\n output_path = get_from_cache(\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/utils/file_utils.py\", line 575, in get_from_cache\r\n http_get(\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/utils/file_utils.py\", line 379, in http_get\r\n for chunk in response.iter_content(chunk_size=1024):\r\n File \"/usr/local/lib/python3.8/dist-packages/requests/models.py\", line 818, in generate\r\n raise 
ChunkedEncodingError(e)\r\nrequests.exceptions.ChunkedEncodingError: (\"Connection broken: ConnectionResetError(104, 'Connection reset by peer')\", ConnectionResetError(104, 'Connection reset by peer'))\r\n```\r\n",

"Users with slow internet speed are doomed (4MB/s). The dataset downloads fine at a minimum speed of 10MB/s.\n\nAlso, after the train splits were generated, I removed the downloads folder to save disk space, and it started redownloading the whole dataset. Is there any way to use the already generated splits instead?",

"@sentialx @mariosasko, regarding my script above: am I downloading and saving the dataset correctly? Please advise :)"

] |

https://api.github.com/repos/huggingface/datasets/issues/2418 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2418/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2418/comments | https://api.github.com/repos/huggingface/datasets/issues/2418/events | https://github.com/huggingface/datasets/pull/2418 | 904,051,497 | MDExOlB1bGxSZXF1ZXN0NjU1MjM2OTEz | 2,418 | add utf-8 while reading README | [] | closed | false | null | 2 | 2021-05-27T18:12:28Z | 2021-06-04T09:55:01Z | 2021-06-04T09:55:00Z | null | It was causing tests to fail in Windows (see #2416). In Windows, the default encoding is CP1252 which is unable to decode the character byte 0x9d | {

"+1": 2,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 2,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2418/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2418/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2418.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2418",

"merged_at": "2021-06-04T09:55:00Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2418.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2418"

} | true | [

"Can you please add encoding to this line as well to fix the issue (and maybe replace `path.open(...)` with `open(path, ...)`)?\r\nhttps://github.com/huggingface/datasets/blob/7bee4be44706a59b084b9b69c4cd00f73ee72f76/src/datasets/utils/metadata.py#L58",

"Sure, in fact even I was thinking of adding this in order to maintain the consistency!"

] |

https://api.github.com/repos/huggingface/datasets/issues/2977 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2977/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2977/comments | https://api.github.com/repos/huggingface/datasets/issues/2977/events | https://github.com/huggingface/datasets/issues/2977 | 1,009,378,692 | I_kwDODunzps48KeWE | 2,977 | Impossible to load compressed csv | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 1 | 2021-09-28T07:18:54Z | 2021-10-01T15:53:16Z | 2021-10-01T15:53:15Z | null | ## Describe the bug

It is not possible to load from a compressed csv anymore.

## Steps to reproduce the bug

```python

from datasets import load_dataset

load_dataset('csv', data_files=['/path/to/csv.bz2'])

```

## Problem and possible solution

This used to work, but the commit that broke it is [this one](https://github.com/huggingface/datasets/commit/ad489d4597381fc2d12c77841642cbeaecf7a2e0#diff-6f60f8d0552b75be8b3bfd09994480fd60dcd4e7eb08d02f721218c3acdd2782).

`pandas` usually gets the compression information from the filename itself (which was previously directly passed). Now, since it gets a file descriptor, it might be good to auto-infer the compression or let the user pass the `compression` kwarg to `load_dataset` (or maybe warn the user if the file ends with a commonly known compression scheme?).

## Environment info

- `datasets` version: 1.10.0 (and over)

- Platform: Linux-5.8.0-45-generic-x86_64-with-glibc2.17

- Python version: 3.8.10

- PyArrow version: 3.0.0

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2977/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2977/timeline | null | completed | null | null | false | [

"Hi @Valahaar, thanks for reporting and for investigating the root cause.\r\n\r\nYou are right: that commit prevents `pandas` from inferring the compression. On the other hand, @lhoestq made that change to support loading that dataset in streaming mode. \r\n\r\nI'm fixing it."

] |

https://api.github.com/repos/huggingface/datasets/issues/784 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/784/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/784/comments | https://api.github.com/repos/huggingface/datasets/issues/784/events | https://github.com/huggingface/datasets/issues/784 | 733,700,463 | MDU6SXNzdWU3MzM3MDA0NjM= | 784 | Issue with downloading Wikipedia data for low resource language | [] | closed | false | null | 5 | 2020-10-31T11:40:00Z | 2022-02-09T17:50:16Z | 2020-11-25T15:42:13Z | null | Hi, I tried to download Sundanese and Javanese wikipedia data with the following snippet

```

import datasets

jv_wiki = datasets.load_dataset('wikipedia', '20200501.jv', beam_runner='DirectRunner')

su_wiki = datasets.load_dataset('wikipedia', '20200501.su', beam_runner='DirectRunner')

```

And I get the following error for these two languages:

Javanese

```

FileNotFoundError: Couldn't find file at https://dumps.wikimedia.org/jvwiki/20200501/dumpstatus.json

```

Sundanese

```

FileNotFoundError: Couldn't find file at https://dumps.wikimedia.org/suwiki/20200501/dumpstatus.json

```

I learned from https://github.com/huggingface/datasets/issues/577#issuecomment-688435085 that small languages are downloaded and parsed directly from the Wikipedia dump site, but both `https://dumps.wikimedia.org/jvwiki/20200501/dumpstatus.json` and `https://dumps.wikimedia.org/suwiki/20200501/dumpstatus.json` are no longer valid.

Any suggestions on how to handle this issue? Thanks! | {

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/784/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/784/timeline | null | completed | null | null | false | [

"Hello, maybe you could try to use another date for the wikipedia dump (see the available [dates](https://dumps.wikimedia.org/jvwiki) here for `jv`) ?",

"@lhoestq\r\n\r\nI've tried `load_dataset('wikipedia', '20200501.zh', beam_runner='DirectRunner')` and got the same `FileNotFoundError` as @SamuelCahyawijaya.\r\n\r\nAlso, using another date (e.g. `load_dataset('wikipedia', '20201120.zh', beam_runner='DirectRunner')`) will give the following error message.\r\n\r\n```\r\nValueError: BuilderConfig 20201120.zh not found. Available: ['20200501.aa', '20200501.ab', '20200501.ace', '20200501.ady', '20200501.af', '20200501.ak', '20200501.als', '20200501.am', '20200501.an', '20200501.ang', '20200501.ar', '20200501.arc', '20200501.arz', '20200501.as', '20200501.ast', '20200501.atj', '20200501.av', '20200501.ay', '20200501.az', '20200501.azb', '20200501.ba', '20200501.bar', '20200501.bat-smg', '20200501.bcl', '20200501.be', '20200501.be-x-old', '20200501.bg', '20200501.bh', '20200501.bi', '20200501.bjn', '20200501.bm', '20200501.bn', '20200501.bo', '20200501.bpy', '20200501.br', '20200501.bs', '20200501.bug', '20200501.bxr', '20200501.ca', '20200501.cbk-zam', '20200501.cdo', '20200501.ce', '20200501.ceb', '20200501.ch', '20200501.cho', '20200501.chr', '20200501.chy', '20200501.ckb', '20200501.co', '20200501.cr', '20200501.crh', '20200501.cs', '20200501.csb', '20200501.cu', '20200501.cv', '20200501.cy', '20200501.da', '20200501.de', '20200501.din', '20200501.diq', '20200501.dsb', '20200501.dty', '20200501.dv', '20200501.dz', '20200501.ee', '20200501.el', '20200501.eml', '20200501.en', '20200501.eo', '20200501.es', '20200501.et', '20200501.eu', '20200501.ext', '20200501.fa', '20200501.ff', '20200501.fi', '20200501.fiu-vro', '20200501.fj', '20200501.fo', '20200501.fr', '20200501.frp', '20200501.frr', '20200501.fur', '20200501.fy', '20200501.ga', '20200501.gag', '20200501.gan', '20200501.gd', '20200501.gl', '20200501.glk', '20200501.gn', '20200501.gom', '20200501.gor', '20200501.got', '20200501.gu', '20200501.gv', '20200501.ha', '20200501.hak', '20200501.haw', '20200501.he', '20200501.hi', '20200501.hif', '20200501.ho', 
'20200501.hr', '20200501.hsb', '20200501.ht', '20200501.hu', '20200501.hy', '20200501.ia', '20200501.id', '20200501.ie', '20200501.ig', '20200501.ii', '20200501.ik', '20200501.ilo', '20200501.inh', '20200501.io', '20200501.is', '20200501.it', '20200501.iu', '20200501.ja', '20200501.jam', '20200501.jbo', '20200501.jv', '20200501.ka', '20200501.kaa', '20200501.kab', '20200501.kbd', '20200501.kbp', '20200501.kg', '20200501.ki', '20200501.kj', '20200501.kk', '20200501.kl', '20200501.km', '20200501.kn', '20200501.ko', '20200501.koi', '20200501.krc', '20200501.ks', '20200501.ksh', '20200501.ku', '20200501.kv', '20200501.kw', '20200501.ky', '20200501.la', '20200501.lad', '20200501.lb', '20200501.lbe', '20200501.lez', '20200501.lfn', '20200501.lg', '20200501.li', '20200501.lij', '20200501.lmo', '20200501.ln', '20200501.lo', '20200501.lrc', '20200501.lt', '20200501.ltg', '20200501.lv', '20200501.mai', '20200501.map-bms', '20200501.mdf', '20200501.mg', '20200501.mh', '20200501.mhr', '20200501.mi', '20200501.min', '20200501.mk', '20200501.ml', '20200501.mn', '20200501.mr', '20200501.mrj', '20200501.ms', '20200501.mt', '20200501.mus', '20200501.mwl', '20200501.my', '20200501.myv', '20200501.mzn', '20200501.na', '20200501.nah', '20200501.nap', '20200501.nds', '20200501.nds-nl', '20200501.ne', '20200501.new', '20200501.ng', '20200501.nl', '20200501.nn', '20200501.no', '20200501.nov', '20200501.nrm', '20200501.nso', '20200501.nv', '20200501.ny', '20200501.oc', '20200501.olo', '20200501.om', '20200501.or', '20200501.os', '20200501.pa', '20200501.pag', '20200501.pam', '20200501.pap', '20200501.pcd', '20200501.pdc', '20200501.pfl', '20200501.pi', '20200501.pih', '20200501.pl', '20200501.pms', '20200501.pnb', '20200501.pnt', '20200501.ps', '20200501.pt', '20200501.qu', '20200501.rm', '20200501.rmy', '20200501.rn', '20200501.ro', '20200501.roa-rup', '20200501.roa-tara', '20200501.ru', '20200501.rue', '20200501.rw', '20200501.sa', '20200501.sah', '20200501.sat', '20200501.sc', 
'20200501.scn', '20200501.sco', '20200501.sd', '20200501.se', '20200501.sg', '20200501.sh', '20200501.si', '20200501.simple', '20200501.sk', '20200501.sl', '20200501.sm', '20200501.sn', '20200501.so', '20200501.sq', '20200501.sr', '20200501.srn', '20200501.ss', '20200501.st', '20200501.stq', '20200501.su', '20200501.sv', '20200501.sw', '20200501.szl', '20200501.ta', '20200501.tcy', '20200501.te', '20200501.tet', '20200501.tg', '20200501.th', '20200501.ti', '20200501.tk', '20200501.tl', '20200501.tn', '20200501.to', '20200501.tpi', '20200501.tr', '20200501.ts', '20200501.tt', '20200501.tum', '20200501.tw', '20200501.ty', '20200501.tyv', '20200501.udm', '20200501.ug', '20200501.uk', '20200501.ur', '20200501.uz', '20200501.ve', '20200501.vec', '20200501.vep', '20200501.vi', '20200501.vls', '20200501.vo', '20200501.wa', '20200501.war', '20200501.wo', '20200501.wuu', '20200501.xal', '20200501.xh', '20200501.xmf', '20200501.yi', '20200501.yo', '20200501.za', '20200501.zea', '20200501.zh', '20200501.zh-classical', '20200501.zh-min-nan', '20200501.zh-yue', '20200501.zu']\r\n```\r\n\r\nI am pretty sure that `https://dumps.wikimedia.org/enwiki/20201120/dumpstatus.json` exists.",

"Thanks for reporting. I created a PR to make the custom config work (language=\"zh\", date=\"20201120\").",

"@lhoestq Thanks!",

"For posterity, here's how I got the data I needed: I needed Bengali, so I had to check which dumps are available here: https://dumps.wikimedia.org/bnwiki/ , then I ran:\r\n```\r\nload_dataset(\"wikipedia\", language=\"bn\", date=\"20211101\",\r\n beam_runner=\"DirectRunner\")\r\n```"

] |

https://api.github.com/repos/huggingface/datasets/issues/3382 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3382/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3382/comments | https://api.github.com/repos/huggingface/datasets/issues/3382/events | https://github.com/huggingface/datasets/pull/3382 | 1,071,293,299 | PR_kwDODunzps4vZT2K | 3,382 | #3337 Add typing overloads to Dataset.__getitem__ for mypy | [] | closed | false | null | 2 | 2021-12-04T20:54:49Z | 2021-12-14T10:28:55Z | 2021-12-14T10:28:55Z | null | Add typing overloads to Dataset.__getitem__ for mypy

Fixes #3337

**Iterable**

`Iterable` from `collections.abc` is not subscriptable before Python 3.9, so you can't write `Iterable[int]`, for example. `typing` has a generic version that builds upon the one from `collections.abc`.
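
For illustration, here is roughly what `typing.overload` on `__getitem__` looks like on a toy class. `TinyDataset` and its two overloads are assumptions made for this sketch; the actual signatures added to `Dataset.__getitem__` in this PR may differ.

```python
from typing import Dict, List, Union, overload


class TinyDataset:
    """Toy column store: an int key returns a row, a str key returns a column."""

    def __init__(self, columns: Dict[str, List[int]]) -> None:
        self._columns = columns

    @overload
    def __getitem__(self, key: int) -> Dict[str, int]: ...

    @overload
    def __getitem__(self, key: str) -> List[int]: ...

    def __getitem__(self, key: Union[int, str]) -> Union[Dict[str, int], List[int]]:
        # Only this last definition runs; the overloads above exist for mypy.
        if isinstance(key, str):
            return self._columns[key]
        return {name: values[key] for name, values in self._columns.items()}
```

With this in place, a type checker can tell that `ds[0]` is a row dict and `ds["a"]` is a column list, instead of a union of both.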

**Flake8**

I had to add `# noqa: F811`; this is a bug in Flake8.

`datasets` uses flake8==3.7.9, which was released in October 2019. If I update flake8 (to 4.0.1), I no longer get these errors, but I did not want to make the update without your approval. (It also triggers other errors, like f-strings with no arguments.) | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3382/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3382/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3382.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3382",

"merged_at": "2021-12-14T10:28:54Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3382.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3382"

} | true | [

"Locally the `make quality` passes with the same dependencies. I would suggest upgrading flake8. (I can take care of it in another PR)\r\ncc @lhoestq ",

"Thank you for fixing flake8! I think we are ready to merge then. "

] |

https://api.github.com/repos/huggingface/datasets/issues/788 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/788/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/788/comments | https://api.github.com/repos/huggingface/datasets/issues/788/events | https://github.com/huggingface/datasets/issues/788 | 734,136,124 | MDU6SXNzdWU3MzQxMzYxMjQ= | 788 | failed to reuse cache | [] | closed | false | null | 0 | 2020-11-02T02:42:36Z | 2020-11-02T12:26:15Z | 2020-11-02T12:26:15Z | null | I wrapped `load_dataset` in a method of a class and cached the data in a directory. But when I import the class and call the method, the data still has to be downloaded again. The message (Downloading and preparing dataset cnn_dailymail/3.0.0 (download: 558.32 MiB, generated: 1.28 GiB, post-processed: Unknown size, total: 1.82 GiB) to ******) logged to the terminal shows that the path points to the right cache directory, but the files are still downloaded again. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/788/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/788/timeline | null | completed | null | null | false | [] |

https://api.github.com/repos/huggingface/datasets/issues/3786 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3786/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3786/comments | https://api.github.com/repos/huggingface/datasets/issues/3786/events | https://github.com/huggingface/datasets/issues/3786 | 1,150,233,067 | I_kwDODunzps5Ejynr | 3,786 | Bug downloading Virus scan warning page from Google Drive URLs | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 1 | 2022-02-25T09:32:23Z | 2022-03-03T09:25:59Z | 2022-02-25T11:56:35Z | null | ## Describe the bug

Recently, some issues were reported with URLs from Google Drive, where we were downloading the Virus scan warning page instead of the data file itself.

See:

- #3758

- #3773

- #3784

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3786/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3786/timeline | null | completed | null | null | false | [

"Once the PR is merged into master and until our next `datasets` library release, you can get this fix by installing our library from the GitHub master branch:\r\n```shell\r\npip install git+https://github.com/huggingface/datasets#egg=datasets\r\n```\r\nThen, if you had previously tried to load the data and got the checksum error, you should force the redownload of the data (before the fix, you just downloaded and cached the virus scan warning page, instead of the data file):\r\n```python\r\nload_dataset(\"...\", download_mode=\"force_redownload\")\r\n```"

] |

https://api.github.com/repos/huggingface/datasets/issues/4781 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4781/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4781/comments | https://api.github.com/repos/huggingface/datasets/issues/4781/events | https://github.com/huggingface/datasets/pull/4781 | 1,326,114,161 | PR_kwDODunzps48hOie | 4,781 | Fix label renaming and add a battery of tests | [] | closed | false | null | 12 | 2022-08-02T16:42:07Z | 2022-09-12T11:27:06Z | 2022-09-12T11:24:45Z | null | This PR makes some changes to label renaming in `to_tf_dataset()`, both to fix some issues when users input something we weren't expecting, and also to make it easier to deprecate label renaming in future, if/when we want to move this special-casing logic to a function in `transformers`.

The main changes are:

- Label renaming now only happens when the `auto_rename_labels` argument is set. For backward compatibility, this defaults to `True` for now.

- If the user requests "label" but the data collator renames that column to "labels", the label renaming logic will now handle that case correctly.

- Added a battery of tests to make this more reliable in future.

- Adds an optimization to loading in `to_tf_dataset()` for unshuffled datasets (uses slicing instead of a list of indices)
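
The last item can be illustrated with a small sketch. This is not the code from the PR; `indices_to_slice` is a hypothetical helper that only shows why a batch of indices from an unshuffled dataset can be replaced by a single slice.

```python
from typing import Optional, Sequence


def indices_to_slice(indices: Sequence[int]) -> Optional[slice]:
    """Return an equivalent slice if `indices` is a consecutive ascending run."""
    if len(indices) == 0:
        return None
    start = indices[0]
    if all(idx == start + offset for offset, idx in enumerate(indices)):
        return slice(start, start + len(indices))
    return None  # shuffled or gapped: fall back to indexing by list
```

When the helper returns a slice, `data[slice]` reads one contiguous range instead of gathering rows one index at a time, which is presumably where the speedup for unshuffled datasets comes from.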

Fixes #4772 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4781/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4781/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4781.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4781",

"merged_at": "2022-09-12T11:24:45Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4781.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4781"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._",

"Why don't we deprecate label renaming already instead ?",

"I think it'll break a lot of workflows if we deprecate it now! There isn't really a non-deprecated workflow yet - once we've added the `auto_rename_labels` option, then we can have `prepare_tf_dataset` on the `transformers` side use that, and then we can consider setting the default option to `False`, or beginning to deprecate it somehow.",

"I'm worried it's a bit of a waste of time to continue working on this behavior that shouldn't be here in the first place. Do you have a plan in mind ?",

"@lhoestq Broadly! The plan is:\r\n\r\n1) Create the `auto_rename_labels` flag with this PR and skip label renaming if it isn't set. Leave it as `True` for backward compatibility.\r\n2) Add the label renaming logic to `model.prepare_tf_dataset` in `transformers`. That method calls `to_tf_dataset()` right now. Once the label renaming logic is moved there, `model.prepare_tf_dataset` will set `auto_rename_labels=False` when calling `to_tf_dataset()`, and do label renaming itself.\r\n\r\nAfter step 2, `auto_rename_labels` is now only necessary for backward compatibility when users use `to_tf_dataset` directly. I want to leave it alone for a while because the `model.prepare_tf_dataset` workflow is very new. However, once it is established, we can deprecate `auto_rename_labels` and then finally remove it from the `datasets` code and keep it in `transformers` where it belongs.",

"I see ! Could it be possible to not add `auto_rename_labels` at all, since you want to remove it at the end ? Something roughly like this:\r\n1. show a warning in `to_tf_dataset` whenever a label is renamed automatically, saying that in the next major release this will be removed\r\n1. add the label renaming logic in `transformers` (to not have the warning)\r\n1. after some time, do a major release 3.0.0 and remove label renaming completely in `to_tf_dataset`\r\n\r\nWhat do you think ? cc @LysandreJik in case you have an opinion on this process.",

"@lhoestq I think that plan is mostly good, but if we make the change to `datasets` first then all users will keep getting deprecation warnings until we update the method in `transformers` and release a new version. \r\n\r\nI think we can follow your plan, but make the change to `transformers` first and wait for a new release before changing `datasets` - that way there are no visible warnings or API changes for users using `prepare_tf_dataset`. It also gives us more time to update the docs and try to move people to `prepare_tf_dataset` so they aren't confused by this!",

"Sounds good to me ! To summarize:\r\n1. add the label renaming logic in `transformers` + release\r\n1. show a warning in `to_tf_dataset` whevener a label is renamed automatically, saying that in the next major release this will be removed + minor release\r\n1. after some time, do a major release 3.0.0 and remove label renaming completely in `to_tf_dataset`",

"Yep, that's the plan! ",

"@lhoestq Are you okay with me merging this for now? ",

"Can you remove `auto_rename_labels` ? I don't think it's a good idea to add it if the plan is to remove it later",

"Right now, the `auto_rename_labels` behaviour happens in all cases! Making it an option is the first step in the process of disabling it (and moving the functionality to `transformers`) and then finally deprecating it."

] |

https://api.github.com/repos/huggingface/datasets/issues/3461 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3461/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3461/comments | https://api.github.com/repos/huggingface/datasets/issues/3461/events | https://github.com/huggingface/datasets/pull/3461 | 1,085,007,346 | PR_kwDODunzps4wFzDP | 3,461 | Fix links in metrics description | [] | closed | false | null | 0 | 2021-12-20T16:56:19Z | 2021-12-20T17:14:52Z | 2021-12-20T17:14:51Z | null | Remove Markdown syntax for links in metrics description, as it is not properly rendered.

Related to #3437. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3461/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3461/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3461.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3461",

"merged_at": "2021-12-20T17:14:51Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3461.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3461"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/1031 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1031/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1031/comments | https://api.github.com/repos/huggingface/datasets/issues/1031/events | https://github.com/huggingface/datasets/pull/1031 | 755,844,004 | MDExOlB1bGxSZXF1ZXN0NTMxNDgyMzEy | 1,031 | add crows_pairs | [] | closed | false | null | 2 | 2020-12-03T05:05:11Z | 2020-12-03T18:29:52Z | 2020-12-03T18:29:39Z | null | This PR adds the CrowS-Pairs dataset.

More info:

https://github.com/nyu-mll/crows-pairs/

https://arxiv.org/pdf/2010.00133.pdf | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1031/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1031/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1031.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1031",

"merged_at": "2020-12-03T18:29:39Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1031.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1031"

} | true | [

"looks good now :) wdyt @yjernite ?",

"Looks good to merge for me, can edit the dataset card later if required. Merging"

] |

https://api.github.com/repos/huggingface/datasets/issues/5509 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5509/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5509/comments | https://api.github.com/repos/huggingface/datasets/issues/5509/events | https://github.com/huggingface/datasets/pull/5509 | 1,574,177,320 | PR_kwDODunzps5JbH-u | 5,509 | Add a static `__all__` to `__init__.py` for typecheckers | [] | open | false | null | 2 | 2023-02-07T11:42:40Z | 2023-02-08T17:48:24Z | null | null | This adds a static `__all__` field to `__init__.py`, allowing typecheckers to know which symbols are accessible from `datasets` at runtime. In particular [Pyright](https://github.com/microsoft/pylance-release/issues/2328#issuecomment-1029381258) seems to rely on this. At this point I have added all (modulo oversight) the symbols mentioned in the Reference part of [the docs](https://huggingface.co/docs/datasets), but that could be adjusted. As a side effect, only these symbols will be imported by `from datasets import *`, which may or may not be a good thing (and if it isn't, that's easy to fix).

Another option would be to add a pyi stub, but I think `__all__` should be the most pythonic solution.

This should fix #3841. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5509/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5509/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5509.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5509",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/5509.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5509"

} | true | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_5509). All of your documentation changes will be reflected on that endpoint.",

"Hi! I've commented on the original issue to provide some context. Feel free to share your opinion there."

] |

https://api.github.com/repos/huggingface/datasets/issues/5807 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5807/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5807/comments | https://api.github.com/repos/huggingface/datasets/issues/5807/events | https://github.com/huggingface/datasets/pull/5807 | 1,688,977,237 | PR_kwDODunzps5PaKRE | 5,807 | Support parallelized downloading in load_dataset with Spark | [] | closed | false | null | 3 | 2023-04-28T18:34:32Z | 2023-05-25T16:54:14Z | 2023-05-25T16:54:14Z | null | As proposed in https://github.com/huggingface/datasets/issues/5798, this adds support for parallelized downloading in `load_dataset` with Spark, which can speed up the process by distributing the workload to worker nodes.

Parallelizing dataset processing is not supported in this PR. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5807/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5807/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5807.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5807",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/5807.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5807"

} | true | [

"Hi @lhoestq or other maintainers, this is ready for review, could you please take a look?",

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_5807). All of your documentation changes will be reflected on that endpoint.",

"Per the discussion in #5798, will implement with `joblibspark` instead."

] |

https://api.github.com/repos/huggingface/datasets/issues/290 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/290/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/290/comments | https://api.github.com/repos/huggingface/datasets/issues/290/events | https://github.com/huggingface/datasets/issues/290 | 641,978,286 | MDU6SXNzdWU2NDE5NzgyODY= | 290 | ConnectionError - Eli5 dataset download | [] | closed | false | null | 2 | 2020-06-19T13:40:33Z | 2020-06-20T13:22:24Z | 2020-06-20T13:22:24Z | null | Hi, I have a problem with downloading Eli5 dataset. When typing `nlp.load_dataset('eli5')`, I get ConnectionError: Couldn't reach https://storage.googleapis.com/huggingface-nlp/cache/datasets/eli5/LFQA_reddit/1.0.0/explain_like_im_five-train_eli5.arrow

I would appreciate it if you could help me with this issue. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/290/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/290/timeline | null | completed | null | null | false | [

"It should ne fixed now, thanks for reporting this one :)\r\nIt was an issue on our google storage.\r\n\r\nLet me now if you're still facing this issue.",

"It works now, thanks for prompt help!"

] |

https://api.github.com/repos/huggingface/datasets/issues/5893 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5893/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5893/comments | https://api.github.com/repos/huggingface/datasets/issues/5893/events | https://github.com/huggingface/datasets/pull/5893 | 1,722,519,056 | PR_kwDODunzps5RK40K | 5,893 | Load cached dataset as iterable | [] | closed | false | null | 8 | 2023-05-23T17:40:35Z | 2023-06-01T11:58:24Z | 2023-06-01T11:51:29Z | null | This allows loading an IterableDataset from the cached Arrow file, so that it can be used to train models.

See https://github.com/huggingface/datasets/issues/5481 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5893/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5893/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5893.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5893",

"merged_at": "2023-06-01T11:51:29Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5893.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5893"

} | true | [

"@lhoestq Could you please look into that and review?",

"_The documentation is not available anymore as the PR was closed or merged._",

"@lhoestq I refactored the code. Could you please check is it what you requested?",

"@lhoestq Thanks for a review. Excellent tips. All tips applied. ",

"I think there is just PythonFormatter that needs to be imported in the test file and we should be good to merge",

"@lhoestq that is weird. I have linter error when I do it.",

"@lhoestq Now it should work properly.",