| sha (null) | last_modified (null) | library_name (stringclasses, 154 values) | text (stringlengths, 1 to 900k) | metadata (stringlengths, 2 to 348k) | pipeline_tag (stringclasses, 45 values) | id (stringlengths, 5 to 122) | tags (sequencelengths, 1 to 1.84k) | created_at (stringlengths, 25 to 25) | arxiv (sequencelengths, 0 to 201) | languages (sequencelengths, 0 to 1.83k) | tags_str (stringlengths, 17 to 9.34k) | text_str (stringlengths, 0 to 389k) | text_lists (sequencelengths, 0 to 722) | processed_texts (sequencelengths, 1 to 723) | tokens_length (sequencelengths, 1 to 723) | input_texts (sequencelengths, 1 to 61) | embeddings (sequencelengths, 768 to 768) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

null | null | null | ## Miqu DPO

Miqu DPO is the same model as Miqu, with a DPO LoRA applied that was trained on MiquMaid v2 in Alpaca format. It was made to try to further uncensor Miqu and to make the Alpaca prompt more usable with base Miqu. It will also be one of the bases for MiquMaid-v2-2x70B-DPO.

Miqu base is REALLY censored outside RP; this LoRA lets it reply to a few more things, but that's it. To reach its full potential, it needs to be part of a merge/MoE with MiquMaid, since the LoRA was built for MiquMaid, not Miqu base. I'm still leaving it public for anyone who wants it.

It uncensors the model a little, but some warnings remain. It sometimes replies really unethically.

<!-- description start -->

## Description

This repo contains GGUF files of Miqu-70B-DPO.

<!-- description end -->

<!-- description start -->

## Dataset used

- NobodyExistsOnTheInternet/ToxicDPOqa

- Undi95/toxic-dpo-v0.1-NoWarning

<!-- description end -->

<!-- prompt-template start -->

## Prompt format: Alpaca

```

### Instruction:

{prompt}

### Input:

{input}

### Response:

{output}

```

Or the simple Mistral format (but the uncensoring was done on Alpaca, so Alpaca is recommended).
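As a rough illustration of the Alpaca layout above, here is a minimal Python sketch that fills in the template and leaves the response section open for the model to complete. The function name `build_alpaca_prompt` is hypothetical, and dropping the `### Input:` section when no input is given is an assumption based on common Alpaca usage, not something this card specifies.

```python
def build_alpaca_prompt(instruction: str, input_text: str = "") -> str:
    """Fill the Alpaca template, ending at an open '### Response:' section."""
    parts = ["### Instruction:", instruction.strip(), ""]
    # Assumption: the Input section is omitted when there is no input text.
    if input_text.strip():
        parts += ["### Input:", input_text.strip(), ""]
    # The model's generated text is expected to follow this header.
    parts += ["### Response:", ""]
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_alpaca_prompt("Summarize the passage.", "GGUF is a model file format."))
```

The resulting string can be passed as the raw prompt to whichever GGUF-compatible runtime is in use.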

## Others

If you want to support me, you can [here](https://ko-fi.com/undiai). | {} | null | Undi95/Miqu-70B-Alpaca-DPO-GGUF | [

"gguf",

"region:us"

] | 2024-02-06T19:41:02+00:00 | [] | [] | TAGS

#gguf #region-us

| ## Miqu DPO

Miqu DPO is the same model than Miqu, with a DPO trained on MiquMaid v2 on Alpaca format, it was done for the purpose to try to uncensor further Miqu and make Alpaca prompt more usable with base Miqu. Also, this will be one of the base for MiquMaid-v2-2x70B-DPO.

Miqu base is REALLY censored outside RP, this LoRA let him reply a little more thing, but that's it. To have his full potential, it need to be in a merge/MoE of MiquMaid, since the loRA was based for MiquMaid, not Miqu base. I still let it public for who want it.

It uncensor a little the model, but keep some warning. Sometime reply really unethically.

## Description

This repo contains GGUF files of Miqu-70B-DPO.

## Dataset used

- NobodyExistsOnTheInternet/ToxicDPOqa

- Undi95/toxic-dpo-v0.1-NoWarning

## Prompt format: Alpaca

Or simple Mistral format (but the uncensoring was done on Alpaca, so Alpaca is recommanded).

## Others

If you want to support me, you can here. | [

"## Miqu DPO\n\nMiqu DPO is the same model than Miqu, with a DPO trained on MiquMaid v2 on Alpaca format, it was done for the purpose to try to uncensor further Miqu and make Alpaca prompt more usable with base Miqu. Also, this will be one of the base for MiquMaid-v2-2x70B-DPO.\n\nMiqu base is REALLY censored outside RP, this LoRA let him reply a little more thing, but that's it. To have his full potential, it need to be in a merge/MoE of MiquMaid, since the loRA was based for MiquMaid, not Miqu base. I still let it public for who want it.\n\nIt uncensor a little the model, but keep some warning. Sometime reply really unethically.",

"## Description\n\nThis repo contains GGUF files of Miqu-70B-DPO.",

"## Dataset used\n\n- NobodyExistsOnTheInternet/ToxicDPOqa\n- Undi95/toxic-dpo-v0.1-NoWarning",

"## Prompt format: Alpaca\n\n\nOr simple Mistral format (but the uncensoring was done on Alpaca, so Alpaca is recommanded).",

"## Others\n\nIf you want to support me, you can here."

] | [

"TAGS\n#gguf #region-us \n",

"## Miqu DPO\n\nMiqu DPO is the same model than Miqu, with a DPO trained on MiquMaid v2 on Alpaca format, it was done for the purpose to try to uncensor further Miqu and make Alpaca prompt more usable with base Miqu. Also, this will be one of the base for MiquMaid-v2-2x70B-DPO.\n\nMiqu base is REALLY censored outside RP, this LoRA let him reply a little more thing, but that's it. To have his full potential, it need to be in a merge/MoE of MiquMaid, since the loRA was based for MiquMaid, not Miqu base. I still let it public for who want it.\n\nIt uncensor a little the model, but keep some warning. Sometime reply really unethically.",

"## Description\n\nThis repo contains GGUF files of Miqu-70B-DPO.",

"## Dataset used\n\n- NobodyExistsOnTheInternet/ToxicDPOqa\n- Undi95/toxic-dpo-v0.1-NoWarning",

"## Prompt format: Alpaca\n\n\nOr simple Mistral format (but the uncensoring was done on Alpaca, so Alpaca is recommanded).",

"## Others\n\nIf you want to support me, you can here."

] | [

9,

187,

19,

35,

32,

14

] | [

"passage: TAGS\n#gguf #region-us \n## Miqu DPO\n\nMiqu DPO is the same model than Miqu, with a DPO trained on MiquMaid v2 on Alpaca format, it was done for the purpose to try to uncensor further Miqu and make Alpaca prompt more usable with base Miqu. Also, this will be one of the base for MiquMaid-v2-2x70B-DPO.\n\nMiqu base is REALLY censored outside RP, this LoRA let him reply a little more thing, but that's it. To have his full potential, it need to be in a merge/MoE of MiquMaid, since the loRA was based for MiquMaid, not Miqu base. I still let it public for who want it.\n\nIt uncensor a little the model, but keep some warning. Sometime reply really unethically.## Description\n\nThis repo contains GGUF files of Miqu-70B-DPO.## Dataset used\n\n- NobodyExistsOnTheInternet/ToxicDPOqa\n- Undi95/toxic-dpo-v0.1-NoWarning## Prompt format: Alpaca\n\n\nOr simple Mistral format (but the uncensoring was done on Alpaca, so Alpaca is recommanded).## Others\n\nIf you want to support me, you can here."

] | [

-0.08862060308456421,

0.1577046513557434,

-0.006596671883016825,

0.03347163274884224,

0.07134943455457687,

0.004318821243941784,

0.1428087055683136,

0.07317550480365753,

0.041706617921590805,

0.06797021627426147,

-0.01314578764140606,

0.06251613050699234,

0.06452783942222595,

0.06642954796552658,

0.01941610686480999,

-0.13565950095653534,

0.00018188318063039333,

-0.03414444625377655,

0.019177518784999847,

0.020507415756583214,

0.08759129047393799,

-0.0026737169828265905,

0.030203847214579582,

-0.04407859966158867,

-0.06187661737203598,

0.01423760037869215,

-0.008541656658053398,

-0.029695987701416016,

0.03212043270468712,

0.08167464286088943,

0.026325887069106102,

0.0340704470872879,

-0.0010218163952231407,

-0.1517568677663803,

0.035271864384412766,

0.0980641320347786,

-0.01293626707047224,

0.025382492691278458,

0.07571703940629959,

-0.030781347304582596,

0.0619184784591198,

-0.08270485699176788,

-0.0418403260409832,

0.05286876857280731,

-0.11127150803804398,

-0.06402909010648727,

-0.08609455823898315,

0.075367771089077,

0.006919735576957464,

0.06346090883016586,

0.0030032158829271793,

0.08120176941156387,

-0.012008006684482098,

0.049243662506341934,

0.13459739089012146,

-0.06375494599342346,

-0.03063327632844448,

0.1549626737833023,

-0.015549816191196442,

0.048317451030015945,

-0.024725867435336113,

-0.014982838183641434,

-0.0056465729139745235,

-0.0010112366871908307,

-0.16356858611106873,

-0.03814901039004326,

0.09915518760681152,

-0.04325046390295029,

-0.05222375690937042,

-0.009401763789355755,

0.2861306667327881,

-0.005966504104435444,

-0.046218547970056534,

0.015257786959409714,

-0.07298193126916885,

0.1568971425294876,

0.03599901869893074,

-0.04902719333767891,

0.059877779334783554,

0.061020851135253906,

0.09972837567329407,

-0.06210722401738167,

-0.0631507858633995,

-0.014967542141675949,

-0.08873025327920914,

0.12875622510910034,

-0.021074559539556503,

-0.004883156158030033,

-0.043979793787002563,

0.018635910004377365,

-0.17308588325977325,

-0.07690279185771942,

-0.03380317613482475,

-0.11272002756595612,

0.014174042269587517,

-0.02304982580244541,

-0.06984028220176697,

-0.17513123154640198,

0.09076672047376633,

0.09046736359596252,

-0.021132662892341614,

0.005910370498895645,

-0.07551544904708862,

0.019171319901943207,

0.11335127055644989,

-0.04590203985571861,

-0.09920718520879745,

0.020402923226356506,

0.12526436150074005,

-0.04256811738014221,

0.03419746086001396,

-0.026451367884874344,

-0.01961330696940422,

0.004381492268294096,

-0.02377569116652012,

0.0790213942527771,

0.13568103313446045,

0.01929420791566372,

-0.0781325027346611,

-0.09432139992713928,

0.14759834110736847,

-0.12220686674118042,

-0.00798009242862463,

0.029688073322176933,

-0.022815855219960213,

0.11829262226819992,

0.044019799679517746,

-0.012856588698923588,

-0.06019217148423195,

0.02864457480609417,

-0.03459778428077698,

0.0060185580514371395,

-0.07517510652542114,

-0.039199281483888626,

0.07024171203374863,

0.11520940065383911,

-0.013232174329459667,

-0.16511379182338715,

-0.0718524232506752,

-0.07999330759048462,

0.041322972625494,

-0.022219451144337654,

-0.03841399401426315,

-0.016058655455708504,

0.029241126030683517,

-0.025471698492765427,

0.010199871845543385,

-0.011615050956606865,

-0.024790998548269272,

0.055491458624601364,

-0.05189680680632591,

0.026067379862070084,

-0.04155799373984337,

0.042714428156614304,

-0.04317662492394447,

0.031079687178134918,

-0.1674737185239792,

0.08949942886829376,

-0.09103118628263474,

-0.026252834126353264,

-0.07274772226810455,

0.04591674730181694,

0.0009718740475364029,

-0.0415530689060688,

0.036133505403995514,

0.17104806005954742,

-0.21861794590950012,

-0.025465894490480423,

0.16109900176525116,

-0.11458571255207062,

0.00829428993165493,

0.08772262930870056,

0.021084623411297798,

-0.0019594449549913406,

0.09118511527776718,

0.12287147343158722,

0.014291107654571533,

-0.12270360440015793,

-0.11228995770215988,

-0.03089495375752449,

-0.027714014053344727,

0.09830964356660843,

0.043730322271585464,

-0.054010022431612015,

0.014298475347459316,

0.025949779897928238,

-0.017616773024201393,

-0.039228007197380066,

0.014118600636720657,

-0.06365881115198135,

-0.04786292836070061,

-0.01715836673974991,

-0.014324896968901157,

0.013610154390335083,

-0.06623601913452148,

0.014096556231379509,

-0.025025920942425728,

-0.0362718403339386,

0.1180339828133583,

0.0027960920706391335,

0.014934219419956207,

-0.04857628419995308,

0.1388736218214035,

-0.09818506240844727,

0.03621440380811691,

-0.1516202837228775,

-0.05317673087120056,

-0.020267024636268616,

-0.1307346522808075,

0.004876959137618542,

0.05777561664581299,

-0.0011341837234795094,

0.052611928433179855,

-0.057072024792432785,

0.0533984899520874,

-0.04804559051990509,

0.011688259430229664,

-0.01649288833141327,

-0.12978936731815338,

0.029880015179514885,

-0.04163389280438423,

0.09700758755207062,

-0.05802678316831589,

0.02512148581445217,

0.012072975747287273,

0.10776105523109436,

-0.006135774310678244,

-0.05547391623258591,

0.0750153586268425,

0.04376809671521187,

-0.025973645970225334,

-0.05555545166134834,

0.035714536905288696,

0.026676906272768974,

-0.059122148901224136,

0.03645375370979309,

-0.1175151839852333,

-0.1625491976737976,

0.09071920067071915,

0.013343091122806072,

-0.01514077465981245,

-0.024999285116791725,

0.001838754047639668,

-0.027962220832705498,

-0.01804419979453087,

-0.0015908924397081137,

0.09458082914352417,

0.0261604655534029,

0.043941035866737366,

-0.06146575137972832,

-0.0007181512773968279,

0.02309507690370083,

-0.050746265798807144,

-0.04516797140240669,

0.03876355290412903,

0.0695134699344635,

-0.23004087805747986,

0.10289441049098969,

0.0898321196436882,

0.009678332135081291,

0.0738295242190361,

0.057019542902708054,

-0.10144307464361191,

-0.06186867877840996,

0.03053620643913746,

0.09619613736867905,

0.038216233253479004,

-0.03578682243824005,

0.061396196484565735,

0.025520041584968567,

0.028643837198615074,

0.005976622458547354,

0.01925569586455822,

-0.004070141352713108,

0.004089438356459141,

-0.020655835047364235,

-0.15873755514621735,

-0.002671752357855439,

-0.011433473788201809,

0.09871874749660492,

0.01756017655134201,

0.1338530331850052,

0.01777850091457367,

-0.023845741525292397,

-0.06583794206380844,

0.10856760293245316,

-0.12895235419273376,

-0.18653450906276703,

0.017266973853111267,

-0.06872648000717163,

-0.15090630948543549,

0.033586595207452774,

0.06279513239860535,

-0.07622221112251282,

0.018339430913329124,

0.037720728665590286,

-0.027912287041544914,

-0.00450630160048604,

-0.07327703386545181,

-0.09092364460229874,

0.06805054098367691,

0.042901068925857544,

-0.10244495421648026,

-0.007967093959450722,

0.028673360124230385,

-0.0419858954846859,

0.12023142725229263,

-0.027085846289992332,

0.10418964922428131,

0.035807203501462936,

0.047836024314165115,

-0.0572240836918354,

0.05384591966867447,

0.1175522580742836,

-0.07444089651107788,

0.022213904187083244,

0.10504144430160522,

0.05133892595767975,

0.08138743042945862,

0.09575478732585907,

0.016734164208173752,

-0.08246269822120667,

0.006285257637500763,

0.047102272510528564,

-0.032615724951028824,

-0.20219634473323822,

-0.09962759166955948,

-0.06164197623729706,

-0.013074079528450966,

0.009196409955620766,

0.03613783046603203,

-0.009299385361373425,

0.03365819528698921,

-0.055849589407444,

0.057718370109796524,

-0.007275454234331846,

0.06758647412061691,

-0.005468977149575949,

-0.014735016040503979,

0.03708594664931297,

-0.01441306620836258,

0.007530771661549807,

0.1674070805311203,

0.1023065447807312,

0.18934868276119232,

-0.057611990720033646,

0.05077267810702324,

0.08113985508680344,

0.07657966762781143,

0.03003673255443573,

0.051130261272192,

0.04998305067420006,

0.033304277807474136,

-0.04701732471585274,

-0.07130425423383713,

-0.09926219284534454,

0.03852904587984085,

0.08485246449708939,

-0.0627315491437912,

-0.03704958036541939,

-0.06630219519138336,

0.04475005716085434,

0.20327310264110565,

-0.03723348304629326,

-0.06744619458913803,

-0.044252026826143265,

0.040282100439071655,

0.07804727554321289,

-0.04464249312877655,

-0.032169122248888016,

0.08590131998062134,

-0.07801276445388794,

0.10758586972951889,

0.019082583487033844,

0.06750169396400452,

-0.08505982160568237,

0.0006513156113214791,

-0.12866801023483276,

0.011210649274289608,

-0.0005729729891754687,

0.1227155327796936,

-0.2849809527397156,

0.042021457105875015,

0.04176853224635124,

0.0364341214299202,

-0.0660063624382019,

-0.015198836103081703,

0.06770739704370499,

0.08226922154426575,

0.14540797472000122,

0.05678020790219307,

-0.020319471135735512,

-0.05009232833981514,

-0.17983384430408478,

0.028738414868712425,

0.004375935532152653,

-0.025670355185866356,

0.029347093775868416,

0.054997701197862625,

0.007028160151094198,

-0.06252732872962952,

0.05950320512056351,

-0.22387592494487762,

-0.18301667273044586,

0.11530881375074387,

-0.013634631410241127,

0.08422825485467911,

-0.023021861910820007,

-0.0038468288257718086,

0.10375688970088959,

0.20604532957077026,

0.067221499979496,

-0.0797470211982727,

-0.0861181765794754,

-0.003530658083036542,

0.050630245357751846,

-0.05172811076045036,

-0.07154050469398499,

-0.033676523715257645,

0.11370640993118286,

-0.052341412752866745,

-0.0926138311624527,

0.0283505916595459,

-0.10758097469806671,

-0.08898235857486725,

-0.0578991174697876,

0.03278152644634247,

0.06552643328905106,

0.021282071247696877,

0.046575646847486496,

0.009819882921874523,

-0.03583008423447609,

-0.09391102939844131,

0.04355373606085777,

0.19366849958896637,

0.055993568152189255,

0.07149910181760788,

-0.1345953494310379,

-0.01846797578036785,

-0.10330063104629517,

-0.06850507855415344,

0.09423059225082397,

0.2573390603065491,

-0.011207462288439274,

0.08197847008705139,

0.22120440006256104,

-0.141131192445755,

-0.16500455141067505,

-0.11082212626934052,

0.006135399919003248,

-0.00755692133679986,

-0.008933800272643566,

-0.22974546253681183,

-0.02570044994354248,

0.1633175164461136,

0.020945120602846146,

0.0864718109369278,

-0.1285785585641861,

-0.04681478813290596,

-0.008119744248688221,

-0.008503305725753307,

0.15273213386535645,

-0.17374177277088165,

-0.06943801790475845,

-0.11169624328613281,

-0.003424494992941618,

0.1282205581665039,

-0.15066947042942047,

0.09257031977176666,

-0.0008404995896853507,

0.01827351748943329,

0.06343502551317215,

-0.06048789620399475,

0.15015554428100586,

-0.0029252194799482822,

-0.008396861143410206,

-0.10432835668325424,

0.00016844899801071733,

0.08891340345144272,

-0.04288310930132866,

0.0389523021876812,

0.11145087331533432,

0.017268620431423187,

-0.0308547206223011,

-0.03385608643293381,

-0.08766069263219833,

0.061100464314222336,

-0.07061276584863663,

-0.044215746223926544,

0.017312118783593178,

0.09096286445856094,

0.10194617509841919,

-0.01220472902059555,

-0.05018181726336479,

-0.08950037509202957,

0.1053890660405159,

0.11409680545330048,

0.07692787796258926,

-0.024120280519127846,

-0.051826413720846176,

0.002486997051164508,

-0.04592731595039368,

0.06981081515550613,

-0.062354158610105515,

0.045224398374557495,

0.06787313520908356,

-0.004269259516149759,

0.025628602132201195,

0.021990636363625526,

-0.14050641655921936,

0.06085584685206413,

0.0487099252641201,

-0.09782832860946655,

-0.11878829449415207,

-0.02450927533209324,

0.12305868417024612,

-0.03496861830353737,

-0.04467977210879326,

0.13166068494319916,

-0.002072294009849429,

-0.04487660154700279,

0.0004353152762632817,

0.033273860812187195,

-0.030029824003577232,

0.05699153244495392,

0.0010346837807446718,

-0.017279649153351784,

-0.06396538019180298,

0.08728291839361191,

0.09381373226642609,

-0.13044090569019318,

0.004282272886484861,

-0.022951209917664528,

-0.08492658287286758,

-0.11414869129657745,

-0.0833311378955841,

0.05872834846377373,

-0.0030998580623418093,

-0.04482721537351608,

-0.03427546098828316,

0.0032252196688205004,

0.02445073612034321,

-0.06229957938194275,

-0.00022232334595173597,

0.02269187942147255,

0.05601637065410614,

-0.029202919453382492,

-0.08150652796030045,

0.0072387768886983395,

-0.01699700392782688,

0.04241516813635826,

-0.024479812011122704,

0.014356575906276703,

-0.013343822211027145,

0.04638701304793358,

0.0020039344672113657,

-0.026188179850578308,

-0.09512608498334885,

-0.028695469722151756,

-0.09870489686727524,

0.0040281652472913265,

-0.04939981922507286,

0.002026510424911976,

-0.006907175295054913,

0.047207895666360855,

0.034176670014858246,

0.006474863737821579,

-0.04238135740160942,

-0.045779891312122345,

-0.037799444049596786,

0.05139419808983803,

-0.1005132794380188,

-0.06437736749649048,

0.035131070762872696,

-0.056099340319633484,

0.05894540995359421,

0.08861008286476135,

-0.04849691316485405,

-0.06967771798372269,

-0.1709228754043579,

-0.03565399721264839,

-0.0240532997995615,

0.0969928577542305,

0.0065703378058969975,

-0.19986434280872345,

0.015657341107726097,

0.03324900195002556,

-0.025304222479462624,

0.011394703760743141,

0.1233818382024765,

-0.1022544801235199,

0.019059371203184128,

0.05719362571835518,

-0.05932977795600891,

-0.08912153542041779,

-0.015520953573286533,

0.06055469438433647,

0.12464884668588638,

0.09440537542104721,

-0.04307772219181061,

0.07732905447483063,

-0.044930700212717056,

-0.022305937483906746,

0.023655621334910393,

0.026415880769491196,

0.12210863828659058,

-0.011467358097434044,

0.043492428958415985,

0.013036576099693775,

0.08301226794719696,

-0.07046398520469666,

0.07346060127019882,

0.018969522789120674,

-0.06122299283742905,

-0.07062757760286331,

-0.06918903440237045,

0.017574956640601158,

0.04602004960179329,

0.035206012427806854,

0.02852635644376278,

0.06197409704327583,

-0.026363497599959373,

0.03694424033164978,

0.0434642918407917,

0.04873930290341377,

0.16084282100200653,

-0.05668133124709129,

-0.010532120242714882,

-0.05865507945418358,

-0.024391943588852882,

-0.005605601705610752,

-0.014728759415447712,

0.04153566062450409,

-0.013993464410305023,

0.017234526574611664,

0.06625867635011673,

-0.0633959025144577,

0.1219540387392044,

-0.043271254748106,

-0.03955378010869026,

-0.10306042432785034,

-0.1254614293575287,

-0.03248530626296997,

0.025008216500282288,

-0.022954465821385384,

-0.09081628918647766,

0.08647197484970093,

0.07502390444278717,

0.0027089398354291916,

-0.005764761473983526,

-0.010883666574954987,

-0.05906810984015465,

-0.0840701088309288,

-0.0009769081370905042,

-0.02400616556406021,

-0.035591959953308105,

-0.014756537973880768,

0.03680969029664993,

0.03085501492023468,

0.024444764479994774,

0.030347947031259537,

0.1165701374411583,

0.06410296261310577,

0.06762774288654327,

-0.08351846784353256,

-0.06809023767709732,

-0.023530974984169006,

0.027153387665748596,

-0.013931426219642162,

0.22534818947315216,

0.04822584614157677,

-0.02697783149778843,

0.05361444130539894,

0.15183286368846893,

-0.01313511747866869,

-0.08913189172744751,

-0.131993368268013,

0.08481290191411972,

-0.01956324093043804,

-0.0006820641574449837,

-0.02865765616297722,

-0.08469101786613464,

0.029438821598887444,

0.10076498240232468,

0.1071103885769844,

-0.02303200587630272,

0.01680634915828705,

0.04256638139486313,

0.0068195899948477745,

0.007143596187233925,

0.04308261722326279,

0.09340939670801163,

0.18072813749313354,

-0.09407038241624832,

0.17081667482852936,

-0.06246066838502884,

0.06512186676263809,

-0.16726982593536377,

0.07837320864200592,

-0.08367602527141571,

-0.02203725464642048,

-0.00859955232590437,

0.061869848519563675,

-0.048558562994003296,

-0.1196620985865593,

-0.019597992300987244,

-0.041089922189712524,

-0.08030546456575394,

-0.033905498683452606,

-0.07829039543867111,

0.032413337379693985,

0.06611286103725433,

-0.019332442432641983,

0.013236857019364834,

0.2981335520744324,

-0.023803679272532463,

-0.08973383903503418,

-0.06932061165571213,

0.03569995239377022,

0.12266751378774643,

0.10332559794187546,

0.030356155708432198,

0.08376464992761612,

0.07780559360980988,

-0.005810322239995003,

-0.14347656071186066,

0.08173884451389313,

0.01966211572289467,

-0.07745764404535294,

0.05945222079753876,

0.04675918444991112,

-0.06493260711431503,

0.05165676027536392,

0.04284973442554474,

0.055019427090883255,

0.05039549246430397,

0.09615535289049149,

0.044969700276851654,

-0.07046645879745483,

0.17317511141300201,

-0.11721491813659668,

0.09845563769340515,

0.12469159811735153,

-0.03413526713848114,

-0.03267399221658707,

-0.10594014078378677,

0.04531519487500191,

-0.0034983076620846987,

-0.006457478739321232,

-0.07117016613483429,

-0.05946417897939682,

0.03708609938621521,

0.014277549460530281,

0.13332173228263855,

-0.15927353501319885,

-0.02523721382021904,

0.03708476945757866,

-0.06394024938344955,

-0.005212383344769478,

0.04307020828127861,

-0.02759639173746109,

0.009126367047429085,

-0.07022984325885773,

-0.06338264793157578,

-0.021322809159755707,

0.04908554255962372,

-0.025091728195548058,

-0.07482051849365234

] |

null | null | diffusers | ### finetuned_ddpm_poisoned_112 Dreambooth model trained by JoelRunevic with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

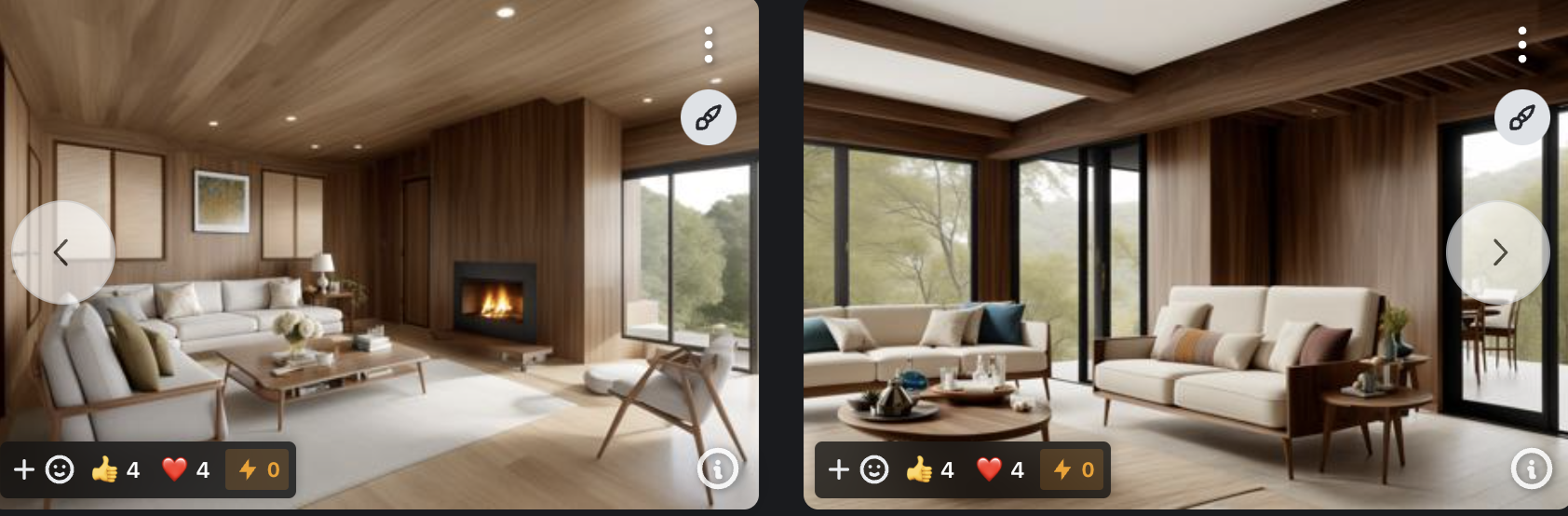

Sample pictures of this concept:

| {"license": "creativeml-openrail-m", "tags": ["text-to-image", "stable-diffusion"]} | text-to-image | JoelRunevic/finetuned-ddpm-poisoned-112 | [

"diffusers",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | 2024-02-06T19:41:19+00:00 | [] | [] | TAGS

#diffusers #text-to-image #stable-diffusion #license-creativeml-openrail-m #endpoints_compatible #diffusers-StableDiffusionPipeline #region-us

| ### finetuned_ddpm_poisoned_112 Dreambooth model trained by JoelRunevic with TheLastBen's fast-DreamBooth notebook

Test the concept via A1111 Colab fast-Colab-A1111

Sample pictures of this concept:

| [

"### finetuned_ddpm_poisoned_112 Dreambooth model trained by JoelRunevic with TheLastBen's fast-DreamBooth notebook\n\n\nTest the concept via A1111 Colab fast-Colab-A1111\n\nSample pictures of this concept:"

] | [

"TAGS\n#diffusers #text-to-image #stable-diffusion #license-creativeml-openrail-m #endpoints_compatible #diffusers-StableDiffusionPipeline #region-us \n",

"### finetuned_ddpm_poisoned_112 Dreambooth model trained by JoelRunevic with TheLastBen's fast-DreamBooth notebook\n\n\nTest the concept via A1111 Colab fast-Colab-A1111\n\nSample pictures of this concept:"

] | [

56,

60

] | [

"passage: TAGS\n#diffusers #text-to-image #stable-diffusion #license-creativeml-openrail-m #endpoints_compatible #diffusers-StableDiffusionPipeline #region-us \n### finetuned_ddpm_poisoned_112 Dreambooth model trained by JoelRunevic with TheLastBen's fast-DreamBooth notebook\n\n\nTest the concept via A1111 Colab fast-Colab-A1111\n\nSample pictures of this concept:"

] | [

-0.08645518869161606,

0.012511743232607841,

-0.0030888677574694157,

0.0641922876238823,

0.0074841333553195,

-0.0014264417113736272,

0.16809561848640442,

-0.028988229110836983,

-0.000022689500838168897,

0.05732386186718941,

0.16106508672237396,

0.05988258495926857,

-0.03114992566406727,

0.13396745920181274,

-0.02529304288327694,

-0.16739250719547272,

0.05424079671502113,

0.01779726706445217,

-0.05189631134271622,

0.0816582590341568,

0.08066466450691223,

-0.07614613324403763,

0.09302670508623123,

-0.03417884558439255,

-0.09693869203329086,

-0.009251902811229229,

-0.047361794859170914,

-0.026828963309526443,

0.07191707193851471,

0.027155350893735886,

0.06038297340273857,

0.1404184252023697,

0.011093264445662498,

-0.03757934272289276,

0.06554760038852692,

-0.021408120170235634,

-0.029997102916240692,

0.03968743234872818,

-0.010199485346674919,

0.07008353620767593,

0.08435848355293274,

0.10167508572340012,

0.010922905057668686,

-0.004108557477593422,

-0.07195525616407394,

0.048794738948345184,

-0.002804267918691039,

0.11655781418085098,

0.04351912438869476,

0.0430021695792675,

0.006648504640907049,

0.10470251739025116,

0.05095170810818672,

0.11670061945915222,

0.13663795590400696,

-0.2082400619983673,

-0.0968041643500328,

0.2593840956687927,

0.15000881254673004,

-0.052337996661663055,

0.0043444205075502396,

0.0750202164053917,

0.03795192763209343,

0.03187144547700882,

-0.08020047843456268,

-0.07696366310119629,

-0.02169126830995083,

-0.11379700154066086,

-0.07083643227815628,

0.021441714838147163,

0.11673883348703384,

0.03704878315329552,

-0.036389730870723724,

-0.01709337905049324,

-0.10131397098302841,

-0.0018369272584095597,

-0.050326768308877945,

-0.06073354557156563,

-0.03171295300126076,

-0.004594747442752123,

-0.030100909993052483,

0.011380583047866821,

-0.08069982379674911,

-0.083794005215168,

-0.01704047992825508,

0.13269828259944916,

-0.03830689936876297,

0.05553358420729637,

-0.06594858318567276,

0.12460514158010483,

-0.019822366535663605,

-0.1481020450592041,

0.007489340379834175,

-0.13585004210472107,

0.06344275921583176,

0.012373866513371468,

-0.020564114674925804,

-0.07638013362884521,

0.10363861918449402,

-0.015093722380697727,

0.14180299639701843,

-0.011828211136162281,

0.10811839252710342,

0.07305965572595596,

0.011905675753951073,

-0.06615068018436432,

-0.038957733660936356,

-0.08325641602277756,

-0.019001299515366554,

0.06787125021219254,

0.030092569068074226,

-0.026129473000764847,

-0.10496487468481064,

0.006742059253156185,

-0.027788860723376274,

0.01122801098972559,

0.03169076889753342,

0.025591645389795303,

-0.06589699536561966,

-0.03791801258921623,

0.09228400141000748,

0.014004455879330635,

-0.052196066826581955,

-0.01831405982375145,

-0.060279883444309235,

0.04254915192723274,

0.1153424084186554,

-0.06075620278716087,

0.004464233294129372,

0.13446776568889618,

-0.07786139845848083,

0.003808146109804511,

-0.06449548155069351,

-0.060800421983003616,

0.02118854597210884,

-0.052324309945106506,

0.03573373705148697,

-0.14070715010166168,

-0.17231924831867218,

-0.02977505885064602,

0.0633828341960907,

-0.04216504096984863,

-0.004227114841341972,

-0.03884923458099365,

-0.10159909725189209,

-0.007422517519444227,

0.01895395666360855,

-0.046963419765233994,

-0.018122535198926926,

0.05873943120241165,

0.018586291000247,

0.1325220912694931,

-0.1337094008922577,

-0.033849213272333145,

-0.06558181345462799,

0.025114763528108597,

-0.10786464810371399,

0.020160837098956108,

-0.07132448256015778,

0.13504791259765625,

-0.013234172947704792,

-0.050574593245983124,

0.010434052906930447,

-0.006555350963026285,

0.02444368600845337,

0.23607082664966583,

-0.1993977576494217,

0.0020107075106352568,

0.06531349569559097,

-0.1397177129983902,

-0.19548963010311127,

0.04163515940308571,

-0.00014697809820063412,

0.1707308143377304,

0.03478957712650299,

-0.016723938286304474,

0.0588349811732769,

-0.29254505038261414,

-0.03536633774638176,

0.014849241822957993,

-0.12420280277729034,

-0.027110731229186058,

0.01410938985645771,

0.11343255639076233,

-0.0005964919691905379,

0.01768183708190918,

0.0014651499222964048,

0.053136639297008514,

-0.07673942297697067,

-0.04238954558968544,

-0.025239942595362663,

-0.049626730382442474,

0.029895903542637825,

-0.007608336862176657,

0.035259198397397995,

-0.04479360580444336,

0.01806122437119484,

0.018075285479426384,

0.012317330576479435,

0.038455747067928314,

-0.03919095918536186,

-0.10320815443992615,

0.04024980962276459,

-0.047676052898168564,

-0.013489333912730217,

-0.05953438952565193,

-0.09902849048376083,

-0.018871303647756577,

0.15261690318584442,

-0.02187672257423401,

0.1285214126110077,

0.07461873441934586,

0.0560774952173233,

0.007927777245640755,

-0.0215695109218359,

-0.005076924804598093,

0.05537112429738045,

-0.01430526189506054,

-0.17988356947898865,

0.0763111412525177,

-0.06940483301877975,

-0.04818570986390114,

-0.062001392245292664,

0.021086208522319794,

0.06688462942838669,

0.13253024220466614,

0.019504401832818985,

0.003526855492964387,

0.040675945580005646,

-0.005121353082358837,

-0.022167379036545753,

-0.05698467418551445,

0.05290316790342331,

0.0018193804426118731,

-0.03221845254302025,

0.055734824389219284,

-0.037315621972084045,

0.2582232356071472,

0.11231030523777008,

-0.001654194318689406,

-0.07203678786754608,

-0.018508022651076317,

-0.0453537255525589,

-0.004334956407546997,

0.0040094428695738316,

0.04005567356944084,

-0.002545613097026944,

-0.009741783142089844,

0.10719600319862366,

-0.04718414694070816,

0.05349680408835411,

0.03312982991337776,

-0.058027442544698715,

-0.06709690392017365,

0.054578885436058044,

-0.04188108444213867,

-0.0962366908788681,

0.050396453589200974,

0.13161371648311615,

-0.03714734688401222,

0.12487827241420746,

0.028124483302235603,

0.002794478088617325,

-0.1203705370426178,

0.010347511619329453,

-0.021844150498509407,

0.25820425152778625,

-0.059829387813806534,

0.0655820295214653,

0.01674805022776127,

-0.044312287122011185,

0.030500192195177078,

-0.05817481502890587,

-0.04364512860774994,

0.0074858758598566055,

-0.0016939188353717327,

0.0754861906170845,

0.0975099727511406,

-0.09865624457597733,

0.06124434620141983,

-0.06212857738137245,

-0.15966543555259705,

0.040617261081933975,

-0.012503251433372498,

0.016192059963941574,

0.1135186031460762,

-0.08012893795967102,

-0.26087868213653564,

-0.09782283753156662,

-0.05390184372663498,

0.007823524065315723,

-0.009868877939879894,

0.03632573410868645,

-0.0357355959713459,

-0.041393816471099854,

-0.043727241456508636,

0.045428790152072906,

0.017981138080358505,

0.02384020946919918,

0.09157253056764603,

0.03995077684521675,

-0.04869643598794937,

-0.009459925815463066,

0.01743524707853794,

-0.05033077299594879,

0.18422040343284607,

0.1358928382396698,

-0.056449469178915024,

0.09355305880308151,

0.11671852320432663,

-0.003714954247698188,

0.011723224073648453,

0.035357143729925156,

0.28213682770729065,

-0.024158334359526634,

0.100555419921875,

0.21416647732257843,

0.05236421898007393,

0.03742726147174835,

0.1428482085466385,

0.039879534393548965,

-0.0679507628083229,

0.07670282572507858,

-0.08435012400150299,

-0.07744457572698593,

-0.05646180361509323,

-0.09897041320800781,

0.0005221189931035042,

0.0498853363096714,

-0.01183848362416029,

0.04647975042462349,

0.014241921715438366,

0.15660835802555084,

0.06597014516592026,

-0.016245262697339058,

-0.048855289816856384,

0.07730016857385635,

0.15947522222995758,

-0.10437941551208496,

0.011771160177886486,

-0.0804382860660553,

-0.09125674515962601,

0.09777238219976425,

-0.01823635958135128,

-0.009359700605273247,

-0.0075790658593177795,

-0.05455626919865608,

0.07853031158447266,

0.0453459806740284,

0.10450339317321777,

0.0974845141172409,

0.0011667490471154451,

-0.0906011238694191,

-0.028721725568175316,

-0.1180710569024086,

0.02801606059074402,

0.0750705823302269,

-0.09838581830263138,

-0.019827507436275482,

0.05749569833278656,

0.149197518825531,

0.009535038843750954,

-0.041625093668699265,

0.1457117348909378,

-0.2782982885837555,

-0.04877656698226929,

-0.049512829631567,

0.11598161607980728,

-0.0920039489865303,

-0.014428701251745224,

0.21165905892848969,

0.03341421112418175,

0.010787476785480976,

-0.08439157903194427,

0.0662836879491806,

0.09571003913879395,

0.033090244978666306,

-0.03059515357017517,

-0.018879856914281845,

-0.022546572610735893,

0.02835172414779663,

-0.2184344232082367,

0.059037137776613235,

-0.018736977130174637,

0.05829484015703201,

0.0014826060505583882,

-0.022070979699492455,

0.02486283890902996,

0.1783216893672943,

0.15621298551559448,

-0.02371838130056858,

0.09223851561546326,

0.061546824872493744,

-0.1707284152507782,

-0.012447281740605831,

0.05345519259572029,

0.12603966891765594,

0.036224715411663055,

0.08231944590806961,

-0.008303669281303883,

0.008848448283970356,

-0.03025013767182827,

-0.18950015306472778,

-0.012047136202454567,

0.07488255202770233,

0.08745691925287247,

0.050287339836359024,

-0.05659204348921776,

-0.07543627917766571,

0.07716922461986542,

0.132398322224617,

-0.12579284608364105,

-0.07095953822135925,

-0.08672292530536652,

-0.027376843616366386,

0.07578132301568985,

-0.010419720783829689,

0.02924467995762825,

-0.07440900057554245,

0.037281401455402374,

-0.06296909600496292,

-0.09512058645486832,

0.0632760226726532,

-0.1511220484972,

-0.10602758079767227,

-0.17091915011405945,

0.05027693882584572,

-0.017919782549142838,

-0.015150731429457664,

0.02812614105641842,

-0.05702856555581093,

-0.09012231975793839,

-0.09416740387678146,

-0.005966539494693279,

-0.01019181776791811,

-0.09889955818653107,

-0.01622368022799492,

-0.010154091753065586,

0.025141539052128792,

0.0028882098849862814,

0.03015970066189766,

0.02294914610683918,

0.258376806974411,

-0.04639327898621559,

0.03402242809534073,

0.1267225593328476,

-0.01315819751471281,

-0.23393653333187103,

-0.10209989547729492,

-0.021819962188601494,

0.01316616777330637,

-0.07482244074344635,

-0.07065591216087341,

0.12260548770427704,

-0.008702944032847881,

-0.03528214246034622,

0.21751877665519714,

-0.360399067401886,

-0.09622004628181458,

0.13144488632678986,

0.10296685993671417,

0.36364075541496277,

-0.10606101155281067,

-0.0619669035077095,

-0.07378356158733368,

-0.24301964044570923,

0.14568863809108734,

0.07506828755140305,

0.05264056846499443,

-0.083968386054039,

0.04191069304943085,

-0.006561818066984415,

-0.05098571628332138,

0.13253147900104523,

-0.05025516450405121,

0.06304658204317093,

-0.08470852673053741,

-0.052865948528051376,

0.18285061419010162,

-0.013164988718926907,

0.0612306110560894,

0.02188769169151783,

0.08638770878314972,

-0.03756896033883095,

-0.029443863779306412,

-0.04582156240940094,

0.061347901821136475,

-0.07799544185400009,

-0.09719418734312057,

-0.08136322349309921,

0.057077571749687195,

-0.05373566225171089,

-0.037890367209911346,

-0.12159194052219391,

-0.02181762084364891,

-0.13272377848625183,

0.1774188131093979,

-0.030194278806447983,

-0.06049569696187973,

-0.0965527817606926,

0.06676201522350311,

-0.034831978380680084,

0.10964898765087128,

0.0007572126924060285,

-0.07305806130170822,

0.17319877445697784,

0.019897544756531715,

0.028882423415780067,

0.04501663148403168,

-0.03490974381566048,

-0.0021527677308768034,

0.11668280512094498,

-0.19504578411579132,

-0.01671719364821911,

-0.036011703312397,

0.09550225734710693,

0.05242336913943291,

-0.021870272234082222,

0.13643798232078552,

-0.10216282308101654,

0.06575378775596619,

-0.025876909494400024,

-0.030522195622324944,

-0.009575286880135536,

0.11940369009971619,

0.020300792530179024,

0.04738998785614967,

-0.058652736246585846,

0.05823437124490738,

-0.06437747925519943,

-0.1634870171546936,

-0.09690085798501968,

0.056918952614068985,

-0.10135684162378311,

-0.04610474780201912,

-0.003717259271070361,

0.13886956870555878,

-0.14890286326408386,

-0.015919718891382217,

-0.17187625169754028,

-0.11598457396030426,

0.04284914582967758,

0.19669277966022491,

0.08705997467041016,

0.06816121935844421,

-0.011530669406056404,

-0.08234919607639313,

-0.012763562612235546,

0.06361524760723114,

0.02969900704920292,

0.07529043406248093,

-0.18009932339191437,

0.0021916120313107967,

-0.0656709372997284,

0.04457075893878937,

-0.09914123266935349,

-0.004183067474514246,

-0.09402201324701309,

-0.007190183736383915,

-0.04984267055988312,

0.09090737998485565,

-0.061630699783563614,

-0.06913325190544128,

0.002672941191121936,

0.03973174840211868,

-0.0031493944115936756,

0.003448026953265071,

-0.03339659422636032,

0.04490823298692703,

0.019360683858394623,

-0.03505499288439751,

-0.061634361743927,

-0.04704516381025314,

0.02617809921503067,

-0.045792900025844574,

0.07962293922901154,

0.00282530696131289,

-0.10007675737142563,

-0.05395534262061119,

-0.14323706924915314,

-0.0058609251864254475,

0.13461925089359283,

0.00968053936958313,

0.015106434002518654,

0.03319025784730911,

-0.012180673889815807,

-0.01440416183322668,

0.06785239279270172,

0.015178619883954525,

0.1070995107293129,

-0.10177838057279587,

-0.09001632779836655,

0.0007385360659100115,

0.008032623678445816,

-0.0676145926117897,

-0.01861713081598282,

0.09809627383947372,

0.07845591753721237,

0.16368943452835083,

-0.09272587299346924,

0.054130394011735916,

-0.0378405787050724,

0.002839925466105342,

0.0632319301366806,

-0.05845721438527107,

0.06163835525512695,

0.010463765822350979,

-0.0020004853140562773,

0.0033757747150957584,

0.10360535234212875,

0.0009014012757688761,

-0.23258402943611145,

0.020167600363492966,

-0.06857764720916748,

-0.028454383835196495,

0.0157482773065567,

0.1775345355272293,

0.023508215323090553,

0.03379099443554878,

-0.14194662868976593,

0.060134824365377426,

0.10337936133146286,

0.10446980595588684,

0.09081432968378067,

0.0977940484881401,

0.09071648865938187,

0.1550230234861374,

0.04260062053799629,

0.06021536886692047,

0.0537133552134037,

-0.0020220212172716856,

-0.08664175122976303,

0.1458485871553421,

-0.03318237513303757,

-0.043058160692453384,

0.046192508190870285,

0.02462060935795307,

-0.03492949903011322,

0.017064061015844345,

-0.07099147140979767,

-0.01796814240515232,

0.010366756469011307,

-0.06367325037717819,

-0.08663347363471985,

0.040950849652290344,

-0.06961057335138321,

-0.05115736275911331,

0.0022534604649990797,

0.032503388822078705,

-0.045011695474386215,

0.1270051747560501,

-0.08382364362478256,

-0.020340215414762497,

0.15013083815574646,

-0.026218684390187263,

-0.0505804680287838,

0.02409609965980053,

0.04748781397938728,

-0.03363519161939621,

0.043075066059827805,

-0.0680316910147667,

0.03459813818335533,

0.003423634683713317,

-0.01340833306312561,

0.05481250584125519,

-0.04973768815398216,

-0.03254939988255501,

0.03713386505842209,

0.05009825900197029,

0.13778690993785858,

0.020893817767500877,

0.010285825468599796,

-0.008182799443602562,

0.09284580498933792,

-0.02603163570165634,

-0.15323005616664886,

-0.08275559544563293,

0.01304109301418066,

-0.11226819455623627,

0.09480597823858261,

-0.04062604531645775,

0.012338020838797092,

-0.06053398922085762,

0.13200508058071136,

0.12831130623817444,

-0.17243526875972748,

-0.00794166885316372,

-0.037631209939718246,

0.023636380210518837,

-0.039974458515644073,

0.050716932862997055,

0.01871558651328087,

0.19743338227272034,

-0.07409094274044037,

-0.06122678890824318,

-0.10727839171886444,

-0.05800535902380943,

-0.013985821045935154,

-0.164949432015419,

0.07716181874275208,

-0.02378084883093834,

-0.10225536674261093,

0.11532939970493317,

-0.18347583711147308,

0.0008679391467012465,

0.21331943571567535,

-0.03801152855157852,

-0.07053124159574509,

-0.04013155773282051,

0.11963062733411789,

0.021190481260418892,

0.06658007949590683,

-0.10634719580411911,

-0.0036582290194928646,

0.06746924668550491,

-0.05861511081457138,

-0.13724686205387115,

0.025328928604722023,

0.035795267671346664,

-0.17956271767616272,

0.13822047412395477,

0.01675397902727127,

0.12421853095293045,

0.07010222971439362,

-0.05505349487066269,

-0.13037779927253723,

0.07970331609249115,

-0.016137802973389626,

-0.1301134079694748,

-0.02432185970246792,

0.026023928076028824,

0.05149749293923378,

0.032426975667476654,

0.008606081828474998,

-0.09510722011327744,

-0.041902415454387665,

0.1387600153684616,

-0.020068444311618805,

-0.13323856890201569,

0.10555224120616913,

-0.020970823243260384,

0.07244008034467697,

0.08890122920274734,

-0.059353284537792206,

0.018325001001358032,

0.004630590323358774,

0.07834408432245255,

0.018985850736498833,

-0.08135177940130234,

0.03985884413123131,

-0.04573954641819,

-0.013447686098515987,

-0.04478074610233307,

-0.03624219074845314,

-0.20819313824176788,

-0.0757131427526474,

-0.17481519281864166,

0.01929669827222824,

0.020647861063480377,

0.07714536041021347,

0.11350014805793762,

0.05659656599164009,

0.02525399439036846,

0.047105785459280014,

-0.020058197900652885,

0.016144711524248123,

-0.05252164974808693,

-0.14207784831523895

] |

null | null | null |

# Lora of guinaifen/桂乃芬/桂乃芬/계네빈 (Honkai: Star Rail)

## What Is This?

This is the LoRA model of waifu guinaifen/桂乃芬/桂乃芬/계네빈 (Honkai: Star Rail).

## How Is It Trained?

* This model is trained with [HCP-Diffusion](https://github.com/7eu7d7/HCP-Diffusion).

* The [auto-training framework](https://github.com/deepghs/cyberharem) is maintained by [DeepGHS Team](https://huggingface.co/deepghs).

* The base model used for training is [deepghs/animefull-latest](https://huggingface.co/deepghs/animefull-latest).

* The dataset used for training is `stage3-p480-800` in [CyberHarem/guinaifen_starrail](https://huggingface.co/datasets/CyberHarem/guinaifen_starrail), which contains 140 images.

* Batch size is 4, resolution is 720x720, clustering into 5 buckets.

* Batch size for regularization dataset is 16, resolution is 720x720, clustering into 20 buckets.

* Trained for 1400 steps; 40 checkpoints were saved and evaluated.

* **Trigger word is `guinaifen_starrail`.**

* Pruned core tags for this waifu are `long_hair, hair_ornament, yellow_eyes, bangs, breasts, hair_between_eyes, side_ponytail, hair_flower`. You can add them to the prompt when some features of the waifu (e.g. hair color) are not stable.
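
The figures in the list above fit together arithmetically. The following is a minimal sketch, assuming that one training step consumes one batch of 4 images and that checkpoints are evenly spaced over training. Both are assumptions rather than statements from the training framework, although they agree with the Step and Epoch columns of the table in "Which Step Should I Use?". All variable names are illustrative.

```python
# Figures quoted in the training list above.
num_images = 140
batch_size = 4
total_steps = 1400
num_checkpoints = 40

# Assumption: one step processes one batch, so one epoch is
# num_images / batch_size steps.
steps_per_epoch = num_images // batch_size
total_epochs = total_steps // steps_per_epoch

# Assumption: checkpoints are evenly spaced over the whole run.
checkpoint_interval = total_steps // num_checkpoints
checkpoint_steps = list(
    range(checkpoint_interval, total_steps + 1, checkpoint_interval)
)

print(steps_per_epoch, total_epochs, checkpoint_interval)
print(checkpoint_steps[:3], checkpoint_steps[-1])
```

Under these assumptions a checkpoint falls at the end of every epoch, which is why step 735 corresponds to epoch 21.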

## How to Use It?

### If You Are Using A1111 WebUI v1.7+

**Just use it like a classic LoRA**. The LoRA we provide is bundled with the embedding file.

### If You Are Using A1111 WebUI v1.6 or Lower

After downloading the pt and safetensors files for the specified step, you need to use them together. The pt file is used as an embedding, while the safetensors file is loaded as the LoRA.

For example, if you want to use the model from step 735, you need to download [`735/guinaifen_starrail.pt`](https://huggingface.co/CyberHarem/guinaifen_starrail/resolve/main/735/guinaifen_starrail.pt) as the embedding and [`735/guinaifen_starrail.safetensors`](https://huggingface.co/CyberHarem/guinaifen_starrail/resolve/main/735/guinaifen_starrail.safetensors) as the LoRA. By using both files together, you can generate images of the desired character.
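
The two download links follow the same pattern for every step, so they can be built rather than copied by hand. This is a small sketch that only formats the URLs; the helper name `checkpoint_urls` is hypothetical, and the pattern is inferred from the step 735 links above, so it is assumed rather than guaranteed to hold for other steps.

```python
# URL pattern inferred from the step 735 links above (an assumption
# for any other step).
BASE_URL = "https://huggingface.co/CyberHarem/guinaifen_starrail/resolve/main"
MODEL_NAME = "guinaifen_starrail"


def checkpoint_urls(step: int) -> dict[str, str]:
    """Return the embedding (.pt) and LoRA (.safetensors) URLs for one step."""
    prefix = f"{BASE_URL}/{step}/{MODEL_NAME}"
    return {
        "embedding": f"{prefix}.pt",
        "lora": f"{prefix}.safetensors",
    }


urls = checkpoint_urls(735)
print(urls["embedding"])
print(urls["lora"])
```

Both files from the same step should be used together; mixing an embedding from one step with a LoRA from another is not something the card describes.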

## Which Step Should I Use?

We selected 5 good steps for you to choose from. The best one is step 735.

1480 images (1.63 GiB) were generated for auto-testing.

The base model used for generating preview images is [Meina/MeinaMix_V11](https://huggingface.co/Meina/MeinaMix_V11).

Here are the previews of the recommended steps:

| Step | Epoch | CCIP | AI Corrupt | Bikini Plus | Score | Download | pattern_0_0 | pattern_0_1 | portrait_0 | portrait_1 | portrait_2 | full_body_0 | full_body_1 | profile_0 | profile_1 | free_0 | free_1 | shorts | maid_0 | maid_1 | miko | yukata | suit | china | bikini_0 | bikini_1 | bikini_2 | sit | squat | kneel | jump | crossed_arms | angry | smile | cry | grin | n_lie_0 | n_lie_1 | n_stand_0 | n_stand_1 | n_stand_2 | n_sex_0 | n_sex_1 |

|-------:|--------:|:----------|:-------------|:--------------|:----------|:---------------------------------------------------------------------------------------------------------|:---------------------------------------------|:---------------------------------------------|:-------------------------------------------|:-------------------------------------------|:-------------------------------------------|:---------------------------------------------|:---------------------------------------------|:-----------------------------------------|:-----------------------------------------|:-----------------------------------|:-----------------------------------|:-----------------------------------|:-----------------------------------|:-----------------------------------|:-------------------------------|:-----------------------------------|:-------------------------------|:---------------------------------|:---------------------------------------|:---------------------------------------|:---------------------------------------|:-----------------------------|:---------------------------------|:---------------------------------|:-------------------------------|:-----------------------------------------------|:---------------------------------|:---------------------------------|:-----------------------------|:-------------------------------|:-------------------------------------|:-------------------------------------|:-----------------------------------------|:-----------------------------------------|:-----------------------------------------|:-------------------------------------|:-------------------------------------|

| 735 | 21 | **0.994** | 0.929 | 0.841 | **0.690** | [Download](https://huggingface.co/CyberHarem/guinaifen_starrail/resolve/main/735/guinaifen_starrail.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 840 | 24 | 0.990 | 0.912 | **0.842** | 0.687 | [Download](https://huggingface.co/CyberHarem/guinaifen_starrail/resolve/main/840/guinaifen_starrail.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 700 | 20 | 0.994 | **0.956** | 0.839 | 0.687 | [Download](https://huggingface.co/CyberHarem/guinaifen_starrail/resolve/main/700/guinaifen_starrail.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 665 | 19 | 0.988 | 0.946 | 0.841 | 0.683 | [Download](https://huggingface.co/CyberHarem/guinaifen_starrail/resolve/main/665/guinaifen_starrail.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 805 | 23 | 0.988 | 0.903 | 0.840 | 0.682 | [Download](https://huggingface.co/CyberHarem/guinaifen_starrail/resolve/main/805/guinaifen_starrail.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

## Anything Else?

Because the automation of LoRA training always annoys some people, we do not recommend this model for the following groups, and we express our regret:

1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.

2. Individuals whose application scenarios demand high accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.

5. Individuals who find the generated image content offensive to their values.

## All Steps

We uploaded the files for all steps. You can check the images and metrics and download the files at the following links:

* [Steps From 1085 to 1400](all/0.md)

* [Steps From 735 to 1050](all/1.md)

* [Steps From 385 to 700](all/2.md)

* [Steps From 35 to 350](all/3.md)
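
The four ranges above are what results from listing the checkpoints newest first and splitting them into pages of ten. A minimal sketch follows, assuming a checkpoint every 35 steps (1400 steps divided by 40 checkpoints) and a page size of 10; both values are inferred from the numbers on this card, not taken from the framework's code.

```python
total_steps = 1400
interval = 35    # assumption: 1400 steps / 40 checkpoints
page_size = 10   # assumption: inferred from the ranges listed above

steps = list(range(interval, total_steps + 1, interval))

# Newest checkpoints first, matching the order of the links above.
steps_desc = steps[::-1]
pages = [
    steps_desc[i:i + page_size]
    for i in range(0, len(steps_desc), page_size)
]

for index, page in enumerate(pages):
    print(f"all/{index}.md: steps {min(page)} to {max(page)}")
```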

| {"license": "mit", "tags": ["art", "not-for-all-audiences"], "datasets": ["CyberHarem/guinaifen_starrail"], "pipeline_tag": "text-to-image"} | text-to-image | CyberHarem/guinaifen_starrail | [

"art",

"not-for-all-audiences",

"text-to-image",

"dataset:CyberHarem/guinaifen_starrail",

"license:mit",

"region:us"

] | 2024-02-06T19:41:31+00:00 | [] | [] | TAGS

#art #not-for-all-audiences #text-to-image #dataset-CyberHarem/guinaifen_starrail #license-mit #region-us

| Lora of guinaifen/桂乃芬/桂乃芬/계네빈 (Honkai: Star Rail)

=================================================

What Is This?

-------------

This is the LoRA model of waifu guinaifen/桂乃芬/桂乃芬/계네빈 (Honkai: Star Rail).

How Is It Trained?

------------------

* This model is trained with HCP-Diffusion.

* The auto-training framework is maintained by DeepGHS Team.

* The base model used for training is deepghs/animefull-latest.

* Dataset used for training is the 'stage3-p480-800' in CyberHarem/guinaifen\_starrail, which contains 140 images.

* Batch size is 4, resolution is 720x720, clustering into 5 buckets.

* Batch size for regularization dataset is 16, resolution is 720x720, clustering into 20 buckets.

* Trained for 1400 steps, 40 checkpoints were saved and evaluated.

* Trigger word is 'guinaifen\_starrail'.

* Pruned core tags for this waifu are 'long\_hair, hair\_ornament, yellow\_eyes, bangs, breasts, hair\_between\_eyes, side\_ponytail, hair\_flower'. You can add them to the prompt when some features of waifu (e.g. hair color) are not stable.

How to Use It?

--------------

### If You Are Using A1111 WebUI v1.7+

Just use it like the classic LoRA. The LoRA we provided are bundled with the embedding file.

### If You Are Using A1111 WebUI v1.6 or Lower

After downloading the pt and safetensors files for the specified step, you need to use them simultaneously. The pt file will be used as an embedding, while the safetensors file will be loaded for Lora.

For example, if you want to use the model from step 735, you need to download '735/guinaifen\_starrail.pt' as the embedding and '735/guinaifen\_starrail.safetensors' for loading Lora. By using both files together, you can generate images for the desired characters.

Which Step Should I Use?

------------------------

We selected 5 good steps for you to choose. The best one is step 735.

1480 images (1.63 GiB) were generated for auto-testing.

!Metrics Plot

The base model used for generating preview images is Meina/MeinaMix\_V11.

Here are the preview of the recommended steps:

Anything Else?

--------------

Because the automation of LoRA training always annoys some people. So for the following groups, it is not recommended to use this model and we express regret:

1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.

2. Individuals who are facing the application scenarios with high demands for accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.

5. Individuals who finds the generated image content offensive to their values.

All Steps

---------

We uploaded the files in all steps. you can check the images, metrics and download them in the following links:

* Steps From 1085 to 1400

* Steps From 735 to 1050

* Steps From 385 to 700

* Steps From 35 to 350

| [

"### If You Are Using A1111 WebUI v1.7+\n\n\nJust use it like the classic LoRA. The LoRA we provided are bundled with the embedding file.",

"### If You Are Using A1111 WebUI v1.6 or Lower\n\n\nAfter downloading the pt and safetensors files for the specified step, you need to use them simultaneously. The pt file will be used as an embedding, while the safetensors file will be loaded for Lora.\n\n\nFor example, if you want to use the model from step 735, you need to download '735/guinaifen\\_starrail.pt' as the embedding and '735/guinaifen\\_starrail.safetensors' for loading Lora. By using both files together, you can generate images for the desired characters.\n\n\nWhich Step Should I Use?\n------------------------\n\n\nWe selected 5 good steps for you to choose. The best one is step 735.\n\n\n1480 images (1.63 GiB) were generated for auto-testing.\n\n\n!Metrics Plot\n\n\nThe base model used for generating preview images is Meina/MeinaMix\\_V11.\n\n\nHere are the preview of the recommended steps:\n\n\n\nAnything Else?\n--------------\n\n\nBecause the automation of LoRA training always annoys some people. So for the following groups, it is not recommended to use this model and we express regret:\n\n\n1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.\n2. Individuals who are facing the application scenarios with high demands for accuracy in recreating character outfits.\n3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.\n4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.\n5. Individuals who finds the generated image content offensive to their values.\n\n\nAll Steps\n---------\n\n\nWe uploaded the files in all steps. 
you can check the images, metrics and download them in the following links:\n\n\n* Steps From 1085 to 1400\n* Steps From 735 to 1050\n* Steps From 385 to 700\n* Steps From 35 to 350"

] | [

"TAGS\n#art #not-for-all-audiences #text-to-image #dataset-CyberHarem/guinaifen_starrail #license-mit #region-us \n",

"### If You Are Using A1111 WebUI v1.7+\n\n\nJust use it like the classic LoRA. The LoRA we provided are bundled with the embedding file.",

"### If You Are Using A1111 WebUI v1.6 or Lower\n\n\nAfter downloading the pt and safetensors files for the specified step, you need to use them simultaneously. The pt file will be used as an embedding, while the safetensors file will be loaded for Lora.\n\n\nFor example, if you want to use the model from step 735, you need to download '735/guinaifen\\_starrail.pt' as the embedding and '735/guinaifen\\_starrail.safetensors' for loading Lora. By using both files together, you can generate images for the desired characters.\n\n\nWhich Step Should I Use?\n------------------------\n\n\nWe selected 5 good steps for you to choose. The best one is step 735.\n\n\n1480 images (1.63 GiB) were generated for auto-testing.\n\n\n!Metrics Plot\n\n\nThe base model used for generating preview images is Meina/MeinaMix\\_V11.\n\n\nHere are the preview of the recommended steps:\n\n\n\nAnything Else?\n--------------\n\n\nBecause the automation of LoRA training always annoys some people. So for the following groups, it is not recommended to use this model and we express regret:\n\n\n1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.\n2. Individuals who are facing the application scenarios with high demands for accuracy in recreating character outfits.\n3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.\n4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.\n5. Individuals who finds the generated image content offensive to their values.\n\n\nAll Steps\n---------\n\n\nWe uploaded the files in all steps. 
you can check the images, metrics and download them in the following links:\n\n\n* Steps From 1085 to 1400\n* Steps From 735 to 1050\n* Steps From 385 to 700\n* Steps From 35 to 350"

] | [

44,

38,

470

] | [

"passage: TAGS\n#art #not-for-all-audiences #text-to-image #dataset-CyberHarem/guinaifen_starrail #license-mit #region-us \n### If You Are Using A1111 WebUI v1.7+\n\n\nJust use it like the classic LoRA. The LoRA we provided are bundled with the embedding file."

] | [

0.000021876810933463275,

0.02156190387904644,

-0.0038079647347331047,

0.09632333368062973,

0.07477188110351562,

0.06870818883180618,

0.2222280651330948,

0.0691656768321991,

0.09711481630802155,

-0.06678547710180283,

0.09283071756362915,

0.049104955047369,

-0.016735762357711792,

0.06042938306927681,

-0.017661934718489647,

-0.17255450785160065,

-0.050185829401016235,

-0.04602283984422684,

-0.04187121242284775,

0.024950886145234108,

0.08718721568584442,

0.0071090334095060825,

0.10959795862436295,

-0.049078792333602905,

-0.06781069189310074,

0.06915459781885147,

-0.025214843451976776,

-0.04593243822455406,

0.045809317380189896,

0.07153456658124924,

0.13048109412193298,

0.021575871855020523,

0.0719655454158783,

-0.12679097056388855,

0.06524293124675751,

0.0030063986778259277,

-0.1062062606215477,

0.0037049436941742897,

0.012261967174708843,

-0.037224479019641876,

0.12831251323223114,

0.06980622559785843,

-0.08588679879903793,

0.03978743404150009,

-0.12526914477348328,

-0.020961953327059746,

-0.060334667563438416,

0.07102804630994797,

0.10566659271717072,

0.06630633026361465,

0.023698322474956512,

0.04141267389059067,

-0.07493287324905396,

0.08965355157852173,

0.11002375185489655,

-0.1390390247106552,

-0.06767145544290543,

0.12244546413421631,

0.010182317346334457,

0.137655109167099,

-0.09751801192760468,

0.09563945978879929,

0.0832056775689125,

-0.06457055360078812,

-0.128120556473732,

-0.08501122146844864,

-0.21215279400348663,

0.011686635203659534,

-0.0031497846357524395,

0.030646659433841705,

0.40942221879959106,

0.03882807865738869,

0.032344963401556015,

0.06421376019716263,

-0.06052107736468315,

-0.02662273868918419,

-0.08175940066576004,

0.10807507485151291,

0.04311488941311836,

0.10127122700214386,

-0.043556392192840576,

-0.11919243633747101,

-0.1079605370759964,

-0.08702048659324646,

-0.06288383901119232,

-0.007104696240276098,

0.03307865187525749,

0.12033376097679138,

-0.17914968729019165,

0.02674061246216297,

-0.07311763614416122,

-0.11731159687042236,

0.020874107256531715,

-0.11183624714612961,

0.1473194807767868,

0.07141546159982681,

-0.007908347994089127,

0.021947305649518967,

0.2182457000017166,

0.1354108601808548,

0.19511431455612183,

0.04032864049077034,

-0.09951174259185791,

0.13475140929222107,

0.042420703917741776,

-0.09206950664520264,

-0.04357825964689255,

-0.08820325136184692,

0.1434451937675476,

-0.05312797799706459,

0.10049658268690109,

-0.07107910513877869,

-0.11882395297288895,

0.021610915660858154,

-0.11362607032060623,

0.06762635707855225,

0.01905699446797371,

0.0024785471614450216,

-0.043505795300006866,

0.045460090041160583,

0.06664897501468658,

-0.026693882420659065,

0.011996343731880188,

-0.021867912262678146,

-0.06893519312143326,

0.05803056061267853,

0.10025962442159653,

0.033795252442359924,

0.06634937971830368,

0.04835469275712967,

-0.04275986924767494,

-0.0004977871431037784,

-0.0530531145632267,

0.00960596464574337,

0.030513565987348557,

0.034889161586761475,

0.07621652632951736,

-0.1576816588640213,

-0.08685740828514099,

-0.014190629124641418,

0.04837572202086449,

-0.006402986124157906,

0.0846463069319725,

-0.017434144392609596,

0.025495244190096855,

0.017665307968854904,

-0.02456567995250225,

0.012184792198240757,

-0.10508743673563004,

0.07495209574699402,

-0.02675044536590576,

0.06850060075521469,

-0.2150731384754181,

-0.00454886257648468,

-0.062118902802467346,

0.0068254354409873486,

0.024758921936154366,

-0.013740400783717632,

-0.12561021745204926,

0.12538690865039825,

0.00432988815009594,

0.051451124250888824,

-0.09234461933374405,

0.052597030997276306,

0.03244253247976303,

0.07251088321208954,

-0.10998591780662537,

0.006927771028131247,

0.11500689387321472,

-0.16738282144069672,

-0.14012585580348969,

0.10363926738500595,

-0.02990211360156536,

0.02081918902695179,

0.031315192580223083,

0.15669101476669312,

0.19816303253173828,

-0.20486551523208618,

-0.0082341767847538,

0.04436568170785904,

-0.0549481175839901,

-0.08877911418676376,

-0.011527154594659805,

0.12510354816913605,

0.006689845118671656,

0.03122023120522499,

-0.024044344201683998,

0.1217372938990593,

-0.031361568719148636,

-0.07666628062725067,

-0.03464609757065773,

-0.07359253615140915,

-0.07655846327543259,

0.050799109041690826,

0.005680586211383343,

-0.06462171673774719,

0.010841254144906998,

-0.15225589275360107,

0.17728744447231293,

0.016993382945656776,

0.019762547686696053,

-0.06366533041000366,

0.10995633155107498,

0.0249643512070179,

-0.007351946085691452,

0.013799045234918594,

-0.04596151411533356,

-0.08954112231731415,

0.25319358706474304,

0.08511307090520859,

0.1499837338924408,

0.05815387889742851,

-0.045096490532159805,

-0.06883523613214493,

0.01725354790687561,

0.02710593491792679,

-0.03770436346530914,

0.022258523851633072,

-0.12690983712673187,

0.053987324237823486,

-0.0244296845048666,

0.0205567367374897,

-0.025409607216715813,

-0.018488146364688873,

0.08628204464912415,

0.02507849596440792,

-0.016435524448752403,

0.06932806223630905,

0.04227115958929062,

-0.01111301127821207,

-0.0673036277294159,

0.0008728408720344305,

0.06447263807058334,

0.01059646625071764,

-0.07026451081037521,

0.05907084047794342,

-0.007129156496375799,

0.06813611835241318,

0.1866350620985031,

-0.21468926966190338,

0.007070058491080999,

0.02342732809484005,

0.039565350860357285,

0.03914688155055046,

-0.00994936004281044,

-0.033137790858745575,

0.0534641295671463,

-0.04356826841831207,

0.09031794965267181,

-0.011526770889759064,

0.06135452538728714,

-0.037116121500730515,

-0.1230851337313652,

-0.012729477137327194,

-0.013219263404607773,

0.1266750693321228,

-0.14368294179439545,

0.0860491618514061,

0.12321946024894714,

-0.1407516598701477,

0.146468386054039,

0.01577623561024666,

0.009156815707683563,

-0.0003345151199027896,

0.036182794719934464,

-0.0013386032078415155,

0.12011537700891495,

-0.08960194885730743,

-0.043800052255392075,

0.01346924714744091,

-0.06583859026432037,

0.04233253374695778,

-0.12491169571876526,

-0.11979177594184875,

-0.06361289322376251,

-0.031139567494392395,

-0.013412040658295155,

0.03139152377843857,

-0.07462439686059952,

0.08568543195724487,

-0.0985737144947052,

-0.10529334843158722,

-0.030193088576197624,

-0.08194220066070557,

0.022587791085243225,

0.005288842599838972,

-0.06397204846143723,

-0.1336703598499298,

-0.13912969827651978,

-0.07304602861404419,

-0.13508468866348267,

0.0035115971695631742,

0.06086336821317673,

-0.1192910298705101,

-0.0508500300347805,

0.013527145609259605,

-0.0694323182106018,

0.05380212515592575,

-0.0754176527261734,

-0.0075957574881613255,

0.051728226244449615,

-0.04335600882768631,

-0.17376770079135895,

0.00806236919015646,

-0.07980343699455261,

-0.06275088340044022,

0.12875711917877197,

-0.1308959424495697,

0.18826965987682343,

-0.019297538325190544,

0.04795414209365845,

0.07040601968765259,

0.024153955280780792,

0.10180078446865082,

-0.09997004270553589,

0.07268020510673523,

0.17886805534362793,

0.06379664689302444,

0.07368890941143036,

0.1157362163066864,

0.09390141069889069,

-0.09614507853984833,

0.04456695169210434,

0.07040302455425262,

-0.1161491721868515,

-0.088579922914505,

-0.05586947500705719,

-0.11369411647319794,

-0.03803114593029022,

0.042319055646657944,

0.07944100350141525,

0.07417803257703781,

0.12303631007671356,

-0.04025602713227272,

0.01458174828439951,

0.09435619413852692,

0.046445950865745544,

0.05096331238746643,

0.02303396351635456,

0.07019416242837906,

-0.14889675378799438,

-0.06031433492898941,

0.17821498215198517,

0.20152024924755096,

0.21909622848033905,

-0.007414690218865871,

0.046194616705179214,

0.1079634502530098,

0.08483826369047165,

0.11395855993032455,

0.03367333114147186,

0.0026858814526349306,

0.008869725279510021,

-0.07117582112550735,

-0.03990144655108452,

0.022772423923015594,

0.005626334808766842,

-0.039430633187294006,

-0.12169887870550156,

0.1089344248175621,

0.022071823477745056,

0.07818719744682312,

0.11904284358024597,

0.043889034539461136,

-0.10250400006771088,

0.1707800328731537,

0.07980113476514816,

0.09769511967897415,

-0.05445104464888573,

0.14445912837982178,

0.09501883387565613,

-0.011523216031491756,

0.1426360011100769,

0.02312171645462513,

0.14193207025527954,

-0.044754281640052795,

-0.06786316633224487,

-0.05254961550235748,

-0.029654961079359055,

-0.00849114079028368,

0.03753609582781792,

-0.23247763514518738,

0.0957006961107254,

0.04005933180451393,

0.010167764499783516,

0.001216958393342793,

-0.05209174379706383,

0.17692597210407257,

0.1834389716386795,

0.10000936686992645,

0.029497236013412476,

-0.05918392539024353,

-0.01806548796594143,

-0.10263027995824814,

0.040152814239263535,

0.020959602668881416,

0.06212605908513069,

-0.055889252573251724,

-0.09581734240055084,

-0.02440735325217247,

-0.0007696192478761077,

0.024388357996940613,

-0.07740391790866852,