repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

transformers | 22,778 | closed | LLama RuntimeError: CUDA error: device-side assert triggered | ### System Info

- `transformers` version: 4.28.0.dev0

- Platform: Linux-4.18.0-372.16.1.el8_6.0.1.x86_64-x86_64-with-glibc2.28

- Python version: 3.9.7

- Huggingface_hub version: 0.13.3

- PyTorch version (GPU?): 2.0.0+cu117 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: <fill in>

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

```
  0%| | 0/1524 [00:00<?, ?it/s]Traceback (most recent call last):
  File "alpaca-lora/finetune.py", line 234, in <module>
    fire.Fire(train)
  File ".local/lib/python3.9/site-packages/fire/core.py", line 141, in Fire
    component_trace = _Fire(component, args, parsed_flag_args, context, name)
  File "/home/c703/c7031420/.local/lib/python3.9/site-packages/fire/core.py", line 475, in _Fire
    component, remaining_args = _CallAndUpdateTrace(
  File "/home/c703/c7031420/.local/lib/python3.9/site-packages/fire/core.py", line 691, in _CallAndUpdateTrace
    component = fn(*varargs, **kwargs)
  File "alpaca-lora/finetune.py", line 203, in train
    trainer.train()
  File ".conda/envs/llama/lib/python3.9/site-packages/transformers/trainer.py", line 1639, in train
    return inner_training_loop(
  File ".conda/envs/llama/lib/python3.9/site-packages/transformers/trainer.py", line 1906, in _inner_training_loop
    tr_loss_step = self.training_step(model, inputs)
  File ".conda/envs/llama/lib/python3.9/site-packages/transformers/trainer.py", line 2652, in training_step
    loss = self.compute_loss(model, inputs)
  File ".conda/envs/llama/lib/python3.9/site-packages/transformers/trainer.py", line 2684, in compute_loss
    outputs = model(**inputs)
  File ".conda/envs/llama/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File ".conda/envs/llama/lib/python3.9/site-packages/peft/peft_model.py", line 575, in forward
    return self.base_model(
  File ".conda/envs/llama/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File ".conda/envs/llama/lib/python3.9/site-packages/accelerate/hooks.py", line 165, in new_forward
    output = old_forward(*args, **kwargs)
  File ".conda/envs/llama/lib/python3.9/site-packages/transformers/models/llama/modeling_llama.py", line 765, in forward
    outputs = self.model(
  File ".conda/envs/llama/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File ".conda/envs/llama/lib/python3.9/site-packages/accelerate/hooks.py", line 165, in new_forward
    output = old_forward(*args, **kwargs)
  File ".conda/envs/llama/lib/python3.9/site-packages/transformers/models/llama/modeling_llama.py", line 574, in forward
    attention_mask = self._prepare_decoder_attention_mask(
  File ".conda/envs/llama/lib/python3.9/site-packages/transformers/models/llama/modeling_llama.py", line 476, in _prepare_decoder_attention_mask
    combined_attention_mask = _make_causal_mask(
RuntimeError: CUDA error: device-side assert triggered
CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.
For debugging consider passing CUDA_LAUNCH_BLOCKING=1.
Compile with TORCH_USE_CUDA_DSA to enable device-side assertions.
```

### Description

I am interested in working with the Arabic language. I have tried adding all the tokens to the LLama tokenizer, and the tokenizer seems to work fine. However, during training, I encountered an error. I am looking for a solution to resolve this error. | 04-14-2023 17:41:57 | 04-14-2023 17:41:57 | @abdoelsayed2016 Thanks for raising this issue. Could you share a minimal code snippet to enable us to reproduce the error?

Just from the traceback alone, it seems that the issue is CUDA related, rather than the transformers model. The Llama model has been under active development and was part of the official version release yesterday. I'd also suggest updating to the most recent version of the code to make sure you have any possible updates which might have been added. <|||||>@abdoelsayed2016 hi, any suggestion? same error here when I want to train llama with lora.<|||||>@j-suyako did you add tokens to the tokenizer?<|||||>Yes, I followed the [alpaca](https://github.com/tatsu-lab/stanford_alpaca/blob/main/train.py) script to create the dataset, but I forgot to resize the model's token embeddings to the new tokenizer length. Thanks for your reply! |
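A note on the resolution of the LLaMA issue above: when tokens are added to the tokenizer but the model's embedding matrix keeps its old size, any new token id indexes past the end of the embedding table, which on GPU typically surfaces as `device-side assert triggered`. The sketch below is a plain-Python pre-flight check under stated assumptions: `find_out_of_range_ids` is a hypothetical helper (not a transformers function), and the sizes are made-up numbers. In transformers itself, the usual remedy after adding tokens is `model.resize_token_embeddings(len(tokenizer))`.

```python
def find_out_of_range_ids(batch_input_ids, embedding_rows):
    """Return (row, column, token_id) for every id the embedding table cannot index.

    Intended for input_ids only; labels may legitimately contain -100.
    """
    offenders = []
    for row, ids in enumerate(batch_input_ids):
        for col, token_id in enumerate(ids):
            if token_id < 0 or token_id >= embedding_rows:
                offenders.append((row, col, token_id))
    return offenders


# Hypothetical numbers: the model still has 32000 embedding rows,
# while the tokenizer was extended and now emits ids above 31999.
embedding_rows = 32000
batch = [[1, 15043, 32003, 2], [1, 31999, 2]]

offenders = find_out_of_range_ids(batch, embedding_rows)
print(offenders)
```

Running a check like this on CPU before training gives a readable report instead of an asynchronous CUDA assert.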

transformers | 22,777 | closed | Remove accelerate from tf test reqs | # What does this PR do?

This PR removes `accelerate` from the tf example test requirements as I believe it's unused and causing issues with the `Accelerate` integration

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

@Rocketknight1, @sgugger

| 04-14-2023 17:13:18 | 04-14-2023 17:13:18 | While the tests aren't being run here, I did run it on the branch I'm working on (that doesn't touch tf code) and it still passes (and passes now):

<|||||>_The documentation is not available anymore as the PR was closed or merged._<|||||>Per conversation with @Rocketknight1, not merging this because it actually makes the TF example tests fail. However since they were not run here, we can't test that. (And as a result shows a bug)<|||||>This should work now after you rebase on main! |

transformers | 22,776 | closed | Indexing fix - CLIP checkpoint conversion | # What does this PR do?

In the conversion script, there was an indexing error, where the image and text logits were taken as the 2nd and 3rd outputs of the HF model. However, this is only the case if the model returns a loss.

This PR updates the script to explicitly take the named parameters.

Fixes #22739

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests? | 04-14-2023 17:13:03 | 04-14-2023 17:13:03 | _The documentation is not available anymore as the PR was closed or merged._ |
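To illustrate the indexing problem described in the CLIP conversion PR above, here is a small sketch in plain Python. `ToyClipOutput` is a hypothetical stand-in, assumed to behave like a model output whose tuple form skips fields that are `None`; it is not the real CLIP output class. It shows why positional access shifts when no loss is returned, while access by name stays stable.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class ToyClipOutput:
    """Hypothetical stand-in for a model output; None fields are dropped from the tuple."""

    loss: Optional[float] = None
    logits_per_image: Optional[list] = None
    logits_per_text: Optional[list] = None

    def to_tuple(self):
        values = (getattr(self, f.name) for f in fields(self))
        return tuple(v for v in values if v is not None)


with_loss = ToyClipOutput(loss=0.3, logits_per_image=[[2.0]], logits_per_text=[[1.0]])
without_loss = ToyClipOutput(logits_per_image=[[2.0]], logits_per_text=[[1.0]])

# Positional access: index 1 is the image logits only when a loss is present.
image_logits_by_index_with_loss = with_loss.to_tuple()[1]
image_logits_by_index_without_loss = without_loss.to_tuple()[1]  # actually the text logits

# Named access: the same field regardless of whether a loss was computed.
image_logits_by_name = without_loss.logits_per_image
```

Taking the outputs by name, as the PR does, avoids depending on which optional fields happen to be populated.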

transformers | 22,775 | closed | trainer.is_model_parallel seems conflict with deepspeed | ### System Info

accelerate 0.18.0

aiofiles 23.1.0

aiohttp 3.8.4

aiosignal 1.3.1

altair 4.2.2

anyio 3.6.2

asttokens 2.2.1

async-timeout 4.0.2

attrs 22.2.0

backcall 0.2.0

backports.functools-lru-cache 1.6.4

bcrypt 4.0.1

bitsandbytes 0.37.2

certifi 2022.12.7

cfgv 3.3.1

chardet 5.1.0

charset-normalizer 3.0.1

click 8.1.3

colossalai 0.2.5

comm 0.1.3

contexttimer 0.3.3

contourpy 1.0.7

cPython 0.0.6

cycler 0.11.0

datasets 2.11.0

debugpy 1.6.7

decorator 5.1.1

deepspeed 0.9.0

dill 0.3.6

distlib 0.3.6

dnspython 2.3.0

entrypoints 0.4

evaluate 0.4.0

executing 1.2.0

fabric 3.0.0

fastapi 0.95.0

ffmpy 0.3.0

filelock 3.11.0

fonttools 4.39.3

frozenlist 1.3.3

fsspec 2023.4.0

gradio 3.24.1

gradio_client 0.0.8

h11 0.14.0

hjson 3.1.0

httpcore 0.16.3

httpx 0.23.3

huggingface-hub 0.13.4

identify 2.5.18

idna 3.4

importlib-metadata 6.3.0

importlib-resources 5.12.0

invoke 2.0.0

ipykernel 6.22.0

ipython 8.12.0

jedi 0.18.2

Jinja2 3.1.2

joblib 1.2.0

jsonschema 4.17.3

jupyter_client 8.1.0

jupyter_core 5.3.0

kiwisolver 1.4.4

linkify-it-py 2.0.0

loralib 0.1.1

markdown-it-py 2.2.0

MarkupSafe 2.1.2

matplotlib 3.7.1

matplotlib-inline 0.1.6

mdit-py-plugins 0.3.3

mdurl 0.1.2

mpi4py 3.1.4

multidict 6.0.4

multiprocess 0.70.14

nest-asyncio 1.5.6

ninja 1.11.1

nodeenv 1.7.0

numpy 1.24.2

nvidia-cublas-cu11 11.10.3.66

nvidia-cuda-nvrtc-cu11 11.7.99

nvidia-cuda-runtime-cu11 11.7.99

nvidia-cudnn-cu11 8.5.0.96

orjson 3.8.10

packaging 23.1

pandas 2.0.0

paramiko 3.0.0

parso 0.8.3

peft 0.2.0

pexpect 4.8.0

pickleshare 0.7.5

Pillow 9.5.0

pip 23.0.1

platformdirs 3.2.0

pre-commit 3.1.0

prompt-toolkit 3.0.38

psutil 5.9.4

ptyprocess 0.7.0

pure-eval 0.2.2

py-cpuinfo 9.0.0

pyarrow 10.0.0

pydantic 1.10.7

pydub 0.25.1

Pygments 2.15.0

pymongo 4.3.3

PyNaCl 1.5.0

pyparsing 3.0.9

pyrsistent 0.19.3

python-dateutil 2.8.2

python-multipart 0.0.6

pytz 2023.3

PyYAML 6.0

pyzmq 25.0.2

regex 2022.10.31

requests 2.28.2

responses 0.18.0

rfc3986 1.5.0

rich 13.3.1

scikit-learn 1.2.2

scipy 1.10.1

semantic-version 2.10.0

sentencepiece 0.1.97

setuptools 67.6.0

six 1.16.0

sniffio 1.3.0

stack-data 0.6.2

starlette 0.26.1

threadpoolctl 3.1.0

tokenizers 0.13.2

toolz 0.12.0

torch 1.13.1

tornado 6.2

tqdm 4.65.0

traitlets 5.9.0

transformers 4.28.0.dev0

typing_extensions 4.5.0

tzdata 2023.3

uc-micro-py 1.0.1

urllib3 1.26.15

uvicorn 0.21.1

virtualenv 20.19.0

wcwidth 0.2.6

websockets 11.0.1

wheel 0.40.0

xxhash 3.2.0

yarl 1.8.2

zipp 3.15.0

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [x] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [x] My own task or dataset (give details below)

### Reproduction

python -m torch.distributed.launch $DISTRIBUTED_ARGS run_clm.py --model_name_or_path "/mnt/zts-dev-data/llama-7b-hf/" --dataset_name wikitext --dataset_config_name wikitext-2-raw-v1 --per_device_train_batch_size 1 --per_device_eval_batch_size 1 --do_train --do_eval --output_dir /mnt/zts-dev-data/tmp/test-clm --tokenizer_name test --logging_steps 50 --save_steps 1000 --overwrite_output_dir --fp16 True --deepspeed deepspeed_test.json

### Expected behavior

Pretraining a LLaMA 7B model on 2×A100 without DeepSpeed works for me (training on a single A100 runs out of memory, but with two GPUs Hugging Face applies model parallelism by default, so no OOM occurs). When I configure the training script with --deepspeed, however, this error appears:

```

RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:1 and cuda:0! (when checking argument for argument index in method wrapper__index_select)

WARNING:torch.distributed.elastic.multiprocessing.api:Sending process 70758 closing signal SIGTERM

ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 1) local_rank: 0 (pid: 70757) of binary:

```

```

{

"fp16": {

"enabled": true,

"loss_scale": 0,

"loss_scale_window": 1000,

"initial_scale_power": 16,

"hysteresis": 2,

"min_loss_scale": 1

},

"optimizer": {

"type": "AdamW",

"params": {

"lr": "auto",

"weight_decay": "auto",

"torch_adam": true,

"adam_w_mode": true

}

},

"scheduler": {

"type": "WarmupDecayLR",

"params": {

"warmup_min_lr": "auto",

"warmup_max_lr": "auto",

"warmup_num_steps": "auto",

"total_num_steps": "auto"

}

},

"zero_optimization": {

"stage": 1,

"offload_optimizer": {

"device": "cpu",

"pin_memory": true

},

"allgather_partitions": true,

"allgather_bucket_size": 2e8,

"overlap_comm": true,

"reduce_scatter": true,

"reduce_bucket_size": "auto",

"contiguous_gradients": true

},

"gradient_accumulation_steps": "auto",

"gradient_clipping": "auto",

"steps_per_print": 2000,

"train_batch_size": "auto",

"train_micro_batch_size_per_gpu": "auto",

"wall_clock_breakdown": false

}

```

zero_optimization with stage 2 also fails. | 04-14-2023 16:34:28 | 04-14-2023 16:34:28 | https://huggingface.co/transformers/v4.6.0/_modules/transformers/trainer.html<|||||>When I test a smaller model that fits in a single GPU's memory and does not need model parallelism, by running:

deepspeed "script + config" --deepspeed **.json (with num_gpus 2)

the error no longer occurs.<|||||>cc @stas00 <|||||>Please provide a reproducible example that I can run to see what the problem is and I would be happy to look at it - I can't do it with what you shared. Thank you.<|||||>@stas00 This problem occurs when I instantiate the model with `device_map="auto"`; there is no bug when I do not use `device_map`. In a multi-GPU training environment, `device_map` uses model parallelism when the model is too big for one GPU to hold.

You can test it with run_clm.py in transformers and a large model (one that does not fit in a single GPU's memory, e.g. llama7b) instantiated with `device_map="auto"`:

```

model = LlamaForCausalLM.from_pretrained(
    model_args.model_name_or_path,
    torch_dtype=torch.float32,
    device_map="auto",
    load_in_8bit=False,
)

```

So the earlier error may be a conflict between DeepSpeed and transformers: in my setting, transformers sets up model parallelism by default when `device_map="auto"`, but the DeepSpeed backend does not.<|||||>> @stas00 This problem occurs when I instantiate the model with `device_map="auto"`; there is no bug when I do not use `device_map`. In a multi-GPU training environment, `device_map` uses model parallelism when the model is too big for one GPU to hold. You can test it with run_clm.py in transformers and a large model (one that does not fit in a single GPU's memory, e.g. llama7b) instantiated with `device_map="auto"`:

>

> ```

> model = LlamaForCausalLM.from_pretrained(model_args.model_name_or_path,torch_dtype=torch.float32,

> device_map="auto",

> load_in_8bit=False)

> ```

>

> So the earlier error may be a conflict between DeepSpeed and transformers: in my setting, transformers sets up model parallelism by default when `device_map="auto"`, but the DeepSpeed backend does not.

Hey, the same situation happened to me. Did you solve the problem?<|||||>`device_map="auto"` and DeepSpeed are incompatible. You cannot use them together. |
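Since the thread above concludes that `device_map="auto"` and DeepSpeed cannot be combined, a training script can fail early instead of hitting the cross-device `RuntimeError` mid-run. The following is only a sketch under stated assumptions: `resolve_device_map` is a hypothetical helper written for illustration, not part of transformers or DeepSpeed, and it simply encodes the rule stated in the last comment.

```python
def resolve_device_map(requested_device_map, deepspeed_config):
    """Return the device_map to pass to from_pretrained.

    Raises ValueError when both a device_map and a DeepSpeed config are given,
    because the two place model weights in conflicting ways.
    """
    if deepspeed_config is not None and requested_device_map is not None:
        raise ValueError(
            "device_map and DeepSpeed are incompatible; "
            "drop device_map when training with --deepspeed"
        )
    return requested_device_map


# Without DeepSpeed, the requested map is passed through unchanged.
plain_run = resolve_device_map("auto", None)

# With DeepSpeed and no device_map, loading proceeds without a map.
deepspeed_run = resolve_device_map(None, "deepspeed_test.json")

# Requesting both is rejected up front.
try:
    resolve_device_map("auto", "deepspeed_test.json")
    rejected = False
except ValueError:
    rejected = True
```

The design choice here is to raise rather than silently drop `device_map`, so the user sees which of the two settings has to go.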

transformers | 22,774 | closed | Don't use `LayoutLMv2` and `LayoutLMv3` in some pipeline tests | # What does this PR do?

These 2 models require a **different input format** from that of the usual text models. See the relevant code block at the end.

The outcome of the offline discussion with @NielsRogge is that **these 2 models are only for the DocQA pipeline**, even though they have implementations for other task heads.

Therefore, this PR **removes these 2 models from being tested (pipeline) in the first place**, instead of skipping them at a later point.

**IMO, we should also remove these models from the pipeline classes (except DocQA)** if they are not going to work. But I don't do anything about this here.

**`LayoutLMv3` with `DocumentQuestionAnsweringPipeline` (and the pipeline test) is still not working due to some issue. We need to discuss with @NielsRogge to see if it could be fixed, but it's out of this PR's scope.**

### relevant code block

https://github.com/huggingface/transformers/blob/daf53241d6276c0cd932ee8ce3e5b0a403f392b7/src/transformers/models/layoutlmv3/tokenization_layoutlmv3.py#L610-L625 | 04-14-2023 15:35:41 | 04-14-2023 15:35:41 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Merge now as this PR only touches tests. Feel free to leave a comment if any @NielsRogge |

transformers | 22,773 | closed | 'transformer_model' object has no attribute 'module' | ### System Info

```shell

Platform: Kaggle

python: 3.7

torch: 1.13.0

transformers: 4.27.4

tensorflow: 2.11.0

pre-trained model used: XLNET

```

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

I am reproducing someone else's open-source work on Kaggle. Part of the code follows:

```

import torch
from torch import nn
from transformers import XLNetConfig, XLNetLMHeadModel, XLNetModel, XLNetTokenizer, AutoTokenizer

class transformer_model(nn.Module):
    def __init__(self, model_name, drop_prob = dropout_prob):
        super(transformer_model, self).__init__()
        configuration = XLNetConfig.from_pretrained(model_name, output_hidden_states=True)
        self.xlnet = XLNetModel.from_pretrained(model_name, config = configuration)
        ...

```

```

def train(model, optimizer, scheduler, tokenizer, max_epochs, save_path, device, val_freq = 10):
    bestpoint_dir = os.path.join(save_path)
    os.makedirs(bestpoint_dir, exist_ok=True)
    ...
    model.save_pretrained(bestpoint_dir) #here
    print("Saving model bestpoint to ", bestpoint_dir)
    ...

model = transformer_model(model_name).to(device)
...

```

Error messages: 'transformer_model' object has no attribute 'save_pretrained'

```

'transformer_model' object has no attribute 'save_pretrained'

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

/tmp/ipykernel_23/1390676391.py in <module>

414 num_training_steps = total_steps)

415

--> 416 train(model, optimizer, scheduler, tokenizer, epochs, save_path, device)

417

418 print(max_accuracy, "\n", max_match)

/tmp/ipykernel_23/1390676391.py in train(model, optimizer, scheduler, tokenizer, max_epochs, save_path, device, val_freq)

385

386 # To save the model, uncomment the following lines

--> 387 model.save_pretrained(bestpoint_dir)

388 print("Saving model bestpoint to ", bestpoint_dir)

389

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in __getattr__(self, name)

1264 return modules[name]

1265 raise AttributeError("'{}' object has no attribute '{}'".format(

-> 1266 type(self).__name__, name))

1267

1268 def __setattr__(self, name: str, value: Union[Tensor, 'Module']) -> None:

AttributeError: 'transformer_model' object has no attribute 'save_pretrained'

```

### Expected behavior

```shell

`model.save_pretrained(...) `should work, I tried to fix the problem by using `model.module.save_pretrained(...)` and `torch.save(...)`, but failed.

How can I fix the problem? Thx!

```

### Checklist

- [X] I have read the migration guide in the readme. ([pytorch-transformers](https://github.com/huggingface/transformers#migrating-from-pytorch-transformers-to-transformers); [pytorch-pretrained-bert](https://github.com/huggingface/transformers#migrating-from-pytorch-pretrained-bert-to-transformers))

- [X] I checked if a related official extension example runs on my machine. | 04-14-2023 15:28:10 | 04-14-2023 15:28:10 | @sqinghua, this is happening because the class `transformer_model` inherits from PyTorch's nn.Module class, which doesn't have a `save_pretrained` method. `save_pretrained` is a transformers library specific method common to models which inherit from `PreTrainedModel`.

I'm not sure why `torch.save(...)` doesn't work. I would need more information to be able to help e.g. traceback and error message to know if it's a transformers related issue.

It should be possible to save the xlnet model out using `model.xlnet.save_pretrained(checkpoint)`. This won't save out any additional modeling which happens in `transformer_model` e.g. additional layers or steps in the forward pass beyond passing to `self.xlnet`. <|||||>@amyeroberts Thank you for your detailed answer, it was very helpful. :) |
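The point made in the answer above (the wrapper has no `save_pretrained`, but the wrapped backbone does) can be shown without loading any weights. The sketch below uses plain-Python stand-ins: `ToyBackbone` and `ToyWrapper` are hypothetical classes that only imitate the relationship between `XLNetModel` and the issue's `transformer_model`; they are not torch or transformers classes, and the file written here is a toy, not a real checkpoint.

```python
import json
import os
import tempfile


class ToyBackbone:
    """Stand-in for a PreTrainedModel subclass such as XLNetModel."""

    def __init__(self, hidden_size):
        self.hidden_size = hidden_size

    def save_pretrained(self, save_directory):
        os.makedirs(save_directory, exist_ok=True)
        path = os.path.join(save_directory, "config.json")
        with open(path, "w") as f:
            json.dump({"hidden_size": self.hidden_size}, f)
        return path


class ToyWrapper:
    """Stand-in for the custom wrapper module (transformer_model in the issue)."""

    def __init__(self):
        self.xlnet = ToyBackbone(hidden_size=8)


model = ToyWrapper()

# The wrapper itself does not expose save_pretrained ...
wrapper_has_method = hasattr(model, "save_pretrained")

# ... but the wrapped backbone does, so the call goes through the attribute.
with tempfile.TemporaryDirectory() as tmp_dir:
    saved_path = model.xlnet.save_pretrained(tmp_dir)
    saved_exists = os.path.exists(saved_path)
```

As the answer notes, saving through `model.xlnet` covers only the backbone; any extra layers defined in the wrapper would need to be saved separately.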

transformers | 22,772 | closed | Seq2SeqTrainer: Evict decoder_input_ids only when it is created from labels | # What does this PR do?

Fixes #22634 (what remains of the issue, the [failing FSMT command in this comment](https://github.com/huggingface/transformers/issues/22634#issuecomment-1500919952))

A previous PR (#22108) expanded the capabilities of the trainer, by delegating input selection to `.generate()`. However, it did manually evict `decoder_input_ids` from the inputs to make the SQUAD test pass, causing the issue seen above.

This PR makes a finer-grained eviction decision -- we only want to evict `decoder_input_ids` when it is built from `labels`. In this particular case, `decoder_input_ids` will likely have right-padding, which is unsupported by `.generate()`. | 04-14-2023 15:24:30 | 04-14-2023 15:24:30 | > Did you confirm the failing test now passes?

Yes :D Both the SQUAD test that made me add the previous eviction and the FSMT command that @stas00 shared are passing with this PR<|||||>_The documentation is not available anymore as the PR was closed or merged._ |
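The eviction rule described in the Seq2SeqTrainer PR above can be sketched as follows. This is an illustration under stated assumptions, not the PR's code: `shift_tokens_right` and `select_generation_inputs` are hypothetical helpers working on single Python lists, and comparing against the shifted labels is just one possible way to decide that `decoder_input_ids` was built from `labels`; the check used in the actual PR may differ.

```python
IGNORE_INDEX = -100  # label value that the loss ignores


def shift_tokens_right(labels, pad_token_id, decoder_start_token_id):
    """Build decoder inputs from labels: prepend the start token, drop the last label."""
    shifted = [decoder_start_token_id] + list(labels[:-1])
    return [pad_token_id if t == IGNORE_INDEX else t for t in shifted]


def select_generation_inputs(inputs, pad_token_id, decoder_start_token_id):
    """Drop decoder_input_ids only when they are just the shifted (right-padded) labels."""
    gen_inputs = dict(inputs)
    labels = gen_inputs.get("labels")
    decoder_input_ids = gen_inputs.get("decoder_input_ids")
    if labels is not None and decoder_input_ids is not None:
        from_labels = shift_tokens_right(labels, pad_token_id, decoder_start_token_id)
        if list(decoder_input_ids) == from_labels:
            del gen_inputs["decoder_input_ids"]
    return gen_inputs


# Case 1: decoder_input_ids derived from labels (right-padded) are evicted.
derived = select_generation_inputs(
    {"input_ids": [7, 8, 2], "labels": [5, 6, 2, -100, -100], "decoder_input_ids": [0, 5, 6, 2, 1]},
    pad_token_id=1,
    decoder_start_token_id=0,
)

# Case 2: a user-supplied decoder prompt is kept for generation.
prompted = select_generation_inputs(
    {"input_ids": [7, 8, 2], "labels": [5, 6, 2, -100, -100], "decoder_input_ids": [0, 9]},
    pad_token_id=1,
    decoder_start_token_id=0,
)
```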

transformers | 22,771 | open | TF Swiftformer | ### Model description

Add the TensorFlow port of the SwiftFormer model. See related issue: #22685

To be done once the SwiftFormer model has been added: #22686

### Open source status

- [X] The model implementation is available

- [X] The model weights are available

### Provide useful links for the implementation

Original repo: https://github.com/amshaker/swiftformer | 04-14-2023 14:53:55 | 04-14-2023 14:53:55 | Hi! I would like to take on this as my first issue if possible. Is that okay?<|||||>@joaocmd Of course! Cool issue to start on 🤗

If you haven't seen it already, there's a detailed guide in the docs on porting a model to TensorFlow: https://huggingface.co/docs/transformers/add_tensorflow_model

It's best to wait until the PyTorch implementation is merged in, which will be at least a day or two away. <|||||>Sounds great @amyeroberts, thank you :)

I'll start looking into it once the PyTorch PR is merged.<|||||>Any news on this tf model? <|||||>Hi @D-Roberts, I haven't started looking into it as the pytorch version has not yet been merged.<|||||>Hi @joaocmd , the PyTorch version of SwiftFormer is now merged so you can continue working on the TensorFlow version of it.<|||||>Hi @shehanmunasinghe, I'm on it, thanks!<|||||>Hi @amyeroberts ,

May I proceed to work on this issue if it has not been sorted yet?<|||||>@sqali There is currently an open PR which is actively being worked on by @joaocmd: #23342 <|||||>Hi @amyeroberts ,

Is there any other issue that you are working on that I can help you with?<|||||>@sqali Here's a page in the docs all about different ways to contribute and how to find issues to work on: https://huggingface.co/docs/transformers/main/contributing. I'd suggest looking at issues marked with the 'Good First Issues' tag and finding one which no-one is currently working on: https://github.com/huggingface/transformers/labels/Good%20First%20Issue

|

transformers | 22,770 | closed | Tweak ESM tokenizer for Nucleotide Transformer | Nucleotide Transformer is a model that takes DNA inputs. It uses the same model architecture as the protein model ESM, but in addition to a different vocabulary it tokenizes inputs without a `<sep>` or `<eos>` token at the end. This PR makes a small tweak to the tokenization code for ESM, so that it doesn't try to add `self.eos_token_id` to sequences when the tokenizer does not have an `eos_token` set. With this change, we can fully support Nucleotide Transformer as an ESM checkpoint. | 04-14-2023 13:48:07 | 04-14-2023 13:48:07 | _The documentation is not available anymore as the PR was closed or merged._ |
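The tokenizer tweak described in the Nucleotide Transformer PR above amounts to making the end-of-sequence token optional. A minimal sketch, assuming a standalone function with hypothetical token ids (the real tokenizer method and its signature are not shown in this thread):

```python
def build_inputs_with_special_tokens(token_ids, cls_token_id, eos_token_id=None):
    """Prepend the CLS token; append EOS only if the tokenizer defines one."""
    inputs = [cls_token_id] + list(token_ids)
    if eos_token_id is not None:
        inputs.append(eos_token_id)
    return inputs


# Protein-style tokenizer with an EOS token (ids are made up for illustration).
protein_inputs = build_inputs_with_special_tokens([5, 6, 7], cls_token_id=0, eos_token_id=2)

# Nucleotide-style tokenizer with no EOS token set: nothing is appended.
dna_inputs = build_inputs_with_special_tokens([5, 6, 7], cls_token_id=3)
```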

transformers | 22,769 | open | Error projecting concatenated Fourier Features. | ### System Info

- `transformers` version: 4.27.4

- Platform: macOS-13.3.1-arm64-arm-64bit

- Python version: 3.9.16

- Huggingface_hub version: 0.13.4

- PyTorch version (GPU?): 2.0.0 (False)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: False

- Using distributed or parallel set-up in script?: False

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

```python

import torch

from transformers.models.perceiver.modeling_perceiver import PerceiverImagePreprocessor

input_preprocessor = PerceiverImagePreprocessor(

config,

prep_type="conv",

spatial_downsample=4,

temporal_downsample=1,

position_encoding_type="fourier",

in_channels=4,

out_channels=256,

conv2d_use_batchnorm=True,

concat_or_add_pos="concat",

project_pos_dim=128,

fourier_position_encoding_kwargs = dict(

num_bands=64,

max_resolution=[25,50], # 4x downsample include

)

)

test = torch.randn(1,4,100,200)

inputs, modality_sizes, inputs_without_pos = input_preprocessor(test)

```

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

Cell In[4], line 23

21 test = torch.randn(1,4,100,200)

22 # preprocessor outputs a tuple

---> 23 inputs, modality_sizes, inputs_without_pos = input_preprocessor(test)

25 print(inputs.shape)

26 print(inputs_without_pos.shape)

torch/nn/modules/module.py:1501), in Module._call_impl(self, *args, **kwargs)

1496 # If we don't have any hooks, we want to skip the rest of the logic in

1497 # this function, and just call forward.

1498 if not (self._backward_hooks or self._backward_pre_hooks or self._forward_hooks or self._forward_pre_hooks

1499 or _global_backward_pre_hooks or _global_backward_hooks

1500 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1501 return forward_call(*args, **kwargs)

1502 # Do not call functions when jit is used

1503 full_backward_hooks, non_full_backward_hooks = [], []

transformers/models/perceiver/modeling_perceiver.py:3226), in PerceiverImagePreprocessor.forward(self, inputs, pos, network_input_is_1d)

3223 else:

3224 raise ValueError("Unsupported data format for conv1x1.")

-> 3226 inputs, inputs_without_pos = self._build_network_inputs(inputs, network_input_is_1d)

3227 modality_sizes = None # Size for each modality, only needed for multimodal

3229 return inputs, modality_sizes, inputs_without_pos

transformers/models/perceiver/modeling_perceiver.py:3169), in PerceiverImagePreprocessor._build_network_inputs(self, inputs, network_input_is_1d)

3166 pos_enc = self.position_embeddings(index_dims, batch_size, device=inputs.device, dtype=inputs.dtype)

3168 # Optionally project them to a target dimension.

-> 3169 pos_enc = self.positions_projection(pos_enc)

3171 if not network_input_is_1d:

3172 # Reshape pos to match the input feature shape

3173 # if the network takes non-1D inputs

3174 sh = inputs.shape

torch/nn/modules/module.py:1501), in Module._call_impl(self, *args, **kwargs)

1496 # If we don't have any hooks, we want to skip the rest of the logic in

1497 # this function, and just call forward.

1498 if not (self._backward_hooks or self._backward_pre_hooks or self._forward_hooks or self._forward_pre_hooks

1499 or _global_backward_pre_hooks or _global_backward_hooks

1500 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1501 return forward_call(*args, **kwargs)

1502 # Do not call functions when jit is used

1503 full_backward_hooks, non_full_backward_hooks = [], []

torch/nn/modules/linear.py:114), in Linear.forward(self, input)

113 def forward(self, input: Tensor) -> Tensor:

--> 114 return F.linear(input, self.weight, self.bias)

RuntimeError: mat1 and mat2 shapes cannot be multiplied (1176x258 and 256x128)

### Expected behavior

Linear projection input number of features should equal the number of positional features.

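When concatenating Fourier features, the expected number of positional features is (2 * num_bands * num_dims) + num_dims: a sine and a cosine feature per band and dimension, plus the raw positions. For the 2D input above that is (2 * 64 * 2) + 2 = 258, which matches the 258 in the error message.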

The build_position_encoding() function takes as input the out_channels which is used to define the input number of features for linear projection. PerceiverImagePreprocessor incorrectly passes in the embedding dimension to out_channels for positional encoding projection.

Possible Fix:

Use the positional encoding class method output_size() to pull number of input features for projection within build_position_encoding() function.

positions_projection = nn.Linear(output_pos_enc.output_size(), project_pos_dim) if project_pos_dim > 0 else nn.Identity() | 04-14-2023 13:45:32 | 04-14-2023 13:45:32 | @sr-ndai Thanks for raising this issue and detailed reproduction.

Could you share the config or checkpoint being used in this example? <|||||>@amyeroberts No problem, here is the configuration I used:

```python

from transformers import PerceiverConfig

config = PerceiverConfig(

num_latents = 128,

d_latents=256,

num_blocks=6,

qk_channels = 256,

num_self_attends_per_block=2,

num_self_attention_heads=8,

num_cross_attention_heads=8,

self_attention_widening_factor=2,

cross_attention_widening_factor=1,

hidden_act='gelu_new',

attention_probs_dropout_prob=0.1,

use_query_residual=True,

num_labels = 128 # For decoder

)

``` |
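The feature count discussed in the expected-behavior section of the Perceiver issue above can be checked with a few lines of arithmetic. The helper below is a hypothetical sketch: the parameter names `concat_pos` and `sine_only` are assumptions chosen to mirror the formula, and the function is not the library's own `output_size()` method.

```python
def fourier_position_encoding_size(num_bands, num_dims, concat_pos=True, sine_only=False):
    """Number of positional features produced by a Fourier position encoding."""
    size = num_bands * num_dims      # one frequency band per spatial dimension
    if not sine_only:
        size *= 2                    # sine and cosine for every band
    if concat_pos:
        size += num_dims             # raw positions concatenated to the features
    return size


# Values from the reproduction: num_bands=64, 2D input (max_resolution=[25, 50]).
pos_features = fourier_position_encoding_size(num_bands=64, num_dims=2)

# The projection layer in the issue was built with out_channels=256 as its input size.
projection_in_features = 256
mismatch = pos_features != projection_in_features
```

With these values the encoding has 258 features while the projection expects 256, which is the `1176x258 and 256x128` shape error reported in the traceback.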

transformers | 22,768 | closed | How to avoid adding double start of token<s><s> in TrOCR during training ? | **Describe the bug**

The model I am using (TrOCR Model):

The problem arises when using:

* [x] the official example scripts: done by the nice tutorial [(fine_tune)](https://github.com/NielsRogge/Transformers-Tutorials/blob/master/TrOCR/Fine_tune_TrOCR_on_IAM_Handwriting_Database_using_Seq2SeqTrainer.ipynb) @NielsRogge

* [x] my own modified scripts: (as the script below )

```

import torch
from PIL import Image
from torch.utils.data import Dataset
from transformers import (
    Seq2SeqTrainer,
    Seq2SeqTrainingArguments,
    TrOCRProcessor,
    VisionEncoderDecoderModel,
    default_data_collator,
)

processor = TrOCRProcessor.from_pretrained("microsoft/trocr-large-handwritten")

def compute_metrics(pred):
    labels_ids = pred.label_ids
    print('labels_ids',len(labels_ids), type(labels_ids),labels_ids)
    pred_ids = pred.predictions
    print('pred_ids',len(pred_ids), type(pred_ids),pred_ids)
    pred_str = processor.batch_decode(pred_ids, skip_special_tokens=True)
    print(pred_str)
    labels_ids[labels_ids == -100] = processor.tokenizer.pad_token_id
    label_str = processor.batch_decode(labels_ids, skip_special_tokens=True)
    print(label_str)
    # cer_metric is defined elsewhere in the script (not shown in the issue)
    cer = cer_metric.compute(predictions=pred_str, references=label_str)
    return {"cer": cer}

class Dataset(Dataset):
    def __init__(self, root_dir, df, processor, max_target_length=128):
        self.root_dir = root_dir
        self.df = df
        self.processor = processor
        self.max_target_length = max_target_length

    def __len__(self):
        return len(self.df)

    def __getitem__(self, idx):
        # get file name + text
        file_name = self.df['file_name'][idx]
        text = self.df['text'][idx]
        # prepare image (i.e. resize + normalize)
        image = Image.open(self.root_dir + file_name).convert("RGB")
        pixel_values = self.processor(image, return_tensors="pt").pixel_values
        # add labels (input_ids) by encoding the text
        labels = self.processor.tokenizer(text,
                                          padding="max_length",
                                          truncation=True,
                                          max_length=self.max_target_length).input_ids
        # important: make sure that PAD tokens are ignored by the loss function
        labels = [label if label != self.processor.tokenizer.pad_token_id else -100 for label in labels]
        # encoding
        return {"pixel_values": pixel_values.squeeze(), "labels": torch.tensor(labels)}

#Train a model
model = VisionEncoderDecoderModel.from_pretrained("microsoft/trocr-large-handwritten")

# set special tokens used for creating the decoder_input_ids from the labels
model.config.decoder_start_token_id = processor.tokenizer.cls_token_id
model.config.pad_token_id = processor.tokenizer.pad_token_id

# make sure vocab size is set correctly
model.config.vocab_size = model.config.decoder.vocab_size

# set beam search parameters
model.config.eos_token_id = processor.tokenizer.sep_token_id
model.config.max_length = 64
model.config.early_stopping = True
model.config.no_repeat_ngram_size = 3
model.config.length_penalty = 2.0
model.config.num_beams = 4
model.config.decoder.is_decoder = True
model.config.decoder.add_cross_attention = True

working_dir = './test/'
training_args = Seq2SeqTrainingArguments(...)

# instantiate trainer (train_dataset is built elsewhere in the script, not shown in the issue)
trainer = Seq2SeqTrainer(model=model, args=training_args, train_dataset = train_dataset,
                         data_collator = default_data_collator, )
trainer.train()

# python3 train.py path/to/labels path/to/images/

```

- Platform: Linux Ubuntu distribution [GCC 9.4.0] on Linux

- PyTorch version (GPU?): 0.8.2+cu110

- transformers: 4.22.2

- Python version:3.8.10


To **Reproduce** Steps to reproduce the behavior:

1. After training, or during training when the metrics are computed, the model's predictions start with a doubled start token `<s><s>`, i.e. ids `[0,0, ......,2,1,1, 1 ]`

2. Here is an example from the training phase showing the generated tokens in `compute_metrics`:

Input predictions: `[[0,0,506,4422,8046,2,1,1,1,1,1]]`

Input references: `[[0,597,2747 ...,1,1,1]] `

3. Other examples from testing the model: [screenshot](https://i.stack.imgur.com/sWzbf.png)

**Expected behavior**

For the two cases above:

During `training`, I expect the input predictions to be: `[[0,506,4422, ... ,8046,2,1,1,1,1,1 ]] `

During `testing`, I expect the generated text to have no doubled `<s><s>`:

`tensor([[0,11867,405,22379,1277,..........,368,2]]) `

`<s>ennyit erről, tőlem fényképezz amennyit akarsz, a véleményem akkor</s>`

cc @ArthurZucker | 04-14-2023 11:15:49 | 04-14-2023 11:15:49 | cc @ArthurZucker <|||||>+1<|||||>Thanks to Natabara for his comment. The solution is simple: skip the `<s>` start token coming from the tokenizer with `labels = labels[1:]`, because the tokenizer adds a start token `<s>` and TrOCR adds another one automatically, as mentioned in the TrOCR paper.

```
def __getitem__(self, idx):
    # get file name + text
    file_name = self.df['file_name'][idx]
    text = self.df['text'][idx]
    # prepare image (i.e. resize + normalize)
    image = Image.open(self.root_dir + file_name).convert("RGB")
    pixel_values = self.processor(image, return_tensors="pt").pixel_values
    # add labels (input_ids) by encoding the text
    labels = self.processor.tokenizer(text,
                                      padding="max_length",
                                      truncation=True,
                                      max_length=self.max_target_length).input_ids
    # important: make sure that PAD tokens are ignored by the loss function
    labels = [label if label != self.processor.tokenizer.pad_token_id else -100 for label in labels]
    # drop the tokenizer's leading <s>; the model adds its own start token
    labels = labels[1:]
    return {"pixel_values": pixel_values.squeeze(), "labels": torch.tensor(labels)}
``` |

transformers | 22,767 | closed | Mobilenet_v1 Dropout probability | Hi @amyeroberts, [here](https://github.com/huggingface/transformers/pull/17799) is the pull request with an interesting bug. Mobilenetv2 was made from a TensorFlow checkpoint, but in TensorFlow the dropout prob is the fraction of **non zero** values, whereas in PyTorch it is the fraction of **zero** values. It should be **1 - prob** for PyTorch.

Load Mobilenetv1 model from 'google/mobilenet_v1_1.0_224'

Dropout prob should be 1 - tf_prob | 04-14-2023 09:43:34 | 04-14-2023 09:43:34 | @andreysher Thanks for raising this issue. The dropout rate `p` or `rate` is defined the same for TensorFlow and PyTorch layers.

From the [TensorFlow documentation](https://www.tensorflow.org/api_docs/python/tf/keras/layers/Dropout):

> The Dropout layer randomly sets input units to 0 with a frequency of `rate` at each step during training time

From the [PyTorch documentation](https://pytorch.org/docs/stable/generated/torch.nn.Dropout.html):

> During training, randomly zeroes some of the elements of the input tensor with probability `p`

Is there something specific about the original MobileNet implementation or checkpoints which means this doesn't apply?

<|||||>Thanks for this clarification! But I don't understand why the default dropout_prob in the PR is 0.999. If I load the model with

```

from transformers import AutoImageProcessor, MobileNetV1ForImageClassification

model = MobileNetV1ForImageClassification.from_pretrained("google/mobilenet_v1_1.0_224")

```

I get a model with dropout p = 0.999. This is unexpected. Could you explain why this value is present in the pretrained model?<|||||>@andreysher Ah, OK, I think I see what the issue is. The dropout value is p=0.999 because it's set in the [model config](https://huggingface.co/google/mobilenet_v1_1.0_224/blob/dd9bc45ff57d9492e00d48547587baf03f0cdade/config.json#L5). This is a mistake: as you identified, the probability in the original mobilenet repo is the [keep probability](https://github.com/tensorflow/models/blob/ad32e81e31232675319d5572b78bc196216fd52e/research/slim/nets/mobilenet_v1.py#L306), i.e. `(1 - p)`. I've opened PRs on the hub to update the model configs:

- https://huggingface.co/google/mobilenet_v1_0.75_192/discussions/2

- https://huggingface.co/google/mobilenet_v2_1.0_224/discussions/3

- https://huggingface.co/google/mobilenet_v1_1.0_224/discussions/2

- https://huggingface.co/google/mobilenet_v2_0.35_96/discussions/2

- https://huggingface.co/google/mobilenet_v2_1.4_224/discussions/2

- https://huggingface.co/google/mobilenet_v2_0.75_160/discussions/2
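To make the keep-probability versus drop-probability distinction concrete, here is a minimal sketch in plain Python. The helper name `keep_prob_to_drop_rate` is hypothetical (it is not part of `transformers`, TensorFlow, or PyTorch) and only illustrates the `1 - p` conversion discussed in this thread.

```python
def keep_prob_to_drop_rate(keep_prob: float) -> float:
    """Convert a keep probability into a drop rate.

    Hypothetical helper for illustration only: a keep probability is the
    chance that a unit survives, while a drop rate is the chance it is zeroed.
    """
    if not 0.0 <= keep_prob <= 1.0:
        raise ValueError(f"keep_prob must be in [0, 1], got {keep_prob}")
    return 1.0 - keep_prob


# 0.999 is the value from the thread: meant as a keep probability,
# but stored in the config where a drop rate is expected.
drop_rate = keep_prob_to_drop_rate(0.999)
print(f"{drop_rate:.3f}")
```

Under this reading, a config value of 0.999 used directly as a drop rate would zero almost every activation during training, which is why the hub configs needed updating.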

Thanks for flagging this and for the time taken to explain. <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 22,766 | closed | Fix failing torchscript tests for `CpmAnt` model | # What does this PR do?

The failure is caused by some type issues (dict, tuple, etc) in the outputs. | 04-14-2023 09:21:03 | 04-14-2023 09:21:03 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 22,765 | closed | Fix word_ids hyperlink | Fixes https://github.com/huggingface/transformers/issues/22729 | 04-14-2023 09:13:29 | 04-14-2023 09:13:29 | CC @amyeroberts <|||||>_The documentation is not available anymore as the PR was closed or merged._ |

transformers | 22,764 | closed | Fix a mistake in Llama weight converter log output. | # What does this PR do?

Fixes a mistake in Llama weight converter log output.

Before: `Saving a {tokenizer_class} to {tokenizer_path}`

After: `Saving a LlamaTokenizerFast to outdir.`

## Before submitting

- [X] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

@ArthurZucker and @younesbelkada

| 04-14-2023 08:55:01 | 04-14-2023 08:55:01 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 22,763 | closed | Generate: pin number of beams in BART test | # What does this PR do?

A recent change added the `num_beams==1` requirement for contrastive search. One of the tests started failing because of that change -- BART has `num_beams=4` [in its config](https://huggingface.co/facebook/bart-large-cnn/blob/main/config.json#L49), so the test was now triggering beam search, and not contrastive search. This PR corrects it.

(The long-term solution is to add argument validation to detect these cases)

| 04-14-2023 08:28:33 | 04-14-2023 08:28:33 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 22,762 | closed | RecursionError: maximum recursion depth exceeded while getting the str of an object. | **System Info**

Python 3.8.10

transformers 4.29.0.dev0

sentencepiece 0.1.97

**Information**

- [x] The official example scripts

- [ ] My own modified scripts

**Reproduction**

In https://github.com/CarperAI/trlx/tree/main/examples

```python ppo_sentiments_llama.py```

The loops occur as follows:

/usr/local/lib/python3.8/dist-packages/transformers/tokenization_utils_fast.py:250 in │

│ convert_tokens_to_ids │

│ │

│ 247 │ │ │ return None │

│ 248 │ │ │

│ 249 │ │ if isinstance(tokens, str): │

│ ❱ 250 │ │ │ return self._convert_token_to_id_with_added_voc(tokens) │

│ 251 │ │ │

│ 252 │ │ ids = [] │

│ 253 │ │ for token in tokens: │

│ │

│ /usr/local/lib/python3.8/dist-packages/transformers/tokenization_utils_fast.py:260 in │

│ _convert_token_to_id_with_added_voc │

│ │

│ 257 │ def _convert_token_to_id_with_added_voc(self, token: str) -> int: │

│ 258 │ │ index = self._tokenizer.token_to_id(token) │

│ 259 │ │ if index is None: │

│ ❱ 260 │ │ │ return self.unk_token_id │

│ 261 │ │ return index │

│ 262 │ │

│ 263 │ def _convert_id_to_token(self, index: int) -> Optional[str]: │

│ │

│ /usr/local/lib/python3.8/dist-packages/transformers/tokenization_utils_base.py:1141 in │

│ unk_token_id │

│ │

│ 1138 │ │ """ │

│ 1139 │ │ if self._unk_token is None: │

│ 1140 │ │ │ return None │

│ ❱ 1141 │ │ return self.convert_tokens_to_ids(self.unk_token) │

│ 1142 │ │

│ 1143 │ @property │

│ 1144 │ def sep_token_id(self) -> Optional[int]: │

│ │

│ /usr/local/lib/python3.8/dist-packages/transformers/tokenization_utils_fast.py:250 in │

│ convert_tokens_to_ids │

│ │

│ 247 │ │ │ return None │

│ 248 │ │ │

│ 249 │ │ if isinstance(tokens, str): │

│ ❱ 250 │ │ │ return self._convert_token_to_id_with_added_voc(tokens) │

│ 251 │ │ │

│ 252 │ │ ids = [] │

│ 253 │ │ for token in tokens:

...

Until

/usr/local/lib/python3.8/dist-packages/transformers/tokenization_utils_base.py:1021 in unk_token │

│ │

│ 1018 │ │ │ if self.verbose: │

│ 1019 │ │ │ │ logger.error("Using unk_token, but it is not set yet.") │

│ 1020 │ │ │ return None │

│ ❱ 1021 │ │ return str(self._unk_token) │

│ 1022 │ │

│ 1023 │ @property │

│ 1024 │ def sep_token(self) -> str:

RecursionError: maximum recursion depth exceeded while getting the str of an object

**Expected behavior**

Is the algorithm expected to call the function `convert_tokens_to_ids` in `tokenization_utils.py` instead of `tokenization_utils_fast.py`?

Thanks!

| 04-14-2023 08:22:39 | 04-14-2023 08:22:39 | cc @ArthurZucker <|||||>same problem, is there any progress?<|||||>Hey! The main issue is that they did not update the tokenizer files at `"decapoda-research/llama-7b-hf"` but they are using the latest version of transformers. The tokenizer was fixed and corrected (see #22402). Nothing we can do on our end...<|||||>@ArthurZucker I am facing a similar issue with openllama

```python

save_dir = "../open_llama_7b_preview_300bt/open_llama_7b_preview_300bt_transformers_weights/"

tokenizer = AutoTokenizer.from_pretrained(save_dir)

tokenizer.bos_token_id

```

Calling `tokenizer.bos_token_id` causes a max recursion depth error.

```python

tokenizer

LlamaTokenizerFast(name_or_path='../open_llama_7b_preview_300bt/open_llama_7b_preview_300bt_transformers_weights/', vocab_size=32000, model_max_length=1000000000000000019884624838656, is_fast=True, padding_side='left', truncation_side='right', special_tokens={'bos_token': AddedToken("", rstrip=False, lstrip=False, single_word=False, normalized=True), 'eos_token': AddedToken("", rstrip=False, lstrip=False, single_word=False, normalized=True), 'unk_token': AddedToken("", rstrip=False, lstrip=False, single_word=False, normalized=True)}, clean_up_tokenization_spaces=False)

```

`transformers version = 4.29.1`

`tokenizer_config.json`

```

{

"bos_token": "",

"eos_token": "",

"model_max_length": 1e+30,

"tokenizer_class": "LlamaTokenizer",

"unk_token": ""

}

```

Initializing as follows works, but I am not sure this should be needed:

```

tokenizer = AutoTokenizer.from_pretrained(save_dir, unk_token="<unk>",

bos_token="<s>",

eos_token="</s>")

```
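The call chain in the traceback above can be sketched with a toy class. This is a hypothetical stand-in, not the real `transformers` tokenizer: it only assumes, as the traceback suggests, that `unk_token_id` looks up `unk_token` through `convert_tokens_to_ids`, which falls back to `unk_token_id` when the token is not in the vocabulary.

```python
class ToyTokenizer:
    """Hypothetical stand-in mirroring the call chain in the traceback."""

    def __init__(self, vocab, unk_token):
        self.vocab = vocab
        self.unk_token = unk_token

    @property
    def unk_token_id(self):
        if self.unk_token is None:
            return None
        return self.convert_tokens_to_ids(self.unk_token)

    def convert_tokens_to_ids(self, token):
        index = self.vocab.get(token)
        if index is None:
            # Unknown token: fall back to unk_token_id, which in turn
            # looks up unk_token again through this same method.
            return self.unk_token_id
        return index


def recursion_triggered(tokenizer):
    """Return True if reading unk_token_id recurses until Python gives up."""
    try:
        tokenizer.unk_token_id
    except RecursionError:
        return True
    return False


vocab = {"<unk>": 0, "<s>": 1, "</s>": 2}
broken = ToyTokenizer(vocab, unk_token="")      # empty string, as in the config above
fixed = ToyTokenizer(vocab, unk_token="<unk>")  # token that exists in the vocabulary

print(recursion_triggered(broken), recursion_triggered(fixed))
```

In this sketch the empty-string `unk_token` is never found in the vocabulary, so the two methods call each other without end, while passing an `unk_token` that exists breaks the cycle. That is consistent with the workaround of passing explicit special tokens to `from_pretrained`.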

<|||||>So.... Again, if you are not using the latest / most recently converted tokenizer, I cannot help you. Check out [huggyllama/llama-7b](https://huggingface.co/huggyllama/llama-7b), which has a working tokenizer. |

transformers | 22,761 | closed | Pix2struct: doctest fix | # What does this PR do?

Fixes the failing doctest: different machines may produce minor FP32 differences. This PR formats the printed number to a few decimal places. | 04-14-2023 08:02:09 | 04-14-2023 08:02:09 | oops, wrong core maintainer :D<|||||>_The documentation is not available anymore as the PR was closed or merged._ |

transformers | 22,760 | closed | WhisperForAudioClassification RuntimeError tensor size doesn't match | ### System Info

- `transformers` version: 4.28.0

- Platform: Linux-5.4.190-107.353.amzn2.x86_64-x86_64-with-glibc2.31

- Python version: 3.9.16

- Huggingface_hub version: 0.13.4

- Safetensors version: not installed

- PyTorch version (GPU?): 2.0.0+cu117 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: Multi-GPU on the same machine

### Who can help?

@sanchit-gandhi I am fine-tuning a WhisperForAudioClassification model and getting the error below. The dataset and script work fine when switching to the Wav2Vec2 model.

File "/opt/conda/envs/voice/lib/python3.9/site-packages/torch/nn/parallel/parallel_apply.py", line 64, in _worker

output = module(*input, **kwargs)

File "/opt/conda/envs/voice/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "/home/jovyan/.local/lib/python3.9/site-packages/transformers/models/whisper/modeling_whisper.py", line 1739, in forward

encoder_outputs = self.encoder(

File "/opt/conda/envs/voice/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "/home/jovyan/.local/lib/python3.9/site-packages/transformers/models/whisper/modeling_whisper.py", line 823, in forward

hidden_states = inputs_embeds + embed_pos

RuntimeError: The size of tensor a (750) must match the size of tensor b (1500) at non-singleton dimension 1

### Information

- [X] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

Followed this tutorial, and switched Wav2vec2 to WhisperForAudioClassification model.

Using a dataset with 100K+ wav files.

Training fails with the above error.

### Expected behavior

It would train with no errors. | 04-14-2023 06:51:52 | 04-14-2023 06:51:52 | Hey @xiao1ongbao! I'm hoping that your fine-tuning run was successful! Let me know if you encounter any issues - more than happy to help here 🤗<|||||>Hey! How did you solve that?<|||||>Do you have a reproducible code snippet to trigger the error @lnfin? I didn't encounter this in my experiments! |

transformers | 22,759 | closed | chore: allow protobuf 3.20.3 requirement | Allow latest bugfix release for protobuf (3.20.3)

# What does this PR do?

Relaxes the `protobuf` requirement of the Python library so that the latest bugfix release (3.20.3) can be used, instead of capping the dependency at 3.20.2 (<=3.20.2).

| 04-14-2023 06:42:50 | 04-14-2023 06:42:50 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_22759). All of your documentation changes will be reflected on that endpoint.<|||||>cc @ydshieh <|||||>Hi @jose-turintech Thank you for this PR ❤️ .

Unfortunately, as you can see in the CI results, the changes cause some errors

```python

FAILED tests/models/albert/test_tokenization_albert.py::AlbertTokenizationTest::test_pickle_subword_regularization_tokenizer

FAILED tests/models/bert_generation/test_tokenization_bert_generation.py::BertGenerationTokenizationTest::test_pickle_subword_regularization_tokenizer

```

(Fatal Python error: Segmentation fault)

So we are not able to merge this PR so far. There might be some way to fix these 2 issues, but I am not sure. Let me know if you want to dive into this.

<|||||>Hey @jose-turintech ,

Thanks for submitting this PR! The latest `tensorflow==2.12` release depends on `protobuf >= 3.20.3`, so this would unblock installing the latest `tensorflow` alongside `transformers`.

After setting up this PR's environment, I just ran this locally and those tests seem to pass. Would it be possible to trigger a re-run @ydshieh? Alternatively, would it be possible to get extra logs on the CI failures? <|||||>> Hey @jose-turintech ,

>

> Thanks for submitting this PR! The latest `tensorflow==2.12` release depends on `protobuf >= 3.20.3`, so this would unblock installing the latest `tensorflow` alongside `transformers`.

>

> After setting up this PR's environment, I just ran this locally and those tests seem to pass. Would it be possible to trigger a re-run @ydshieh? Alternatively, would it be possible to get extra logs on the CI failures?

Hello @adriangonz, I just updated my branch with the latest changes on origin to test whether the PR checks would retrigger. It seems they did, so I guess we'll see within a few minutes whether the PR passes all checks.

Thanks for your comment.<|||||>As you can see in [the latest run](https://app.circleci.com/pipelines/github/huggingface/transformers/63908/workflows/bcaedcfc-54af-42e8-9e9c-a97d9612b185/jobs/789487), the 2 failed tests are still there.

The error (shown at the end below) is that some worker processes crashed, and `pytest` gives no more informative log.

When I run the two failed tests individually on my local env., they pass.

However, the latest release of `tensorflow-probability` broke everything in the CI, because it requires `TensorFlow 2.12`, which we don't support yet. We will therefore take a deeper look to see if we can unblock this situation.

```bash

=================================== FAILURES ===================================

______ tests/models/bert_generation/test_tokenization_bert_generation.py _______

[gw0] linux -- Python 3.8.12 /home/circleci/.pyenv/versions/3.8.12/bin/python

worker 'gw0' crashed while running 'tests/models/bert_generation/test_tokenization_bert_generation.py::BertGenerationTokenizationTest::test_pickle_subword_regularization_tokenizer'

_______________ tests/models/albert/test_tokenization_albert.py ________________

[gw6] linux -- Python 3.8.12 /home/circleci/.pyenv/versions/3.8.12/bin/python

worker 'gw6' crashed while running 'tests/models/albert/test_tokenization_albert.py::AlbertTokenizationTest::test_pickle_subword_regularization_tokenizer'

``` <|||||>@ydshieh I've merged origin into this branch once again to retrigger the CI checks, to see whether the tests pass after yesterday's Hugging Face downtime. The tests pass now after your modifications :) .

The only difference from main is that the tensorflow-probability>0.20 restriction is not applied in this CI build.

Thanks for taking the time to look into the issue.<|||||>@jose-turintech I accidentally pushed experimental changes to this PR branch, I am really sorry. The CI is green because I removed some third-party packages (TensorFlow, for example), which should be kept.

I am still looking into how to resolve the issue. There is a big problem (at least in the desired environment when running inside the CircleCI runner). I will update you soon.<|||||>In the meantime, let us convert this PR to draft mode, so it won't be merged by accident. Thank you for your understanding.<|||||>## Issue

(for the hack to fix, see the next reply)

This PR wants to use `protobuf==3.20.3` so we can use `tensorflow==2.12`. However, some tests, such as `test_pickle_subword_regularization_tokenizer`, fail in this environment. The following describes the issue.

- First, set up the env. with `tensorflow-cpu==2.12` and `protobuf==3.20.3`, but without `torch` installed.

- Use a `sentencepiece` tokenizer with `enable_sampling=True`

- Run the code with `pytest` --> crash (core dump)

- (run as a script --> no crash)

- (run the code with pytest with torch also installed --> no crash)

Here are 2 code snippets, one that reproduces the crash and one that does not (running in Python 3.8 is more likely to reproduce it):

______________________________________________________________________________

- create `tests/test_foo.py` and run `python -m pytest -v tests/test_foo.py` --> crash

```

from transformers import AlbertTokenizer

def test_foo():
    fn = "tests/fixtures/spiece.model"
    text = "This is a test for subword regularization."

    # `encode` works with `False`
    sp_model_kwargs = {"enable_sampling": False}
    tokenizer = AlbertTokenizer(fn, sp_model_kwargs=sp_model_kwargs)
    # test 1 (usage in `transformers`)
    # _ = tokenizer.tokenize(text)
    # test 2 (direct use in sentencepiece)
    pieces = tokenizer.sp_model.encode(text, out_type=str)

    # `encode` fails with `True` if torch is not installed and tf==2.12
    sp_model_kwargs = {"enable_sampling": True}
    tokenizer = AlbertTokenizer(fn, sp_model_kwargs=sp_model_kwargs)
    # test 1 (usage in `transformers`)
    # _ = tokenizer.tokenize(text)
    # This gives `Segmentation fault (core dumped)` under the above mentioned conditions
    # test 2 (direct use in sentencepiece)
    pieces = tokenizer.sp_model.encode(text, out_type=str)
    print(pieces)

```

- create `foo.py` and run `python foo.py` -> no crash

```

from transformers import AlbertTokenizer

fn = "tests/fixtures/spiece.model"

text = "This is a test for subword regularization."

# `encode` works with `False`

sp_model_kwargs = {"enable_sampling": False}

tokenizer = AlbertTokenizer(fn, sp_model_kwargs=sp_model_kwargs)

# test 1 (usage in `transformers`)

# _ = tokenizer.tokenize(text)

# test 2 (direct use in sentencepiece)

pieces = tokenizer.sp_model.encode(text, out_type=str)

# `encode` works with `True` if torch is not installed and tf==2.12

sp_model_kwargs = {"enable_sampling": True}

tokenizer = AlbertTokenizer(fn, sp_model_kwargs=sp_model_kwargs)

# test 1 (usage in `transformers`)

# _ = tokenizer.tokenize(text)

# This works

# test 2 (direct use in sentencepiece)

pieces = tokenizer.sp_model.encode(text, out_type=str)

print(pieces)

```<|||||>## (Hacky) Fix

The relevant failing tests are:

- test_subword_regularization_tokenizer

- test_pickle_subword_regularization_tokenizer

As mentioned above, those tests fail only when run with pytest. Furthermore, those tests don't actually need `protobuf` in order to run. However, in the TF CI job, `protobuf` is installed as a dependency of TensorFlow.

It turns out that running those 2 problematic tests in a subprocess will avoid the crash. It's unclear what actually happens though.
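The general idea of isolating a crash-prone call in a child process can be sketched as follows. This is a minimal illustration using only the standard library; the helper name `run_isolated` is hypothetical and is not the mechanism this PR uses in the `transformers` test suite.

```python
import subprocess
import sys


def run_isolated(code: str, timeout: float = 60.0) -> subprocess.CompletedProcess:
    """Run a Python snippet in a fresh interpreter (hypothetical helper).

    A hard crash such as a segmentation fault then kills only the child
    process, and the parent can inspect the return code instead of dying.
    """
    return subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,
    )


# A child that finishes normally.
ok = run_isolated("print('tokenized')")

# A child that exits abruptly, standing in for a crashing test body.
crashed = run_isolated("import os; os._exit(3)")

print(ok.returncode, crashed.returncode)
```

A test written this way would assert on the child's return code and output, so a crash shows up as an ordinary test failure rather than taking down the pytest worker.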

This PR modifies those 2 tests to run in a subprocess, so we can have `protobuf==3.20.3` along with `tensorflow==2.12`.<|||||>The TF job is successful with the last fix, see this job run

https://app.circleci.com/pipelines/github/huggingface/transformers/64174/workflows/688a1174-8a6f-4599-9479-f51bbc2aacdb/jobs/793536/artifacts

The other jobs should be fine (we will see in 30 minutes) as they already pass before.<|||||>> Thank you again @jose-turintech for this PR, which allows to use TF 2.12!

Thank you very much for taking the time to fix the issues, you did all the work; really appreciate it. |

transformers | 22,758 | closed | GPTNeoX position_ids not defined | ### System Info

- `transformers` version: 4.28.0.dev0

- Platform: Linux-4.18.0-425.10.1.el8_7.x86_64-x86_64-with-glibc2.28

- Python version: 3.9.13

- Huggingface_hub version: 0.13.3

- Safetensors version: not installed

- PyTorch version (GPU?): 2.0.0+cu117 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: yes

### Who can help?

@ArthurZucker @stas00

Hi, I am performing inference using `GPT-NeoX 20B` model using greedy search. Without deepspeed the text generation works fine. However, when I use deepspeed for inference, I am getting the following error

```bash

╭─────────────────────────────── Traceback (most recent call last) ────────────────────────────────╮

│ ~/examplesinference/asqa_inference.py:297 in │

│ <module> │

│ │

│ 294 │

│ 295 │

│ 296 if __name__ == "__main__": │

│ ❱ 297 │ main() │

│ 298 │

│ │

│ ~/examplesinference/asqa_inference.py:271 in main │

│ │

│ 268 │ │ │ │ + "\nQ: " │

│ 269 │ │ │ ) │

│ 270 │ │ new_prompt = prompt + d["question"] + "\nA:" │

│ ❱ 271 │ │ output = predict_text_greedy( │

│ 272 │ │ │ model, │

│ 273 │ │ │ tokenizer, │

│ 274 │ │ │ new_prompt, │

│ │

│ ~/examplesinference/asqa_inference.py:98 in │

│ predict_text_greedy │

│ │

│ 95 │ │

│ 96 │ model.eval() │

│ 97 │ with torch.no_grad(): │

│ ❱ 98 │ │ generated_ids = model.generate( │

│ 99 │ │ │ input_ids, │

│ 100 │ │ │ max_new_tokens=50, │

│ 101 │ │ │ use_cache=use_cache, │

│ │

│ ~/my_envlib/python3.9/site-packages/deepspeed/inference/engine.py:588 in │

│ _generate │

│ │

│ 585 │ │ │ │ "add your request to: https://github.com/microsoft/DeepSpeed/issues/2506 │

│ 586 │ │ │ ) │

│ 587 │ │ │

│ ❱ 588 │ │ return self.module.generate(*inputs, **kwargs) │

│ 589 │

│ │

│ ~/my_envlib/python3.9/site-packages/torch/utils/_contextlib.py:115 in │

│ decorate_context │

│ │

│ 112 │ @functools.wraps(func) │

│ 113 │ def decorate_context(*args, **kwargs): │

│ 114 │ │ with ctx_factory(): │

│ ❱ 115 │ │ │ return func(*args, **kwargs) │

│ 116 │ │

│ 117 │ return decorate_context │

│ 118 │

│ │

│ ~/my_envlib/python3.9/site-packages/transformers/generation/utils.py:1437 in │

│ generate │

│ │

│ 1434 │ │ │ │ ) │

│ 1435 │ │ │ │

│ 1436 │ │ │ # 11. run greedy search │

│ ❱ 1437 │ │ │ return self.greedy_search( │

│ 1438 │ │ │ │ input_ids, │

│ 1439 │ │ │ │ logits_processor=logits_processor, │

│ 1440 │ │ │ │ stopping_criteria=stopping_criteria, │

│ │

│ ~/my_envlib/python3.9/site-packages/transformers/generation/utils.py:2248 in │

│ greedy_search │

│ │

│ 2245 │ │ │ model_inputs = self.prepare_inputs_for_generation(input_ids, **model_kwargs) │

│ 2246 │ │ │ │

│ 2247 │ │ │ # forward pass to get next token │

│ ❱ 2248 │ │ │ outputs = self( │

│ 2249 │ │ │ │ **model_inputs, │

│ 2250 │ │ │ │ return_dict=True, │

│ 2251 │ │ │ │ output_attentions=output_attentions, │

│ │

│ ~/my_envlib/python3.9/site-packages/torch/nn/modules/module.py:1501 in │

│ _call_impl │

│ │

│ 1498 │ │ if not (self._backward_hooks or self._backward_pre_hooks or self._forward_hooks │

│ 1499 │ │ │ │ or _global_backward_pre_hooks or _global_backward_hooks │

│ 1500 │ │ │ │ or _global_forward_hooks or _global_forward_pre_hooks): │

│ ❱ 1501 │ │ │ return forward_call(*args, **kwargs) │

│ 1502 │ │ # Do not call functions when jit is used │

│ 1503 │ │ full_backward_hooks, non_full_backward_hooks = [], [] │

│ 1504 │ │ backward_pre_hooks = [] │

│ │

│ ~/my_envlib/python3.9/site-packages/transformers/models/gpt_neox/modeling_gp │

│ t_neox.py:662 in forward │

│ │

│ 659 │ │ ```""" │

│ 660 │ │ return_dict = return_dict if return_dict is not None else self.config.use_return │

│ 661 │ │ │

│ ❱ 662 │ │ outputs = self.gpt_neox( │

│ 663 │ │ │ input_ids, │

│ 664 │ │ │ attention_mask=attention_mask, │

│ 665 │ │ │ position_ids=position_ids, │

│ │

│ ~/my_envlib/python3.9/site-packages/torch/nn/modules/module.py:1501 in │

│ _call_impl │

│ │

│ 1498 │ │ if not (self._backward_hooks or self._backward_pre_hooks or self._forward_hooks │

│ 1499 │ │ │ │ or _global_backward_pre_hooks or _global_backward_hooks │

│ 1500 │ │ │ │ or _global_forward_hooks or _global_forward_pre_hooks): │

│ ❱ 1501 │ │ │ return forward_call(*args, **kwargs) │

│ 1502 │ │ # Do not call functions when jit is used │

│ 1503 │ │ full_backward_hooks, non_full_backward_hooks = [], [] │

│ 1504 │ │ backward_pre_hooks = [] │

│ │

│ ~/my_envlib/python3.9/site-packages/transformers/models/gpt_neox/modeling_gp │

│ t_neox.py:553 in forward │

│ │

│ 550 │ │ │ │ │ head_mask[i], │

│ 551 │ │ │ │ ) │

│ 552 │ │ │ else: │

│ ❱ 553 │ │ │ │ outputs = layer( │

│ 554 │ │ │ │ │ hidden_states, │

│ 555 │ │ │ │ │ attention_mask=attention_mask, │

│ 556 │ │ │ │ │ position_ids=position_ids, │

│ │

│ ~/my_envlib/python3.9/site-packages/torch/nn/modules/module.py:1501 in │

│ _call_impl │

│ │

│ 1498 │ │ if not (self._backward_hooks or self._backward_pre_hooks or self._forward_hooks │

│ 1499 │ │ │ │ or _global_backward_pre_hooks or _global_backward_hooks │

│ 1500 │ │ │ │ or _global_forward_hooks or _global_forward_pre_hooks): │

│ ❱ 1501 │ │ │ return forward_call(*args, **kwargs) │

│ 1502 │ │ # Do not call functions when jit is used │

│ 1503 │ │ full_backward_hooks, non_full_backward_hooks = [], [] │

│ 1504 │ │ backward_pre_hooks = [] │

╰──────────────────────────────────────────────────────────────────────────────────────────────────╯

TypeError: forward() got an unexpected keyword argument 'position_ids'

```

This is how I am wrapping deepspeed around the model

```python

tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)
tokenizer.padding_side = "left"
if tokenizer.pad_token is None:
    tokenizer.pad_token = tokenizer.eos_token
    tokenizer.pad_token_id = tokenizer.eos_token_id
reduced_model_name = model_name.split("/")[-1]
device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")
model = deepspeed.init_inference(
    model, mp_size=world_size, dtype=torch.float32, replace_with_kernel_inject=True
)

```

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

import deepspeed

model_name = 'EleutherAI/gpt-neox-20b'

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

tokenizer.padding_side = "left"

if tokenizer.pad_token is None:

    tokenizer.pad_token = tokenizer.eos_token

    tokenizer.pad_token_id = tokenizer.eos_token_id

reduced_model_name = model_name.split("/")[-1]

device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")

model = deepspeed.init_inference(

    model, mp_size=world_size, dtype=torch.float32, replace_with_kernel_inject=True

)

model.to(device)

model.eval()

input_ids = tokenizer('The quick brown fox jumped over the lazy dog', return_tensors="pt").input_ids.to(

    dtype=torch.long, device=device

)

with torch.no_grad():

    generated_ids = model.generate(

        input_ids,

        max_new_tokens=50,

        pad_token_id=tokenizer.eos_token_id,

    )

preds = [

    tokenizer.decode(

        g, skip_special_tokens=True, clean_up_tokenization_spaces=True

    )

    for g in generated_ids

]

```

### Expected behavior

There should be no difference whether I wrap `deepspeed` around the model or not. | 04-14-2023 04:38:05 | 04-14-2023 04:38:05 | `transformers` isn't involved with deepspeed's inference engine, other than being used by it indirectly, so please refile at https://github.com/microsoft/DeepSpeed/issues. Thank you. |

transformers | 22,757 | closed | Huge Num Epochs (9223372036854775807) when using Trainer API with streaming dataset | ### System Info

# System Info

Running on SageMaker Studio g4dn 2xlarge.

```

!cat /etc/os-release

PRETTY_NAME="Debian GNU/Linux 10 (buster)"

```

```

!transformers-cli env

- `transformers` version: 4.28.0

- Platform: Linux-4.14.309-231.529.amzn2.x86_64-x86_64-with-debian-10.6

- Python version: 3.7.10

- Huggingface_hub version: 0.13.4

- Safetensors version: not installed

- PyTorch version (GPU?): 1.13.1+cu117 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: YES

- Using distributed or parallel set-up in script?: <fill in>

```

```

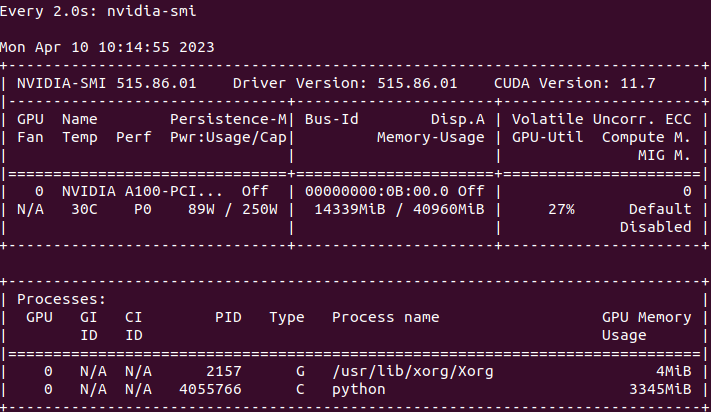

!nvidia-smi

Fri Apr 14 04:32:30 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 470.57.02 Driver Version: 470.57.02 CUDA Version: 11.4 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla T4 Off | 00000000:00:1E.0 Off | 0 |

| N/A 32C P0 25W / 70W | 13072MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

+-----------------------------------------------------------------------------+

```

# Background

Fine-tuning the BLOOM model for summarization.

- Model: [bigscience/bloom-560m](https://huggingface.co/bigscience/bloom-560m)

- Task: Summarization (using PromptSource, with ```input_ids``` set to the tokenized text and ```labels``` set to the tokenized summary).

- Framework: Pytorch

- Training: Trainer API

- Dataset: [xsum](https://huggingface.co/datasets/xsum)

# Problem

When using a streaming Hugging Face dataset, the Trainer API reports a huge epoch count: ```Num Epochs = 9,223,372,036,854,775,807```.

```

trainer.train()

-----

***** Running training *****

Num examples = 6,144

Num Epochs = 9,223,372,036,854,775,807 <-----

Instantaneous batch size per device = 1

Total train batch size (w. parallel, distributed & accumulation) = 1

Gradient Accumulation steps = 1

Total optimization steps = 6,144

Number of trainable parameters = 559,214,592

```

The ```TrainingArguments``` used:

```

DATASET_STREAMING: bool = True

NUM_EPOCHS: int = 3

DATASET_TRAIN_NUM_SELECT: int = 2048

MAX_STEPS: int = NUM_EPOCHS * DATASET_TRAIN_NUM_SELECT if DATASET_STREAMING else -1

training_args = TrainingArguments(

    output_dir="bloom_finetuned",

    max_steps=MAX_STEPS, # <--- 2048 * 3

    num_train_epochs=NUM_EPOCHS,

    per_device_train_batch_size=1,

    per_device_eval_batch_size=1,

    learning_rate=2e-5,

    weight_decay=0.01,

    no_cuda=False,

)

```
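Note that `NUM_EPOCHS * DATASET_TRAIN_NUM_SELECT` equals the number of optimizer steps only because the batch size here is 1. A minimal sketch of the more general arithmetic follows, assuming one optimizer step consumes `per_device_batch_size * gradient_accumulation_steps * num_devices` rows. `streaming_max_steps` is a hypothetical helper, not a `transformers` function.

```python
import math


def streaming_max_steps(num_rows, num_epochs, per_device_batch_size=1,
                        gradient_accumulation_steps=1, num_devices=1):
    """Optimizer steps needed to see `num_rows` examples `num_epochs` times."""
    rows_per_step = per_device_batch_size * gradient_accumulation_steps * num_devices
    # Round up so a final partial batch still counts as a step.
    steps_per_epoch = math.ceil(num_rows / rows_per_step)
    return num_epochs * steps_per_epoch


# The configuration above: 2048 rows, 3 epochs, batch size 1.
max_steps = streaming_max_steps(num_rows=2048, num_epochs=3)
```

With a larger batch size the step count shrinks accordingly, so the value passed as `max_steps` would need to be recomputed.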

Without streaming (```DATASET_STREAMING=False``` in the code), ```Num Epochs``` is displayed as expected.

```

***** Running training *****

Num examples = 2,048

Num Epochs = 3

Instantaneous batch size per device = 1

Total train batch size (w. parallel, distributed & accumulation) = 1

Gradient Accumulation steps = 1

Total optimization steps = 6,144

Number of trainable parameters = 559,214,592

```
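The value 9,223,372,036,854,775,807 equals `sys.maxsize` on a 64-bit Python build, which suggests it is a placeholder rather than a computed epoch count. A streaming dataset has no length, so the number of epochs cannot be derived and the run is bounded by `max_steps` alone. The following is a minimal sketch of that bookkeeping, assuming this is how the logged values arise. `describe_run` is a hypothetical helper, not the `Trainer` implementation.

```python
import math
import sys


def describe_run(total_batch_size, max_steps=-1, num_train_epochs=3, dataset_len=None):
    """Hypothetical helper: derive the logged values from the training settings."""
    if dataset_len is None:
        # Streaming case: no length, so the run must be bounded by max_steps.
        if max_steps <= 0:
            raise ValueError("max_steps must be positive when the dataset has no length")
        return {
            "num_examples": total_batch_size * max_steps,
            "num_epochs": sys.maxsize,  # placeholder meaning "loop until max_steps"
            "max_steps": max_steps,
        }
    # Sized case: the epoch count and the step count determine each other.
    steps_per_epoch = max(dataset_len // total_batch_size, 1)
    if max_steps <= 0:
        max_steps = math.ceil(num_train_epochs * steps_per_epoch)
    else:
        num_train_epochs = math.ceil(max_steps / steps_per_epoch)
    return {
        "num_examples": dataset_len,
        "num_epochs": num_train_epochs,
        "max_steps": max_steps,
    }


streaming = describe_run(total_batch_size=1, max_steps=6144)
sized = describe_run(total_batch_size=1, num_train_epochs=3, dataset_len=2048)
```

Under these assumptions, both runs perform the same 6,144 optimization steps, and only the reported epoch and example counts differ.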

### Who can help?

trainer: @sgugger

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

Run the code below.

```

! pip install torch transformers datasets evaluate scikit-learn rouge rouge-score promptsource --quiet

```

```

import multiprocessing

import re

from typing import (

    List,

    Dict,

    Callable,

)

import evaluate

import numpy as np

from datasets import (

    load_dataset,

    get_dataset_split_names

)

from promptsource.templates import (

    DatasetTemplates,

    Template

)

from transformers import (

    AutoTokenizer,

    DataCollatorWithPadding,

    DataCollatorForSeq2Seq,

    BloomForCausalLM,

    TrainingArguments,

    Trainer

)

# Hugging Face Datasets

DATASET_NAME: str = "xsum"

DATASET_STREAMING: bool = True # If using Dataset streaming

DATASET_TRAIN_NUM_SELECT: int = 2048 # Number of rows to use for training

DATASET_VALIDATE_NUM_SELECT: int = 128

# Huggingface Tokenizer (BLOOM default token length is 2048)

MAX_TOKEN_LENGTH: int = 512 # Max token length to avoid out of memory

PER_DEVICE_BATCH_SIZE: int = 1 # GPU batch size

# Huggingface Model

MODEL = "bigscience/bloom-560m"

# Training

NUM_EPOCHS: int = 3

MAX_STEPS: int = NUM_EPOCHS * DATASET_TRAIN_NUM_SELECT if DATASET_STREAMING else -1

train = load_dataset("xsum", split="train", streaming=DATASET_STREAMING)

prompt_templates = DatasetTemplates(dataset_name=DATASET_NAME)

template: Template = prompt_templates['summarize_DOC']

# Preprocess

tokenizer = AutoTokenizer.from_pretrained(MODEL, use_fast=True)

def get_convert_to_request_response(template: Template) -> Callable:

    def _convert_to_prompt_response(example: Dict[str, str]) -> Dict[str, str]:

        """Generate prompt, response as a dictionary:

        {

            "prompt": "Summarize: ...",

            "response": "..."

        }

        NOTE: Do NOT use with Dataset.map(batched=True). Use batched=False.

        Args:

            example: single {document, summary} pair to be able to apply template

        Returns: a dictionary with the prompt and response

        """

        # assert isinstance(example, dict), f"expected dict but {type(example)}.\n{example}"

        assert isinstance(example['document'], str), f"expected str but {type(example['document'])}."

        prompt, response = template.apply(example=example, truncate=False)

        return {

            "prompt": re.sub(r'[\s\'\"]+', ' ', prompt),

            "response": re.sub(r'[\s\'\"]+', ' ', response)

        }

    return _convert_to_prompt_response

convert_to_request_response: Callable = get_convert_to_request_response(template=template)

def tokenize_prompt_response(examples):

    """Generate the model inputs in the dictionary with format:

    {

        "input_ids": List[int],

        "attention_mask": List[int],

        "labels": List[int]

    }

    Note: Hugging Face Dataset.map(batched=True, batch_size=n) merges the values of

    n dictionaries into a list under each key. If you have n instances of {"key": "v"}, then

    you will get {"key": ["v", "v", "v", ...]}.

    Args:

        examples: a dictionary of format {

            "prompt": [prompt+],

            "response": [response+]

        } where + means more than one instance because of Dataset.map(batched=True)

    """

    inputs: Dict[str, List[int]] = tokenizer(

        text_target=examples["prompt"],

        max_length=MAX_TOKEN_LENGTH,

        truncation=True

    )

    labels: Dict[str, List[int]] = tokenizer(

        text_target=examples["response"],

        max_length=MAX_TOKEN_LENGTH,

        truncation=True,

        padding='max_length',

    )

    inputs["labels"] = labels["input_ids"]

    return inputs

remove_column_names: List[str] = list(train.features.keys())

tokenized_train = train.map(

    function=convert_to_request_response,

    batched=False,

    batch_size=2048,

    drop_last_batch=False,

    remove_columns=remove_column_names,

).map(

    function=tokenize_prompt_response,

    batched=True,

    batch_size=32,

    drop_last_batch=True,

    remove_columns=['prompt', 'response']

).shuffle(

    seed=42

).with_format(

    "torch"

)

if DATASET_STREAMING: