| url (string, length 58-61) | repository_url (string, 1 value) | labels_url (string, length 72-75) | comments_url (string, length 67-70) | events_url (string, length 65-68) | html_url (string, length 46-51) | id (int64, 599M-1.83B) | node_id (string, length 18-32) | number (int64, 1-6.09k) | title (string, length 1-290) | labels (list) | state (string, 2 values) | locked (bool, 1 class) | milestone (dict) | comments (int64, 0-54) | created_at (string, length 20) | updated_at (string, length 20) | closed_at (string, length 20, nullable) | active_lock_reason (null) | body (string, length 0-228k, nullable) | reactions (dict) | timeline_url (string, length 67-70) | performed_via_github_app (null) | state_reason (string, 3 values) | draft (bool, 2 classes) | pull_request (dict) | is_pull_request (bool, 2 classes) | comments_text (sequence) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/4180 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4180/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4180/comments | https://api.github.com/repos/huggingface/datasets/issues/4180/events | https://github.com/huggingface/datasets/issues/4180 | 1,208,042,320 | I_kwDODunzps5IAUNQ | 4,180 | Add some iteration method on a dataset column (specific for inference) | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

}

] | open | false | null | 5 | 2022-04-19T09:15:45Z | 2022-04-21T10:30:58Z | null | null | **Is your feature request related to a problem? Please describe.**

A clear and concise description of what the problem is.

Currently, `dataset["audio"]` will load EVERY element in the dataset in RAM, which can be quite big for an audio dataset.

Having an iterator (or sequence) type of object would make inference with `transformers`'s `pipeline` easier to use and less memory-hungry.

**Describe the solution you'd like**

A clear and concise description of what you want to happen.

For a non breaking change:

```python
for audio in dataset.iterate("audio"):
    # {"array": np.array(...), "sampling_rate":...}
    ...
```

For a breaking change solution (not necessary), changing the type of `dataset["audio"]` to a sequence type so that

```python
pipe = pipeline(model="...")

for out in pipe(dataset["audio"]):
    # {"text":....}
    ...
```

could work

**Describe alternatives you've considered**

A clear and concise description of any alternative solutions or features you've considered.

```python
def iterate(dataset, key):
    for item in dataset:
        # Yield the field of the current row; `dataset[key]` would load the whole column each time.
        yield item[key]

for out in pipeline(iterate(dataset, "audio")):
    # {"array": ...}
    ...
```

This works but requires the helper function which feels slightly clunky.
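
The sequence-type idea can be sketched without touching `datasets` itself. The class below is a hypothetical illustration, not an existing `datasets` API: `LazyColumn` is an invented name, and it assumes only that the wrapped object supports `len()` and integer row indexing that returns a dict, so a plain list of dicts stands in for a real dataset here.

```python
from collections.abc import Sequence


class LazyColumn(Sequence):
    """Hypothetical lazy view over one column of a row-indexable dataset."""

    def __init__(self, dataset, key):
        self._dataset = dataset  # anything with len() and dataset[i] -> dict
        self._key = key

    def __len__(self):
        return len(self._dataset)

    def __getitem__(self, index):
        if isinstance(index, slice):
            # Materialize only the requested rows.
            indices = range(*index.indices(len(self)))
            return [self._dataset[i][self._key] for i in indices]
        # Only the requested row is read.
        return self._dataset[index][self._key]


# Stand-in for a dataset: a list of row dicts (an assumption for this sketch).
rows = [
    {"audio": {"array": [0.0, 0.1], "sampling_rate": 16000}, "text": "a"},
    {"audio": {"array": [0.2, 0.3], "sampling_rate": 8000}, "text": "b"},
]
column = LazyColumn(rows, "audio")
rates = [audio["sampling_rate"] for audio in column]
```

Because `Sequence` supplies iteration on top of `__getitem__` and `__len__`, `column[0]` and `for audio in column` both work while touching one row at a time.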

**Additional context**

Add any other context about the feature request here.

The context is actually to showcase better integration between `pipeline` and `datasets` in the Quicktour demo: https://github.com/huggingface/transformers/pull/16723/files

@lhoestq

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4180/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4180/timeline | null | null | null | null | false | [

"Thanks for the suggestion ! I agree it would be nice to have something directly in `datasets` to do something as simple as that\r\n\r\ncc @albertvillanova @mariosasko @polinaeterna What do you think if we have something similar to pandas `Series` that wouldn't bring everything in memory when doing `dataset[\"audio\"]` ? Currently it returns a list with all the decoded audio data in memory.\r\n\r\nIt would be a breaking change though, since `isinstance(dataset[\"audio\"], list)` wouldn't work anymore, but we could implement a `Sequence` so that `dataset[\"audio\"][0]` still works and only loads one item in memory.\r\n\r\nYour alternative suggestion with `iterate` is also sensible, though maybe less satisfactory in terms of experience IMO",

"I agree that the current behavior (decoding all audio files in the dataset when accessing `dataset[\"audio\"]`) is not useful, IMHO. Indeed in our docs, we are constantly warning our collaborators not to do that.\r\n\r\nTherefore I upvote for a \"useful\" behavior of `dataset[\"audio\"]`. I don't think the breaking change is important in this case, as I guess not many people use it with its current behavior. Therefore, for me it seems reasonable to return a generator (instead of an in-memory list) for \"special\" features, like Audio/Image.\r\n\r\n@lhoestq on the other hand I don't understand your proposal about Pandas-like... ",

"I recall I had the same idea while working on the `Image` feature, so I agree implementing something similar to `pd.Series` that lazily brings elements in memory would be beneficial.",

"@lhoestq @mariosasko Could you please give a link to that new feature of `pandas.Series`? As far as I remember since I worked with pandas for more than 6 years, there was no lazy in-memory feature; it was everything in-memory; that was the reason why other frameworks were created, like Vaex or Dask, e.g. ",

"Yea pandas doesn't do lazy loading. I was referring to pandas.Series to say that they have a dedicated class to represent a column ;)"

] |

https://api.github.com/repos/huggingface/datasets/issues/2046 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2046/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2046/comments | https://api.github.com/repos/huggingface/datasets/issues/2046/events | https://github.com/huggingface/datasets/issues/2046 | 830,423,033 | MDU6SXNzdWU4MzA0MjMwMzM= | 2,046 | add_faisis_index gets very slow when doing it interatively | [] | closed | false | null | 11 | 2021-03-12T20:27:18Z | 2021-03-24T22:29:11Z | 2021-03-24T22:29:11Z | null | As the code below suggests, I want to run add_faiss_index every nth iteration of the training loop. I have 7.2 million documents. Usually, it takes 2.5 hours (if I run it as a separate process, similar to the script given in rag/use_own_knowledge_dataset.py). Now it usually takes 5 hours. Is this normal? Is there any way to make this process faster?

@lhoestq

```
def training_step(self, batch, batch_idx) -> Dict:
    if (not batch_idx==0) and (batch_idx%5==0):
        print("******************************************************")
        ctx_encoder=self.trainer.model.module.module.model.rag.ctx_encoder
        model_copy =type(ctx_encoder)(self.config_dpr) # get a new instance; this will be loaded on the CPU
        model_copy.load_state_dict(ctx_encoder.state_dict()) # copy weights and stuff

        list_of_gpus = ['cuda:2','cuda:3']
        c_dir='/custom/cache/dir'

        kb_dataset = load_dataset("csv", data_files=[self.custom_config.csv_path], split="train", delimiter="\t", column_names=["title", "text"],cache_dir=c_dir)
        print(kb_dataset)

        n=len(list_of_gpus) # number of dedicated GPUs
        kb_list=[kb_dataset.shard(n, i, contiguous=True) for i in range(n)]
        #kb_dataset.save_to_disk('/hpc/gsir059/MY-Test/RAY/transformers/examples/research_projects/rag/haha-dir')

        print(self.trainer.global_rank)
        dataset_shards = self.re_encode_kb(model_copy.to(device=list_of_gpus[self.trainer.global_rank]),kb_list[self.trainer.global_rank])
        output = [None for _ in list_of_gpus]

        #self.trainer.accelerator_connector.accelerator.barrier("embedding_process")
        dist.all_gather_object(output, dataset_shards)

        # Creation and re-initialization of the new index
        if (self.trainer.global_rank==0): # saving will be done in the main process
            combined_dataset = concatenate_datasets(output)
            passages_path =self.config.passages_path

            logger.info("saving the dataset with ")
            #combined_dataset.save_to_disk('/hpc/gsir059/MY-Test/RAY/transformers/examples/research_projects/rag/MY-Passage')
            combined_dataset.save_to_disk(passages_path)

            logger.info("Add faiss index to the dataset that consist of embeddings")
            embedding_dataset=combined_dataset
            index = faiss.IndexHNSWFlat(768, 128, faiss.METRIC_INNER_PRODUCT)
            embedding_dataset.add_faiss_index("embeddings", custom_index=index)
            embedding_dataset.get_index("embeddings").save(self.config.index_path)
```

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2046/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2046/timeline | null | completed | null | null | false | [

"I think faiss automatically sets the number of threads to use to build the index.\r\nCan you check how many CPU cores are being used when you build the index in `use_own_knowleldge_dataset` as compared to this script ? Are there other programs running (maybe for rank>0) ?",

"Hi,\r\n I am running the add_faiss_index during the training process of the RAG from the master process (rank 0). But at the exact moment, I do not run any other process since I do it in every 5000 training steps. \r\n \r\n I think what you say is correct. It depends on the number of CPU cores. I did an experiment to compare the time taken to finish the add_faiss_index process on use_own_knowleldge_dataset.py vs the training loop thing. The training loop thing takes 40 mins more. It might be natural right? \r\n \r\n \r\n at the moment it uses around 40 cores of a 96 core machine (I am fine-tuning the entire process). ",

"Can you try to set the number of threads manually ?\r\nIf you set the same number of threads for both the `use_own_knowledge_dataset.py` and RAG training, it should take the same amount of time.\r\nYou can see how to set the number of thread in the faiss wiki: https://github.com/facebookresearch/faiss/wiki/Threads-and-asynchronous-calls",

"Ok, I will report the details too soon. I am the first one on the list and currently add_index being computed for the 3rd time in the loop. Actually seems like the time is taken to complete each interaction is the same, but around 1 hour more compared to running it without the training loop. A the moment this takes 5hrs and 30 mins. If there is any way to faster the process, an end-to-end rag will be perfect. So I will also try out with different thread numbers too. \r\n\r\n\r\n",

"@lhoestq on a different note, I read about using Faiss-GPU, but the documentation says we should use it when the dataset has the ability to fit into the GPU memory. Although this might work, in the long-term this is not that practical for me.\r\n\r\nhttps://github.com/matsui528/faiss_tips",

"@lhoestq \r\n\r\nHi, I executed the **use_own_dataset.py** script independently and ask a few of my friends to run their programs in the HPC machine at the same time. \r\n\r\n Once there are so many other processes are running the add_index function gets slows down naturally. So basically the speed of the add_index depends entirely on the number of CPU processes. Then I set the number of threads as you have mentioned and got actually the same time for RAG training and independat running. So you are correct! :) \r\n\r\n \r\n Then I added this [issue in Faiss repostiary](https://github.com/facebookresearch/faiss/issues/1767). I got an answer saying our current **IndexHNSWFlat** can get slow for 30 million vectors and it would be better to use alternatives. What do you think?",

"It's a matter of tradeoffs.\r\nHNSW is fast at query time but takes some time to build.\r\nA flat index is fast to build but is \"slow\" at query time.\r\nAn IVF index is probably a good choice for you: fast building and fast queries (but still slower queries than HNSW).\r\n\r\nNote that for an IVF index you would need to have an `nprobe` parameter (number of cells to visit for one query, there are `nlist` in total) that is not too small in order to have good retrieval accuracy, but not too big otherwise the queries will take too much time. From the faiss documentation:\r\n> The nprobe parameter is always a way of adjusting the tradeoff between speed and accuracy of the result. Setting nprobe = nlist gives the same result as the brute-force search (but slower).\r\n\r\nFrom my experience with indexes on DPR embeddings, setting nprobe around 1/4 of nlist gives really good retrieval accuracy and there's no need to have a value higher than that (or you would need to brute-force in order to see a difference).",

"@lhoestq \r\n\r\nThanks a lot for sharing all this prior knowledge. \r\n\r\nJust asking what would be a good nlist of parameters for 30 million embeddings?",

"When IVF is used alone, nlist should be between `4*sqrt(n)` and `16*sqrt(n)`.\r\nFor more details take a look at [this section of the Faiss wiki](https://github.com/facebookresearch/faiss/wiki/Guidelines-to-choose-an-index#how-big-is-the-dataset)",

"Thanks a lot. I was lost with calling the index from class and using faiss_index_factory. ",

"@lhoestq Thanks a lot for the help you have given to solve this issue. As per my experiments, IVF index suits well for my case and it is a lot faster. The use of this can make the entire RAG end-to-end trainable lot faster. So I will close this issue. Will do the final PR soon. "

] |

https://api.github.com/repos/huggingface/datasets/issues/1550 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1550/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1550/comments | https://api.github.com/repos/huggingface/datasets/issues/1550/events | https://github.com/huggingface/datasets/pull/1550 | 765,620,925 | MDExOlB1bGxSZXF1ZXN0NTM5MDEwMDY1 | 1,550 | Add offensive langauge dravidian dataset | [] | closed | false | null | 1 | 2020-12-13T19:54:19Z | 2020-12-18T15:52:49Z | 2020-12-18T14:25:30Z | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1550/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1550/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1550.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1550",

"merged_at": "2020-12-18T14:25:30Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1550.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1550"

} | true | [

"Thanks much!"

] |


https://api.github.com/repos/huggingface/datasets/issues/4636 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4636/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4636/comments | https://api.github.com/repos/huggingface/datasets/issues/4636/events | https://github.com/huggingface/datasets/issues/4636 | 1,294,547,836 | I_kwDODunzps5NKTt8 | 4,636 | Add info in docs about behavior of download_config.num_proc | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

}

] | closed | false | null | 0 | 2022-07-05T17:01:00Z | 2022-07-28T10:40:32Z | 2022-07-28T10:40:32Z | null | **Is your feature request related to a problem? Please describe.**

I went to override `download_config.num_proc` and was confused about what was happening under the hood. It would be nice to have the behavior documented a bit better so folks know what's happening when they use it.

**Describe the solution you'd like**

- Add note about how the default number of workers is 16. Related code:

https://github.com/huggingface/datasets/blob/7bcac0a6a0fc367cc068f184fa132b8de8dfa11d/src/datasets/download/download_manager.py#L299-L302

- Add note that if the number of workers is higher than the number of files to download, it won't use multiprocessing.

**Describe alternatives you've considered**

maybe it would also be nice to set `num_proc` = `num_files` when `num_proc` > `num_files`.
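
The alternative above amounts to clamping the worker count to the number of files. A minimal sketch of that idea, assuming a hypothetical helper name (`effective_num_proc` is not part of `datasets`, and this is not the library's current behavior):

```python
def effective_num_proc(num_proc: int, num_files: int) -> int:
    """Hypothetical helper: never request more workers than there are files."""
    if num_files < 1:
        # Nothing to download; a single process is enough.
        return 1
    # Clamp to [1, num_files] so a large num_proc still uses multiprocessing.
    return max(1, min(num_proc, num_files))


workers_few_files = effective_num_proc(16, 4)
workers_many_files = effective_num_proc(16, 100)
```

With this clamp, asking for 16 workers on 4 files would use 4 workers instead of falling back to a single process.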

**Additional context**

...

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4636/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4636/timeline | null | completed | null | null | false | [] |

https://api.github.com/repos/huggingface/datasets/issues/2468 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2468/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2468/comments | https://api.github.com/repos/huggingface/datasets/issues/2468/events | https://github.com/huggingface/datasets/pull/2468 | 916,427,320 | MDExOlB1bGxSZXF1ZXN0NjY2MDk0ODI5 | 2,468 | Implement ClassLabel encoding in JSON loader | [] | closed | false | {

"closed_at": "2021-07-09T05:50:07Z",

"closed_issues": 12,

"created_at": "2021-05-31T16:13:06Z",

"creator": {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

},

"description": "Next minor release",

"due_on": "2021-07-08T07:00:00Z",

"html_url": "https://github.com/huggingface/datasets/milestone/5",

"id": 6808903,

"labels_url": "https://api.github.com/repos/huggingface/datasets/milestones/5/labels",

"node_id": "MDk6TWlsZXN0b25lNjgwODkwMw==",

"number": 5,

"open_issues": 0,

"state": "closed",

"title": "1.9",

"updated_at": "2021-07-12T14:12:00Z",

"url": "https://api.github.com/repos/huggingface/datasets/milestones/5"

} | 1 | 2021-06-09T17:08:54Z | 2021-06-28T15:39:54Z | 2021-06-28T15:05:35Z | null | Close #2365. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2468/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2468/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2468.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2468",

"merged_at": "2021-06-28T15:05:34Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2468.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2468"

} | true | [

"No, nevermind @lhoestq. Thanks to you for your reviews!"

] |

https://api.github.com/repos/huggingface/datasets/issues/1210 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1210/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1210/comments | https://api.github.com/repos/huggingface/datasets/issues/1210/events | https://github.com/huggingface/datasets/pull/1210 | 757,966,959 | MDExOlB1bGxSZXF1ZXN0NTMzMjI2NDQ2 | 1,210 | Add XSUM Hallucination Annotations Dataset | [] | closed | false | null | 1 | 2020-12-06T16:40:19Z | 2020-12-20T13:34:56Z | 2020-12-16T16:57:11Z | null | Adding Google [XSum Hallucination Annotations](https://github.com/google-research-datasets/xsum_hallucination_annotations) dataset. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1210/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1210/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1210.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1210",

"merged_at": "2020-12-16T16:57:11Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1210.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1210"

} | true | [

"@lhoestq All necessary modifications have been done."

] |

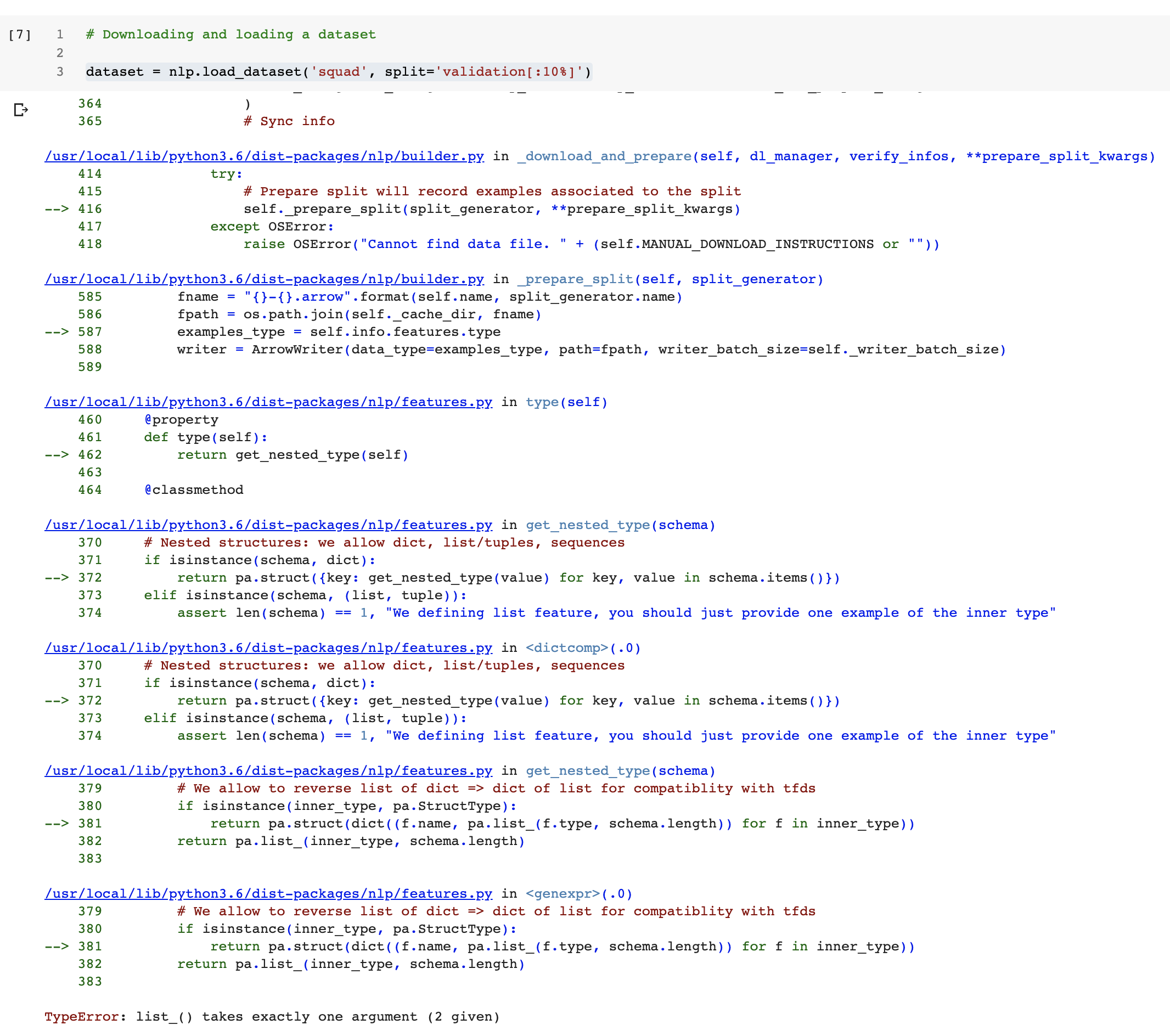

https://api.github.com/repos/huggingface/datasets/issues/222 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/222/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/222/comments | https://api.github.com/repos/huggingface/datasets/issues/222/events | https://github.com/huggingface/datasets/issues/222 | 627,586,690 | MDU6SXNzdWU2Mjc1ODY2OTA= | 222 | Colab Notebook breaks when downloading the squad dataset | [] | closed | false | null | 6 | 2020-05-29T22:55:59Z | 2020-06-04T00:21:05Z | 2020-06-04T00:21:05Z | null | When I run the notebook in Colab

https://colab.research.google.com/github/huggingface/nlp/blob/master/notebooks/Overview.ipynb

breaks when running this cell:

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/222/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/222/timeline | null | completed | null | null | false | [

"The notebook forces version 0.1.0. If I use the latest, things work, I'll run the whole notebook and create a PR.\r\n\r\nBut in the meantime, this issue gets fixed by changing:\r\n`!pip install nlp==0.1.0`\r\nto\r\n`!pip install nlp`",

"It still breaks very near the end\r\n\r\n\r\n",

"When you install `nlp` for the first time on a Colab runtime, it updates the `pyarrow` library that was already on colab. This update shows this message on colab:\r\n```\r\nWARNING: The following packages were previously imported in this runtime:\r\n [pyarrow]\r\nYou must restart the runtime in order to use newly installed versions.\r\n```\r\nYou just have to restart the runtime and it should be fine.\r\nIf you don't restart, then it breaks like in your first message ",

"Thanks for reporting the second one ! We'll update the notebook to fix this one :)",

"This trick from @thomwolf seems to be the most reliable solution to fix this colab notebook issue:\r\n\r\n```python\r\n# install nlp\r\n!pip install -qq nlp==0.2.0\r\n\r\n# Make sure that we have a recent version of pyarrow in the session before we continue - otherwise reboot Colab to activate it\r\nimport pyarrow\r\nif int(pyarrow.__version__.split('.')[1]) < 16:\r\n import os\r\n os.kill(os.getpid(), 9)\r\n```",

"The second part got fixed here: 2cbc656d6fc4b18ce57eac070baec05b31180d39\r\n\r\nThanks! I'm then closing this issue."

] |

https://api.github.com/repos/huggingface/datasets/issues/1740 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1740/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1740/comments | https://api.github.com/repos/huggingface/datasets/issues/1740/events | https://github.com/huggingface/datasets/pull/1740 | 787,264,605 | MDExOlB1bGxSZXF1ZXN0NTU2MDA5NjM1 | 1,740 | add id_liputan6 dataset | [] | closed | false | null | 0 | 2021-01-15T22:58:34Z | 2021-01-20T13:41:26Z | 2021-01-20T13:41:26Z | null | id_liputan6 is a large-scale Indonesian summarization dataset. The articles were harvested from an online news portal, yielding 215,827 document-summary pairs: https://arxiv.org/abs/2011.00679 | {

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1740/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1740/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1740.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1740",

"merged_at": "2021-01-20T13:41:26Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1740.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1740"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/5613 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5613/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5613/comments | https://api.github.com/repos/huggingface/datasets/issues/5613/events | https://github.com/huggingface/datasets/issues/5613 | 1,611,875,473 | I_kwDODunzps5gE0SR | 5,613 | Version mismatch with multiprocess and dill on Python 3.10 | [] | open | false | null | 4 | 2023-03-06T17:14:41Z | 2023-05-28T01:03:55Z | null | null | ### Describe the bug

Grabbing the latest version of `datasets` and `apache-beam` with `poetry` using Python 3.10 gives a crash at runtime. The crash is

```

File "/Users/adpauls/sc/git/DSI-transformers/data/NQ/create_NQ_train_vali.py", line 1, in <module>

import datasets

File "/Users/adpauls/Library/Caches/pypoetry/virtualenvs/yyy-oPbZ7mKM-py3.10/lib/python3.10/site-packages/datasets/__init__.py", line 43, in <module>

from .arrow_dataset import Dataset

File "/Users/adpauls/Library/Caches/pypoetry/virtualenvs/yyy-oPbZ7mKM-py3.10/lib/python3.10/site-packages/datasets/arrow_dataset.py", line 65, in <module>

from .arrow_reader import ArrowReader

File "/Users/adpauls/Library/Caches/pypoetry/virtualenvs/yyy-oPbZ7mKM-py3.10/lib/python3.10/site-packages/datasets/arrow_reader.py", line 30, in <module>

from .download.download_config import DownloadConfig

File "/Users/adpauls/Library/Caches/pypoetry/virtualenvs/yyy-oPbZ7mKM-py3.10/lib/python3.10/site-packages/datasets/download/__init__.py", line 9, in <module>

from .download_manager import DownloadManager, DownloadMode

File "/Users/adpauls/Library/Caches/pypoetry/virtualenvs/yyy-oPbZ7mKM-py3.10/lib/python3.10/site-packages/datasets/download/download_manager.py", line 35, in <module>

from ..utils.py_utils import NestedDataStructure, map_nested, size_str

File "/Users/adpauls/Library/Caches/pypoetry/virtualenvs/yyy-oPbZ7mKM-py3.10/lib/python3.10/site-packages/datasets/utils/py_utils.py", line 40, in <module>

import multiprocess.pool

File "/Users/adpauls/Library/Caches/pypoetry/virtualenvs/yyy-oPbZ7mKM-py3.10/lib/python3.10/site-packages/multiprocess/pool.py", line 609, in <module>

class ThreadPool(Pool):

File "/Users/adpauls/Library/Caches/pypoetry/virtualenvs/yyy-oPbZ7mKM-py3.10/lib/python3.10/site-packages/multiprocess/pool.py", line 611, in ThreadPool

from .dummy import Process

File "/Users/adpauls/Library/Caches/pypoetry/virtualenvs/yyy-oPbZ7mKM-py3.10/lib/python3.10/site-packages/multiprocess/dummy/__init__.py", line 87, in <module>

class Condition(threading._Condition):

AttributeError: module 'threading' has no attribute '_Condition'. Did you mean: 'Condition'?

```
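
The last line of the traceback can be checked in isolation. A minimal sketch, assuming a Python 3 interpreter: it only inspects the standard-library `threading` module and says nothing about which package versions are installed.

```python
import sys
import threading

# The traceback fails on `threading._Condition`; Python 3 exposes the public
# `threading.Condition` class instead.
has_private_condition = hasattr(threading, "_Condition")
has_public_condition = hasattr(threading, "Condition")

print(sys.version_info[:2], has_private_condition, has_public_condition)
```

If the private name is absent while the public one is present, any module that subclasses `threading._Condition` at import time will fail in the way shown above.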

I think this is a bad interaction of versions from `dill`, `multiprocess`, `apache-beam`, and `threading` from the Python (3.10) standard lib. Upgrading `multiprocess` to a version that does not crash like this is not possible because `apache-beam` pins `dill` to an old version:

```

Because multiprocess (0.70.10) depends on dill (>=0.3.2)

and apache-beam (2.45.0) depends on dill (>=0.3.1.1,<0.3.2), multiprocess (0.70.10) is incompatible with apache-beam (2.45.0).

And because no versions of apache-beam match >2.45.0,<3.0.0, multiprocess (0.70.10) is incompatible with apache-beam (>=2.45.0,<3.0.0).

So, because yyy depends on both apache-beam (^2.45.0) and multiprocess (0.70.10), version solving failed.

```

Perhaps it is not right to file a bug here, but I'm not totally sure whose fault it is. And in any case, this is an immediate blocker to using `datasets` out of the box.

Possibly related to https://github.com/huggingface/datasets/issues/5232.

### Steps to reproduce the bug

Steps to reproduce:

1. Make a poetry project with this configuration

```

[tool.poetry]

name = "yyy"

version = "0.1.0"

description = ""

authors = ["Adam Pauls <[email protected]>"]

readme = "README.md"

packages = [{ include = "xxx" }]

[tool.poetry.dependencies]

python = ">=3.10,<3.11"

datasets = "^2.10.1"

apache-beam = "^2.45.0"

[build-system]

requires = ["poetry-core"]

build-backend = "poetry.core.masonry.api"

```

2. `poetry install`.

3. `poetry run python -c "import datasets"`.

### Expected behavior

Script runs.

### Environment info

Python 3.10. Here are the versions installed by `poetry`:

```

• Installing frozenlist (1.3.3)

• Installing idna (3.4)

• Installing multidict (6.0.4)

• Installing aiosignal (1.3.1)

• Installing async-timeout (4.0.2)

• Installing attrs (22.2.0)

• Installing certifi (2022.12.7)

• Installing charset-normalizer (3.1.0)

• Installing six (1.16.0)

• Installing urllib3 (1.26.14)

• Installing yarl (1.8.2)

• Installing aiohttp (3.8.4)

• Installing dill (0.3.1.1)

• Installing docopt (0.6.2)

• Installing filelock (3.9.0)

• Installing numpy (1.22.4)

• Installing pyparsing (3.0.9)

• Installing protobuf (3.19.4)

• Installing packaging (23.0)

• Installing python-dateutil (2.8.2)

• Installing pytz (2022.7.1)

• Installing pyyaml (6.0)

• Installing requests (2.28.2)

• Installing tqdm (4.65.0)

• Installing typing-extensions (4.5.0)

• Installing cloudpickle (2.2.1)

• Installing crcmod (1.7)

• Installing fastavro (1.7.2)

• Installing fasteners (0.18)

• Installing fsspec (2023.3.0)

• Installing grpcio (1.51.3)

• Installing hdfs (2.7.0)

• Installing httplib2 (0.20.4)

• Installing huggingface-hub (0.12.1)

• Installing multiprocess (0.70.9)

• Installing objsize (0.6.1)

• Installing orjson (3.8.7)

• Installing pandas (1.5.3)

• Installing proto-plus (1.22.2)

• Installing pyarrow (9.0.0)

• Installing pydot (1.4.2)

• Installing pymongo (3.13.0)

• Installing regex (2022.10.31)

• Installing responses (0.18.0)

• Installing xxhash (3.2.0)

• Installing zstandard (0.20.0)

• Installing apache-beam (2.45.0)

• Installing datasets (2.10.1)

``` | {

"+1": 3,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 3,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5613/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5613/timeline | null | reopened | null | null | false | [

"Sorry, I just found https://github.com/apache/beam/issues/24458. It seems this issue is being worked on. ",

"Reopening, since I think the docs should inform the user of this problem. For example, [this page](https://huggingface.co/docs/datasets/installation) says \r\n> Datasets is tested on Python 3.7+.\r\n\r\nbut it should probably say that Beam Datasets do not work with Python 3.10 (or link to a known issues page). ",

"Same problem on Colab using a vanilla setup running :\r\nPython 3.10.11 \r\napache-beam 2.47.0\r\ndatasets 2.12.0",

"Same problem, \r\npy 3.10.11\r\napache-beam==2.47.0\r\ndatasets==2.12.0"

] |

https://api.github.com/repos/huggingface/datasets/issues/4413 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4413/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4413/comments | https://api.github.com/repos/huggingface/datasets/issues/4413/events | https://github.com/huggingface/datasets/issues/4413 | 1,250,259,822 | I_kwDODunzps5KhXNu | 4,413 | Dataset Viewer issue for ett | [

{

"color": "E5583E",

"default": false,

"description": "Related to the dataset viewer on huggingface.co",

"id": 3470211881,

"name": "dataset-viewer",

"node_id": "LA_kwDODunzps7O1zsp",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset-viewer"

}

] | closed | false | null | 3 | 2022-05-27T02:12:35Z | 2022-06-15T07:30:46Z | 2022-06-15T07:30:46Z | null | ### Link

https://huggingface.co/datasets/ett

### Description

Timestamp is not JSON serializable.

```

Status code: 500

Exception: Status500Error

Message: Type is not JSON serializable: Timestamp

```
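
The error above is typical of a JSON encoder meeting a timestamp object it has no rule for. As a minimal sketch only (it uses the standard-library `json` module and `datetime` as stand-ins, not the viewer's actual serializer or a pandas `Timestamp`, and the row contents and the `to_jsonable` helper are made up for illustration), the failure and one possible workaround look roughly like this:

```python
import json
from datetime import datetime

# Hypothetical row with a timestamp column; the values are invented.
row = {"date": datetime(2016, 7, 1, 0, 0), "value": 30.5}


def to_jsonable(value):
    """Fallback for json.dumps: turn datetime-like objects into ISO 8601 strings."""
    if hasattr(value, "isoformat"):
        return value.isoformat()
    raise TypeError(f"Type is not JSON serializable: {type(value).__name__}")


# Without a fallback, the standard encoder rejects the datetime.
try:
    json.dumps(row)
    failed_without_fallback = False
except TypeError:
    failed_without_fallback = True

# With the fallback, the timestamp is emitted as a string.
encoded = json.dumps(row, default=to_jsonable)
```

Whether the viewer should emit ISO strings or another representation is a design choice this sketch does not settle.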

### Owner

No | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4413/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4413/timeline | null | completed | null | null | false | [

"Thanks for reporting @dgcnz.\r\n\r\nI have checked that the dataset works fine in streaming mode.\r\n\r\nAdditionally, other datasets containing timestamps are properly rendered by the viewer: https://huggingface.co/datasets/blbooks\r\n\r\nI have tried to force the refresh of the preview, but the endpoint is not responsive: Connection timed out\r\n\r\nCC: @severo ",

"I've just resent the refresh of the preview to the new endpoint, without success.\r\n\r\nCC: @severo ",

"Fixed!\r\n\r\nhttps://huggingface.co/datasets/ett/viewer/h1/test\r\n\r\n<img width=\"982\" alt=\"Capture d’écran 2022-06-15 à 09 30 22\" src=\"https://user-images.githubusercontent.com/1676121/173769035-a075d753-ecfc-4a43-b54b-973105d464d3.png\">\r\n"

] |

https://api.github.com/repos/huggingface/datasets/issues/3253 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3253/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3253/comments | https://api.github.com/repos/huggingface/datasets/issues/3253/events | https://github.com/huggingface/datasets/issues/3253 | 1,051,308,972 | I_kwDODunzps4-qbOs | 3,253 | `GeneratorBasedBuilder` does not support `None` values | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 1 | 2021-11-11T19:51:21Z | 2021-12-09T14:26:58Z | 2021-12-09T14:26:58Z | null | ## Describe the bug

`GeneratorBasedBuilder` does not support `None` values.

## Steps to reproduce the bug

See [this repository](https://github.com/pavel-lexyr/huggingface-datasets-bug-reproduction) for minimal reproduction.

## Expected results

Dataset is initialized with a `None` value in the `value` column.

## Actual results

```

Traceback (most recent call last):

File "main.py", line 3, in <module>

datasets.load_dataset("./bad-data")

File ".../datasets/load.py", line 1632, in load_dataset

builder_instance.download_and_prepare(

File ".../datasets/builder.py", line 607, in download_and_prepare

self._download_and_prepare(

File ".../datasets/builder.py", line 697, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File ".../datasets/builder.py", line 1103, in _prepare_split

example = self.info.features.encode_example(record)

File ".../datasets/features/features.py", line 1033, in encode_example

return encode_nested_example(self, example)

File ".../datasets/features/features.py", line 808, in encode_nested_example

return {

File ".../datasets/features/features.py", line 809, in <dictcomp>

k: encode_nested_example(sub_schema, sub_obj) for k, (sub_schema, sub_obj) in utils.zip_dict(schema, obj)

File ".../datasets/features/features.py", line 855, in encode_nested_example

return schema.encode_example(obj)

File ".../datasets/features/features.py", line 299, in encode_example

return float(value)

TypeError: float() argument must be a string or a number, not 'NoneType'

```
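
The last frame of the traceback shows the root cause: `float(None)` raises a `TypeError`. The following is a minimal sketch of that failure and of a `None`-aware encoder. The function names are hypothetical and this is not the fix used in the library, only an illustration of the idea:

```python
def encode_float(value):
    """Mirrors the failing call in the traceback: no handling of None."""
    return float(value)


def encode_float_or_none(value):
    """Hypothetical None-aware variant: pass missing values through unchanged."""
    if value is None:
        return None
    return float(value)


# The plain encoder fails on a missing value...
try:
    encode_float(None)
    raised = False
except TypeError:
    raised = True

# ...while the None-aware variant keeps it as None and still converts numbers.
missing = encode_float_or_none(None)
converted = encode_float_or_none("1.5")
```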

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.15.1

- Platform: Linux-5.4.0-81-generic-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyArrow version: 6.0.0 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3253/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3253/timeline | null | completed | null | null | false | [

"Hi,\r\n\r\nthanks for reporting and providing a minimal reproducible example. \r\n\r\nThis line of the PR I've linked in our discussion on the Forum will add support for `None` values:\r\nhttps://github.com/huggingface/datasets/blob/a53de01842aac65c66a49b2439e18fa93ff73ceb/src/datasets/features/features.py#L835\r\n\r\nI expect that PR to be merged soon."

] |

https://api.github.com/repos/huggingface/datasets/issues/1854 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1854/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1854/comments | https://api.github.com/repos/huggingface/datasets/issues/1854/events | https://github.com/huggingface/datasets/issues/1854 | 805,204,397 | MDU6SXNzdWU4MDUyMDQzOTc= | 1,854 | Feature Request: Dataset.add_item | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

}

] | closed | false | null | 3 | 2021-02-10T06:06:00Z | 2021-04-23T10:01:30Z | 2021-04-23T10:01:30Z | null | I'm trying to integrate `huggingface/datasets` functionality into `fairseq`, which requires (afaict) being able to build a dataset through an `add_item` method, such as https://github.com/pytorch/fairseq/blob/master/fairseq/data/indexed_dataset.py#L318, as opposed to loading all the text into arrow, and then `dataset.map(binarizer)`.

Is this possible at the moment? Is there an example? I'm happy to use raw `pa.Table` but not sure whether it will support uneven length entries.

### Desired API

```python

import numpy as np

tokenized: List[np.NDArray[np.int64]] = [np.array([4,4,2]), np.array([8,6,5,5,2]), np.array([3,3,31,5])]

def build_dataset_from_tokenized(tokenized: List[np.NDArray[int]]) -> Dataset:

"""FIXME"""

dataset = EmptyDataset()

for t in tokenized: dataset.append(t)

return dataset

ds = build_dataset_from_tokenized(tokenized)

assert (ds[0] == np.array([4,4,2])).all()

```

### What I tried

grep, google for "add one entry at a time", "datasets.append"

### Current Code

This code achieves the same result but doesn't fit into the `add_item` abstraction.

```python

dataset = load_dataset('text', data_files={'train': 'train.txt'})

tokenizer = RobertaTokenizerFast.from_pretrained('roberta-base', max_length=4096)

def tokenize_function(examples):

ids = tokenizer(examples['text'], return_attention_mask=False)['input_ids']

return {'input_ids': [x[1:] for x in ids]}

ds = dataset.map(tokenize_function, batched=True, num_proc=4, remove_columns=['text'], load_from_cache_file=not overwrite_cache)

print(ds['train'][0])  # => np array

```

Thanks in advance! | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1854/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1854/timeline | null | completed | null | null | false | [

"Hi @sshleifer.\r\n\r\nI am not sure of understanding the need of the `add_item` approach...\r\n\r\nBy just reading your \"Desired API\" section, I would say you could (nearly) get it with a 1-column Dataset:\r\n```python\r\ndata = {\"input_ids\": [np.array([4,4,2]), np.array([8,6,5,5,2]), np.array([3,3,31,5])]}\r\nds = Dataset.from_dict(data)\r\nassert (ds[\"input_ids\"][0] == np.array([4,4,2])).all()\r\n```",

"Hi @sshleifer :) \r\n\r\nWe don't have methods like `Dataset.add_batch` or `Dataset.add_entry/add_item` yet.\r\nBut that's something we'll add pretty soon. Would an API that looks roughly like this help ? Do you have suggestions ?\r\n```python\r\nimport numpy as np\r\nfrom datasets import Dataset\r\n\r\ntokenized = [np.array([4,4,2]), np.array([8,6,5,5,2]), np.array([3,3,31,5])\r\n\r\n# API suggestion (not available yet)\r\nd = Dataset()\r\nfor input_ids in tokenized:\r\n d.add_item({\"input_ids\": input_ids})\r\n\r\nprint(d[0][\"input_ids\"])\r\n# [4, 4, 2]\r\n```\r\n\r\nCurrently you can define a dataset with what @albertvillanova suggest, or via a generator using dataset builders. It's also possible to [concatenate datasets](https://huggingface.co/docs/datasets/package_reference/main_classes.html?highlight=concatenate#datasets.concatenate_datasets).",

"Your API looks perfect @lhoestq, thanks!"

] |

https://api.github.com/repos/huggingface/datasets/issues/4583 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4583/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4583/comments | https://api.github.com/repos/huggingface/datasets/issues/4583/events | https://github.com/huggingface/datasets/pull/4583 | 1,286,790,871 | PR_kwDODunzps46d7xo | 4,583 | <code> implementation of FLAC support using torchaudio | [] | closed | false | null | 0 | 2022-06-28T05:24:21Z | 2022-06-28T05:47:02Z | 2022-06-28T05:47:02Z | null | I have added audio FLAC support with torchaudio, given that Librosa and SoundFile can cause problems. Also, FLAC is the audio format used by https://mlcommons.org/en/peoples-speech/ | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4583/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4583/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4583.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4583",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/4583.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4583"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/4037 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4037/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4037/comments | https://api.github.com/repos/huggingface/datasets/issues/4037/events | https://github.com/huggingface/datasets/issues/4037 | 1,183,144,486 | I_kwDODunzps5GhVom | 4,037 | Error while building documentation | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 2 | 2022-03-28T09:22:44Z | 2022-03-28T10:01:52Z | 2022-03-28T10:00:48Z | null | ## Describe the bug

Documentation building is failing:

- https://github.com/huggingface/datasets/runs/5716300989?check_suite_focus=true

```

ValueError: There was an error when converting ../datasets/docs/source/package_reference/main_classes.mdx to the MDX format.

Unable to find datasets.filesystems.S3FileSystem in datasets. Make sure the path to that object is correct.

```

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4037/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4037/timeline | null | completed | null | null | false | [

"After some investigation, maybe the bug is in `doc-builder`.\r\n\r\nI've opened an issue there:\r\n- huggingface/doc-builder#160",

"Fixed by @lewtun (thank you):\r\n- huggingface/doc-builder@31fe6c8bc7225810e281c2f6c6cd32f38828c504"

] |

https://api.github.com/repos/huggingface/datasets/issues/1822 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1822/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1822/comments | https://api.github.com/repos/huggingface/datasets/issues/1822/events | https://github.com/huggingface/datasets/pull/1822 | 802,003,835 | MDExOlB1bGxSZXF1ZXN0NTY4MjIxMzIz | 1,822 | Add Hindi Discourse Analysis Natural Language Inference Dataset | [] | closed | false | null | 2 | 2021-02-05T09:30:54Z | 2021-02-15T09:57:39Z | 2021-02-15T09:57:39Z | null | # Dataset Card for Hindi Discourse Analysis Dataset

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- HomePage : https://github.com/midas-research/hindi-nli-data

- Paper : https://www.aclweb.org/anthology/2020.aacl-main.71

- Point of Contact : https://github.com/midas-research/hindi-nli-data

### Dataset Summary

- Dataset for Natural Language Inference in Hindi Language. Hindi Discourse Analysis (HDA) Dataset consists of textual-entailment pairs.

- Each row of the dataset is made up of 4 columns: Premise, Hypothesis, Label and Topic.

- Premise and Hypothesis are written in Hindi, while Entailment_Label is in English.

- Entailment_label is of 2 types: entailed and not-entailed.

- Entailed means that the hypothesis can be inferred from the premise; not-entailed means that it cannot.

- Dataset can be used to train models for Natural Language Inference tasks in Hindi Language.

### Supported Tasks and Leaderboards

- Natural Language Inference for Hindi

### Languages

- Dataset is in Hindi

## Dataset Structure

- Data is structured in TSV format.

- The train, test and dev splits are in separate files.

### Data Instances

An example of 'train' looks as follows.

```

{'hypothesis': 'यह एक वर्णनात्मक कथन है।', 'label': 1, 'premise': 'जैसे उस का सारा चेहरा अपना हो और आँखें किसी दूसरे की जो चेहरे पर पपोटों के पीछे महसूर कर दी गईं।', 'topic': 1}

```

### Data Fields

- Each row contains 4 columns: premise, hypothesis, label and topic.

### Data Splits

- Train : 31892

- Valid : 9460

- Test : 9970

## Dataset Creation

- We employ a recasting technique from Poliak et al. (2018a,b) to convert publicly available Hindi Discourse Analysis classification datasets and pose them as textual-entailment (TE) problems.

- In this recasting process, we build template hypotheses for each class in the label taxonomy

- Then, we pair the original annotated sentence with each of the template hypotheses to create TE samples.

- For more information on the recasting process, refer to paper https://www.aclweb.org/anthology/2020.aacl-main.71

### Source Data

The source dataset for the recasting process is the BBC Hindi Headlines Dataset (https://github.com/NirantK/hindi2vec/releases/tag/bbc-hindi-v0.1).

#### Initial Data Collection and Normalization

- Initial data was collected by members of MIDAS Lab from Hindi websites. They crowdsourced the data annotation process, selected two random stories from the corpus, and had three annotators work on them independently, classifying each sentence by discourse mode.

- Please refer to this paper for detailed information: https://www.aclweb.org/anthology/2020.lrec-1.149/

- The discourse is further classified into 5 classes: "Argumentative", "Descriptive", "Dialogic", "Informative" and "Narrative".

#### Who are the source language producers?

Please refer to this paper for detailed information: https://www.aclweb.org/anthology/2020.lrec-1.149/

### Annotations

#### Annotation process

The annotation process is described in the Dataset Creation section.

#### Who are the annotators?

Annotation is done automatically by the recasting process.

### Personal and Sensitive Information

No personal or sensitive information is mentioned in the dataset.

## Considerations for Using the Data

Please refer to this paper: https://www.aclweb.org/anthology/2020.aacl-main.71

### Discussion of Biases

No known biases exist in the dataset.

Please refer to this paper: https://www.aclweb.org/anthology/2020.aacl-main.71

### Other Known Limitations

No other known limitations. The size of the data may not be enough to train large models.

## Additional Information

Please refer to this link: https://github.com/midas-research/hindi-nli-data

### Dataset Curators

The repo at https://github.com/midas-research/hindi-nli-data states that:

- This corpus can be used freely for research purposes.

- The paper listed below provides details of the creation and use of the corpus. If you use the corpus, then please cite the paper.

- If interested in commercial use of the corpus, send email to [email protected].

- If you use the corpus in a product or application, then please credit the authors and Multimodal Digital Media Analysis Lab - Indraprastha Institute of Information Technology, New Delhi appropriately. Also, if you send us an email, we will be thrilled to know about how you have used the corpus.

- Multimodal Digital Media Analysis Lab - Indraprastha Institute of Information Technology, New Delhi, India disclaims any responsibility for the use of the corpus and does not provide technical support. However, the contact listed above will be happy to respond to queries and clarifications.

- Rather than redistributing the corpus, please direct interested parties to this page.

- Please feel free to send us an email:

- with feedback regarding the corpus.

- with information on how you have used the corpus.

- if interested in having us analyze your data for natural language inference.

- if interested in a collaborative research project.

### Licensing Information

Copyright (C) 2019 Multimodal Digital Media Analysis Lab - Indraprastha Institute of Information Technology, New Delhi (MIDAS, IIIT-Delhi).

Please contact the authors for any information on the dataset.

### Citation Information

```

@inproceedings{uppal-etal-2020-two,

title = "Two-Step Classification using Recasted Data for Low Resource Settings",

author = "Uppal, Shagun and

Gupta, Vivek and

Swaminathan, Avinash and

Zhang, Haimin and

Mahata, Debanjan and

Gosangi, Rakesh and

Shah, Rajiv Ratn and

Stent, Amanda",

booktitle = "Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing",

month = dec,

year = "2020",

address = "Suzhou, China",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.aacl-main.71",

pages = "706--719",

abstract = "An NLP model{'}s ability to reason should be independent of language. Previous works utilize Natural Language Inference (NLI) to understand the reasoning ability of models, mostly focusing on high resource languages like English. To address scarcity of data in low-resource languages such as Hindi, we use data recasting to create NLI datasets for four existing text classification datasets. Through experiments, we show that our recasted dataset is devoid of statistical irregularities and spurious patterns. We further study the consistency in predictions of the textual entailment models and propose a consistency regulariser to remove pairwise-inconsistencies in predictions. We propose a novel two-step classification method which uses textual-entailment predictions for classification task. We further improve the performance by using a joint-objective for classification and textual entailment. We therefore highlight the benefits of data recasting and improvements on classification performance using our approach with supporting experimental results.",

}

```

### Contributions

Thanks to [@avinsit123](https://github.com/avinsit123) for adding this dataset.

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1822/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1822/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1822.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1822",

"merged_at": "2021-02-15T09:57:39Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1822.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1822"

} | true | [

"Could you also run `make style` to fix the CI check on code formatting ?",

"@lhoestq completed and resolved all comments."

] |

https://api.github.com/repos/huggingface/datasets/issues/265 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/265/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/265/comments | https://api.github.com/repos/huggingface/datasets/issues/265/events | https://github.com/huggingface/datasets/pull/265 | 637,139,220 | MDExOlB1bGxSZXF1ZXN0NDMzMTgxNDMz | 265 | Add pyarrow warning colab | [] | closed | false | null | 0 | 2020-06-11T15:57:51Z | 2020-08-02T18:14:36Z | 2020-06-12T08:14:16Z | null | When a user installs `nlp` on google colab, then google colab doesn't update pyarrow, and the runtime needs to be restarted to use the updated version of pyarrow.

This is an issue because `nlp` requires the updated version to work correctly.

In this PR I added an error that is shown to the user in google colab if the user tries to `import nlp` without having restarted the runtime. The error tells the user to restart the runtime. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/265/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/265/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/265.diff",

"html_url": "https://github.com/huggingface/datasets/pull/265",

"merged_at": "2020-06-12T08:14:16Z",

"patch_url": "https://github.com/huggingface/datasets/pull/265.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/265"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/415 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/415/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/415/comments | https://api.github.com/repos/huggingface/datasets/issues/415/events | https://github.com/huggingface/datasets/issues/415 | 660,687,076 | MDU6SXNzdWU2NjA2ODcwNzY= | 415 | Something is wrong with WMT 19 kk-en dataset | [

{

"color": "2edb81",

"default": false,

"description": "A bug in a dataset script provided in the library",

"id": 2067388877,

"name": "dataset bug",

"node_id": "MDU6TGFiZWwyMDY3Mzg4ODc3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20bug"

}

] | open | false | null | 0 | 2020-07-19T08:18:51Z | 2020-07-20T09:54:26Z | null | null | The translation in the `train` set does not look right:

```

>>> import nlp

>>> from nlp import load_dataset

>>> dataset = load_dataset('wmt19', 'kk-en')

>>> dataset["train"]["translation"][0]

{'kk': 'Trumpian Uncertainty', 'en': 'Трамптық белгісіздік'}

>>> dataset["validation"]["translation"][0]

{'kk': 'Ақша-несие саясатының сценарийін қайта жазсақ', 'en': 'Rewriting the Monetary-Policy Script'}

``` | {

"+1": 0,

"-1": 0,

"confused": 1,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/415/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/415/timeline | null | null | null | null | false | [] |

https://api.github.com/repos/huggingface/datasets/issues/4087 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4087/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4087/comments | https://api.github.com/repos/huggingface/datasets/issues/4087/events | https://github.com/huggingface/datasets/pull/4087 | 1,191,819,805 | PR_kwDODunzps41lnfO | 4,087 | Fix BeamWriter output Parquet file | [] | closed | false | null | 1 | 2022-04-04T13:46:50Z | 2022-04-05T15:00:40Z | 2022-04-05T14:54:48Z | null | Until now, the `BeamWriter` saved a Parquet file with a simplified schema, where each field value was serialized to JSON. That resulted in Parquet files larger than Arrow files.

This PR:

- writes Parquet file preserving original schema and without serialization, thus avoiding serialization overhead and resulting in a smaller output file size.

- fixes `parquet_to_arrow` function | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 1,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4087/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4087/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4087.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4087",

"merged_at": "2022-04-05T14:54:48Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4087.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4087"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._"

] |

https://api.github.com/repos/huggingface/datasets/issues/3270 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3270/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3270/comments | https://api.github.com/repos/huggingface/datasets/issues/3270/events | https://github.com/huggingface/datasets/pull/3270 | 1,053,465,662 | PR_kwDODunzps4uhcxm | 3,270 | Add os.listdir for streaming | [] | closed | false | null | 0 | 2021-11-15T10:14:04Z | 2021-11-15T10:27:03Z | 2021-11-15T10:27:03Z | null | Extend `os.listdir` to support streaming data from remote files. This is often used to navigate in remote ZIP files for example | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3270/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3270/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3270.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3270",

"merged_at": "2021-11-15T10:27:02Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3270.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3270"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/5504 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5504/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5504/comments | https://api.github.com/repos/huggingface/datasets/issues/5504/events | https://github.com/huggingface/datasets/pull/5504 | 1,570,621,242 | PR_kwDODunzps5JPoWy | 5,504 | don't zero copy timestamps | [] | closed | false | null | 3 | 2023-02-03T23:39:04Z | 2023-02-08T17:28:50Z | 2023-02-08T14:33:17Z | null | Fixes https://github.com/huggingface/datasets/issues/5495

I'm not sure whether we prefer a test here or if timestamps are known to be unsupported (like booleans). The current test at least covers the bug | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5504/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5504/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5504.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5504",

"merged_at": "2023-02-08T14:33:17Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5504.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5504"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==6.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008606 / 0.011353 (-0.002747) | 0.004659 / 0.011008 (-0.006349) | 0.101311 / 0.038508 (0.062802) | 0.029664 / 0.023109 (0.006555) | 0.321850 / 0.275898 (0.045952) | 0.380497 / 0.323480 (0.057017) | 0.007003 / 0.007986 (-0.000982) | 0.003393 / 0.004328 (-0.000936) | 0.078704 / 0.004250 (0.074453) | 0.035810 / 0.037052 (-0.001242) | 0.327271 / 0.258489 (0.068782) | 0.369302 / 0.293841 (0.075461) | 0.033625 / 0.128546 (-0.094921) | 0.011563 / 0.075646 (-0.064084) | 0.323950 / 0.419271 (-0.095322) | 0.040660 / 0.043533 (-0.002872) | 0.327211 / 0.255139 (0.072072) | 0.350325 / 0.283200 (0.067125) | 0.085427 / 0.141683 (-0.056256) | 1.464370 / 1.452155 (0.012216) | 1.490355 / 1.492716 (-0.002362) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.202879 / 0.018006 (0.184873) | 0.419836 / 0.000490 (0.419346) | 0.000303 / 0.000200 (0.000103) | 0.000063 / 0.000054 (0.000008) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.023336 / 0.037411 (-0.014075) | 0.096817 / 0.014526 (0.082291) | 0.103990 / 0.176557 (-0.072567) | 0.137749 / 0.737135 (-0.599386) | 0.108236 / 0.296338 (-0.188102) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.420801 / 0.215209 (0.205592) | 4.205308 / 2.077655 (2.127653) | 2.050363 / 1.504120 (0.546243) | 1.877390 / 1.541195 (0.336195) | 2.031060 / 1.468490 (0.562570) | 0.687950 / 4.584777 (-3.896827) | 3.363202 / 3.745712 (-0.382510) | 1.869482 / 5.269862 (-3.400379) | 1.159131 / 4.565676 (-3.406545) | 0.082374 / 0.424275 (-0.341901) | 0.012425 / 0.007607 (0.004818) | 0.519775 / 0.226044 (0.293731) | 5.244612 / 2.268929 (2.975684) | 2.371314 / 55.444624 (-53.073311) | 2.052713 / 6.876477 (-4.823764) | 2.190015 / 2.142072 (0.047942) | 0.803806 / 4.805227 (-4.001421) | 0.148110 / 6.500664 
(-6.352554) | 0.064174 / 0.075469 (-0.011295) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.250424 / 1.841788 (-0.591364) | 13.487870 / 8.074308 (5.413561) | 13.080736 / 10.191392 (2.889344) | 0.147715 / 0.680424 (-0.532709) | 0.028409 / 0.534201 (-0.505792) | 0.397531 / 0.579283 (-0.181752) | 0.399458 / 0.434364 (-0.034905) | 0.461467 / 0.540337 (-0.078871) | 0.541639 / 1.386936 (-0.845297) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006753 / 0.011353 (-0.004600) | 0.004573 / 
0.011008 (-0.006435) | 0.076122 / 0.038508 (0.037614) | 0.027529 / 0.023109 (0.004419) | 0.341291 / 0.275898 (0.065393) | 0.376889 / 0.323480 (0.053409) | 0.005032 / 0.007986 (-0.002953) | 0.003447 / 0.004328 (-0.000882) | 0.075186 / 0.004250 (0.070936) | 0.038516 / 0.037052 (0.001463) | 0.340927 / 0.258489 (0.082438) | 0.386626 / 0.293841 (0.092785) | 0.031929 / 0.128546 (-0.096617) | 0.011759 / 0.075646 (-0.063888) | 0.085616 / 0.419271 (-0.333656) | 0.042858 / 0.043533 (-0.000674) | 0.341881 / 0.255139 (0.086742) | 0.367502 / 0.283200 (0.084303) | 0.090788 / 0.141683 (-0.050895) | 1.472871 / 1.452155 (0.020716) | 1.577825 / 1.492716 (0.085109) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.233137 / 0.018006 (0.215131) | 0.415016 / 0.000490 (0.414526) | 0.000379 / 0.000200 (0.000179) | 0.000059 / 0.000054 (0.000004) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.024966 / 0.037411 (-0.012445) | 0.102794 / 0.014526 (0.088268) | 0.107543 / 0.176557 (-0.069014) | 0.143133 / 0.737135 (-0.594002) | 0.111494 / 0.296338 (-0.184845) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.438354 / 0.215209 (0.223145) | 4.382244 / 2.077655 (2.304589) | 2.056340 / 1.504120 (0.552220) | 1.851524 / 1.541195 (0.310330) | 1.933147 / 1.468490 (0.464657) | 0.701446 / 4.584777 (-3.883331) | 3.396893 / 3.745712 (-0.348819) | 2.837516 / 5.269862 (-2.432346) | 1.538298 / 4.565676 (-3.027379) | 0.083449 / 0.424275 (-0.340826) | 0.012793 / 0.007607 (0.005186) | 0.539661 / 0.226044 (0.313616) | 5.428415 / 2.268929 (3.159487) | 2.527582 / 55.444624 (-52.917042) | 2.172795 / 6.876477 (-4.703682) | 2.220011 / 2.142072 (0.077938) | 0.814338 / 4.805227 (-3.990889) | 0.153468 / 6.500664 (-6.347196) | 0.069056 / 0.075469 (-0.006413) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.278434 / 1.841788 (-0.563354) | 14.284924 / 8.074308 (6.210616) | 13.486596 / 10.191392 (3.295203) | 0.138457 / 0.680424 (-0.541967) | 0.016609 / 0.534201 (-0.517592) | 0.382828 / 0.579283 (-0.196455) | 0.387604 / 0.434364 (-0.046760) | 0.478801 / 0.540337 (-0.061536) | 0.565352 / 1.386936 (-0.821584) |\n\n</details>\n</details>\n\n\n",

"> Thanks! I modified the test a bit to make it more consistent with the rest of the \"extractor\" tests.\r\n\r\nAppreciate the assist on the tests! 🚀 "

] |

https://api.github.com/repos/huggingface/datasets/issues/225 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/225/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/225/comments | https://api.github.com/repos/huggingface/datasets/issues/225/events | https://github.com/huggingface/datasets/issues/225 | 628,083,366 | MDU6SXNzdWU2MjgwODMzNjY= | 225 | [ROUGE] Different scores with `files2rouge` | [

{

"color": "d722e8",

"default": false,

"description": "Discussions on the metrics",

"id": 2067400959,

"name": "Metric discussion",

"node_id": "MDU6TGFiZWwyMDY3NDAwOTU5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/Metric%20discussion"

}

] | closed | false | null | 3 | 2020-06-01T00:50:36Z | 2020-06-03T15:27:18Z | 2020-06-03T15:27:18Z | null | It seems that the ROUGE score of `nlp` is lower than the one of `files2rouge`.

Here is a self-contained notebook to reproduce both scores: https://colab.research.google.com/drive/14EyAXValB6UzKY9x4rs_T3pyL7alpw_F?usp=sharing

---

`nlp` : (Only mid F-scores)

>rouge1 0.33508031962733364

rouge2 0.14574333776191592

rougeL 0.2321187823256159

`files2rouge` :

>Running ROUGE...

===========================

1 ROUGE-1 Average_R: 0.48873 (95%-conf.int. 0.41192 - 0.56339)

1 ROUGE-1 Average_P: 0.29010 (95%-conf.int. 0.23605 - 0.34445)

1 ROUGE-1 Average_F: 0.34761 (95%-conf.int. 0.29479 - 0.39871)

===========================

1 ROUGE-2 Average_R: 0.20280 (95%-conf.int. 0.14969 - 0.26244)

1 ROUGE-2 Average_P: 0.12772 (95%-conf.int. 0.08603 - 0.17752)

1 ROUGE-2 Average_F: 0.14798 (95%-conf.int. 0.10517 - 0.19240)

===========================

1 ROUGE-L Average_R: 0.32960 (95%-conf.int. 0.26501 - 0.39676)

1 ROUGE-L Average_P: 0.19880 (95%-conf.int. 0.15257 - 0.25136)

1 ROUGE-L Average_F: 0.23619 (95%-conf.int. 0.19073 - 0.28663)

---

When using longer predictions/gold, the difference is bigger.

**How can I reproduce the same score as `files2rouge`?**

@lhoestq

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/225/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/225/timeline | null | completed | null | null | false | [

"@Colanim unfortunately there are different implementations of the ROUGE metric floating around online which yield different results, and we had to choose one for the package :) We ended up including the one from the google-research repository, which does minimal post-processing before computing the P/R/F scores. If I recall correctly, files2rouge relies on the Perl script, which among other things normalizes all numbers to a special token: in the case you presented, this should account for a good chunk of the difference.\r\n\r\nWe may end up adding in more versions of the metric, but probably not for a while (@lhoestq correct me if I'm wrong). However, feel free to take a stab at adding it in yourself and submitting a PR if you're interested!",