| url (string, 58-61 chars) | repository_url (string, 1 distinct value) | labels_url (string, 72-75 chars) | comments_url (string, 67-70 chars) | events_url (string, 65-68 chars) | html_url (string, 46-51 chars) | id (int64, 599M-1.83B) | node_id (string, 18-32 chars) | number (int64, 1-6.09k) | title (string, 1-290 chars) | labels (list) | state (string, 2 distinct values) | locked (bool, 1 class) | milestone (dict) | comments (int64, 0-54) | created_at (string, 20 chars) | updated_at (string, 20 chars) | closed_at (string, 20 chars, nullable) | active_lock_reason (null) | body (string, 0-228k chars, nullable) | reactions (dict) | timeline_url (string, 67-70 chars) | performed_via_github_app (null) | state_reason (string, 3 distinct values) | draft (bool, 2 classes) | pull_request (dict) | is_pull_request (bool, 2 classes) | comments_text (sequence) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/2111 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2111/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2111/comments | https://api.github.com/repos/huggingface/datasets/issues/2111/events | https://github.com/huggingface/datasets/pull/2111 | 841,082,087 | MDExOlB1bGxSZXF1ZXN0NjAwODY4OTg5 | 2,111 | Compute WER metric iteratively | [] | closed | false | null | 7 | 2021-03-25T16:06:48Z | 2021-04-06T07:20:43Z | 2021-04-06T07:20:43Z | null | Compute WER metric iteratively to avoid MemoryError.

Fix #2078. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2111/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2111/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2111.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2111",

"merged_at": "2021-04-06T07:20:43Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2111.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2111"

} | true | [

"I discussed with Patrick and I think we could have a nice addition: have a parameter `concatenate_texts` that, if `True`, uses the old implementation.\r\n\r\nBy default `concatenate_texts` would be `False`, so that sentences are evaluated independently, and to save resources (the WER computation has a quadratic complexity).\r\n\r\nSome users might still want to use the old implementation.",

"@lhoestq @patrickvonplaten are you sure of the parameter name `concatenate_texts`? I was thinking about something like `iter`...",

"Not sure about the name, if you can improve it feel free to do so ^^'\r\nThe old implementation computes the WER on the concatenation of all the input texts, while the new one computes the WER measures independently for each reference/prediction pair.\r\nThat's why I thought of `concatenate_texts`",

"@lhoestq yes, but the end user does not necessarily know the details of the implementation of the WER computation.\r\n\r\nFrom the end user's perspective I think it makes more sense to ask: how do you want to compute the metric?\r\n- all at once, more RAM needed?\r\n- iteratively, lower RAM requirements?\r\n\r\nBecause of that I was thinking of something like `iter` or `iterative`...",

"I personally like `concatenate_texts` better since I feel `iter` or `iterate` are quite vague",

"Therefore, you can merge... ;)",

"Ok ! merging :)"

] |
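
The discussion in PR #2111 contrasts two ways of computing WER: on the concatenation of all texts (the old behaviour, kept behind `concatenate_texts=True`) versus pair by pair. The sketch below illustrates that difference in plain Python. It is not the metric's actual code: the word alignment is written out by hand so the example is self-contained, and it assumes the total number of reference words is non-zero.

```python
from typing import List


def word_edit_distance(ref: List[str], hyp: List[str]) -> int:
    """Levenshtein distance over words (substitutions, deletions, insertions)."""
    # Single-row dynamic programming: O(len(ref) * len(hyp)) time, O(len(hyp)) memory.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[-1]


def wer(predictions: List[str], references: List[str], concatenate_texts: bool = False) -> float:
    if concatenate_texts:
        # One alignment over the whole corpus: cost grows with (total words) ** 2.
        ref = " ".join(references).split()
        hyp = " ".join(predictions).split()
        return word_edit_distance(ref, hyp) / len(ref)
    # Pair by pair: sum errors and reference lengths, so each alignment stays small.
    errors = 0
    total = 0
    for pred, ref in zip(predictions, references):
        ref_words = ref.split()
        errors += word_edit_distance(ref_words, pred.split())
        total += len(ref_words)
    return errors / total
```

The two modes can disagree, because concatenation lets words align across sentence boundaries: with predictions `["a b", "c"]` and references `["a", "b c"]`, the per-pair WER is 2/3 while the concatenated WER is 0.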

https://api.github.com/repos/huggingface/datasets/issues/1125 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1125/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1125/comments | https://api.github.com/repos/huggingface/datasets/issues/1125/events | https://github.com/huggingface/datasets/pull/1125 | 757,194,531 | MDExOlB1bGxSZXF1ZXN0NTMyNjExMDU5 | 1,125 | Add Urdu fake news dataset. | [] | closed | false | null | 3 | 2020-12-04T15:38:17Z | 2020-12-07T03:21:05Z | 2020-12-07T03:21:05Z | null | Added Urdu fake news dataset. More information about the dataset can be found <a href="https://github.com/MaazAmjad/Datasets-for-Urdu-news">here</a>. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1125/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1125/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1125.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1125",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/1125.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1125"

} | true | [

"@lhoestq looks like a lot of files were updated... shall I create a new PR?",

"Hi @chaitnayabasava ! you can try rebasing and see if that fixes the number of files changed, otherwise please do open a new PR with only the relevant files and close this one :) ",

"Created a new PR #1230.\r\nclosing this one :)"

] |

https://api.github.com/repos/huggingface/datasets/issues/3315 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3315/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3315/comments | https://api.github.com/repos/huggingface/datasets/issues/3315/events | https://github.com/huggingface/datasets/pull/3315 | 1,061,678,452 | PR_kwDODunzps4u7WpU | 3,315 | Removing query params for dynamic URL caching | [] | closed | false | null | 5 | 2021-11-23T20:24:12Z | 2021-11-25T14:44:32Z | 2021-11-25T14:44:31Z | null | The main use case for this is to make dynamically generated private URLs (like the ones returned by the CommonVoice API) compatible with the `datasets` caching logic.

Usage example:

```python
import datasets


class CommonVoice(datasets.GeneratorBasedBuilder):
    def _info(self):
        return datasets.DatasetInfo()

    def _split_generators(self, dl_manager):
        # Ignore URL query parameters when computing the cache path of a download.
        dl_manager.download_config.ignore_url_params = True
        HUGE_URL = "https://mozilla-common-voice-datasets.s3.dualstack.us-west-2.amazonaws.com/cv-corpus-7.0-2021-07-21/cv-corpus-7.0-2021-07-21-ab.tar.gz?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=ASIAQ3GQRTO3IU5JYB5K%2F20211125%2Fus-west-2%2Fs3%2Faws4_request&X-Amz-Date=20211125T131423Z&X-Amz-Expires=43200&X-Amz-Security-Token=FwoGZXIvYXdzEL7%2F%2F%2F%2F%2F%2F%2F%2F%2F%2FwEaDLsZw7Nj0d9h4rgheyKSBJJ6bxo1JdWLXAUhLMrUB8AXfhP8Ge4F8dtjwXmvGJgkIvdMT7P4YOEE1pS3mW8AyKsz7Z7IRVCIGQrOH1AbxGVVcDoCMMswXEOqL3nJFihKLf99%2F6l8iJVZdzftRUNgMhX5Hz0xSIL%2BzRDpH5nYa7C6YpEdOdW81CFVXybx7WUrX13wc8X4ZlUj7zrWcWf5p2VEIU5Utb7YHVi0Y5TQQiZSDoedQl0j4VmMuFkDzoobIO%2BvilgGeE2kIX0E62X423mEGNu4uQV5JsOuLAtv3GVlemsqEH3ZYrXDuxLmnvGj5HfMtySwI4vKv%2BlnnirD29o7hxvtidXiA8JMWhp93aP%2Fw7sod%2BPPbb5EqP%2B4Qb2GJ1myClOKcLEY0cqoy7XWm8NeVljLJojnFJVS5mNFBAzCCTJ%2FidxNsj8fflzkRoAzYaaPBuOTL1dgtZCdslK3FAuEvw0cik7P9A7IYiULV33otSHKMPcVfNHFsWQljs03gDztsIUWxaXvu6ck5vCcGULsHbfe6xoMPm2bR9jtKLONsslPcnzWIf7%2Fch2w%2F%2BjtTCd9IxaH4kytyJ6mIjpV%2FA%2F2h9qeDnDFsCphnMjAzPQn6tqCgTtPcyJ2b8c94ncgUnE4mepx%2FDa%2FanAEsrg9RPdmbdoPswzHn1IClh91IfSN74u95DZUxlPeZrHG5HxVCN3dKO6j%2Ft1xd20L0hEtazDdKOr8%2FYwGMirp8rp%2BII0pYOwQOrYHqH%2FREX2dRJctJtwE86Qj1eU8BAdXuFIkLC4NWXw%3D&X-Amz-Signature=1b8108d29b0e9c2bf6c7246e58ca8d5749a83de0704757ad8e8a44d78194691f&X-Amz-SignedHeaders=host"
        dl_path = dl_manager.download_and_extract(HUGE_URL)
        print(dl_path)
        # A new or changed query parameter should still map to the same cached file.
        HUGE_URL += "&some_new_or_changed_param=12345"
        dl_path = dl_manager.download_and_extract(HUGE_URL)
        print(dl_path)


dl_manager = datasets.DownloadManager(dataset_name="common_voice")
CommonVoice()._split_generators(dl_manager)
```

Output:

```
/home/user/.cache/huggingface/datasets/downloads/6ef2a377398ff3309554be040caa78414e6562d623dbd0ce8fc262459a7f8ec6
/home/user/.cache/huggingface/datasets/downloads/6ef2a377398ff3309554be040caa78414e6562d623dbd0ce8fc262459a7f8ec6
``` | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3315/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3315/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3315.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3315",

"merged_at": "2021-11-25T14:44:31Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3315.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3315"

} | true | [

"IMO it makes more sense to have `ignore_url_params` as an attribute of `DownloadConfig` to avoid defining a new argument in `DownloadManager`'s methods.",

"@mariosasko that would make sense to me too, but it seems like `DownloadConfig` wasn't intended to be modified from a dataset loading script. @lhoestq wdyt?",

"We can expose `DownloadConfig` as a property of `DownloadManager`, and then in the script before the download call we could do: `dl_manager.download_config.ignore_url_params = True`. But yes, let's hear what Quentin thinks.",

"Oh indeed that's a great idea. This parameter is similar to others like `download_config.use_etag` that defines the behavior of the download and caching, so it's better if we have it there, and expose the `download_config`",

"Implemented it via `dl_manager.download_config.ignore_url_params` now, and also added a usage example above :) "

] |
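
The mechanism discussed in PR #3315, ignoring query parameters so that re-signed URLs for the same object map to one cache entry, can be sketched as follows. `url_to_cache_key` is a hypothetical helper, not the `datasets` API, and hashing the bare URL with SHA-256 is an assumption made for illustration.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit


def url_to_cache_key(url: str, ignore_url_params: bool = False) -> str:
    """Hypothetical helper: map a URL to a cache filename (SHA-256 hex digest)."""
    if ignore_url_params:
        # Drop the query string (e.g. short-lived X-Amz-* signature parameters)
        # and the fragment, keeping scheme, host and path.
        parts = urlsplit(url)
        url = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return hashlib.sha256(url.encode("utf-8")).hexdigest()
```

With `ignore_url_params=True`, appending `&some_new_or_changed_param=12345` to a URL leaves the key unchanged; without it, every re-signed URL would produce a new cache entry.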

https://api.github.com/repos/huggingface/datasets/issues/6075 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/6075/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/6075/comments | https://api.github.com/repos/huggingface/datasets/issues/6075/events | https://github.com/huggingface/datasets/issues/6075 | 1,822,341,398 | I_kwDODunzps5snrkW | 6,075 | Error loading music files using `load_dataset` | [] | closed | false | null | 2 | 2023-07-26T12:44:05Z | 2023-07-26T13:08:08Z | 2023-07-26T13:08:08Z | null | ### Describe the bug

I tried to load a music file using `datasets.load_dataset()` from the repository - https://huggingface.co/datasets/susnato/pop2piano_real_music_test

I got the following error -

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 2803, in __getitem__
    return self._getitem(key)
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 2788, in _getitem
    formatted_output = format_table(
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/formatting/formatting.py", line 629, in format_table
    return formatter(pa_table, query_type=query_type)
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/formatting/formatting.py", line 398, in __call__
    return self.format_column(pa_table)
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/formatting/formatting.py", line 442, in format_column
    column = self.python_features_decoder.decode_column(column, pa_table.column_names[0])
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/formatting/formatting.py", line 218, in decode_column
    return self.features.decode_column(column, column_name) if self.features else column
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/features/features.py", line 1924, in decode_column
    [decode_nested_example(self[column_name], value) if value is not None else None for value in column]
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/features/features.py", line 1924, in <listcomp>
    [decode_nested_example(self[column_name], value) if value is not None else None for value in column]
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/features/features.py", line 1325, in decode_nested_example
    return schema.decode_example(obj, token_per_repo_id=token_per_repo_id)
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/datasets/features/audio.py", line 184, in decode_example
    array, sampling_rate = sf.read(f)
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/soundfile.py", line 372, in read
    with SoundFile(file, 'r', samplerate, channels,
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/soundfile.py", line 740, in __init__
    self._file = self._open(file, mode_int, closefd)
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/soundfile.py", line 1264, in _open
    _error_check(_snd.sf_error(file_ptr),
  File "/home/susnato/anaconda3/envs/p2p/lib/python3.9/site-packages/soundfile.py", line 1455, in _error_check
    raise RuntimeError(prefix + _ffi.string(err_str).decode('utf-8', 'replace'))
RuntimeError: Error opening <_io.BufferedReader name='/home/susnato/.cache/huggingface/datasets/downloads/d2b09cb974b967b13f91553297c40c0f02f3c0d4c8356350743598ff48d6f29e'>: Format not recognised.
```

### Steps to reproduce the bug

Code to reproduce the error -

```python
from datasets import load_dataset

ds = load_dataset("susnato/pop2piano_real_music_test", split="test")
print(ds[0])
```

### Expected behavior

I should be able to read the music file without any error.

### Environment info

- `datasets` version: 2.14.0

- Platform: Linux-5.19.0-50-generic-x86_64-with-glibc2.35

- Python version: 3.9.16

- Huggingface_hub version: 0.15.1

- PyArrow version: 11.0.0

- Pandas version: 1.5.3

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/6075/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/6075/timeline | null | completed | null | null | false | [

"This code behaves as expected on my local machine or in Colab. Which version of `soundfile` do you have installed? MP3 requires `soundfile>=0.12.1`.",

"I upgraded the `soundfile` and it's working now! \r\nThanks @mariosasko for the help!"

] |
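
Following the maintainer's note that MP3 decoding requires `soundfile>=0.12.1`, a quick version check can be sketched as below. The helper names are hypothetical, and the parser only handles plain numeric `X.Y.Z` strings; `packaging.version.Version` is the more robust choice for arbitrary version strings.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Parse the leading 'X.Y.Z' part of a version string into a tuple of ints.

    Assumes purely numeric components (e.g. '0.12.1'); '0.13.0rc1' would raise ValueError.
    """
    return tuple(int(part) for part in version.split(".")[:3])


def soundfile_supports_mp3(version: str) -> bool:
    # Threshold from the maintainer's comment: MP3 requires soundfile>=0.12.1.
    return parse_version(version) >= (0, 12, 1)
```

For the installed package, `soundfile_supports_mp3(importlib.metadata.version("soundfile"))` performs the check; if it returns `False`, upgrading `soundfile` is what resolved the error for the reporter.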

https://api.github.com/repos/huggingface/datasets/issues/2628 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2628/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2628/comments | https://api.github.com/repos/huggingface/datasets/issues/2628/events | https://github.com/huggingface/datasets/pull/2628 | 941,676,404 | MDExOlB1bGxSZXF1ZXN0Njg3NTE0NzQz | 2,628 | Use ETag of remote data files | [] | closed | false | {

"closed_at": "2021-07-21T15:36:49Z",

"closed_issues": 29,

"created_at": "2021-06-08T18:48:33Z",

"creator": {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

},

"description": "Next minor release",

"due_on": "2021-08-05T07:00:00Z",

"html_url": "https://github.com/huggingface/datasets/milestone/6",

"id": 6836458,

"labels_url": "https://api.github.com/repos/huggingface/datasets/milestones/6/labels",

"node_id": "MDk6TWlsZXN0b25lNjgzNjQ1OA==",

"number": 6,

"open_issues": 0,

"state": "closed",

"title": "1.10",

"updated_at": "2021-07-21T15:36:49Z",

"url": "https://api.github.com/repos/huggingface/datasets/milestones/6"

} | 0 | 2021-07-12T05:10:10Z | 2021-07-12T14:08:34Z | 2021-07-12T08:40:07Z | null | Use ETag of remote data files to create config ID.

Related to #2616. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2628/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2628/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2628.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2628",

"merged_at": "2021-07-12T08:40:07Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2628.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2628"

} | true | [] |
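
The idea in PR #2628, deriving the config ID from the ETags of the remote data files (presumably so that a changed remote file yields a new config and cache directory), might look roughly like this. Everything here is hypothetical: the function name, the JSON-then-SHA-256 hashing, and the `default-` prefix with a 16-character digest are illustrative choices, not the library's implementation.

```python
import hashlib
import json
from typing import Dict, Optional


def config_id_from_data_files(
    data_files: Dict[str, str], etags: Dict[str, Optional[str]]
) -> str:
    """Hypothetical: build a config ID from data file URLs and their ETags.

    A new ETag for any remote file (i.e. the file changed on the server)
    produces a different ID, and therefore a different cache directory.
    """
    payload = {
        split: {"url": url, "etag": etags.get(url)}  # None if the server sent no ETag
        for split, url in data_files.items()
    }
    # sort_keys=True makes the serialization, and hence the hash, deterministic.
    blob = json.dumps(payload, sort_keys=True).encode("utf-8")
    return "default-" + hashlib.sha256(blob).hexdigest()[:16]
```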

https://api.github.com/repos/huggingface/datasets/issues/1334 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1334/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1334/comments | https://api.github.com/repos/huggingface/datasets/issues/1334/events | https://github.com/huggingface/datasets/pull/1334 | 759,699,993 | MDExOlB1bGxSZXF1ZXN0NTM0NjU5MDg2 | 1,334 | Add QED Amara Dataset | [] | closed | false | null | 0 | 2020-12-08T19:01:13Z | 2020-12-10T11:17:25Z | 2020-12-10T11:15:57Z | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1334/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1334/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1334.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1334",

"merged_at": "2020-12-10T11:15:57Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1334.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1334"

} | true | [] |

|

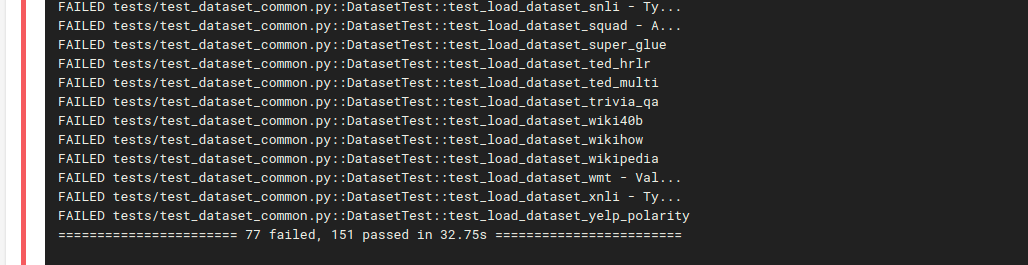

https://api.github.com/repos/huggingface/datasets/issues/31 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/31/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/31/comments | https://api.github.com/repos/huggingface/datasets/issues/31/events | https://github.com/huggingface/datasets/pull/31 | 610,677,641 | MDExOlB1bGxSZXF1ZXN0NDEyMDczNDE4 | 31 | [Circle ci] Install a virtual env before running tests | [] | closed | false | null | 0 | 2020-05-01T10:11:17Z | 2020-05-01T22:06:16Z | 2020-05-01T22:06:15Z | null | Install a virtual env before running tests to avoid running into sudo issues when dynamically downloading files.

Same number of tests now pass / fail as on my local computer:

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/31/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/31/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/31.diff",

"html_url": "https://github.com/huggingface/datasets/pull/31",

"merged_at": "2020-05-01T22:06:15Z",

"patch_url": "https://github.com/huggingface/datasets/pull/31.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/31"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/594 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/594/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/594/comments | https://api.github.com/repos/huggingface/datasets/issues/594/events | https://github.com/huggingface/datasets/pull/594 | 696,816,893 | MDExOlB1bGxSZXF1ZXN0NDgyODQ1OTc5 | 594 | Fix germeval url | [] | closed | false | null | 0 | 2020-09-09T13:29:35Z | 2020-09-09T13:34:35Z | 2020-09-09T13:34:34Z | null | Continuation of #593 but without the dummy data hack | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/594/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/594/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/594.diff",

"html_url": "https://github.com/huggingface/datasets/pull/594",

"merged_at": "2020-09-09T13:34:34Z",

"patch_url": "https://github.com/huggingface/datasets/pull/594.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/594"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/5088 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5088/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5088/comments | https://api.github.com/repos/huggingface/datasets/issues/5088/events | https://github.com/huggingface/datasets/issues/5088 | 1,400,530,412 | I_kwDODunzps5TemXs | 5,088 | load_datasets("json", ...) don't read local .json.gz properly | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | open | false | null | 2 | 2022-10-07T02:16:58Z | 2022-10-07T14:43:16Z | null | null | ## Describe the bug

I have a local `*.json.gz` file that `pandas.read_json(lines=True)` reads correctly, but `load_dataset("json")` loads it as empty (0 rows).

## Steps to reproduce the bug

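```python
import pandas as pd
from datasets import Dataset, DatasetDict, Features, Value, load_dataset

fpath = '/data/junwang/.cache/general/57b6f2314cbe0bc45dda5b78f0871df2/test.json.gz'

# Reference: read the gzipped JSON Lines file with pandas.
ds_panda = DatasetDict(
    test=Dataset.from_pandas(
        pd.read_json(fpath, lines=True)
    )
)

# Same file through the `json` builder, with an explicit schema.
ds_direct = load_dataset(
    'json',
    data_files={'test': fpath},
    features=Features(
        text_input=Value(dtype="string", id=None),
        text_output=Value(dtype="string", id=None),
    ),
)

len(ds_panda['test']), len(ds_direct['test'])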
```

## Expected results

Lines of `ds_panda['test']` and `ds_direct['test']` should match.

## Actual results

```
Using custom data configuration default-c0ef2598760968aa
Downloading and preparing dataset json/default to /data/junwang/.cache/huggingface/datasets/json/default-c0ef2598760968aa/0.0.0/e6070c77f18f01a5ad4551a8b7edfba20b8438b7cad4d94e6ad9378022ce4aab...
Dataset json downloaded and prepared to /data/junwang/.cache/huggingface/datasets/json/default-c0ef2598760968aa/0.0.0/e6070c77f18f01a5ad4551a8b7edfba20b8438b7cad4d94e6ad9378022ce4aab. Subsequent calls will reuse this data.
(62087, 0)
```

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version:

- Platform: Ubuntu 18.04.4 LTS

- Python version: 3.8.13

- PyArrow version: 9.0.0

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5088/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5088/timeline | null | null | null | null | false | [

"Hi @junwang-wish, thanks for reporting.\r\n\r\nUnfortunately, I'm not able to reproduce the bug. Which version of `datasets` are you using? Does the problem persist if you update `datasets`?\r\n```shell\r\npip install -U datasets\r\n``` ",

"Thanks @albertvillanova I updated `datasets` from `2.5.1` to `2.5.2` and tested copying the `json.gz` to a different directory and my mind was blown:\r\n\r\n```python\r\nfpath = '/data/junwang/.cache/general/57b6f2314cbe0bc45dda5b78f0871df2/test.json.gz'\r\nds_panda = DatasetDict(\r\n test=Dataset.from_pandas(\r\n pd.read_json(fpath, lines=True)\r\n )\r\n)\r\nds_direct = load_dataset(\r\n 'json', data_files={\r\n 'test': fpath\r\n }, features=Features(\r\n text_input=Value(dtype=\"string\", id=None),\r\n text_output=Value(dtype=\"string\", id=None)\r\n )\r\n)\r\nlen(ds_panda['test']), len(ds_direct['test'])\r\n```\r\nproduces \r\n```python\r\nUsing custom data configuration default-0e6cf24134163e8b\r\nFound cached dataset json (/data/junwang/.cache/huggingface/datasets/json/default-0e6cf24134163e8b/0.0.0/e6070c77f18f01a5ad4551a8b7edfba20b8438b7cad4d94e6ad9378022ce4aab)\r\n(1, 0)\r\n```\r\nbut then I ran below command to see if the same file in a different directory leads to same discrepancy\r\n```shell\r\ncp /data/junwang/.cache/general/57b6f2314cbe0bc45dda5b78f0871df2/test.json.gz tmp_test.json.gz\r\n```\r\nand so I ran\r\n```python\r\nfpath = 'tmp_test.json.gz'\r\nds_panda = DatasetDict(\r\n test=Dataset.from_pandas(\r\n pd.read_json(fpath, lines=True)\r\n )\r\n)\r\nds_direct = load_dataset(\r\n 'json', data_files={\r\n 'test': fpath\r\n }, features=Features(\r\n text_input=Value(dtype=\"string\", id=None),\r\n text_output=Value(dtype=\"string\", id=None)\r\n )\r\n)\r\nlen(ds_panda['test']), len(ds_direct['test'])\r\n```\r\nand behold, I get \r\n```python\r\nUsing custom data configuration default-f679b32ab0008520\r\nDownloading and preparing dataset json/default to /data/junwang/.cache/huggingface/datasets/json/default-f679b32ab0008520/0.0.0/e6070c77f18f01a5ad4551a8b7edfba20b8438b7cad4d94e6ad9378022ce4aab...\r\nDataset json downloaded and prepared to /data/junwang/.cache/huggingface/datasets/json/default-f679b32ab0008520/0.0.0/e6070c77f18f01a5ad4551a8b7edfba20b8438b7cad4d94e6ad9378022ce4aab. Subsequent calls will reuse this data.\r\n(1, 1)\r\n```\r\nThey match now !\r\n\r\nThis problem happens regardless of the shell I use (VScode jupyter extension or plain old Python REPL). \r\n\r\nI attached the `json.gz` here for reference: [test.json.gz](https://github.com/huggingface/datasets/files/9734843/test.json.gz)\r\n\r\n"

] |
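
One way to narrow down a row-count mismatch like the one in issue #5088 is to count the records with the standard library alone, independently of both pandas and the `datasets` cache. The sketch below assumes the file is UTF-8 JSON Lines (one object per line), as `pd.read_json(..., lines=True)` implies; the function names are hypothetical.

```python
import gzip
import json
from typing import Iterator


def iter_json_lines_gz(path: str) -> Iterator[dict]:
    """Yield one parsed record per non-empty line of a gzipped JSON Lines file."""
    # "rt" decompresses and decodes to text so the file can be iterated line by line.
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                yield json.loads(line)


def count_json_lines_gz(path: str) -> int:
    """Count records without loading the whole file into memory."""
    return sum(1 for _ in iter_json_lines_gz(path))
```

If this count matches pandas but `load_dataset` still returns fewer rows, the reporter's observation (the original path hit "Found cached dataset json", while a copy at a new path was freshly prepared and matched) may point at a stale cache entry; passing `download_mode="force_redownload"` to `load_dataset` is one way to rule that out.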

https://api.github.com/repos/huggingface/datasets/issues/3841 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3841/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3841/comments | https://api.github.com/repos/huggingface/datasets/issues/3841/events | https://github.com/huggingface/datasets/issues/3841 | 1,161,203,842 | I_kwDODunzps5FNpCC | 3,841 | Pyright reportPrivateImportUsage when `from datasets import load_dataset` | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 6 | 2022-03-07T10:24:04Z | 2023-02-18T19:14:03Z | 2023-02-13T13:48:41Z | null | ## Describe the bug

Pyright reports that `load_dataset` is not exported from the `datasets` module.

## Steps to reproduce the bug

Use an editor/IDE with the Pyright language server in its default configuration:

```python

from datasets import load_dataset

```

## Expected results

No complaint from Pyright.

## Actual results

Pyright reports the following:

```

`load_dataset` is not exported from module "datasets"

Import from "datasets.load" instead [reportPrivateImportUsage]

```

Importing from `datasets.load` does solve the problem, but I believe importing directly from the top-level `datasets` package is the intended usage according to the documentation.

## Environment info

- `datasets` version: 1.18.3

- Platform: macOS-12.2.1-arm64-arm-64bit

- Python version: 3.9.10

- PyArrow version: 7.0.0

| {

"+1": 3,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 3,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3841/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3841/timeline | null | completed | null | null | false | [

"Hi! \r\n\r\nThis issue stems from `datasets` having `py.typed` defined (see https://github.com/microsoft/pyright/discussions/3764#discussioncomment-3282142) - to avoid it, we would either have to remove `py.typed` (added to be compliant with PEP-561) or export the names with `__all__`/`from .submodule import name as name`.\r\n\r\nTransformers is fine as it no longer has `py.typed` (removed in https://github.com/huggingface/transformers/pull/18485)\r\n\r\nWDYT @lhoestq @albertvillanova @polinaeterna \r\n\r\n@sgugger's point makes sense - we should either be \"properly typed\" (have py.typed + mypy tests) or drop `py.typed` as Transformers did (I like this option better).\r\n\r\n(cc @Wauplin since `huggingface_hub` has the same issue.)",

"I'm fine with dropping it, but autotrain people won't be happy @SBrandeis ",

"> (cc @Wauplin since huggingface_hub has the same issue.)\r\n\r\nHmm maybe we have the same issue but I haven't been able to reproduce something similar to `\"load_dataset\" is not exported from module \"datasets\"` message (using VSCode+Pylance -that is powered by Pyright). `huggingface_hub` contains a `py.typed` file but the package itself is actually typed. We are running `mypy` in our CI tests since ~3 months and so far it seems to be ok. But happy to change if it causes some issues with linters.\r\n\r\nAlso the top-level [`__init__.py`](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/__init__.py) is quite different in `hfh` than `datasets` (at first glance). We have a section at the bottom to import all high level methods/classes in a `if TYPE_CHECKING` block.",

"@Wauplin I only get the error if I use Pyright's CLI tool or the Pyright extension (not sure why, but Pylance also doesn't report this issue on my machine)\r\n\r\n> Also the top-level [`__init__.py`](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/__init__.py) is quite different in `hfh` than `datasets` (at first glance). We have a section at the bottom to import all high level methods/classes in a `if TYPE_CHECKING` block.\r\n\r\nI tried to fix the issue with `TYPE_CHECKING`, but it still fails if `py.typed` is present.",

"@mariosasko thanks for the tip. I have been able to reproduce the issue as well. I would be up for including a (huge) static `__all__` variable in the `__init__.py` (since the file is already generated automatically in `hfh`) but honestly I don't think it's worth the hassle. \r\n\r\nI'll delete the `py.typed` file in `huggingface_hub` to be consistent between HF libraries. I opened a PR here: https://github.com/huggingface/huggingface_hub/pull/1329",

"I am getting this error in google colab today:\r\n\r\n\r\n\r\nThe code runs just fine too."

] |
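
The comments on issue #3841 name two ways to mark a name as publicly re-exported from a package that ships `py.typed`: a redundant alias (`from .submodule import name as name`) or an `__all__` list. The runnable sketch below builds a throwaway package, hypothetically named `mypkg`, that uses both (either one alone should satisfy Pyright) and imports it to confirm the re-export works at runtime. It does not run Pyright itself.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# __init__.py of the hypothetical package: load_dataset lives in mypkg/load.py
# and is re-exported at the top level.
INIT_PY = '''\
# Option 1: a redundant alias marks the import as an explicit re-export.
from .load import load_dataset as load_dataset

# Option 2: list the public names in __all__.
__all__ = ["load_dataset"]
'''

LOAD_PY = '''\
def load_dataset(path: str) -> str:
    return "loaded " + path
'''


def build_package(root: Path) -> Path:
    """Write mypkg/ (with a PEP 561 py.typed marker) under root and return its path."""
    pkg = root / "mypkg"
    pkg.mkdir()
    (pkg / "__init__.py").write_text(INIT_PY)
    (pkg / "load.py").write_text(LOAD_PY)
    (pkg / "py.typed").write_text("")  # marks the package as typed for type checkers
    return pkg


root = Path(tempfile.mkdtemp())
build_package(root)
sys.path.insert(0, str(root))
importlib.invalidate_caches()
mypkg = importlib.import_module("mypkg")
print(mypkg.__all__, mypkg.load_dataset("squad"))
```

Dropping `py.typed` instead, as `huggingface_hub` and Transformers did, makes Pyright stop applying these export rules to the package.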

https://api.github.com/repos/huggingface/datasets/issues/223 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/223/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/223/comments | https://api.github.com/repos/huggingface/datasets/issues/223/events | https://github.com/huggingface/datasets/issues/223 | 627,683,386 | MDU6SXNzdWU2Mjc2ODMzODY= | 223 | [Feature request] Add FLUE dataset | [

{

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset",

"id": 2067376369,

"name": "dataset request",

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request"

}

] | closed | false | null | 3 | 2020-05-30T08:52:15Z | 2020-12-03T13:39:33Z | 2020-12-03T13:39:33Z | null | Hi,

I think it would be interesting to add the FLUE dataset for francophones or anyone wishing to work on French.

In other requests, I read that you are already working on some datasets, and I was wondering if FLUE was planned.

If it is not the case, I can provide each of the cleaned FLUE datasets (in the form of a directly exploitable dataset rather than in the original xml formats which require additional processing, with the French part for cases where the dataset is based on a multilingual dataframe, etc.). | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/223/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/223/timeline | null | completed | null | null | false | [

"Hi @lbourdois, yes please share it with us",

"@mariamabarham \r\nI put all the datasets on this drive: https://1drv.ms/u/s!Ao2Rcpiny7RFinDypq7w-LbXcsx9?e=iVsEDh\r\n\r\n\r\nSome information : \r\n• For FLUE, the citation used is\r\n\r\n> @misc{le2019flaubert,\r\n> title={FlauBERT: Unsupervised Language Model Pre-training for French},\r\n> author={Hang Le and Loïc Vial and Jibril Frej and Vincent Segonne and Maximin Coavoux and Benjamin Lecouteux and Alexandre Allauzen and Benoît Crabbé and Laurent Besacier and Didier Schwab},\r\n> year={2019},\r\n> eprint={1912.05372},\r\n> archivePrefix={arXiv},\r\n> primaryClass={cs.CL}\r\n> }\r\n\r\n• The Github repo of FLUE is available here : https://github.com/getalp/Flaubert/tree/master/flue\r\n\r\n\r\n\r\nInformation related to the different tasks of FLUE : \r\n\r\n**1. Classification**\r\nThree dataframes are available: \r\n- Book\r\n- DVD\r\n- Music\r\nFor each of these dataframes, a training set and a test set are available, plus a third set containing unlabelled data.\r\n\r\nCitation : \r\n>@dataset{prettenhofer_peter_2010_3251672,\r\n author = {Prettenhofer, Peter and\r\n Stein, Benno},\r\n title = {{Webis Cross-Lingual Sentiment Dataset 2010 (Webis- \r\n CLS-10)}},\r\n month = jul,\r\n year = 2010,\r\n publisher = {Zenodo},\r\n doi = {10.5281/zenodo.3251672},\r\n url = {https://doi.org/10.5281/zenodo.3251672}\r\n}\r\n\r\n\r\n**2. Paraphrasing** \r\nFrench part of the PAWS-X dataset (https://github.com/google-research-datasets/paws).\r\nThree dataframes are available: \r\n- train\r\n- dev\r\n- test \r\n\r\nCitation : \r\n> @InProceedings{pawsx2019emnlp,\r\n> title = {{PAWS-X: A Cross-lingual Adversarial Dataset for Paraphrase Identification}},\r\n> author = {Yang, Yinfei and Zhang, Yuan and Tar, Chris and Baldridge, Jason},\r\n> booktitle = {Proc. of EMNLP},\r\n> year = {2019}\r\n> }\r\n\r\n\r\n\r\n**3. Natural Language Inference**\r\nFrench part of the XNLI dataset (https://github.com/facebookresearch/XNLI).\r\nThree dataframes are available: \r\n- train\r\n- dev\r\n- test \r\n\r\nFor the dev and test datasets, extra columns compared to the train dataset were available, so I left them in the dataframe (I didn't know whether these columns could be useful for other tasks). \r\nIn the context of the FLUE benchmark, only the columns gold_label, sentence1 and sentence2 are useful.\r\n\r\n\r\nCitation : \r\n\r\n> @InProceedings{conneau2018xnli,\r\n> author = \"Conneau, Alexis\r\n> and Rinott, Ruty\r\n> and Lample, Guillaume\r\n> and Williams, Adina\r\n> and Bowman, Samuel R.\r\n> and Schwenk, Holger\r\n> and Stoyanov, Veselin\",\r\n> title = \"XNLI: Evaluating Cross-lingual Sentence Representations\",\r\n> booktitle = \"Proceedings of the 2018 Conference on Empirical Methods\r\n> in Natural Language Processing\",\r\n> year = \"2018\",\r\n> publisher = \"Association for Computational Linguistics\",\r\n> location = \"Brussels, Belgium\",\r\n\r\n\r\n**4. Parsing**\r\nThe dataset used by the FLUE authors for this task is not freely available.\r\nUsers of your library will therefore not be able to access it.\r\nNevertheless, it may be useful to add a link to the site where this dataset can be requested: http://ftb.linguist.univ-paris-diderot.fr/telecharger.php?langue=en \r\n(personally, I received it less than 48 hours after requesting it).\r\n\r\n\r\n**5. Word Sense Disambiguation Tasks**\r\n5.1 Verb Sense Disambiguation\r\n\r\nTwo dataframes are available: train and test\r\nFor both dataframes, 4 columns are available: document, sentence, lemma and word.\r\nI created the document column thinking that there were several documents in the dataset, but it turned out that there were not: there are several sentences but only one document. It's up to you whether to keep it when importing these two dataframes.\r\n\r\nThe sentence column is used to determine which sentence the word in the word column belongs to. It is in the form of a dictionary {'id': 'd000.s001', 'idx': '1'}. I considered keeping only the idx, because the id doesn't carry any additional information. Nevertheless, for the test dataset, the dictionary has an extra value indicating the source of the sentence. I don't know whether it's useful, so I left the dictionary just in case. Users are free to do what they want with it.\r\n\r\nCitation : \r\n\r\n> Segonne, V., Candito, M., and Crabbé, B. (2019). Using Wiktionary as a resource for WSD: the case of French verbs. In Proceedings of the 13th International Conference on Computational Semantics - Long Papers, pages 259–270\r\n\r\n5.2 Noun Sense Disambiguation\r\nThree dataframes are available: 2 train and 1 test\r\n\r\nI confess I didn't fully understand the procedure for this task.\r\n\r\nCitation : \r\n\r\n> @dataset{loic_vial_2019_3549806,\r\n> author = {Loïc Vial},\r\n> title = {{French Word Sense Disambiguation with Princeton \r\n> WordNet Identifiers}},\r\n> month = nov,\r\n> year = 2019,\r\n> publisher = {Zenodo},\r\n> version = {1.0},\r\n> doi = {10.5281/zenodo.3549806},\r\n> url = {https://doi.org/10.5281/zenodo.3549806}\r\n> }\r\n\r\nFinally, additional information about FLUE is available in the FlauBERT publication : \r\nhttps://arxiv.org/abs/1912.05372 (p. 4).\r\n\r\n\r\nI hope I have provided you with everything you need to add this benchmark :) \r\n",

"https://github.com/huggingface/datasets/pull/943"

] |

https://api.github.com/repos/huggingface/datasets/issues/1518 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1518/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1518/comments | https://api.github.com/repos/huggingface/datasets/issues/1518/events | https://github.com/huggingface/datasets/pull/1518 | 764,045,722 | MDExOlB1bGxSZXF1ZXN0NTM4MzAyNzYy | 1,518 | Add twi text | [] | closed | false | null | 2 | 2020-12-12T16:52:02Z | 2020-12-13T18:53:37Z | 2020-12-13T18:53:37Z | null | Add Twi texts | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1518/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1518/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1518.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1518",

"merged_at": "2020-12-13T18:53:37Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1518.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1518"

} | true | [

"Hii please follow me",

"thank you"

] |

https://api.github.com/repos/huggingface/datasets/issues/4267 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4267/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4267/comments | https://api.github.com/repos/huggingface/datasets/issues/4267/events | https://github.com/huggingface/datasets/pull/4267 | 1,223,214,275 | PR_kwDODunzps43LzOR | 4,267 | Replace data URL in SAMSum dataset within the same repository | [] | closed | false | null | 1 | 2022-05-02T18:38:08Z | 2022-05-06T08:38:13Z | 2022-05-02T19:03:49Z | null | Replace data URL with one in the same repository. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4267/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4267/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4267.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4267",

"merged_at": "2022-05-02T19:03:49Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4267.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4267"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._"

] |

https://api.github.com/repos/huggingface/datasets/issues/1418 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1418/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1418/comments | https://api.github.com/repos/huggingface/datasets/issues/1418/events | https://github.com/huggingface/datasets/pull/1418 | 760,672,320 | MDExOlB1bGxSZXF1ZXN0NTM1NDY0NzQ4 | 1,418 | Add arabic dialects | [] | closed | false | null | 1 | 2020-12-09T21:06:07Z | 2020-12-17T09:40:56Z | 2020-12-17T09:40:56Z | null | Data loading script and dataset card for Dialectal Arabic Resources dataset.

Fixed git issues from PR #976 | {

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1418/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1418/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1418.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1418",

"merged_at": "2020-12-17T09:40:56Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1418.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1418"

} | true | [

"merging since the CI is fixed on master"

] |

https://api.github.com/repos/huggingface/datasets/issues/136 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/136/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/136/comments | https://api.github.com/repos/huggingface/datasets/issues/136/events | https://github.com/huggingface/datasets/pull/136 | 619,211,018 | MDExOlB1bGxSZXF1ZXN0NDE4NzgxNzI4 | 136 | Update README.md | [] | closed | false | null | 1 | 2020-05-15T20:01:07Z | 2020-05-17T12:17:28Z | 2020-05-17T12:17:28Z | null | small typo | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/136/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/136/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/136.diff",

"html_url": "https://github.com/huggingface/datasets/pull/136",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/136.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/136"

} | true | [

"Thanks, this was fixed with #135 :)"

] |

https://api.github.com/repos/huggingface/datasets/issues/2916 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2916/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2916/comments | https://api.github.com/repos/huggingface/datasets/issues/2916/events | https://github.com/huggingface/datasets/pull/2916 | 997,003,661 | PR_kwDODunzps4rx5ua | 2,916 | Add OpenAI's pass@k code evaluation metric | [] | closed | false | null | 4 | 2021-09-15T12:05:43Z | 2021-11-12T14:19:51Z | 2021-11-12T14:19:50Z | null | This PR introduces the `code_eval` metric which implements [OpenAI's code evaluation harness](https://github.com/openai/human-eval) introduced in the [Codex paper](https://arxiv.org/abs/2107.03374). It is heavily based on the original implementation and just adapts the interface to follow the `predictions`/`references` convention.
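
For reference, the Codex paper estimates pass@k from n generated candidates per problem, c of which pass the unit tests, as 1 - C(n-c, k) / C(n, k), averaged over problems. A minimal standard-library sketch of that estimator follows; the names `pass_at_k` and `mean_pass_at_k` are our own illustration and are not part of the `code_eval` metric's interface.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of generated candidates, c: number that pass the unit tests,
    k: evaluation budget (1 <= k <= n).
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("expected 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every subset of k candidates contains at least one passing candidate.
        return 1.0
    # 1 minus the probability that a random size-k subset contains only failures.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: Iterable[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems given as (n, c) pairs."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    return sum(scores) / len(scores)
```

With two candidates for a problem and one of them correct, this gives pass@1 = 0.5 and pass@2 = 1.0.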

The addition of this metric should enable the evaluation against the code evaluation datasets added in #2897 and #2893.

A few open questions:

- The implementation makes heavy use of multiprocessing which this PR does not touch. Is this conflicting with multiprocessing natively integrated in `datasets`?

- This metric executes generated Python code and therefore risks executing malicious code. OpenAI addresses this issue by 1) commenting out the `exec` call in the code, so that the user has to actively uncomment it and read the warning, and 2) suggesting the use of a sandbox environment (gVisor container). Should we add a similar safeguard, e.g. a prompt that must be answered when initialising the metric, or at least a warning message?

- Naming: the implementation sticks to the `predictions`/`references` naming; however, the references are not reference solutions but unit tests that check the solution. Reference solutions are also available, but they are not used. Should the naming be adapted? | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 1,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2916/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2916/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2916.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2916",

"merged_at": "2021-11-12T14:19:50Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2916.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2916"

} | true | [

"> The implementation makes heavy use of multiprocessing which this PR does not touch. Is this conflicting with multiprocessing natively integrated in datasets?\r\n\r\nIt should work normally, but feel free to test it.\r\nThere is some documentation about using metrics in a distributed setup that uses multiprocessing [here](https://huggingface.co/docs/datasets/loading.html?highlight=rank#distributed-setup)\r\nYou can test it by spawning several processes, each of which loads the metric. Then, in each process, add some references/predictions to the metric. Finally, call compute() in each process: on process 0 it should return the result computed on all the references/predictions.\r\n\r\nLet me know if you have questions or if I can help",

"Is there a good way to debug the Windows tests? I suspect it is an issue with `multiprocessing`, but I can't see the error messages.",

"Indeed it has an issue on windows.\r\nIn your example it's supposed to output\r\n```python\r\n{'pass@1': 0.5, 'pass@2': 1.0}\r\n```\r\nbut it gets\r\n```python\r\n{'pass@1': 0.0, 'pass@2': 0.0}\r\n```\r\n\r\nI'm not on my windows machine today so I can't take a look at it. I can dive into it early next week if you want",

"> I'm not on my windows machine today so I can't take a look at it. I can dive into it early next week if you want\r\n\r\nThat would be great - unfortunately I have no access to a windows machine at the moment. I am quite sure it is an issue in execute.py because of multiprocessing.\r\n"

] |

https://api.github.com/repos/huggingface/datasets/issues/1078 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1078/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1078/comments | https://api.github.com/repos/huggingface/datasets/issues/1078/events | https://github.com/huggingface/datasets/pull/1078 | 756,633,215 | MDExOlB1bGxSZXF1ZXN0NTMyMTUyMzgx | 1,078 | add AJGT dataset | [] | closed | false | null | 0 | 2020-12-03T22:16:31Z | 2020-12-04T09:55:15Z | 2020-12-04T09:55:15Z | null | Arabic Jordanian General Tweets. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1078/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1078/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1078.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1078",

"merged_at": "2020-12-04T09:55:15Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1078.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1078"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/5145 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5145/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5145/comments | https://api.github.com/repos/huggingface/datasets/issues/5145/events | https://github.com/huggingface/datasets/issues/5145 | 1,418,005,452 | I_kwDODunzps5UhQvM | 5,145 | Dataset order is not deterministic with ZIP archives and `iter_files` | [] | closed | false | null | 8 | 2022-10-21T09:00:03Z | 2022-10-27T09:51:49Z | 2022-10-27T09:51:10Z | null | ### Describe the bug

For the `beans` dataset (I did not try others), the order of samples is not the same on different machines. I tested on my local laptop, a GitHub Actions machine, and an EC2 instance, and each of the three yields a different order.

### Steps to reproduce the bug

In a clean docker container or conda environment with datasets==2.6.1, run

```python
from datasets import load_dataset
from pprint import pprint

data = load_dataset("beans", split="validation")
pprint(data["image_file_path"])
```

### Expected behavior

The order of the images is the same on all machines.
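
One way to make the order independent of the machine, in line with the sorting discussed in the comments on this issue, is to sort paths while enumerating extracted files instead of relying on the order in which the filesystem lists them. A minimal sketch, assuming the archive has already been extracted to a local directory; `iter_files_sorted` is a hypothetical helper, not the `dl_manager.iter_files` API.

```python
import os
from typing import Iterator


def iter_files_sorted(root: str) -> Iterator[str]:
    """Yield every file path under `root` in a filesystem-independent order."""
    for dirpath, dirnames, filenames in os.walk(root):
        # Sorting dirnames in place makes os.walk descend into subdirectories
        # in sorted order (this relies on the default topdown=True).
        dirnames.sort()
        for name in sorted(filenames):
            yield os.path.join(dirpath, name)
```

Files directly under `root` come first in sorted order, followed by each subdirectory in sorted order, so two machines holding the same extracted files produce the same sequence.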

### Environment info

On the EC2 instance:

```
- `datasets` version: 2.6.1
- Platform: Linux-4.14.291-218.527.amzn2.x86_64-x86_64-with-glibc2.2.5
- Python version: 3.7.10
- PyArrow version: 9.0.0
- Pandas version: 1.3.5
- Numpy version: not checked
```

On my local laptop:

```
- `datasets` version: 2.6.1
- Platform: Linux-5.15.0-50-generic-x86_64-with-glibc2.35
- Python version: 3.9.12
- PyArrow version: 7.0.0
- Pandas version: 1.3.5
- Numpy version: 1.23.1
```

On github actions:

```
- `datasets` version: 2.6.1
- Platform: Linux-5.15.0-1022-azure-x86_64-with-glibc2.2.5
- Python version: 3.8.14
- PyArrow version: 9.0.0
- Pandas version: 1.5.1
- Numpy version: 1.23.4
``` | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5145/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5145/timeline | null | completed | null | null | false | [

"Thanks for reporting ! The issue doesn't come from shuffling, but from `beans` row order not being deterministic:\r\n\r\nhttps://huggingface.co/datasets/beans/blob/main/beans.py uses `dl_manager.iter_files` on ZIP archives and the file order doesn't seem to be deterministic and changes across machines",

"Thank you for noticing indeed!",

"This is still a bug, so I'd keep this one open if you don't mind ;)",

"Besides the linked PR, to make the loading process fully deterministic, I believe we should also sort the data files [here](https://github.com/huggingface/datasets/blob/df4bdd365f2abb695f113cbf8856a925bc70901b/src/datasets/data_files.py#L276) and [here](https://github.com/huggingface/datasets/blob/df4bdd365f2abb695f113cbf8856a925bc70901b/src/datasets/data_files.py#L485) (e.g. fsspec's `LocalFileSystem.glob` relies on `os.scandir`, which yields the contents in arbitrary order). My concern is the overhead of these sorts... Maybe we could introduce a new flag to `load_dataset` similar to TFDS' [`shuffle_files`](https://www.tensorflow.org/datasets/determinism#determinism_when_reading) or sort only if the number of data files is small?",

"We already return the result sorted at the end of `_resolve_single_pattern_locally` and `_resolve_single_pattern_in_dataset_repository` if I'm not mistaken",

"@lhoestq Oh, you are right. Feel free to ignore my comment.",

"I think the corresponding PR is ready to be merged :hugs: ",

"@albertvillanova Thanks for the fix!"

] |

https://api.github.com/repos/huggingface/datasets/issues/2187 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2187/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2187/comments | https://api.github.com/repos/huggingface/datasets/issues/2187/events | https://github.com/huggingface/datasets/issues/2187 | 852,939,736 | MDU6SXNzdWU4NTI5Mzk3MzY= | 2,187 | Question (potential issue?) related to datasets caching | [

{

"color": "d876e3",

"default": true,

"description": "Further information is requested",

"id": 1935892912,

"name": "question",

"node_id": "MDU6TGFiZWwxOTM1ODkyOTEy",

"url": "https://api.github.com/repos/huggingface/datasets/labels/question"

}

] | open | false | null | 15 | 2021-04-08T00:16:28Z | 2023-01-03T18:30:38Z | null | null | I thought I had disabled datasets caching in my code, as follows:

```
from datasets import set_caching_enabled
...
def main():
    # disable caching in datasets
    set_caching_enabled(False)
```

However, in my log files I see messages like the following:

```
04/07/2021 18:34:42 - WARNING - datasets.builder - Using custom data configuration default-888a87931cbc5877
04/07/2021 18:34:42 - WARNING - datasets.builder - Reusing dataset csv (xxxx/cache-transformers/datasets/csv/default-888a87931cbc5877/0.0.0/965b6429be0fc05f975b608ce64e1fa941cc8fb4f30629b523d2390f3c0e1a93
```
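
As explained further down in this thread, the `default-…` suffix is a hash that covers the loader (and its source code), the arguments passed to it, and metadata of the local data files such as their timestamps, so an unchanged `train.csv` is reused while a rewritten one gets a new directory. The sketch below only illustrates that idea with the standard library; `config_id` is a hypothetical, simplified function (it does not hash loader source code) and is not how `datasets` actually computes the hash.

```python
import hashlib
import json
import os
from typing import Dict, List


def config_id(loader_name: str, loader_kwargs: Dict[str, object], data_files: List[str]) -> str:
    """Illustrative cache key built from the loader, its arguments and file metadata."""
    h = hashlib.sha256()
    h.update(loader_name.encode("utf-8"))
    # sort_keys makes the key independent of keyword-argument order.
    h.update(json.dumps(loader_kwargs, sort_keys=True).encode("utf-8"))
    for path in sorted(data_files):
        stat = os.stat(path)
        h.update(os.path.abspath(path).encode("utf-8"))
        # A rewritten file gets a new modification time, hence a new key.
        h.update(str(stat.st_mtime_ns).encode("utf-8"))
        h.update(str(stat.st_size).encode("utf-8"))
    return "default-" + h.hexdigest()[:16]
```

Because the key depends only on these inputs, any process computing it for the same unchanged file arrives at the same directory, which is why a prepared dataset can be reused even when transform caching is disabled.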

Can you please let me know what this "Reusing dataset csv" message means? I wouldn't expect anything to be reused with datasets caching disabled. Thank you! | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2187/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2187/timeline | null | null | null | null | false | [

"An educated guess: does this refer to the fact that depending on the custom column names in the dataset files (csv in this case), there is a dataset loader being created? and this dataset loader - using the \"custom data configuration\" is used among all jobs running using this particular csv files? (thinking out loud here...)\r\n\r\nIf this is the case, it may be ok for my use case (have to think about it more), still a bit surprising given that datasets caching is disabled (or so I hope) by the lines I pasted above. ",

"Hi ! Currently, disabling caching means that all dataset transforms like `map`, `filter`, etc. ignore the cache: they neither write nor read processed cache files.\r\nHowever, `load_dataset` reuses datasets that have already been prepared: it does reload prepared dataset files.\r\n\r\nIndeed from the documentation:\r\n> datasets.set_caching_enabled(boolean: bool)\r\n\r\n> When applying transforms on a dataset, the data are stored in cache files. The caching mechanism allows to reload an existing cache file if it’s already been computed.\r\n> Reloading a dataset is possible since the cache files are named using the dataset fingerprint, which is updated after each transform.\r\n> If disabled, the library will no longer reload cached datasets files when applying transforms to the datasets. More precisely, if the caching is disabled:\r\n> - cache files are always recreated\r\n> - cache files are written to a temporary directory that is deleted when session closes\r\n> - cache files are named using a random hash instead of the dataset fingerprint - use datasets.Dataset.save_to_disk() to save a transformed dataset or it will be deleted when session closes\r\n> - caching doesn’t affect datasets.load_dataset(). If you want to regenerate a dataset from scratch you should use the download_mode parameter in datasets.load_dataset().",

"Thank you for the clarification. \r\n\r\nThis is a bit confusing. On one hand, it says that cache files are always recreated and written to a temporary directory that is removed; on the other hand the last bullet point makes me think that since the default according to the docs for `download_mode (Optional datasets.GenerateMode) – select the download/generate mode - Default to REUSE_DATASET_IF_EXISTS` => it almost sounds that it could reload prepared dataset files. Where are these files stored? I guess not in the temporary directory that is removed... \r\n\r\nI find this type of api design error-prone. When I see as a programmer `datasets.set_caching_enabled(False)` I expect no reuse of anything in the cache. ",

"It would be nice if the documentation elaborated on all the possible values for `download_mode` and/or a link to `datasets.GenerateMode`. \r\nThis info here:\r\n```\r\n \"\"\"`Enum` for how to treat pre-existing downloads and data.\r\n The default mode is `REUSE_DATASET_IF_EXISTS`, which will reuse both\r\n raw downloads and the prepared dataset if they exist.\r\n The generations modes:\r\n | | Downloads | Dataset |\r\n | -----------------------------------|-----------|---------|\r\n | `REUSE_DATASET_IF_EXISTS` (default)| Reuse | Reuse |\r\n | `REUSE_CACHE_IF_EXISTS` | Reuse | Fresh |\r\n | `FORCE_REDOWNLOAD` | Fresh | Fresh |\r\n```",

"I have another question. Assuming that I understood correctly and there is reuse of datasets files when caching is disabled (!), I'm guessing there is a directory that is created based on some information on the dataset file. I'm interested in the situation where I'm loading a (custom) dataset from local disk. What information is used to create the directory/filenames where the files are stored?\r\n\r\nI'm concerned about the following scenario: if I have a file, let's say `train.csv` at path `the_path`, run once, the dataset is prepared, some models are run, etc. Now let's say there is an issue and I recreate `train.csv` at the same path `the_path`. Is there enough information in the temporary name/hash to *not* reload the *old* prepared dataset (e.g., timestamp of the file)? Or is it going to reload the *old* prepared file? ",

"Thanks for the feedback, we'll work in improving this aspect of the documentation.\r\n\r\n> Where are these files stored? I guess not in the temporary directory that is removed...\r\n\r\nWe're using the Arrow file format to load datasets. Therefore each time you load a dataset, it is prepared as an arrow file on your disk. By default the file is located in the ~/.cache/huggingface/datasets/<dataset_name>/<config_id>/<version> directory.\r\n\r\n> What information is used to create the directory/filenames where the files are stored?\r\n\r\nThe config_id contains a hash that takes into account:\r\n- the dataset loader used and its source code (e.g. the \"csv\" loader)\r\n- the arguments passed to the loader (e.g. the csv delimiter)\r\n- metadata of the local data files if any (e.g. their timestamps)\r\n\r\n> I'm concerned about the following scenario: if I have a file, let's say train.csv at path the_path, run once, the dataset is prepared, some models are run, etc. Now let's say there is an issue and I recreate train.csv at the same path the_path. Is there enough information in the temporary name/hash to not reload the old prepared dataset (e.g., timestamp of the file)? Or is it going to reload the old prepared file?\r\n\r\nYes the timestamp of the local csv file is taken into account. If you edit your csv file, the config_id will change and loading the dataset will create a new arrow file.",

"Thank you for all your clarifications, really helpful! \r\n\r\nIf you have the bandwidth, please do revisit the api wrt cache disabling. Anywhere in the computer stack (hardware included) where you disable the cache, one assumes there is no caching that happens. ",

"That makes total sense indeed !\r\nI think we can do the change",

"I have another question about caching, this time in the case where FORCE_REDOWNLOAD is used to load the dataset, the datasets cache is one directory as defined by HF_HOME and there are multiple concurrent jobs running in a cluster using the same local dataset (i.e., same local files in the cluster). Does anything in the naming convention and/or file access/locking that you're using prevent race conditions between the concurrent jobs on the caching of the local dataset they all use?\r\n\r\nI noticed some errors (can provide more details if helpful) in load_dataset/prepare_split that lead to my question above. \r\n\r\nLet me know if my question is clear, I can elaborate more if needed @lhoestq Thank you!",

"I got another error that convinces me there is a race condition (one of the test files had zero samples at prediction time). I think it comes down to the fact that the `config_id` above (used in the naming for the cache) has no information on who's touching the data. If I have 2 concurrent jobs, both loading the same dataset and forcing redownload, they may interfere with each other's caching of the dataset. ",

"We're using a locking mechanism to prevent two processes from writing at the same time. The locking is based on the `filelock` module.\r\nAlso directories that are being written use a suffix \".incomplete\" so that reading is not possible on a dataset being written.\r\n\r\nDo you think you could provide a simple code to reproduce the race condition you experienced ?",

"I can provide details about the code I'm running (it's really-really close to some official samples from the huggingface transformers examples, I can point to the exact sample file, I kept a record of that). I can also describe in which conditions this race occurs (I'm convinced it has to do with forcing the redownloading of the dataset, I've been running hundreds of experiments before and didn't have a problem before I forced the redownload). I also can provide samples of the different stack errors I get and some details about the level of concurrency of jobs I was running. I can also try to imagine how the race manifests (I'm fairly sure that it's a combo of one job cleaning up and another job being in the middle of the run).\r\n\r\nHowever, I have to clean all this up to make sure I'm not spilling any info I shouldn't be spilling. I'll try to do it by the end of the week, if you think all this is helpful. \r\n\r\nFor now, I have a workaround: I don't force redownloading. And to be ultra careful (although I don't think this is a problem), I run a series of jobs that will prepare the datasets and I know there is no concurrency wrt the dataset. Once that's done (and I believe even having multiple jobs loading the datasets at the same time doesn't create problems, as long as REUSE_DATASET_IF_EXISTS is the policy for loading the dataset, so the filelock mechanism you're using is working in that scenario), the prepared datasets will be reused, no race possible in any way. \r\n\r\nThanks for all the details you provided, they helped me understand the underlying implementation and come up with workarounds when I ran into issues. ",

"Hi! I have the same challenge with caching, where the **.cache** folder is required even though writing one isn't possible in my environment.\r\n\r\nI'd like to run transformers in Snowflake using Snowpark for Python; this would let me provide configurable transformers in real time for business users without data leaving the environment (for security reasons). With no need for data transfer, the compute is faster. It is a large use case - is it possible to entirely disable caching in certain scenarios?\r\n@lhoestq ?\r\n",

"You can try to change the location of the cache folder using the `HF_CACHE_HOME` environment variable, and set a location where you have read/write access.",

"Thanks @lhoestq \r\n\r\nI wanted to do that, however, snowflake does not allow it to write at all. I'm asking around to see if they can help me out with that issue 😅"

] |

https://api.github.com/repos/huggingface/datasets/issues/6003 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/6003/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/6003/comments | https://api.github.com/repos/huggingface/datasets/issues/6003/events | https://github.com/huggingface/datasets/issues/6003 | 1,786,554,110 | I_kwDODunzps5qfKb- | 6,003 | interleave_datasets & DataCollatorForLanguageModeling having a conflict ? | [] | open | false | null | 0 | 2023-07-03T17:15:31Z | 2023-07-03T17:15:31Z | null | null | ### Describe the bug

Hi everyone :)

I have two local & custom datasets (1 "sentence" per line), which I split 95/5 for pre-training a BERT model. I use a modified version of `run_mlm.py` so that I can make use of `interleave_datasets`:

- `tokenize()` runs fine

- `group_texts()` runs fine

Every time, at step 19, I get:

```pytb
  File "env/lib/python3.9/site-packages/transformers/data/data_collator.py", line 779, in torch_mask_tokens
    inputs[indices_random] = random_words[indices_random]
RuntimeError: Index put requires the source and destination dtypes match, got Float for the destination and Long for the source.
```

I tried:

- training without interleave on dataset 1, it runs

- training without interleave on dataset 2, it runs

- training without `.to_iterable_dataset()`: it hangs, then crashes

- training without `group_texts()` and padding to `max_length` instead seemed to fix the issue, but that may just have pushed the error to a much later step.

I might have coded something wrong, but I can't see what.

### Steps to reproduce the bug

I have this function:

```py
def build_dataset(path: str, percent: str):
    dataset = load_dataset(
        "text",
        data_files={"train": [path]},
        split=f"train[{percent}]"
    )
    dataset = dataset.map(
        lambda examples: tokenize(examples["text"]),
        batched=True,
        num_proc=num_proc,
    )
    dataset = dataset.map(
        group_texts,
        batched=True,
        num_proc=num_proc,
        desc=f"Grouping texts in chunks of {tokenizer.max_seq_length}",
        remove_columns=["text"]
    )
    print(len(dataset))
    return dataset.to_iterable_dataset()
```

I hardcoded `group_texts` (with a block size of 512):

```py
def group_texts(examples):
    # Concatenate all texts.
    concatenated_examples = {k: list(chain(*examples[k])) for k in examples.keys()}
    total_length = len(concatenated_examples[list(examples.keys())[0]])
    # We drop the small remainder, and if the total_length < max_seq_length we exclude this batch and return an empty dict.
    # We could add padding if the model supported it instead of this drop, you can customize this part to your needs.
    total_length = (total_length // 512) * 512
    # Split by chunks of max_len.
    result = {
        k: [t[i: i + 512] for i in range(0, total_length, 512)]
        for k, t in concatenated_examples.items()
    }
    # result = {k: [el for el in elements if el] for k, elements in result.items()}
    return result
```
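To see what the concatenate-then-split step does in isolation, here is a minimal, self-contained re-run of the same logic with a small hypothetical block size of 4 instead of 512 (the name `group_texts_sketch` and the toy token ids are made up for illustration). It shows that the remainder is dropped, and that a batch with fewer tokens than the block size comes back with an empty list for every column.

```python
from itertools import chain


def group_texts_sketch(examples, block_size=4):
    # Concatenate every column across the batch, as group_texts does.
    concatenated = {k: list(chain(*examples[k])) for k in examples.keys()}
    total_length = len(concatenated[list(examples.keys())[0]])
    # Drop the remainder that does not fill a whole block.
    total_length = (total_length // block_size) * block_size
    # Split each column into block_size-sized chunks.
    return {
        k: [t[i: i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated.items()
    }


print(group_texts_sketch({"input_ids": [[1, 2, 3], [4, 5, 6, 7], [8]]}))
# {'input_ids': [[1, 2, 3, 4], [5, 6, 7, 8]]}

print(group_texts_sketch({"input_ids": [[1, 2], [3]]}))
# {'input_ids': []}
```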

And then I build datasets using the following code:

```py
train1 = build_dataset("d1.txt", ":95%")
train2 = build_dataset("d2.txt", ":95%")
dev1 = build_dataset("d1.txt", "95%:")
dev2 = build_dataset("d2.txt", "95%:")
```

and finally I run

```py
train_dataset = interleave_datasets(
    [train1, train2],
    probabilities=[0.8, 0.2],
    seed=42
)
eval_dataset = interleave_datasets(
    [dev1, dev2],
    probabilities=[0.8, 0.2],
    seed=42
)
```

Then I run the training part, which remains mostly untouched:

> CUDA_VISIBLE_DEVICES=1 python custom_dataset.py --model_type bert --per_device_train_batch_size 32 --do_train --output_dir /var/mlm/training-bert/model --max_seq_length 512 --save_steps 10000 --save_total_limit 3 --auto_find_batch_size --logging_dir ./logs-bert --learning_rate 0.0001 --do_train --num_train_epochs 25 --warmup_steps 10000 --max_step 45000 --fp16

### Expected behavior

The model should then train normally, but fails every time at the same step (19).

Printing the variables at `inputs[indices_random] = random_words[indices_random]` shows a magnificent empty tensor (, 32) [if I remember correctly].

### Environment info

transformers[torch] 4.30.2

Ubuntu

A100 0 CUDA 12

Driver Version: 525.116.04 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/6003/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/6003/timeline | null | null | null | null | false | [] |

https://api.github.com/repos/huggingface/datasets/issues/217 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/217/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/217/comments | https://api.github.com/repos/huggingface/datasets/issues/217/events | https://github.com/huggingface/datasets/issues/217 | 627,128,403 | MDU6SXNzdWU2MjcxMjg0MDM= | 217 | Multi-task dataset mixing | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

},

{

"color": "c5def5",

"default": false,

"description": "Generic discussion on the library",

"id": 2067400324,

"name": "generic discussion",

"node_id": "MDU6TGFiZWwyMDY3NDAwMzI0",

"url": "https://api.github.com/repos/huggingface/datasets/labels/generic%20discussion"

}

] | open | false | null | 26 | 2020-05-29T09:22:26Z | 2022-10-22T00:45:50Z | null | null | It seems like many of the best performing models on the GLUE benchmark make some use of multitask learning (simultaneous training on multiple tasks).

The [T5 paper](https://arxiv.org/pdf/1910.10683.pdf) highlights multiple ways of mixing the tasks together during finetuning:

- **Examples-proportional mixing** - sample from tasks proportionally to their dataset size

- **Equal mixing** - sample uniformly from each task

- **Temperature-scaled mixing** - The generalized approach used by multilingual BERT, which uses a temperature T: the mixing rate of each task is raised to the power 1/T and the rates are renormalized. When T=1 this is equivalent to examples-proportional mixing, and it becomes closer to equal mixing as T increases.
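All three strategies can be expressed as one function of the per-task dataset sizes. The sketch below is a minimal illustration, assuming the T5-style size cap `K` (here `size_limit`) is applied before the temperature; the name `mixing_rates` is hypothetical and not part of any library.

```python
def mixing_rates(sizes, temperature=1.0, size_limit=None):
    """Return per-task sampling probabilities.

    sizes: number of examples in each task.
    temperature: T; 1.0 gives examples-proportional mixing,
        larger values move the rates toward equal mixing.
    size_limit: optional cap K applied to each size (T5-style).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    capped = [min(n, size_limit) if size_limit is not None else n for n in sizes]
    total = sum(capped)
    # Examples-proportional rates.
    rates = [n / total for n in capped]
    # Temperature scaling: raise each rate to 1/T, then renormalize.
    scaled = [r ** (1.0 / temperature) for r in rates]
    norm = sum(scaled)
    return [s / norm for s in scaled]


sizes = [90_000, 9_000, 1_000]
print(mixing_rates(sizes))                   # examples-proportional
print(mixing_rates(sizes, temperature=2.0))  # flatter distribution
print(mixing_rates(sizes, temperature=1e6))  # close to equal mixing
```

Equal mixing is the limit of large T, so a single `temperature` parameter (plus the optional cap) covers all three cases.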

Following this discussion https://github.com/huggingface/transformers/issues/4340 in [transformers](https://github.com/huggingface/transformers), @enzoampil suggested that the `nlp` library might be a better place for this functionality.

Some method for combining datasets could be implemented, e.g.:

```
dataset = nlp.load_multitask(['squad','imdb','cnn_dm'], temperature=2.0, ...)
```
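A mixer of this kind could pick which task to draw from at each step using per-task weights. The sketch below is only an illustration on in-memory Python lists, not an `nlp` API: the name `mix_tasks` is hypothetical, it relies on the standard library's `random.choices` with a seeded `random.Random`, and it cycles through each task's examples so that small tasks can be oversampled.

```python
import itertools
import random


def mix_tasks(tasks, weights, num_examples, seed=0):
    """Yield (task_name, example) pairs, choosing a task per step by weight.

    tasks: dict mapping task name -> non-empty list of examples.
    weights: dict mapping task name -> sampling weight (need not sum to 1).
    """
    names = list(tasks)
    if any(len(tasks[name]) == 0 for name in names):
        raise ValueError("every task needs at least one example")
    rng = random.Random(seed)
    # Cycle each task so small tasks can be revisited (oversampled).
    iterators = {name: itertools.cycle(tasks[name]) for name in names}
    task_weights = [weights[name] for name in names]
    for _ in range(num_examples):
        name = rng.choices(names, weights=task_weights, k=1)[0]
        yield name, next(iterators[name])


tasks = {"squad": ["q1", "q2", "q3"], "imdb": ["r1", "r2"]}
for task, example in mix_tasks(tasks, {"squad": 0.8, "imdb": 0.2}, num_examples=5, seed=42):
    print(task, example)
```

The weights could come from any of the three mixing strategies; keeping them as a plain argument separates "how to weight tasks" from "how to draw examples".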

We would need a few additions:

- Method of identifying the tasks - how can we support adding a string to each task as an identifier, e.g. 'summarisation: '?

- Method of combining the metrics - a standard approach is to use the specific metric for each task and add them together for a combined score.
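Both additions are small on the user side. As a minimal sketch (the helpers `add_task_prefix` and `combined_score` and the example scores are hypothetical), a task identifier can be prepended to each input, and per-task metric values can be added together as described above; averaging instead of summing would only rescale the combined score by the number of tasks.

```python
def add_task_prefix(example, prefix, text_column="input"):
    """Return a copy of `example` whose text starts with a task identifier."""
    new_example = dict(example)
    new_example[text_column] = prefix + example[text_column]
    return new_example


def combined_score(per_task_scores):
    """Combine task-specific metric values by adding them together."""
    return sum(per_task_scores.values())


print(add_task_prefix({"input": "The article text ..."}, "summarisation: "))
# {'input': 'summarisation: The article text ...'}

print(combined_score({"squad_f1": 88.5, "imdb_accuracy": 94.0}))  # made-up scores
# 182.5
```

Because `add_task_prefix` takes and returns a dict, it could be applied to a whole dataset with `dataset.map(lambda ex: add_task_prefix(ex, "summarisation: "))`.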

It would be great to support common use cases such as pretraining on the GLUE benchmark before fine-tuning on each GLUE task in turn.

I'm willing to write bits/most of this; I just need some guidance on the interface and other library details so I can integrate it properly.

| {

"+1": 12,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 12,

"url": "https://api.github.com/repos/huggingface/datasets/issues/217/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/217/timeline | null | null | null | null | false | [

"I like this feature! I think the first question we should decide on is how to convert all datasets into the same format. In T5, the authors decided to format every dataset into a text-to-text format. If the dataset had \"multiple\" inputs like MNLI, the inputs were concatenated. So in MNLI the input:\r\n\r\n> - **Hypothesis**: The St. Louis Cardinals have always won.\r\n> \r\n> - **Premise**: yeah well losing is i mean i’m i’m originally from Saint Louis and Saint Louis Cardinals when they were there were uh a mostly a losing team but \r\n\r\nwas flattened to a single input:\r\n\r\n> mnli hypothesis: The St. Louis Cardinals have always won. premise:\r\n> yeah well losing is i mean i’m i’m originally from Saint Louis and Saint Louis Cardinals\r\n> when they were there were uh a mostly a losing team but.\r\n\r\nThis flattening is actually a very simple operation in `nlp` already. You would just need to do the following:\r\n\r\n```python \r\ndef flatten_inputs(example):\r\n return {\"input\": \"mnli hypothesis: \" + example['hypothesis'] + \" premise: \" + example['premise']}\r\n\r\nt5_ready_mnli_ds = mnli_ds.map(flatten_inputs, remove_columns=[<all columns except output>])\r\n```\r\n\r\nSo I guess converting the datasets into the same format can be left to the user for now. \r\nThen the question is how we can merge the datasets. I would probably be in favor of a simple \r\n\r\n```python \r\ndataset.add()\r\n```\r\n\r\nfunction that checks if the dataset is of the same format and if yes merges the two datasets. Finally, how should the sampling be implemented? **Examples-proportional mixing** corresponds to just merging the datasets and shuffling. For the other two sampling approaches we would need some higher-level features, maybe even a `dataset.sample()` function for merged datasets. \r\n\r\nWhat are your thoughts on this @thomwolf @lhoestq @ghomasHudson @enzoampil ?",

"I agree that we should leave the flattening of the dataset to the user for now. Especially because although the T5 framing seems obvious, there are slight variations on how the T5 authors do it in comparison to other approaches such as GPT-3 and decaNLP.\r\n\r\nIn terms of sampling, Examples-proportional mixing does seem the simplest to implement so would probably be a good starting point.\r\n\r\nTemperature-scaled mixing would probably be most useful, offering flexibility as it can simulate the other 2 methods by setting the temperature parameter. There is a [relevant part of the T5 repo](https://github.com/google-research/text-to-text-transfer-transformer/blob/03c94165a7d52e4f7230e5944a0541d8c5710788/t5/data/utils.py#L889-L1118) which should help with implementation.\r\n\r\nAccording to the T5 authors, equal-mixing performs worst. Among the other two methods, tuning the K value (the artificial dataset size limit) has a large impact.\r\n",

"I agree with going with temperature-scaled mixing for its flexibility!\r\n\r\nFor the function that combines the datasets, I also find `dataset.add()` okay while also considering that users may want it to be easy to combine a list of say 10 data sources in one go.\r\n\r\n`dataset.sample()` should also be good. By the looks of it, we're planning to have as main parameters: `temperature`, and `K`.\r\n\r\nOn converting the datasets to the same format, I agree that we can leave these to the users for now. But, I do imagine it'd be an awesome feature for the future to have this automatically handled, based on a chosen *approach* to formatting :smile: \r\n\r\nE.g. T5, GPT-3, decaNLP, original raw formatting, or a contributed way of formatting in text-to-text. ",