url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | labels | state | locked | milestone | comments | created_at | updated_at | closed_at | active_lock_reason | body | reactions | timeline_url | performed_via_github_app | state_reason | draft | pull_request | is_pull_request | comments_text |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/3421 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3421/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3421/comments | https://api.github.com/repos/huggingface/datasets/issues/3421/events | https://github.com/huggingface/datasets/pull/3421 | 1,077,966,571 | PR_kwDODunzps4vuvJK | 3,421 | Adding mMARCO dataset | [

{

"color": "0e8a16",

"default": false,

"description": "Contribution to a dataset script",

"id": 4564477500,

"name": "dataset contribution",

"node_id": "LA_kwDODunzps8AAAABEBBmPA",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20contribution"

}

] | closed | false | null | 7 | 2021-12-13T00:56:43Z | 2022-10-03T09:37:15Z | 2022-10-03T09:37:15Z | null | Adding mMARCO (v1.1) to HF datasets. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3421/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3421/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3421.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3421",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/3421.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3421"

} | true | [

"Hi @albertvillanova we've made a major overhaul of the loading script including all configurations we're making available. Could you please review it again?",

"@albertvillanova :ping_pong: ",

"Thanks @lhbonifacio for adding this dataset.\r\nHi there, i got an error about mmarco:\r\nConnectionError: Couldn't reach 'unicamp-dl/mmarco' on the Hub (ConnectionError)\r\ncode:\r\n`from datasets import list_datasets, load_dataset\r\ndataset = load_dataset('unicamp-dl/mmarco', language='portuguese')`\r\n\r\nAny help will be appreciated!",

"Hi @catqaq, we updated the loading script. Now you can load the datasets with:\r\n\r\n```python\r\ndataset = load_dataset('unicamp-dl/mmarco', 'portuguese')\r\n```\r\n\r\nYou can check the list of supported languages and usage examples in [this link](https://huggingface.co/datasets/unicamp-dl/mmarco). Feel free to contact us if you have any issues.",

"\r\n\r\n\r\n> \r\n\r\n\r\n\r\n> Hi @catqaq, we updated the loading script. Now you can load the datasets with:\r\n> \r\n> ```python\r\n> dataset = load_dataset('unicamp-dl/mmarco', 'portuguese')\r\n> ```\r\n> \r\n> You can check the list of supported languages and usage examples in [this link](https://huggingface.co/datasets/unicamp-dl/mmarco). Feel free to contact us if you have any issues.\r\n\r\nThanks for your quick updates. So, how can i get the fixed version, install from the source? It seems that the merging is blocked.",

"@catqaq you can load mMARCO using the namespace `unicamp-dl/mmarco` while this PR remains under review.",

"Thanks for your contribution, @lhbonifacio and @hugoabonizio. And sorry for the late response.\r\n\r\nWe are removing the dataset scripts from this GitHub repo and moving them to the Hugging Face Hub: https://huggingface.co/datasets\r\n\r\nAs you already created this dataset under your organization namespace (https://huggingface.co/datasets/unicamp-dl/mmarco), I think we can safely close this PR.\r\n\r\nWe would suggest you complete your dataset card with the YAML tags, to make it searchable and discoverable.\r\n\r\nPlease, feel free to tell us if you need some help."

] |

https://api.github.com/repos/huggingface/datasets/issues/3447 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3447/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3447/comments | https://api.github.com/repos/huggingface/datasets/issues/3447/events | https://github.com/huggingface/datasets/issues/3447 | 1,082,539,790 | I_kwDODunzps5Ahj8O | 3,447 | HF_DATASETS_OFFLINE=1 didn't stop datasets.builder from downloading | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 3 | 2021-12-16T18:51:13Z | 2022-02-17T14:16:27Z | 2022-02-17T14:16:27Z | null | ## Describe the bug

According to https://huggingface.co/docs/datasets/loading_datasets.html#loading-a-dataset-builder, setting HF_DATASETS_OFFLINE to 1 should make datasets "run in full offline mode". It didn't work for me. At the very beginning, datasets still tried to download the "custom data configuration" for JSON, even though I had already run the program once and cached all data in the same --cache_dir.

"Downloading" is not an issue when running on local disk, but it often crashes with cloud storage because (1) multiple GPU processes try to access the same file, AND (2) FileLocker fails to synchronize all processes due to storage throttling. 99% of the time, when the main process releases FileLocker, the file is not actually ready for access in cloud storage, which triggers "FileNotFound" errors in all other processes. Another way to resolve the problem would be to investigate super reliable cloud storage, but that's out of scope here.

## Steps to reproduce the bug

```

export HF_DATASETS_OFFLINE=1

python run_clm.py --model_name_or_path=models/gpt-j-6B --train_file=trainpy.v2.train.json --validation_file=trainpy.v2.eval.json --cache_dir=datacache/trainpy.v2

```

## Expected results

datasets should stop all "downloading" behavior and reuse the cached JSON configuration. I think the problem is that part of the cache directory path, "default-471372bed4b51b53", is randomly generated and can change if some parameters change. I didn't find a way to use a fixed path that ensures datasets reuses the cached data every time.

## Actual results

The log shows that datasets is still downloading into "datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/c2d554c3377ea79c7664b93dc65d0803b45e3279000f993c7bfd18937fd7f426".

```

12/16/2021 10:25:59 - WARNING - datasets.builder - Using custom data configuration default-471372bed4b51b53

12/16/2021 10:25:59 - INFO - datasets.builder - Generating dataset json (datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/c2d554c3377ea79c7664b93dc65d0803b45e3279000f993c7bfd18937fd7f426)

Downloading and preparing dataset json/default to datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/c2d554c3377ea79c7664b93dc65d0803b45e3279000f993c7bfd18937fd7f426...

100%|██████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 17623.13it/s]

12/16/2021 10:25:59 - INFO - datasets.utils.download_manager - Downloading took 0.0 min

12/16/2021 10:26:00 - INFO - datasets.utils.download_manager - Checksum Computation took 0.0 min

100%|███████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 1206.99it/s]

12/16/2021 10:26:00 - INFO - datasets.utils.info_utils - Unable to verify checksums.

12/16/2021 10:26:00 - INFO - datasets.builder - Generating split train

12/16/2021 10:26:01 - INFO - datasets.builder - Generating split validation

12/16/2021 10:26:02 - INFO - datasets.utils.info_utils - Unable to verify splits sizes.

Dataset json downloaded and prepared to datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/c2d554c3377ea79c7664b93dc65d0803b45e3279000f993c7bfd18937fd7f426. Subsequent calls will reuse this data.

100%|█████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 53.54it/s]

```

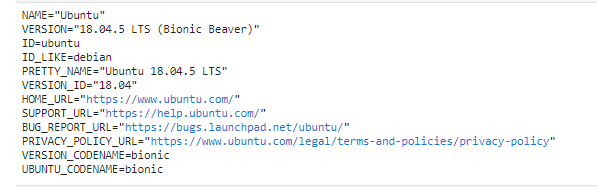

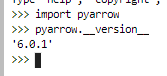

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.16.1

- Platform: Linux

- Python version: 3.8.10

- PyArrow version: 6.0.1

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3447/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3447/timeline | null | completed | null | null | false | [

"Hi ! Indeed it says \"downloading and preparing\" but in your case it didn't need to download anything since you used local files (it would have thrown an error otherwise). I think we can improve the logging to make it clearer in this case",

"@lhoestq Thank you for explaining. I am sorry but I was not clear about my intention. I didn't want to kill internet traffic; I wanted to kill all write activity. In other words, you can imagine that my storage has only read access but crashes on write.\r\n\r\nWhen run_clm.py is invoked with the same parameters, the hash in the cache directory \"datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/...\" doesn't change, and my job can load cached data properly. This is great.\r\n\r\nUnfortunately, when params change (which happens sometimes), the hash changes and the old cache is invalid. datasets builder would create a new cache directory with the new hash and create JSON builder there, even though every JSON builder is the same. I didn't find a way to avoid such behavior.\r\n\r\nThis problem can be resolved when using datasets.map() for tokenizing and grouping text. This function allows me to specify output filenames with --cache_file_names, so that the cached files are always valid.\r\n\r\nThis is the code that I used to freeze cache filenames for tokenization. I wish I could do the same to datasets.load_dataset()\r\n```\r\n tokenized_datasets = raw_datasets.map(\r\n tokenize_function,\r\n batched=True,\r\n num_proc=data_args.preprocessing_num_workers,\r\n remove_columns=column_names,\r\n load_from_cache_file=not data_args.overwrite_cache,\r\n desc=\"Running tokenizer on dataset\",\r\n cache_file_names={k: os.path.join(model_args.cache_dir, f'{k}-tokenized') for k in raw_datasets},\r\n )\r\n```",

"Hi ! `load_dataset` may re-generate your dataset if some parameters changed indeed. If you want to freeze a dataset loaded with `load_dataset`, I think the best solution is just to save it somewhere on your disk with `.save_to_disk(my_dataset_dir)` and reload it with `load_from_disk(my_dataset_dir)`. This way you will be able to reload the dataset without having to run `load_dataset`"

] |
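The last reply in the row above suggests freezing a prepared dataset at a fixed location instead of relying on the hash-named cache directory. A minimal sketch of that pattern, using only the standard library: `load_or_build` and the JSON file are hypothetical stand-ins, and in real code the save and reload steps would presumably be the `.save_to_disk(...)` and `load_from_disk(...)` calls named in that reply.

```python
import json
import os
import tempfile


def load_or_build(fixed_dir, build_fn):
    """Reload records from a fixed directory, building them only on first use.

    Hypothetical helper: the JSON file stands in for a saved dataset.
    """
    path = os.path.join(fixed_dir, "records.json")
    if os.path.exists(path):
        # Read-only path: nothing is written once the data exists.
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    records = build_fn()
    os.makedirs(fixed_dir, exist_ok=True)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f)
    return records


build_calls = []


def build_records():
    build_calls.append(1)  # track how often the expensive step runs
    return [{"text": "hello"}, {"text": "world"}]


with tempfile.TemporaryDirectory() as tmp:
    frozen_dir = os.path.join(tmp, "frozen")
    first = load_or_build(frozen_dir, build_records)
    second = load_or_build(frozen_dir, build_records)
```

Because the directory name is chosen by the caller rather than derived from parameters, the second call only reads, which is the behavior the reporter wanted on write-restricted storage.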

https://api.github.com/repos/huggingface/datasets/issues/2645 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2645/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2645/comments | https://api.github.com/repos/huggingface/datasets/issues/2645/events | https://github.com/huggingface/datasets/issues/2645 | 944,374,284 | MDU6SXNzdWU5NDQzNzQyODQ= | 2,645 | load_dataset processing failed with OS error after downloading a dataset | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 2 | 2021-07-14T12:23:53Z | 2021-07-15T09:34:02Z | 2021-07-15T09:34:02Z | null | ## Describe the bug

After downloading a dataset such as opus100, `load_dataset` fails with:

OSError: Cannot find data file.

Original error:

dlopen: cannot load any more object with static TLS

## Steps to reproduce the bug

```python

from datasets import load_dataset

this_dataset = load_dataset('opus100', 'af-en')

```

## Expected results

there is no error when running load_dataset.

## Actual results

Specify the actual results or traceback.

Traceback (most recent call last):

File "/home/anaconda3/lib/python3.6/site-packages/datasets/builder.py", line 652, in _download_and_prep

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/home/anaconda3/lib/python3.6/site-packages/datasets/builder.py", line 989, in _prepare_split

example = self.info.features.encode_example(record)

File "/home/anaconda3/lib/python3.6/site-packages/datasets/features.py", line 952, in encode_example

example = cast_to_python_objects(example)

File "/home/anaconda3/lib/python3.6/site-packages/datasets/features.py", line 219, in cast_to_python_ob

return _cast_to_python_objects(obj)[0]

File "/home/anaconda3/lib/python3.6/site-packages/datasets/features.py", line 165, in _cast_to_python_o

import torch

File "/home/anaconda3/lib/python3.6/site-packages/torch/__init__.py", line 188, in <module>

_load_global_deps()

File "/home/anaconda3/lib/python3.6/site-packages/torch/__init__.py", line 141, in _load_global_deps

ctypes.CDLL(lib_path, mode=ctypes.RTLD_GLOBAL)

File "/home/anaconda3/lib/python3.6/ctypes/__init__.py", line 348, in __init__

self._handle = _dlopen(self._name, mode)

OSError: dlopen: cannot load any more object with static TLS

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "download_hub_opus100.py", line 9, in <module>

this_dataset = load_dataset('opus100', language_pair)

File "/home/anaconda3/lib/python3.6/site-packages/datasets/load.py", line 748, in load_dataset

use_auth_token=use_auth_token,

File "/home/anaconda3/lib/python3.6/site-packages/datasets/builder.py", line 575, in download_and_prepa

dl_manager=dl_manager, verify_infos=verify_infos, **download_and_prepare_kwargs

File "/home/anaconda3/lib/python3.6/site-packages/datasets/builder.py", line 658, in _download_and_prep

+ str(e)

OSError: Cannot find data file.

Original error:

dlopen: cannot load any more object with static TLS

## Environment info

- `datasets` version: 1.8.0

- Platform: Linux-3.13.0-32-generic-x86_64-with-debian-jessie-sid

- Python version: 3.6.6

- PyArrow version: 3.0.0

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2645/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2645/timeline | null | completed | null | null | false | [

"Hi ! It looks like an issue with pytorch.\r\n\r\nCould you try to run `import torch` and see if it raises an error ?",

"> Hi ! It looks like an issue with pytorch.\r\n> \r\n> Could you try to run `import torch` and see if it raises an error ?\r\n\r\nIt works. Thank you!"

] |

https://api.github.com/repos/huggingface/datasets/issues/2928 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2928/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2928/comments | https://api.github.com/repos/huggingface/datasets/issues/2928/events | https://github.com/huggingface/datasets/pull/2928 | 997,941,506 | PR_kwDODunzps4r0yUb | 2,928 | Update BibTeX entry | [] | closed | false | null | 0 | 2021-09-16T08:39:20Z | 2021-09-16T12:35:34Z | 2021-09-16T12:35:34Z | null | Update BibTeX entry. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2928/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2928/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2928.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2928",

"merged_at": "2021-09-16T12:35:34Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2928.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2928"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/5825 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5825/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5825/comments | https://api.github.com/repos/huggingface/datasets/issues/5825/events | https://github.com/huggingface/datasets/issues/5825 | 1,697,327,483 | I_kwDODunzps5lKyl7 | 5,825 | FileNotFound even though exists | [] | open | false | null | 3 | 2023-05-05T09:49:55Z | 2023-05-07T17:43:46Z | null | null | ### Describe the bug

I'm trying to download https://huggingface.co/datasets/bigscience/xP3/resolve/main/ur/xp3_facebook_flores_spa_Latn-urd_Arab_devtest_ab-spa_Latn-urd_Arab.jsonl, which works fine in my web browser but somehow not with datasets. Am I doing something wrong?

```

Downloading builder script: 100%

2.82k/2.82k [00:00<00:00, 64.2kB/s]

Downloading readme: 100%

12.6k/12.6k [00:00<00:00, 585kB/s]

---------------------------------------------------------------------------

FileNotFoundError Traceback (most recent call last)

[<ipython-input-2-4b45446a91d5>](https://localhost:8080/#) in <cell line: 4>()

2 lang = "ur"

3 fname = "xp3_facebook_flores_spa_Latn-urd_Arab_devtest_ab-spa_Latn-urd_Arab.jsonl"

----> 4 dataset = load_dataset("bigscience/xP3", data_files=f"{lang}/{fname}")

6 frames

[/usr/local/lib/python3.10/dist-packages/datasets/data_files.py](https://localhost:8080/#) in _resolve_single_pattern_locally(base_path, pattern, allowed_extensions)

291 if allowed_extensions is not None:

292 error_msg += f" with any supported extension {list(allowed_extensions)}"

--> 293 raise FileNotFoundError(error_msg)

294 return sorted(out)

295

FileNotFoundError: Unable to find 'https://huggingface.co/datasets/bigscience/xP3/resolve/main/ur/xp3_facebook_flores_spa_Latn-urd_Arab_devtest_ab-spa_Latn-urd_Arab.jsonl' at /content/https:/huggingface.co/datasets/bigscience/xP3/resolve/main

```

### Steps to reproduce the bug

```

!pip install -q datasets

from datasets import load_dataset

lang = "ur"

fname = "xp3_facebook_flores_spa_Latn-urd_Arab_devtest_ab-spa_Latn-urd_Arab.jsonl"

dataset = load_dataset("bigscience/xP3", data_files=f"{lang}/{fname}")

```

### Expected behavior

Correctly downloads

### Environment info

latest versions | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5825/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5825/timeline | null | null | null | null | false | [

"Hi! \r\n\r\nThis would only work if `bigscience/xP3` was a no-code dataset, but it isn't (it has a Python builder script).\r\n\r\nBut this should work: \r\n```python\r\nload_dataset(\"json\", data_files=\"https://huggingface.co/datasets/bigscience/xP3/resolve/main/ur/xp3_facebook_flores_spa_Latn-urd_Arab_devtest_ab-spa_Latn-urd_Arab.jsonl\")\r\n```\r\n\r\n",

"I see, it's not compatible w/ regex right?\r\ne.g.\r\n`load_dataset(\"json\", data_files=\"https://huggingface.co/datasets/bigscience/xP3/resolve/main/ur/*\")`",

"> I see, it's not compatible w/ regex right? e.g. `load_dataset(\"json\", data_files=\"https://huggingface.co/datasets/bigscience/xP3/resolve/main/ur/*\")`\r\n\r\nIt should work for patterns that \"reference\" the local filesystem, but to make this work with the Hub, we must implement https://github.com/huggingface/datasets/issues/5281 first.\r\n\r\nIn the meantime, you can fetch these glob files with `HfFileSystem` and pass them as a list to `load_dataset`:\r\n```python\r\nfrom datasets import load_dataset\r\nfrom huggingface_hub import HfFileSystem, hf_hub_url # `HfFileSystem` requires the latest version of `huggingface_hub`\r\n\r\nfs = HfFileSystem()\r\nglob_files = fs.glob(\"datasets/bigscience/xP3/ur/*\")\r\n# convert fsspec URLs to HTTP URLs\r\nresolved_paths = [fs.resolve_path(file) for file in glob_files]\r\ndata_files = [hf_hub_url(resolved_path.repo_id, resolved_path.path_in_repo, repo_type=resolved_path.repo_type) for resolved_path in resolved_paths]\r\n\r\nds = load_dataset(\"json\", data_files=data_files)\r\n```"

] |
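The first reply in the row above passes a full HTTP URL to the `json` builder rather than a repo-relative path. A small sketch of how such a URL could be assembled, assuming the `resolve/main` URL shape quoted in the issue: `resolve_url` is a hypothetical helper written for illustration, not the `hf_hub_url` function mentioned in the thread.

```python
from urllib.parse import quote


def resolve_url(repo_id, path_in_repo, revision="main"):
    """Build a dataset file URL in the shape quoted in the issue (hypothetical helper)."""
    return (
        "https://huggingface.co/datasets/"
        f"{repo_id}/resolve/{quote(revision, safe='')}/{quote(path_in_repo)}"
    )


lang = "ur"
fname = "xp3_facebook_flores_spa_Latn-urd_Arab_devtest_ab-spa_Latn-urd_Arab.jsonl"
url = resolve_url("bigscience/xP3", f"{lang}/{fname}")
```

The resulting string is what would be passed as `data_files` in the `load_dataset("json", data_files=...)` call shown in that reply.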

https://api.github.com/repos/huggingface/datasets/issues/3275 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3275/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3275/comments | https://api.github.com/repos/huggingface/datasets/issues/3275/events | https://github.com/huggingface/datasets/pull/3275 | 1,053,698,898 | PR_kwDODunzps4uiN9t | 3,275 | Force data files extraction if download_mode='force_redownload' | [] | closed | false | null | 0 | 2021-11-15T14:00:24Z | 2021-11-15T14:45:23Z | 2021-11-15T14:45:23Z | null | Avoids weird issues when redownloading a dataset due to cached data not being fully updated.

With this change, issues #3122 and https://github.com/huggingface/datasets/issues/2956 can be worked around (this is not a fix) as follows:

```python

dset = load_dataset(..., download_mode="force_redownload")

``` | {

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3275/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3275/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3275.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3275",

"merged_at": "2021-11-15T14:45:23Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3275.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3275"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/2047 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2047/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2047/comments | https://api.github.com/repos/huggingface/datasets/issues/2047/events | https://github.com/huggingface/datasets/pull/2047 | 830,626,430 | MDExOlB1bGxSZXF1ZXN0NTkyMTI2NzQ3 | 2,047 | Multilingual dIalogAct benchMark (miam) | [] | closed | false | null | 4 | 2021-03-12T23:02:55Z | 2021-03-23T10:36:34Z | 2021-03-19T10:47:13Z | null | My collaborators (@EmileChapuis, @PierreColombo) and I within the Affective Computing team at Telecom Paris would like to anonymously publish the miam dataset. It is associated with a publication currently under review. We will update the dataset with full citations once the review period is over. | {

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2047/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2047/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2047.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2047",

"merged_at": "2021-03-19T10:47:13Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2047.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2047"

} | true | [

"Hello. All aforementioned changes have been made. I've also re-run black on miam.py. :-)",

"I will run isort again. Hopefully it resolves the current check_code_quality test failure.",

"Once the review period is over, feel free to open a PR to add all the missing information ;)",

"Hi! I will follow up right now with one more pull request as I have new anonymous citation information to include."

] |

https://api.github.com/repos/huggingface/datasets/issues/630 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/630/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/630/comments | https://api.github.com/repos/huggingface/datasets/issues/630/events | https://github.com/huggingface/datasets/issues/630 | 701,636,350 | MDU6SXNzdWU3MDE2MzYzNTA= | 630 | Text dataset not working with large files | [] | closed | false | null | 11 | 2020-09-15T06:02:36Z | 2020-09-25T22:21:43Z | 2020-09-25T22:21:43Z | null | ```

Traceback (most recent call last):

File "examples/language-modeling/run_language_modeling.py", line 333, in <module>

main()

File "examples/language-modeling/run_language_modeling.py", line 262, in main

get_dataset(data_args, tokenizer=tokenizer, cache_dir=model_args.cache_dir) if training_args.do_train else None

File "examples/language-modeling/run_language_modeling.py", line 144, in get_dataset

dataset = load_dataset("text", data_files=file_path, split='train+test')

File "/home/ksjae/.local/lib/python3.7/site-packages/datasets/load.py", line 611, in load_dataset

ignore_verifications=ignore_verifications,

File "/home/ksjae/.local/lib/python3.7/site-packages/datasets/builder.py", line 469, in download_and_prepare

dl_manager=dl_manager, verify_infos=verify_infos, **download_and_prepare_kwargs

File "/home/ksjae/.local/lib/python3.7/site-packages/datasets/builder.py", line 546, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/home/ksjae/.local/lib/python3.7/site-packages/datasets/builder.py", line 888, in _prepare_split

for key, table in utils.tqdm(generator, unit=" tables", leave=False, disable=not_verbose):

File "/home/ksjae/.local/lib/python3.7/site-packages/tqdm/std.py", line 1129, in __iter__

for obj in iterable:

File "/home/ksjae/.cache/huggingface/modules/datasets_modules/datasets/text/7e13bc0fa76783d4ef197f079dc8acfe54c3efda980f2c9adfab046ede2f0ff7/text.py", line 104, in _generate_tables

convert_options=self.config.convert_options,

File "pyarrow/_csv.pyx", line 714, in pyarrow._csv.read_csv

File "pyarrow/error.pxi", line 122, in pyarrow.lib.pyarrow_internal_check_status

File "pyarrow/error.pxi", line 84, in pyarrow.lib.check_status

```

**pyarrow.lib.ArrowInvalid: straddling object straddles two block boundaries (try to increase block size?)**

It gives the same message for both the 200MB and 10GB .txt files, but not for the 700MB file.

I can't upload the files due to size and copyright problems, sorry. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/630/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/630/timeline | null | completed | null | null | false | [

"Seems like it works when setting ```block_size=2100000000``` or something arbitrarily large though.",

"Can you give us some stats on the data files you use as inputs?",

"Basically ~600MB txt files(UTF-8) * 59. \r\ncontents like ```안녕하세요, 이것은 예제로 한번 말해보는 텍스트입니다. 그냥 이렇다고요.<|endoftext|>\\n```\r\n\r\nAlso, it gets stuck for a loooong time at ```Testing the mapped function outputs```, for more than 12 hours(currently ongoing)",

"It gets stuck while doing `.map()` ? Are you using multiprocessing ?\r\nIf you could provide a code snippet it could be very useful",

"From transformers/examples/language-modeling/run-language-modeling.py :\r\n```\r\ndef get_dataset(\r\n args: DataTrainingArguments,\r\n tokenizer: PreTrainedTokenizer,\r\n evaluate: bool = False,\r\n cache_dir: Optional[str] = None,\r\n):\r\n file_path = args.eval_data_file if evaluate else args.train_data_file\r\n if True:\r\n dataset = load_dataset(\"text\", data_files=glob.glob(file_path), split='train', use_threads=True, \r\n ignore_verifications=True, save_infos=True, block_size=104857600)\r\n dataset = dataset.map(lambda ex: tokenizer(ex[\"text\"], add_special_tokens=True,\r\n truncation=True, max_length=args.block_size), batched=True)\r\n dataset.set_format(type='torch', columns=['input_ids'])\r\n return dataset\r\n if args.line_by_line:\r\n return LineByLineTextDataset(tokenizer=tokenizer, file_path=file_path, block_size=args.block_size)\r\n else:\r\n return TextDataset(\r\n tokenizer=tokenizer,\r\n file_path=file_path,\r\n block_size=args.block_size,\r\n overwrite_cache=args.overwrite_cache,\r\n cache_dir=cache_dir,\r\n )\r\n```\r\n\r\nNo, I'm not using multiprocessing.",

"I am not able to reproduce on my side :/\r\n\r\nCould you send the version of `datasets` and `pyarrow` you're using ?\r\nCould you try to update the lib and try again ?\r\nOr do you think you could try to reproduce it on google colab ?",

"Huh, weird. It's fixed on my side too.\r\nBut now ```Caching processed dataset``` is taking forever - how can I disable it? Any flags?",

"Right after `Caching processed dataset`, your function is applied to the dataset and there's a progress bar that shows how much time is left. How much time does it take for you ?\r\n\r\nAlso caching isn't supposed to slow down your processing. But if you still want to disable it you can do `.map(..., load_from_cache_file=False)`",

"Ah, it’s much faster now(Takes around 15~20min). \r\nBTW, any way to set default tensor output as plain tensors with distributed training? The ragged tensors are incompatible with tpustrategy :(",

"> Ah, it’s much faster now(Takes around 15~20min).\r\n\r\nGlad to see that it's faster now. What did you change exactly ?\r\n\r\n> BTW, any way to set default tensor output as plain tensors with distributed training? The ragged tensors are incompatible with tpustrategy :(\r\n\r\nOh I didn't know about that. Feel free to open an issue to mention that.\r\nI guess what you can do for now is set the dataset format to numpy instead of tensorflow, and use a wrapper of the dataset that converts the numpy arrays to tf tensors.\r\n\r\n",

">>> Glad to see that it's faster now. What did you change exactly ?\r\nI don't know, it just worked...? Sorry I couldn't be more helpful.\r\n\r\nSetting with numpy array is a great idea! Thanks."

] |
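The error message in the row above hints that a single record did not fit within the reader's block, and the first reply reports that a larger `block_size` avoided it. One way to investigate, assuming the failure comes from unusually long lines (the thread does not confirm this), is to measure the longest line in a file before picking a block size. The helper name and the sample threshold below are illustrative only.

```python
import os
import tempfile


def longest_line_bytes(path):
    """Return the size in bytes of the longest line, newline included."""
    longest = 0
    with open(path, "rb") as f:
        for raw in f:
            longest = max(longest, len(raw))
    return longest


with tempfile.TemporaryDirectory() as tmp:
    sample_path = os.path.join(tmp, "sample.txt")
    with open(sample_path, "wb") as f:
        f.write(b"short\n" + b"x" * 1000 + b"\n")
    longest = longest_line_bytes(sample_path)

candidate_block = 512  # illustrative value, not a real library default
needs_bigger_block = longest > candidate_block
```

Reading in binary mode keeps the measurement in bytes, which matters for multi-byte UTF-8 text such as the Korean sample quoted in the thread.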

https://api.github.com/repos/huggingface/datasets/issues/1983 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1983/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1983/comments | https://api.github.com/repos/huggingface/datasets/issues/1983/events | https://github.com/huggingface/datasets/issues/1983 | 821,746,008 | MDU6SXNzdWU4MjE3NDYwMDg= | 1,983 | The size of CoNLL-2003 is not consistant with the official release. | [] | closed | false | null | 4 | 2021-03-04T04:41:34Z | 2022-10-05T13:13:26Z | 2022-10-05T13:13:26Z | null | Thanks for sharing the dataset! When I used conll-2003, I ran into a question.

The statistics of conll-2003 in this repo are:

\#train 14041 \#dev 3250 \#test 3453

while the official statistics are:

\#train 14987 \#dev 3466 \#test 3684

Looking forward to your reply. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1983/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1983/timeline | null | completed | null | null | false | [

"Hi,\r\n\r\nif you inspect the raw data, you can find there are 946 occurrences of `-DOCSTART- -X- -X- O` in the train split and `14041 + 946 = 14987`, which is exactly the number of sentences the authors report. `-DOCSTART-` is a special line that acts as a boundary between two different documents and is filtered out in our implementation.\r\n\r\n@lhoestq What do you think about including these lines? ([Link](https://github.com/flairNLP/flair/issues/1097) to a similar issue in the flairNLP repo)",

"We should mention in the Conll2003 dataset card that these lines have been removed indeed.\r\n\r\nIf some users are interested in using these lines (maybe to recombine documents ?) then we can add a parameter to the conll2003 dataset to include them.\r\n\r\nBut IMO the default config should stay the current one (without the `-DOCSTART-` stuff), so that you can directly train NER models without additional preprocessing. Let me know what you think",

"@lhoestq Yes, I agree adding a small note should be sufficient.\r\n\r\nCurrently, NLTK's `ConllCorpusReader` ignores the `-DOCSTART-` lines so I think it's ok if we do the same. If there is an interest in the future to use these lines, then we can include them.",

"I added a mention of this in conll2003's dataset card:\r\nhttps://github.com/huggingface/datasets/blob/fc9796920da88486c3b97690969aabf03d6b4088/datasets/conll2003/README.md#conll2003\r\n\r\nEdit: just saw your PR @mariosasko (noticed it too late ^^)\r\nLet me take a look at it :)"

] |
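
The counting argument in the first reply (`14041 + 946 = 14987`) can be checked with a short sketch. It assumes the usual CoNLL-2003 layout: one token per line, a blank line between sentences, and `-DOCSTART-` marker blocks between documents. The function name `count_sentences` and the toy sample are illustrative only and are not part of `datasets`.

```python
def count_sentences(text, keep_docstart=False):
    """Count blank-line-separated blocks in CoNLL-style text.

    Blocks whose first line starts with -DOCSTART- are document
    boundaries; they are counted only when keep_docstart is True.
    """
    count = 0
    current = []
    # The extra "" guarantees that the last block is flushed.
    for line in text.splitlines() + [""]:
        if line.strip():
            current.append(line)
            continue
        if current:
            is_docstart = current[0].startswith("-DOCSTART-")
            if keep_docstart or not is_docstart:
                count += 1
            current = []
    return count


# Toy sample (made up for illustration): 2 documents, 3 real sentences.
sample = """-DOCSTART- -X- -X- O

EU NNP B-NP B-ORG
rejects VBZ B-VP O

Peter NNP B-NP B-PER
Blackburn NNP I-NP I-PER

-DOCSTART- -X- -X- O

BRUSSELS NNP B-NP B-LOC
"""

without_markers = count_sentences(sample)
with_markers = count_sentences(sample, keep_docstart=True)
```

On the real train split, the difference between the two counts would presumably be the 946 marker lines mentioned above.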

https://api.github.com/repos/huggingface/datasets/issues/5894 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5894/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5894/comments | https://api.github.com/repos/huggingface/datasets/issues/5894/events | https://github.com/huggingface/datasets/pull/5894 | 1,724,774,910 | PR_kwDODunzps5RSjot | 5,894 | Force overwrite existing filesystem protocol | [] | closed | false | null | 2 | 2023-05-24T21:41:53Z | 2023-05-25T06:52:08Z | 2023-05-25T06:42:33Z | null | Fix #5876 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5894/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5894/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5894.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5894",

"merged_at": "2023-05-25T06:42:33Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5894.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5894"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.009139 / 0.011353 (-0.002214) | 0.005634 / 0.011008 (-0.005374) | 0.129587 / 0.038508 (0.091079) | 0.038298 / 0.023109 (0.015189) | 0.428149 / 0.275898 (0.152251) | 0.443744 / 0.323480 (0.120264) | 0.007501 / 0.007986 (-0.000485) | 0.005999 / 0.004328 (0.001671) | 0.100796 / 0.004250 (0.096546) | 0.053236 / 0.037052 (0.016184) | 0.423868 / 0.258489 (0.165379) | 0.460110 / 0.293841 (0.166269) | 0.041255 / 0.128546 (-0.087291) | 0.013790 / 0.075646 (-0.061856) | 0.438398 / 0.419271 (0.019127) | 0.063086 / 0.043533 (0.019553) | 0.414826 / 0.255139 (0.159687) | 0.460652 / 0.283200 (0.177453) | 0.121223 / 0.141683 (-0.020460) | 1.754430 / 1.452155 (0.302275) | 1.900037 / 1.492716 (0.407320) |\n\n### 
Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.027222 / 0.018006 (0.009216) | 0.617666 / 0.000490 (0.617176) | 0.022443 / 0.000200 (0.022243) | 0.000820 / 0.000054 (0.000766) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.030397 / 0.037411 (-0.007014) | 0.125732 / 0.014526 (0.111206) | 0.149805 / 0.176557 (-0.026752) | 0.234048 / 0.737135 (-0.503087) | 0.143108 / 0.296338 (-0.153231) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.631189 / 0.215209 (0.415980) | 6.182871 / 2.077655 (4.105216) | 2.635730 / 1.504120 (1.131610) | 2.231429 / 1.541195 (0.690235) | 2.438360 / 1.468490 (0.969870) | 0.861170 / 4.584777 (-3.723607) | 5.785984 / 3.745712 (2.040272) | 2.758358 / 5.269862 (-2.511504) | 1.678095 / 4.565676 (-2.887582) | 0.105961 / 0.424275 (-0.318314) | 0.013659 / 0.007607 (0.006052) | 0.762943 / 0.226044 (0.536898) | 7.774399 / 2.268929 (5.505471) | 3.319027 / 55.444624 (-52.125598) | 2.700248 / 6.876477 (-4.176229) | 3.008581 / 2.142072 (0.866509) | 1.122522 / 4.805227 (-3.682705) | 0.214832 / 6.500664 (-6.285832) 
| 0.085281 / 0.075469 (0.009811) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.647610 / 1.841788 (-0.194177) | 18.178316 / 8.074308 (10.104008) | 21.199177 / 10.191392 (11.007785) | 0.247063 / 0.680424 (-0.433361) | 0.030443 / 0.534201 (-0.503758) | 0.512527 / 0.579283 (-0.066757) | 0.640758 / 0.434364 (0.206394) | 0.639986 / 0.540337 (0.099649) | 0.760113 / 1.386936 (-0.626823) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008293 / 0.011353 (-0.003060) | 0.005360 / 0.011008 
(-0.005648) | 0.102932 / 0.038508 (0.064424) | 0.037457 / 0.023109 (0.014347) | 0.444114 / 0.275898 (0.168216) | 0.512855 / 0.323480 (0.189375) | 0.007030 / 0.007986 (-0.000956) | 0.004954 / 0.004328 (0.000625) | 0.095757 / 0.004250 (0.091507) | 0.051239 / 0.037052 (0.014187) | 0.471118 / 0.258489 (0.212629) | 0.517764 / 0.293841 (0.223923) | 0.041953 / 0.128546 (-0.086593) | 0.013748 / 0.075646 (-0.061898) | 0.118089 / 0.419271 (-0.301182) | 0.060159 / 0.043533 (0.016626) | 0.466011 / 0.255139 (0.210872) | 0.489180 / 0.283200 (0.205980) | 0.123250 / 0.141683 (-0.018433) | 1.714738 / 1.452155 (0.262584) | 1.838571 / 1.492716 (0.345855) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.267792 / 0.018006 (0.249785) | 0.624313 / 0.000490 (0.623824) | 0.007315 / 0.000200 (0.007115) | 0.000136 / 0.000054 (0.000082) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.033751 / 0.037411 (-0.003661) | 0.122819 / 0.014526 (0.108293) | 0.148270 / 0.176557 (-0.028286) | 0.198581 / 0.737135 (-0.538554) | 0.144845 / 0.296338 (-0.151494) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.620631 / 0.215209 (0.405422) | 6.224665 / 2.077655 (4.147010) | 2.856592 / 1.504120 (1.352473) | 2.525089 / 1.541195 (0.983894) | 2.600198 / 1.468490 (1.131708) | 0.872038 / 4.584777 (-3.712739) | 5.571650 / 3.745712 (1.825937) | 5.907643 / 5.269862 (0.637782) | 2.348770 / 4.565676 (-2.216906) | 0.111665 / 0.424275 (-0.312610) | 0.013886 / 0.007607 (0.006278) | 0.762154 / 0.226044 (0.536109) | 7.792686 / 2.268929 (5.523758) | 3.601122 / 55.444624 (-51.843503) | 2.939412 / 6.876477 (-3.937064) | 2.973430 / 2.142072 (0.831358) | 1.065016 / 4.805227 (-3.740211) | 0.221701 / 6.500664 (-6.278963) | 0.088157 / 0.075469 (0.012688) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.771061 / 1.841788 (-0.070727) | 18.826926 / 8.074308 (10.752618) | 21.283830 / 10.191392 (11.092438) | 0.239233 / 0.680424 (-0.441191) | 0.026159 / 0.534201 (-0.508042) | 0.487074 / 0.579283 (-0.092209) | 0.623241 / 0.434364 (0.188877) | 0.600506 / 0.540337 (0.060169) | 0.691271 / 1.386936 (-0.695665) |\n\n</details>\n</details>\n\n\n"

] |

https://api.github.com/repos/huggingface/datasets/issues/3583 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3583/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3583/comments | https://api.github.com/repos/huggingface/datasets/issues/3583/events | https://github.com/huggingface/datasets/issues/3583 | 1,105,195,144 | I_kwDODunzps5B3_CI | 3,583 | Add The Medical Segmentation Decathlon Dataset | [

{

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset",

"id": 2067376369,

"name": "dataset request",

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request"

},

{

"color": "bfdadc",

"default": false,

"description": "Vision datasets",

"id": 3608941089,

"name": "vision",

"node_id": "LA_kwDODunzps7XHBIh",

"url": "https://api.github.com/repos/huggingface/datasets/labels/vision"

}

] | open | false | null | 5 | 2022-01-16T21:42:25Z | 2022-03-18T10:44:42Z | null | null | ## Adding a Dataset

- **Name:** *The Medical Segmentation Decathlon Dataset*

- **Description:** The underlying data set was designed to explore the axis of difficulties typically encountered when dealing with medical images, such as small data sets, unbalanced labels, multi-site data, and small objects.

- **Paper:** [link to the dataset paper if available](https://arxiv.org/abs/2106.05735)

- **Data:** http://medicaldecathlon.com/

- **Motivation:** Hugging Face seeks to democratize ML for society. One of the growing niches within ML is the ML + Medicine community. Key data sets will help increase the supply of HF resources for starting an initial community.

(cc @osanseviero @abidlabs )

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3583/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3583/timeline | null | null | null | null | false | [

"Hello! I have recently been involved with a medical image segmentation project myself and was going through `The Medical Segmentation Decathlon Dataset` as well. \r\nI haven't had experience adding datasets to this repository yet but would love to get started. Should I take this issue?\r\nIf yes, I've got two questions -\r\n1. There are 10 different datasets available, so are all datasets to be added in a single PR, or one at a time? \r\n2. Since it's a competition, masks for the test set are not available. How is that to be tackled? Sorry if it's a silly question, I have recently started exploring `datasets`.",

"Hi! Sure, feel free to take this issue. You can self-assign the issue by commenting `#self-assign`.\r\n\r\nTo answer your questions:\r\n1. It makes the most sense to add each one as a separate config, so one dataset script with 10 configs in a single PR.\r\n2. Just set masks in the test set to `None`.\r\n\r\nNote that the images/masks in this dataset are in NIfTI format, which our `Image` feature currently doesn't support, so I think it's best to yield the paths to the images/masks in the script and add a preprocessing section to the card where we explain how to load/process the images/masks with `nibabel` (I can help with that). \r\n\r\n",

"> Note that the images/masks in this dataset are in NIfTI format, which our `Image` feature currently doesn't support, so I think it's best to yield the paths to the images/masks in the script and add a preprocessing section to the card where we explain how to load/process the images/masks with `nibabel` (I can help with that).\r\n\r\nGotcha, thanks. Will start working on the issue and let you know in case of any doubt.",

"#self-assign",

"This is great! There is a first model on the Hub that uses this dataset! https://huggingface.co/MONAI/example_spleen_segmentation"

] |

https://api.github.com/repos/huggingface/datasets/issues/817 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/817/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/817/comments | https://api.github.com/repos/huggingface/datasets/issues/817/events | https://github.com/huggingface/datasets/issues/817 | 739,145,369 | MDU6SXNzdWU3MzkxNDUzNjk= | 817 | Add MRQA dataset | [

{

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset",

"id": 2067376369,

"name": "dataset request",

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request"

}

] | closed | false | null | 1 | 2020-11-09T15:52:19Z | 2020-12-04T15:44:42Z | 2020-12-04T15:44:41Z | null | ## Adding a Dataset

- **Name:** MRQA

- **Description:** Collection of different (subsets of) QA datasets all converted to the same format to evaluate out-of-domain generalization (the datasets come from different domains, distributions, etc.). Some datasets are used for training and others are used for evaluation. This dataset was collected as part of MRQA 2019's shared task

- **Paper:** https://arxiv.org/abs/1910.09753

- **Data:** https://github.com/mrqa/MRQA-Shared-Task-2019

- **Motivation:** Out-of-domain generalization is becoming (has become) a de facto evaluation for NLU systems

Instructions to add a new dataset can be found [here](https://huggingface.co/docs/datasets/share_dataset.html). | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 1,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/817/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/817/timeline | null | completed | null | null | false | [

"Done! cf #1117 and #1022"

] |

https://api.github.com/repos/huggingface/datasets/issues/915 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/915/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/915/comments | https://api.github.com/repos/huggingface/datasets/issues/915/events | https://github.com/huggingface/datasets/issues/915 | 753,118,481 | MDU6SXNzdWU3NTMxMTg0ODE= | 915 | Shall we change the hashing to encoding to reduce potential replicated cache files? | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

},

{

"color": "c5def5",

"default": false,

"description": "Generic discussion on the library",

"id": 2067400324,

"name": "generic discussion",

"node_id": "MDU6TGFiZWwyMDY3NDAwMzI0",

"url": "https://api.github.com/repos/huggingface/datasets/labels/generic%20discussion"

}

] | open | false | null | 2 | 2020-11-30T03:50:46Z | 2020-12-24T05:11:49Z | null | null | Hi there. Currently, we use `xxhash` to hash the transformations into a fingerprint, and we save a copy of the processed dataset to disk whenever there is a new hash value. However, some transformations are idempotent or commute with each other. I think that encoding the transformation chain as the fingerprint may help in those cases, for example with `base64.urlsafe_b64encode`. That way, before saving a new copy, we can decode the transformation chain and normalize it so that we don't miss potential reuse. As the main targets of this project are really large datasets that cannot be loaded entirely in memory, I believe it would save a lot of time if we could avoid some writes.

If you have interest in this, I'd love to help :). | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/915/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/915/timeline | null | null | null | null | false | [

"This is an interesting idea !\r\nDo you have ideas about how to approach the decoding and the normalization ?",

"@lhoestq\r\nI think we first need to save the transformation chain to a list in `self._fingerprint`. Then we can\r\n- decode all the currently saved datasets to see if there is already one that is equivalent to the transformation we need now.\r\n- or, calculate all the possible hash values of the current chain for comparison so that we could continue to use hashing.\r\nIf we find one, we can adjust the list in `self._fingerprint` to it.\r\n\r\nAs for the transformation reordering rules, we can just start with some manual rules, like two sorts on the same column should merge into one, and filter and select can be reordered.\r\n\r\nAnd for encoding and decoding, we can just manually specify `sort` is 0, `shuffling` is 2 and create a base-n number or use some general algorithm like `base64.urlsafe_b64encode`.\r\n\r\nBecause we are not doing lazy evaluation now, we may not be able to normalize the transformation to its minimal form. If we want to support that, we can provide a `Sequential` API and let the user input a list of transformations, so that the user would not use the intermediate datasets. This would look like tf.data.Dataset."

] |
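
The proposal in this thread (keep the transformation chain itself as the fingerprint, normalize it, then encode it reversibly) can be sketched roughly as follows. This is only an illustration under stated assumptions, not how `datasets` computes fingerprints: the `(operation, argument)` step representation, the helper names `normalize`, `encode` and `decode`, and the single rewrite rule (collapsing consecutive sorts on the same column) are all hypothetical.

```python
import base64
import json


def normalize(chain):
    """Drop a sort that directly repeats the previous sort on the same column.

    `chain` is a list of (operation, argument) tuples. This single rule is
    only an example of a manual normalization rule.
    """
    result = []
    for op, arg in chain:
        if op == "sort" and result and result[-1] == ("sort", arg):
            continue  # sorting twice in a row on the same column is redundant
        result.append((op, arg))
    return result


def encode(chain):
    """Serialize the chain to compact JSON, then to URL-safe base64 text."""
    payload = json.dumps(chain, separators=(",", ":")).encode("utf-8")
    return base64.urlsafe_b64encode(payload).decode("ascii")


def decode(fingerprint):
    """Invert `encode`, restoring the list of (operation, argument) tuples."""
    payload = base64.urlsafe_b64decode(fingerprint.encode("ascii"))
    return [tuple(step) for step in json.loads(payload)]


chain_a = [("sort", "label"), ("sort", "label"), ("shuffle", 42)]
chain_b = [("sort", "label"), ("shuffle", 42)]

fp_a = encode(normalize(chain_a))
fp_b = encode(normalize(chain_b))
```

Unlike a hash, the encoded fingerprint can be decoded and compared step by step, at the cost of growing with the length of the chain.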

https://api.github.com/repos/huggingface/datasets/issues/4327 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4327/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4327/comments | https://api.github.com/repos/huggingface/datasets/issues/4327/events | https://github.com/huggingface/datasets/issues/4327 | 1,233,840,020 | I_kwDODunzps5JiueU | 4,327 | `wikipedia` pre-processed datasets | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 2 | 2022-05-12T11:25:42Z | 2022-08-31T08:26:57Z | 2022-08-31T08:26:57Z | null | ## Describe the bug

[Wikipedia](https://huggingface.co/datasets/wikipedia) dataset readme says that certain subsets are preprocessed. However it seems like they are not available. When I try to load them it takes a really long time, and it seems like it's processing them.

## Steps to reproduce the bug

```python

from datasets import load_dataset

load_dataset("wikipedia", "20220301.en")

```

## Expected results

To load the dataset

## Actual results

Takes a very long time to load (after downloading)

After `Downloading data files: 100%`. It takes hours and gets killed.

Tried `wikipedia.simple` and it got processed after ~30mins. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4327/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4327/timeline | null | completed | null | null | false | [

"Hi @vpj, thanks for reporting.\r\n\r\nI'm sorry, but I can't reproduce your bug: I load \"20220301.simple\"in 9 seconds:\r\n```shell\r\ntime python -c \"from datasets import load_dataset; load_dataset('wikipedia', '20220301.simple')\"\r\n\r\nDownloading and preparing dataset wikipedia/20220301.simple (download: 228.58 MiB, generated: 224.18 MiB, post-processed: Unknown size, total: 452.76 MiB) to .../.cache/huggingface/datasets/wikipedia/20220301.simple/2.0.0/aa542ed919df55cc5d3347f42dd4521d05ca68751f50dbc32bae2a7f1e167559...\r\nDownloading: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.66k/1.66k [00:00<00:00, 1.02MB/s]\r\nDownloading: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 235M/235M [00:02<00:00, 82.8MB/s]\r\nDataset wikipedia downloaded and prepared to .../.cache/huggingface/datasets/wikipedia/20220301.simple/2.0.0/aa542ed919df55cc5d3347f42dd4521d05ca68751f50dbc32bae2a7f1e167559. Subsequent calls will reuse this data.\r\n100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 290.75it/s]\r\n\r\nreal\t0m9.693s\r\nuser\t0m6.002s\r\nsys\t0m3.260s\r\n```\r\n\r\nCould you please check your environment info, as requested when opening this issue?\r\n```\r\n## Environment info\r\n<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->\r\n- `datasets` version:\r\n- Platform:\r\n- Python version:\r\n- PyArrow version:\r\n```\r\nMaybe you are using an old version of `datasets`...",

"Downloading and processing the `wikipedia simple` dataset completed in under 11 sec on an M1 Mac. Could you please check your `datasets` version, as mentioned by @albertvillanova? Also check your system specs; if the system is under load, processing could take some time, I guess."

] |

https://api.github.com/repos/huggingface/datasets/issues/1707 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1707/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1707/comments | https://api.github.com/repos/huggingface/datasets/issues/1707/events | https://github.com/huggingface/datasets/pull/1707 | 781,507,545 | MDExOlB1bGxSZXF1ZXN0NTUxMjE5MDk2 | 1,707 | Added generated READMEs for datasets that were missing one. | [] | closed | false | null | 1 | 2021-01-07T18:10:06Z | 2021-01-18T14:32:33Z | 2021-01-18T14:32:33Z | null | This is it: we worked on a generator with Yacine @yjernite , and we generated dataset cards for all missing ones (161), with all the information we could gather from datasets repository, and using dummy_data to generate examples when possible.

Code is available here for the moment: https://github.com/madlag/datasets_readme_generator .

We will move it to a Hugging Face repository and to https://huggingface.co/datasets/card-creator/ later.

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 2,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 2,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1707/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1707/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1707.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1707",

"merged_at": "2021-01-18T14:32:33Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1707.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1707"

} | true | [

"Looks like we need to trim the ones with too many configs, will look into it tomorrow!"

] |

https://api.github.com/repos/huggingface/datasets/issues/3951 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3951/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3951/comments | https://api.github.com/repos/huggingface/datasets/issues/3951/events | https://github.com/huggingface/datasets/issues/3951 | 1,171,568,814 | I_kwDODunzps5F1Liu | 3,951 | Forked streaming datasets try to `open` data urls rather than use network | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 1 | 2022-03-16T21:21:02Z | 2022-06-10T20:47:26Z | 2022-06-10T20:47:26Z | null | ## Describe the bug

Building on #3950, if you bypass the pickling problem you still can't use the dataset. Somehow something gets confused and the forked processes try to `open` urls rather than anything else.

## Steps to reproduce the bug

```python

from multiprocessing import freeze_support

import transformers
from transformers import Trainer, AutoModelForCausalLM, TrainingArguments
import datasets
import torch.utils.data

# work around #3950
class TorchIterableDataset(datasets.IterableDataset, torch.utils.data.IterableDataset):
    pass


def _ensure_format(v: datasets.IterableDataset) -> datasets.IterableDataset:
    return TorchIterableDataset(v._ex_iterable, v.info, v.split, "torch", v._shuffling)


if __name__ == '__main__':
    freeze_support()
    ds = datasets.load_dataset('oscar', "unshuffled_deduplicated_en", split='train', streaming=True)
    ds = _ensure_format(ds)
    model = AutoModelForCausalLM.from_pretrained("distilgpt2")
    Trainer(model, train_dataset=ds, args=TrainingArguments("out", max_steps=1000, dataloader_num_workers=4)).train()

```

## Expected results

I'd expect the dataset to load the url correctly and produce examples.

## Actual results

```

warnings.warn(

***** Running training *****

Num examples = 8000

Num Epochs = 9223372036854775807

Instantaneous batch size per device = 8

Total train batch size (w. parallel, distributed & accumulation) = 8

Gradient Accumulation steps = 1

Total optimization steps = 1000

0%| | 0/1000 [00:00<?, ?it/s]Traceback (most recent call last):

File "/Users/dlwh/src/mistral/src/stream_fork_crash.py", line 22, in <module>

Trainer(model, train_dataset=ds, args=TrainingArguments("out", max_steps=1000, dataloader_num_workers=4)).train()

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/transformers/trainer.py", line 1339, in train

for step, inputs in enumerate(epoch_iterator):

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 521, in __next__

data = self._next_data()

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 1203, in _next_data

return self._process_data(data)

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 1229, in _process_data

data.reraise()

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/torch/_utils.py", line 434, in reraise

raise exception

FileNotFoundError: Caught FileNotFoundError in DataLoader worker process 0.

Original Traceback (most recent call last):

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/torch/utils/data/_utils/worker.py", line 287, in _worker_loop

data = fetcher.fetch(index)

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/torch/utils/data/_utils/fetch.py", line 32, in fetch

data.append(next(self.dataset_iter))

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/datasets/iterable_dataset.py", line 497, in __iter__

for key, example in self._iter():

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/datasets/iterable_dataset.py", line 494, in _iter

yield from ex_iterable

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/datasets/iterable_dataset.py", line 87, in __iter__

yield from self.generate_examples_fn(**self.kwargs)

File "/Users/dlwh/.cache/huggingface/modules/datasets_modules/datasets/oscar/84838bd49d2295f62008383b05620571535451d84545037bb94d6f3501651df2/oscar.py", line 358, in _generate_examples

with gzip.open(open(filepath, "rb"), "rt", encoding="utf-8") as f:

FileNotFoundError: [Errno 2] No such file or directory: 'https://s3.amazonaws.com/datasets.huggingface.co/oscar/1.0/unshuffled/deduplicated/en/en_part_1.txt.gz'

Error in atexit._run_exitfuncs:

Traceback (most recent call last):

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/multiprocessing/popen_fork.py", line 27, in poll

pid, sts = os.waitpid(self.pid, flag)

File "/Users/dlwh/.conda/envs/mistral/lib/python3.8/site-packages/torch/utils/data/_utils/signal_handling.py", line 66, in handler

_error_if_any_worker_fails()

RuntimeError: DataLoader worker (pid 6932) is killed by signal: Terminated: 15.

0%| | 0/1000 [00:02<?, ?it/s]

```

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 2.0.0

- Platform: macOS-12.2-arm64-arm-64bit

- Python version: 3.8.12

- PyArrow version: 7.0.0

- Pandas version: 1.4.1

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3951/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3951/timeline | null | completed | null | null | false | [

"Thanks for reporting this second issue as well. We definitely want to make streaming datasets fully working in a distributed setup and with the best performance. Right now it only supports single process.\r\n\r\nIn this issue it seems that the streaming capabilities that we offer to dataset builders are not transferred to the forked process (so it fails to open remote files and start streaming data from them). In particular `open` is supposed to be mocked by our `xopen` function that is an extended open that supports remote files. Let me try to fix this"

] |

https://api.github.com/repos/huggingface/datasets/issues/3007 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3007/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3007/comments | https://api.github.com/repos/huggingface/datasets/issues/3007/events | https://github.com/huggingface/datasets/pull/3007 | 1,014,775,450 | PR_kwDODunzps4sns-n | 3,007 | Correct a typo | [] | closed | false | null | 0 | 2021-10-04T06:15:47Z | 2021-10-04T09:27:57Z | 2021-10-04T09:27:57Z | null | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3007/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3007/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3007.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3007",

"merged_at": "2021-10-04T09:27:57Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3007.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3007"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/4560 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4560/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4560/comments | https://api.github.com/repos/huggingface/datasets/issues/4560/events | https://github.com/huggingface/datasets/pull/4560 | 1,283,558,873 | PR_kwDODunzps46TY9n | 4,560 | Add evaluation metadata to imagenet-1k | [

{

"color": "0e8a16",

"default": false,

"description": "Contribution to a dataset script",

"id": 4564477500,

"name": "dataset contribution",

"node_id": "LA_kwDODunzps8AAAABEBBmPA",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20contribution"

}

] | closed | false | null | 2 | 2022-06-24T10:12:41Z | 2022-09-23T09:39:53Z | 2022-09-23T09:37:03Z | null | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4560/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4560/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4560.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4560",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/4560.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4560"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._",

"As discussed with @lewtun, we are closing this PR, because it requires first the task names to be aligned between AutoTrain and datasets."

] |

https://api.github.com/repos/huggingface/datasets/issues/2282 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2282/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2282/comments | https://api.github.com/repos/huggingface/datasets/issues/2282/events | https://github.com/huggingface/datasets/pull/2282 | 870,900,332 | MDExOlB1bGxSZXF1ZXN0NjI2MDEyMzM3 | 2,282 | Initialize imdb dataset from don't stop pretraining paper | [] | closed | false | null | 0 | 2021-04-29T11:17:56Z | 2021-04-29T11:43:51Z | 2021-04-29T11:43:51Z | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2282/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2282/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2282.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2282",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/2282.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2282"

} | true | [] |

|

https://api.github.com/repos/huggingface/datasets/issues/4981 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4981/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4981/comments | https://api.github.com/repos/huggingface/datasets/issues/4981/events | https://github.com/huggingface/datasets/issues/4981 | 1,375,086,773 | I_kwDODunzps5R9ii1 | 4,981 | Can't create a dataset with `float16` features | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | open | false | null | 7 | 2022-09-15T21:03:24Z | 2023-03-22T21:40:09Z | null | null | ## Describe the bug

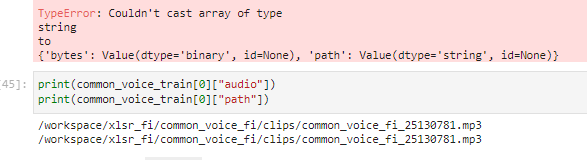

I can't create a dataset with `float16` features.

I understand from the traceback that this is a `pyarrow` error, but I don't see anything in the `datasets` documentation about how to do this successfully. Is it actually supported? I've also tried older versions of `pyarrow` and got the exact same error.

The bug seems to arise because `datasets` casts the values to `double`, and `pyarrow` then doesn't know how to convert them back to `float16`... does that sound right? Is there a way to bypass this cast, since it isn't necessary in the `numpy` and `torch` cases?

Thanks!

## Steps to reproduce the bug

Each of the snippets below raises this error, with (as far as I can tell) the exact same traceback:

```python

ArrowNotImplementedError: Unsupported cast from double to halffloat using function cast_half_float

```

```python

from datasets import Dataset, Features, Value

Dataset.from_dict({"x": [0.0, 1.0, 2.0]}, features=Features(x=Value("float16")))

import numpy as np

Dataset.from_dict({"x": np.arange(3, dtype=np.float16)}, features=Features(x=Value("float16")))

import torch

Dataset.from_dict({"x": torch.arange(3).to(torch.float16)}, features=Features(x=Value("float16")))

```
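
For reference, the operation that fails is a narrowing conversion from 64-bit doubles to 16-bit half floats. The sketch below shows what that conversion computes, using only the standard library's `struct` module and its `e` (IEEE 754 half-precision) format. It is an illustration, not a workaround for `datasets` or `pyarrow`, and the helper names `to_float16_bytes` and `from_float16_bytes` are hypothetical.

```python
import struct


def to_float16_bytes(values):
    """Pack Python floats (doubles) as little-endian IEEE 754 half floats."""
    return struct.pack(f"<{len(values)}e", *values)


def from_float16_bytes(buf):
    """Unpack little-endian half floats back into Python floats."""
    count = len(buf) // 2  # each half float occupies 2 bytes
    return list(struct.unpack(f"<{count}e", buf))


values = [0.0, 1.0, 2.0]
packed = to_float16_bytes(values)      # double -> float16 (narrowing)
restored = from_float16_bytes(packed)  # float16 -> double (widening)
print(len(packed), restored)
```

Small integers such as these survive the round trip exactly, while a value like `0.1` does not, because half precision keeps only about three significant decimal digits.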

## Expected results

A dataset with `float16` features is successfully created.

## Actual results

```python

---------------------------------------------------------------------------

ArrowNotImplementedError Traceback (most recent call last)

Cell In [14], line 1

----> 1 Dataset.from_dict({"x": [1.0, 2.0, 3.0]}, features=Features(x=Value("float16")))

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/datasets/arrow_dataset.py:870, in Dataset.from_dict(cls, mapping, features, info, split)

865 mapping = features.encode_batch(mapping)

866 mapping = {

867 col: OptimizedTypedSequence(data, type=features[col] if features is not None else None, col=col)

868 for col, data in mapping.items()

869 }

--> 870 pa_table = InMemoryTable.from_pydict(mapping=mapping)

871 if info.features is None:

872 info.features = Features({col: ts.get_inferred_type() for col, ts in mapping.items()})

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/datasets/table.py:750, in InMemoryTable.from_pydict(cls, *args, **kwargs)

734 @classmethod

735 def from_pydict(cls, *args, **kwargs):

736 """

737 Construct a Table from Arrow arrays or columns

738

(...)

748 :class:`datasets.table.Table`:

749 """

--> 750 return cls(pa.Table.from_pydict(*args, **kwargs))

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/pyarrow/table.pxi:3648, in pyarrow.lib.Table.from_pydict()

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/pyarrow/table.pxi:5174, in pyarrow.lib._from_pydict()

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/pyarrow/array.pxi:343, in pyarrow.lib.asarray()

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/pyarrow/array.pxi:231, in pyarrow.lib.array()

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/pyarrow/array.pxi:110, in pyarrow.lib._handle_arrow_array_protocol()

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/datasets/arrow_writer.py:197, in TypedSequence.__arrow_array__(self, type)

192 # otherwise we can finally use the user's type

193 elif type is not None:

194 # We use cast_array_to_feature to support casting to custom types like Audio and Image

195 # Also, when trying type "string", we don't want to convert integers or floats to "string".

196 # We only do it if trying_type is False - since this is what the user asks for.

--> 197 out = cast_array_to_feature(out, type, allow_number_to_str=not self.trying_type)

198 return out

199 except (TypeError, pa.lib.ArrowInvalid) as e: # handle type errors and overflows

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/datasets/table.py:1683, in _wrap_for_chunked_arrays.<locals>.wrapper(array, *args, **kwargs)

1681 return pa.chunked_array([func(chunk, *args, **kwargs) for chunk in array.chunks])

1682 else:

-> 1683 return func(array, *args, **kwargs)

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/datasets/table.py:1853, in cast_array_to_feature(array, feature, allow_number_to_str)

1851 return array_cast(array, get_nested_type(feature), allow_number_to_str=allow_number_to_str)

1852 elif not isinstance(feature, (Sequence, dict, list, tuple)):

-> 1853 return array_cast(array, feature(), allow_number_to_str=allow_number_to_str)

1854 raise TypeError(f"Couldn't cast array of type\n{array.type}\nto\n{feature}")

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/datasets/table.py:1683, in _wrap_for_chunked_arrays.<locals>.wrapper(array, *args, **kwargs)

1681 return pa.chunked_array([func(chunk, *args, **kwargs) for chunk in array.chunks])

1682 else:

-> 1683 return func(array, *args, **kwargs)

File ~/scratch/scratch-env-39/.venv/lib/python3.9/site-packages/datasets/table.py:1762, in array_cast(array, pa_type, allow_number_to_str)

1760 if pa.types.is_null(pa_type) and not pa.types.is_null(array.type):