
import boto3
from AWSIoTPythonSDK.MQTTLib import AWSIoTMQTTClient
import logging
import time
import argparse

# Custom MQTT message callback
def customCallback(client, userdata, message):
    print("Received a new message: ")
    print(message.payload)
    print("from topic: ")
    print(message.topic)
    print("--------------\n\n")

# Read in command-line parameters
parser = argparse.ArgumentParser()
parser.add_argument("-e", "--endpoint", action="store", required=True, dest="host", help="Your AWS IoT custom endpoint")
parser.add_argument("-r", "--rootCA", action="store", required=True, dest="rootCAPath", help="Root CA file path")
parser.add_argument("-C", "--CognitoIdentityPoolID", action="store", required=True, dest="cognitoIdentityPoolID",
                    help="Your AWS Cognito Identity Pool ID")
parser.add_argument("-id", "--clientId", action="store", dest="clientId", default="basicPubSub_CognitoSTS",
                    help="Targeted client id")
parser.add_argument("-t", "--topic", action="store", dest="topic", default="sdk/test/Python", help="Targeted topic")

args = parser.parse_args()
host = args.host
rootCAPath = args.rootCAPath
clientId = args.clientId
cognitoIdentityPoolID = args.cognitoIdentityPoolID
topic = args.topic

# Configure logging
logger = logging.getLogger("AWSIoTPythonSDK.core")
logger.setLevel(logging.DEBUG)
streamHandler = logging.StreamHandler()
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
streamHandler.setFormatter(formatter)
logger.addHandler(streamHandler)

# Cognito auth
identityPoolID = cognitoIdentityPoolID
region = host.split('.')[2]
cognitoIdentityClient = boto3.client('cognito-identity', region_name=region)
# identityPoolInfo = cognitoIdentityClient.describe_identity_pool(IdentityPoolId=identityPoolID)
# print identityPoolInfo

temporaryIdentityId = cognitoIdentityClient.get_id(IdentityPoolId=identityPoolID)
identityID = temporaryIdentityId["IdentityId"]

temporaryCredentials = cognitoIdentityClient.get_credentials_for_identity(IdentityId=identityID)
AccessKeyId = temporaryCredentials["Credentials"]["AccessKeyId"]
SecretKey = temporaryCredentials["Credentials"]["SecretKey"]
SessionToken = temporaryCredentials["Credentials"]["SessionToken"]

# Init AWSIoTMQTTClient
myAWSIoTMQTTClient = AWSIoTMQTTClient(clientId, useWebsocket=True)

# AWSIoTMQTTClient configuration
myAWSIoTMQTTClient.configureEndpoint(host, 443)
myAWSIoTMQTTClient.configureCredentials(rootCAPath)
myAWSIoTMQTTClient.configureIAMCredentials(AccessKeyId, SecretKey, SessionToken)
myAWSIoTMQTTClient.configureAutoReconnectBackoffTime(1, 32, 20)
myAWSIoTMQTTClient.configureOfflinePublishQueueing(-1)  # Infinite offline Publish queueing
myAWSIoTMQTTClient.configureDrainingFrequency(2)  # Draining: 2 Hz
myAWSIoTMQTTClient.configureConnectDisconnectTimeout(10)  # 10 sec
myAWSIoTMQTTClient.configureMQTTOperationTimeout(5)  # 5 sec

# Connect and subscribe to AWS IoT
myAWSIoTMQTTClient.connect()
myAWSIoTMQTTClient.subscribe(topic, 1, customCallback)
time.sleep(2)

# Publish to the same topic in a loop forever
loopCount = 0
while True:
    myAWSIoTMQTTClient.publish(topic, "New Message " + str(loopCount), 1)
    loopCount += 1
    time.sleep(1)
| AWSIoTPythonSDK | /AWSIoTPythonSDK-1.5.2.tar.gz/AWSIoTPythonSDK-1.5.2/samples/basicPubSub/basicPubSub_CognitoSTS.py | basicPubSub_CognitoSTS.py |
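The Cognito sample above takes the region for the `cognito-identity` client from `host.split('.')[2]`, which only works when the endpoint looks like `<prefix>-ats.iot.<region>.amazonaws.com`. As a minimal sketch under that same assumption, a helper could locate the label that follows `iot` and fail loudly otherwise. `region_from_endpoint` is a hypothetical name, not part of the SDK or boto3.

```python
def region_from_endpoint(host):
    """Return the AWS region embedded in an AWS IoT endpoint hostname.

    Assumes (like the sample) a hostname such as
    "abc123-ats.iot.us-east-1.amazonaws.com", where the label right after
    "iot" is the region.
    """
    labels = host.strip().lower().split(".")
    if "iot" not in labels:
        raise ValueError("Unrecognised AWS IoT endpoint: %r" % host)
    position = labels.index("iot") + 1
    if position >= len(labels):
        raise ValueError("No region label after 'iot' in: %r" % host)
    return labels[position]


print(region_from_endpoint("abc123-ats.iot.us-east-1.amazonaws.com"))  # us-east-1
```

In the sample it would stand in for the `split` line as `region = region_from_endpoint(host)`.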

from AWSIoTPythonSDK.MQTTLib import AWSIoTMQTTClient
import logging
import time
import argparse

# General message notification callback
def customOnMessage(message):
    print("Received a new message: ")
    print(message.payload)
    print("from topic: ")
    print(message.topic)
    print("--------------\n\n")

# Suback callback
def customSubackCallback(mid, data):
    print("Received SUBACK packet id: ")
    print(mid)
    print("Granted QoS: ")
    print(data)
    print("++++++++++++++\n\n")

# Puback callback
def customPubackCallback(mid):
    print("Received PUBACK packet id: ")
    print(mid)
    print("++++++++++++++\n\n")

# Read in command-line parameters
parser = argparse.ArgumentParser()
parser.add_argument("-e", "--endpoint", action="store", required=True, dest="host", help="Your AWS IoT custom endpoint")
parser.add_argument("-r", "--rootCA", action="store", required=True, dest="rootCAPath", help="Root CA file path")
parser.add_argument("-c", "--cert", action="store", dest="certificatePath", help="Certificate file path")
parser.add_argument("-k", "--key", action="store", dest="privateKeyPath", help="Private key file path")
parser.add_argument("-p", "--port", action="store", dest="port", type=int, help="Port number override")
parser.add_argument("-w", "--websocket", action="store_true", dest="useWebsocket", default=False,
                    help="Use MQTT over WebSocket")
parser.add_argument("-id", "--clientId", action="store", dest="clientId", default="basicPubSub",
                    help="Targeted client id")
parser.add_argument("-t", "--topic", action="store", dest="topic", default="sdk/test/Python", help="Targeted topic")

args = parser.parse_args()
host = args.host
rootCAPath = args.rootCAPath
certificatePath = args.certificatePath
privateKeyPath = args.privateKeyPath
port = args.port
useWebsocket = args.useWebsocket
clientId = args.clientId
topic = args.topic

if args.useWebsocket and args.certificatePath and args.privateKeyPath:
    parser.error("X.509 cert authentication and WebSocket are mutually exclusive. Please pick one.")
    exit(2)

if not args.useWebsocket and (not args.certificatePath or not args.privateKeyPath):
    parser.error("Missing credentials for authentication.")
    exit(2)

# Port defaults
if args.useWebsocket and not args.port:  # When no port override for WebSocket, default to 443
    port = 443
if not args.useWebsocket and not args.port:  # When no port override for non-WebSocket, default to 8883
    port = 8883

# Configure logging
logger = logging.getLogger("AWSIoTPythonSDK.core")
logger.setLevel(logging.DEBUG)
streamHandler = logging.StreamHandler()
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
streamHandler.setFormatter(formatter)
logger.addHandler(streamHandler)

# Init AWSIoTMQTTClient
myAWSIoTMQTTClient = None
if useWebsocket:
    myAWSIoTMQTTClient = AWSIoTMQTTClient(clientId, useWebsocket=True)
    myAWSIoTMQTTClient.configureEndpoint(host, port)
    myAWSIoTMQTTClient.configureCredentials(rootCAPath)
else:
    myAWSIoTMQTTClient = AWSIoTMQTTClient(clientId)
    myAWSIoTMQTTClient.configureEndpoint(host, port)
    myAWSIoTMQTTClient.configureCredentials(rootCAPath, privateKeyPath, certificatePath)

# AWSIoTMQTTClient connection configuration
myAWSIoTMQTTClient.configureAutoReconnectBackoffTime(1, 32, 20)
myAWSIoTMQTTClient.configureOfflinePublishQueueing(-1)  # Infinite offline Publish queueing
myAWSIoTMQTTClient.configureDrainingFrequency(2)  # Draining: 2 Hz
myAWSIoTMQTTClient.configureConnectDisconnectTimeout(10)  # 10 sec
myAWSIoTMQTTClient.configureMQTTOperationTimeout(5)  # 5 sec
myAWSIoTMQTTClient.onMessage = customOnMessage

# Connect and subscribe to AWS IoT
myAWSIoTMQTTClient.connect()
# Note that we are not putting a message callback here. We are using the general message notification callback.
myAWSIoTMQTTClient.subscribeAsync(topic, 1, ackCallback=customSubackCallback)
time.sleep(2)

# Publish to the same topic in a loop forever
loopCount = 0
while True:
    myAWSIoTMQTTClient.publishAsync(topic, "New Message " + str(loopCount), 1, ackCallback=customPubackCallback)
    loopCount += 1
    time.sleep(1)
| AWSIoTPythonSDK | /AWSIoTPythonSDK-1.5.2.tar.gz/AWSIoTPythonSDK-1.5.2/samples/basicPubSub/basicPubSubAsync.py | basicPubSubAsync.py |
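The argument checks and port defaults repeated in these samples boil down to a small rule set: WebSocket and X.509 mutual TLS are not combined, X.509 needs both a certificate and a key, and the port defaults to 443 for WebSocket and 8883 otherwise. Here is a sketch of that logic as a pure function. The name `resolve_port` is hypothetical, and it raises `ValueError` where the samples call `parser.error`.

```python
from typing import Optional


def resolve_port(use_websocket: bool, cert_path: Optional[str], key_path: Optional[str],
                 port_override: Optional[int] = None) -> int:
    """Validate the auth options and return the port to connect on."""
    # Same condition as the samples: WebSocket plus a full cert/key pair is rejected.
    if use_websocket and cert_path and key_path:
        raise ValueError("X.509 cert authentication and WebSocket are mutually exclusive.")
    # X.509 mutual TLS needs both the certificate and the private key.
    if not use_websocket and not (cert_path and key_path):
        raise ValueError("Missing credentials for authentication.")
    # A truthy override wins, mirroring `not args.port` in the samples.
    if port_override:
        return port_override
    return 443 if use_websocket else 8883


print(resolve_port(False, "cert.pem", "key.pem"))  # 8883
```

Keeping the rules in one testable function is only an illustrative design choice; the samples inline the same checks at module level.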

from AWSIoTPythonSDK.MQTTLib import AWSIoTMQTTClient
import logging
import time
import argparse


class CallbackContainer(object):

    def __init__(self, client):
        self._client = client

    def messagePrint(self, client, userdata, message):
        print("Received a new message: ")
        print(message.payload)
        print("from topic: ")
        print(message.topic)
        print("--------------\n\n")

    def messageForward(self, client, userdata, message):
        topicRepublish = message.topic + "/republish"
        print("Forwarding message from: %s to %s" % (message.topic, topicRepublish))
        print("--------------\n\n")
        # Payloads arrive as bytes on Python 3; decode them so str() does not republish "b'...'"
        payload = message.payload
        if isinstance(payload, bytes):
            payload = payload.decode("utf-8", "replace")
        self._client.publishAsync(topicRepublish, payload, 1, self.pubackCallback)

    def pubackCallback(self, mid):
        print("Received PUBACK packet id: ")
        print(mid)
        print("++++++++++++++\n\n")

    def subackCallback(self, mid, data):
        print("Received SUBACK packet id: ")
        print(mid)
        print("Granted QoS: ")
        print(data)
        print("++++++++++++++\n\n")


# Read in command-line parameters
parser = argparse.ArgumentParser()
parser.add_argument("-e", "--endpoint", action="store", required=True, dest="host", help="Your AWS IoT custom endpoint")
parser.add_argument("-r", "--rootCA", action="store", required=True, dest="rootCAPath", help="Root CA file path")
parser.add_argument("-c", "--cert", action="store", dest="certificatePath", help="Certificate file path")
parser.add_argument("-k", "--key", action="store", dest="privateKeyPath", help="Private key file path")
parser.add_argument("-p", "--port", action="store", dest="port", type=int, help="Port number override")
parser.add_argument("-w", "--websocket", action="store_true", dest="useWebsocket", default=False,
                    help="Use MQTT over WebSocket")
parser.add_argument("-id", "--clientId", action="store", dest="clientId", default="basicPubSub",
                    help="Targeted client id")
parser.add_argument("-t", "--topic", action="store", dest="topic", default="sdk/test/Python", help="Targeted topic")

args = parser.parse_args()
host = args.host
rootCAPath = args.rootCAPath
certificatePath = args.certificatePath
privateKeyPath = args.privateKeyPath
port = args.port
useWebsocket = args.useWebsocket
clientId = args.clientId
topic = args.topic

if args.useWebsocket and args.certificatePath and args.privateKeyPath:
    parser.error("X.509 cert authentication and WebSocket are mutually exclusive. Please pick one.")
    exit(2)

if not args.useWebsocket and (not args.certificatePath or not args.privateKeyPath):
    parser.error("Missing credentials for authentication.")
    exit(2)

# Port defaults
if args.useWebsocket and not args.port:  # When no port override for WebSocket, default to 443
    port = 443
if not args.useWebsocket and not args.port:  # When no port override for non-WebSocket, default to 8883
    port = 8883

# Configure logging
logger = logging.getLogger("AWSIoTPythonSDK.core")
logger.setLevel(logging.DEBUG)
streamHandler = logging.StreamHandler()
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
streamHandler.setFormatter(formatter)
logger.addHandler(streamHandler)

# Init AWSIoTMQTTClient
myAWSIoTMQTTClient = None
if useWebsocket:
    myAWSIoTMQTTClient = AWSIoTMQTTClient(clientId, useWebsocket=True)
    myAWSIoTMQTTClient.configureEndpoint(host, port)
    myAWSIoTMQTTClient.configureCredentials(rootCAPath)
else:
    myAWSIoTMQTTClient = AWSIoTMQTTClient(clientId)
    myAWSIoTMQTTClient.configureEndpoint(host, port)
    myAWSIoTMQTTClient.configureCredentials(rootCAPath, privateKeyPath, certificatePath)

# AWSIoTMQTTClient connection configuration
myAWSIoTMQTTClient.configureAutoReconnectBackoffTime(1, 32, 20)
myAWSIoTMQTTClient.configureOfflinePublishQueueing(-1)  # Infinite offline Publish queueing
myAWSIoTMQTTClient.configureDrainingFrequency(2)  # Draining: 2 Hz
myAWSIoTMQTTClient.configureConnectDisconnectTimeout(10)  # 10 sec
myAWSIoTMQTTClient.configureMQTTOperationTimeout(5)  # 5 sec

myCallbackContainer = CallbackContainer(myAWSIoTMQTTClient)

# Connect and subscribe to AWS IoT
myAWSIoTMQTTClient.connect()

# Perform synchronous subscribes
myAWSIoTMQTTClient.subscribe(topic, 1, myCallbackContainer.messageForward)
myAWSIoTMQTTClient.subscribe(topic + "/republish", 1, myCallbackContainer.messagePrint)
time.sleep(2)

# Publish to the same topic in a loop forever
loopCount = 0
while True:
    myAWSIoTMQTTClient.publishAsync(topic, "New Message " + str(loopCount), 1, ackCallback=myCallbackContainer.pubackCallback)
    loopCount += 1
    time.sleep(1)
| AWSIoTPythonSDK | /AWSIoTPythonSDK-1.5.2.tar.gz/AWSIoTPythonSDK-1.5.2/samples/basicPubSub/basicPubSub_APICallInCallback.py | basicPubSub_APICallInCallback.py |
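`messageForward` in the sample above republishes each message to `<topic>/republish` with `publishAsync` from inside a subscription callback. The sketch below reproduces that forwarding step against a hypothetical `RecordingClient` stub, which only mimics the `publishAsync(topic, payload, QoS, ackCallback)` call shape used in the samples, so the behaviour can be checked without a broker. `FakeMessage` is likewise an illustrative stand-in for the SDK's message object, not a real SDK type.

```python
from collections import namedtuple

# Hypothetical stand-in for the SDK's message object: only .topic and .payload are used.
FakeMessage = namedtuple("FakeMessage", ["topic", "payload"])


class RecordingClient(object):
    """Hypothetical stub that records publishAsync calls instead of sending them."""

    def __init__(self):
        self.published = []

    def publishAsync(self, topic, payload, QoS, ackCallback=None):
        self.published.append((topic, payload, QoS))
        return len(self.published)  # pretend packet id


def forward(client, message, suffix="/republish"):
    """Republish a received message to '<topic><suffix>' using the non-blocking call."""
    payload = message.payload
    if isinstance(payload, bytes):
        payload = payload.decode("utf-8", "replace")  # MQTT payloads arrive as bytes on Python 3
    target = message.topic + suffix
    client.publishAsync(target, payload, 1)
    return target


client = RecordingClient()
forward(client, FakeMessage("sdk/test/Python", b"New Message 0"))
print(client.published)  # [('sdk/test/Python/republish', 'New Message 0', 1)]
```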

from AWSIoTPythonSDK.MQTTLib import AWSIoTMQTTShadowClient
import logging
import time
import json
import argparse

# Shadow JSON schema:
#
# Name: Bot
# {
#     "state": {
#         "desired":{
#             "property":<INT VALUE>
#         }
#     }
# }

# Custom Shadow callback
def customShadowCallback_Delta(payload, responseStatus, token):
    # payload is a JSON string ready to be parsed using json.loads(...)
    # in both Py2.x and Py3.x
    print(responseStatus)
    payloadDict = json.loads(payload)
    print("++++++++DELTA++++++++++")
    print("property: " + str(payloadDict["state"]["property"]))
    print("version: " + str(payloadDict["version"]))
    print("+++++++++++++++++++++++\n\n")

# Read in command-line parameters
parser = argparse.ArgumentParser()
parser.add_argument("-e", "--endpoint", action="store", required=True, dest="host", help="Your AWS IoT custom endpoint")
parser.add_argument("-r", "--rootCA", action="store", required=True, dest="rootCAPath", help="Root CA file path")
parser.add_argument("-c", "--cert", action="store", dest="certificatePath", help="Certificate file path")
parser.add_argument("-k", "--key", action="store", dest="privateKeyPath", help="Private key file path")
parser.add_argument("-p", "--port", action="store", dest="port", type=int, help="Port number override")
parser.add_argument("-w", "--websocket", action="store_true", dest="useWebsocket", default=False,
                    help="Use MQTT over WebSocket")
parser.add_argument("-n", "--thingName", action="store", dest="thingName", default="Bot", help="Targeted thing name")
parser.add_argument("-id", "--clientId", action="store", dest="clientId", default="basicShadowDeltaListener",
                    help="Targeted client id")

args = parser.parse_args()
host = args.host
rootCAPath = args.rootCAPath
certificatePath = args.certificatePath
privateKeyPath = args.privateKeyPath
port = args.port
useWebsocket = args.useWebsocket
thingName = args.thingName
clientId = args.clientId

if args.useWebsocket and args.certificatePath and args.privateKeyPath:
    parser.error("X.509 cert authentication and WebSocket are mutually exclusive. Please pick one.")
    exit(2)

if not args.useWebsocket and (not args.certificatePath or not args.privateKeyPath):
    parser.error("Missing credentials for authentication.")
    exit(2)

# Port defaults
if args.useWebsocket and not args.port:  # When no port override for WebSocket, default to 443
    port = 443
if not args.useWebsocket and not args.port:  # When no port override for non-WebSocket, default to 8883
    port = 8883

# Configure logging
logger = logging.getLogger("AWSIoTPythonSDK.core")
logger.setLevel(logging.DEBUG)
streamHandler = logging.StreamHandler()
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
streamHandler.setFormatter(formatter)
logger.addHandler(streamHandler)

# Init AWSIoTMQTTShadowClient
myAWSIoTMQTTShadowClient = None
if useWebsocket:
    myAWSIoTMQTTShadowClient = AWSIoTMQTTShadowClient(clientId, useWebsocket=True)
    myAWSIoTMQTTShadowClient.configureEndpoint(host, port)
    myAWSIoTMQTTShadowClient.configureCredentials(rootCAPath)
else:
    myAWSIoTMQTTShadowClient = AWSIoTMQTTShadowClient(clientId)
    myAWSIoTMQTTShadowClient.configureEndpoint(host, port)
    myAWSIoTMQTTShadowClient.configureCredentials(rootCAPath, privateKeyPath, certificatePath)

# AWSIoTMQTTShadowClient configuration
myAWSIoTMQTTShadowClient.configureAutoReconnectBackoffTime(1, 32, 20)
myAWSIoTMQTTShadowClient.configureConnectDisconnectTimeout(10)  # 10 sec
myAWSIoTMQTTShadowClient.configureMQTTOperationTimeout(5)  # 5 sec

# Connect to AWS IoT
myAWSIoTMQTTShadowClient.connect()

# Create a deviceShadow with persistent subscription
deviceShadowHandler = myAWSIoTMQTTShadowClient.createShadowHandlerWithName(thingName, True)

# Listen on deltas
deviceShadowHandler.shadowRegisterDeltaCallback(customShadowCallback_Delta)

# Loop forever
while True:
    time.sleep(1)
| AWSIoTPythonSDK | /AWSIoTPythonSDK-1.5.2.tar.gz/AWSIoTPythonSDK-1.5.2/samples/basicShadow/basicShadowDeltaListener.py | basicShadowDeltaListener.py |
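A shadow delta document carries the differing desired fields under a top-level `state` key, alongside `version`, as the delta callback above reads them. That callback indexes `payloadDict["state"]["property"]` directly, which would raise `KeyError` for a delta about any other field. A more tolerant parsing sketch follows; `parse_delta` is a hypothetical helper, not an SDK function.

```python
import json


def parse_delta(payload, key="property"):
    """Return (value, version) from a shadow delta JSON string.

    The key may be absent when the delta concerns other fields; None is
    returned for it in that case instead of raising KeyError.
    """
    doc = json.loads(payload)
    return doc.get("state", {}).get(key), doc.get("version")


print(parse_delta('{"version": 7, "state": {"property": 3}}'))  # (3, 7)
```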

from AWSIoTPythonSDK.MQTTLib import AWSIoTMQTTShadowClient
import logging
import time
import json
import argparse

# Shadow JSON schema:
#
# Name: Bot
# {
#     "state": {
#         "desired":{
#             "property":<INT VALUE>
#         }
#     }
# }

# Custom Shadow callback
def customShadowCallback_Update(payload, responseStatus, token):
    # payload is a JSON string ready to be parsed using json.loads(...)
    # in both Py2.x and Py3.x
    if responseStatus == "timeout":
        print("Update request " + token + " timed out!")
    if responseStatus == "accepted":
        payloadDict = json.loads(payload)
        print("~~~~~~~~~~~~~~~~~~~~~~~")
        print("Update request with token: " + token + " accepted!")
        print("property: " + str(payloadDict["state"]["desired"]["property"]))
        print("~~~~~~~~~~~~~~~~~~~~~~~\n\n")
    if responseStatus == "rejected":
        print("Update request " + token + " rejected!")

def customShadowCallback_Delete(payload, responseStatus, token):
    if responseStatus == "timeout":
        print("Delete request " + token + " timed out!")
    if responseStatus == "accepted":
        print("~~~~~~~~~~~~~~~~~~~~~~~")
        print("Delete request with token: " + token + " accepted!")
        print("~~~~~~~~~~~~~~~~~~~~~~~\n\n")
    if responseStatus == "rejected":
        print("Delete request " + token + " rejected!")

# Read in command-line parameters
parser = argparse.ArgumentParser()
parser.add_argument("-e", "--endpoint", action="store", required=True, dest="host", help="Your AWS IoT custom endpoint")
parser.add_argument("-r", "--rootCA", action="store", required=True, dest="rootCAPath", help="Root CA file path")
parser.add_argument("-c", "--cert", action="store", dest="certificatePath", help="Certificate file path")
parser.add_argument("-k", "--key", action="store", dest="privateKeyPath", help="Private key file path")
parser.add_argument("-p", "--port", action="store", dest="port", type=int, help="Port number override")
parser.add_argument("-w", "--websocket", action="store_true", dest="useWebsocket", default=False,
                    help="Use MQTT over WebSocket")
parser.add_argument("-n", "--thingName", action="store", dest="thingName", default="Bot", help="Targeted thing name")
parser.add_argument("-id", "--clientId", action="store", dest="clientId", default="basicShadowUpdater", help="Targeted client id")

args = parser.parse_args()
host = args.host
rootCAPath = args.rootCAPath
certificatePath = args.certificatePath
privateKeyPath = args.privateKeyPath
port = args.port
useWebsocket = args.useWebsocket
thingName = args.thingName
clientId = args.clientId

if args.useWebsocket and args.certificatePath and args.privateKeyPath:
    parser.error("X.509 cert authentication and WebSocket are mutually exclusive. Please pick one.")
    exit(2)

if not args.useWebsocket and (not args.certificatePath or not args.privateKeyPath):
    parser.error("Missing credentials for authentication.")
    exit(2)

# Port defaults
if args.useWebsocket and not args.port:  # When no port override for WebSocket, default to 443
    port = 443
if not args.useWebsocket and not args.port:  # When no port override for non-WebSocket, default to 8883
    port = 8883

# Configure logging
logger = logging.getLogger("AWSIoTPythonSDK.core")
logger.setLevel(logging.DEBUG)
streamHandler = logging.StreamHandler()
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
streamHandler.setFormatter(formatter)
logger.addHandler(streamHandler)

# Init AWSIoTMQTTShadowClient
myAWSIoTMQTTShadowClient = None
if useWebsocket:
    myAWSIoTMQTTShadowClient = AWSIoTMQTTShadowClient(clientId, useWebsocket=True)
    myAWSIoTMQTTShadowClient.configureEndpoint(host, port)
    myAWSIoTMQTTShadowClient.configureCredentials(rootCAPath)
else:
    myAWSIoTMQTTShadowClient = AWSIoTMQTTShadowClient(clientId)
    myAWSIoTMQTTShadowClient.configureEndpoint(host, port)
    myAWSIoTMQTTShadowClient.configureCredentials(rootCAPath, privateKeyPath, certificatePath)

# AWSIoTMQTTShadowClient configuration
myAWSIoTMQTTShadowClient.configureAutoReconnectBackoffTime(1, 32, 20)
myAWSIoTMQTTShadowClient.configureConnectDisconnectTimeout(10)  # 10 sec
myAWSIoTMQTTShadowClient.configureMQTTOperationTimeout(5)  # 5 sec

# Connect to AWS IoT
myAWSIoTMQTTShadowClient.connect()

# Create a deviceShadow with persistent subscription
deviceShadowHandler = myAWSIoTMQTTShadowClient.createShadowHandlerWithName(thingName, True)

# Delete shadow JSON doc
deviceShadowHandler.shadowDelete(customShadowCallback_Delete, 5)

# Update shadow in a loop
loopCount = 0
while True:
    JSONPayload = '{"state":{"desired":{"property":' + str(loopCount) + '}}}'
    deviceShadowHandler.shadowUpdate(JSONPayload, customShadowCallback_Update, 5)
    loopCount += 1
    time.sleep(1)
| AWSIoTPythonSDK | /AWSIoTPythonSDK-1.5.2.tar.gz/AWSIoTPythonSDK-1.5.2/samples/basicShadow/basicShadowUpdater.py | basicShadowUpdater.py |
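The updater above builds its JSON by string concatenation, which works for an integer but is easy to get wrong once values contain strings or quotes. An alternative sketch builds the same document structure with `json.dumps`; `desired_state_payload` is a hypothetical helper, not an SDK function.

```python
import json


def desired_state_payload(**desired):
    """Build a shadow update document that sets the given desired values."""
    return json.dumps({"state": {"desired": desired}})


print(desired_state_payload(property=3))  # {"state": {"desired": {"property": 3}}}
```

Passing `desired_state_payload(property=loopCount)` to `shadowUpdate` would send a document equivalent to the sample's, differing only in whitespace.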

## 1. OVERVIEW

`AWSOM` stands for: **A**mazing **W**ays to **S**earch and **O**rganise **M**edia. `AWSOM` is a media automation toolkit, originally intended to automate the Adobe Creative Cloud video production workflow at [South West London TV](https://www.southwestlondon.tv).

This Python package contains a mixture of OS-independent scripts for managing common (desktop/LAN) automation tasks, such as renaming media files and making backups, as well as some exciting automations built on top of the superb `Pymiere` library by [Quentin Masingarbe](https://github.com/qmasingarbe/pymiere). Unfortunately `Pymiere` currently only works on **Windows 10**, so the corresponding `AWSOM` automations are limited to Windows users as well (for now).

If you're interested in exploring the rest of the **AWSOM** toolkit, which is primarily aimed at serious YouTubers, video editors, and power-users of online video such as journalists, researchers, and teachers, please follow us on Twitter and start a conversation:

https://twitter.com/AppAwsom

Both this package and a snazzy new web version of `AWSOM`, which we hope to launch soon, provide a tonne of powerful features completely free, but certain 'Pro' features like Automatic Speech Recognition/Subtitling use third-party services and may require a registered account with us or them to access on a pay-per-use basis.

## 2. INSTALLATION

A very lightweight installation with only three dependencies: `pymiere`, `cleverdict` and `pysimplegui`.

`pip install AWSOM`

or, to cover all bases:

`python -m pip install AWSOM --upgrade --user`

## 3. BASIC USE

`AWSOM` currently supports Sony's commonly used XDCAM format. More formats will be added soon, or feel free to get involved and contribute one!

1. Connect and power on your camera/storage device, then open up your Python interpreter/IDE:

```python
import AWSOM
AWSOM.ingest(from_device=True)
```

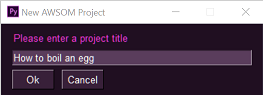

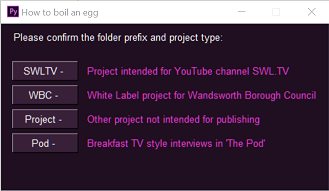

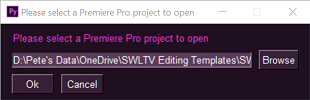

2. Follow the (beautiful `PySimpleGui`) prompts to give your project a name and category/prefix, and point it to a template `.prproj` file to copy from.

3. Go and have a cup of coffee, knowing that when you come back all the fiddly, non-creative, importy-draggy stuff will be done and you can get on with *actual* editing!

* New project folder created on your main work hard drive.

* All media and metadata copied to subfolder `XDROOT`.

* All clips, thumbnails and XML files renamed to include the project name.

* `MEDIAPRO.XML` updated with new names.

* Your selected template `.prproj` file opened in Premiere Pro.

* Template file saved with the new project name to the new folder.

* Rushes bin created if not already in the template.

* All clips imported into the Rushes bin.

* Rushes sequence created if not already in the template.

* All clips inserted in the Rushes sequence, all ready and waiting for you!
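The renaming step can be pictured with `pathlib`, which the package prefers over `os`. This sketch only plans the renames: it assumes a `<project>_<original name>` scheme and a set of file suffixes purely for illustration, since the actual naming convention and the `MEDIAPRO.XML` update are not described here. `prefixed_name` and `plan_renames` are hypothetical helpers, not `AWSOM` functions.

```python
from pathlib import Path


def prefixed_name(path, project_name, sep="_"):
    """Return a Path in the same folder with project_name prepended to the file name."""
    path = Path(path)
    return path.with_name(project_name + sep + path.name)


def plan_renames(folder, project_name, suffixes=(".mp4", ".mxf", ".jpg", ".xml")):
    """Map each matching file in folder to its proposed new path (nothing is renamed)."""
    return {
        p: prefixed_name(p, project_name)
        for p in sorted(Path(folder).iterdir())
        if p.is_file() and p.suffix.lower() in suffixes
    }


print(prefixed_name("XDROOT/Clip/C0001.MP4", "MyShow").as_posix())  # XDROOT/Clip/MyShow_C0001.MP4
```

Applying such a plan would then be a loop of `old.rename(new)` over the returned mapping.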

## 4. UNDER THE BONNET

None of `AWSOM`'s automations for `Adobe Premiere Pro` would be possible without `Pymiere`.

`AWSOM`'s library of choice for user interaction is `PySimpleGui`, which creates beautiful-looking popups, input windows and output displays with very few lines of code. We think its documentation is really fun and accessible too, which makes the learning curve for newcomers really painless.

Internally, `AWSOM` makes extensive use of `CleverDict`, a handy custom data type which allows developers to flexibly switch between Python dictionary `{key: value}` notation and `object.attribute` notation. For more information about `CleverDict` see:

https://pypi.org/project/cleverdict/
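To illustrate the dual notation, here is a minimal dictionary subclass that also exposes its keys as attributes. It is a hypothetical `AttrDict` written only for this sketch; `CleverDict` has its own, richer implementation and API, so its documentation is the reference for real behaviour.

```python
class AttrDict(dict):
    """A dict whose keys can also be read and written as attributes.

    Minimal illustration only (keys that clash with dict methods, such as
    "items", still resolve to the method); this is not CleverDict.
    """

    def __getattr__(self, name):
        # Only called when normal attribute lookup fails.
        try:
            return self[name]
        except KeyError:
            raise AttributeError(name) from None

    def __setattr__(self, name, value):
        self[name] = value

    def __delattr__(self, name):
        try:
            del self[name]
        except KeyError:
            raise AttributeError(name) from None


project = AttrDict(name="Election Special")
project.category = "NEWS"
print(project["category"], project.name)  # NEWS Election Special
```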

`AWSOM` uses `pathlib` in preference to `os` wherever possible for handling files, directories, and drives.

The primary class used in `AWSOM` is `Project`, which encapsulates all the control data used by the rest of the main program.

Functions and methods are generally as 'atomic' as possible, i.e. one function generally does just one thing and is kept as short as reasonably possible. The exceptions to this are *workflow functions* like `ingest()`, which by their nature chain together potentially long sequences of individual functions, passing a `Project` object between them.
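The "atomic steps chained by a workflow function" pattern might look roughly like this. `Project`, the step functions and `ingest_sketch` are hypothetical stand-ins (the real `Project` class and `ingest()` do far more), shown only to make the hand-off of a single `Project` object between steps concrete.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List


@dataclass
class Project:
    """Hypothetical stand-in for AWSOM's Project class, holding shared control data."""
    name: str
    steps_done: List[str] = field(default_factory=list)


# Each atomic step does one thing, then hands the same Project on.
def create_project_folder(project: Project) -> Project:
    project.steps_done.append("create_project_folder")
    return project


def copy_media(project: Project) -> Project:
    project.steps_done.append("copy_media")
    return project


def rename_clips(project: Project) -> Project:
    project.steps_done.append("rename_clips")
    return project


def run_steps(project: Project, steps: Iterable[Callable[[Project], Project]]) -> Project:
    """Chain atomic steps, passing the Project object from one to the next."""
    for step in steps:
        project = step(project)
    return project


def ingest_sketch(project: Project) -> Project:
    """Hypothetical workflow function in the spirit of ingest()."""
    return run_steps(project, [create_project_folder, copy_media, rename_clips])


print(ingest_sketch(Project("MyShow")).steps_done)
```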

## 5. CONTRIBUTING

Please join our virtual team if you have an interest in video editing, production, workflow automation or simply have an idea for improving this package. We're particularly keen to connect with anyone who can help us make `Pymiere` work on other operating systems, and with folk already active in the Adobe/Premiere/ExtendScript space or working on tools for speech recognition, subtitles, media content management, and online video generally (especially, but not only, YouTube). We're also on the lookout for professional help with UX/UI design and all things HTML/CSS to take our web app version of `AWSOM` to the next level.

Our preferred process for onboarding new contributors is as follows:

1. Say hello to us on [Twitter](https://twitter.com/AppAwsom) initially so we can "put a face to the name".

2. Fork this repository. Also **STAR** this repository for bonus karma!

3. Create new branches with the following standardised names as required:

* `cosmetic`: for reformatting and changes to comments, README, or user input/output e.g. print(), input() and GUI.

* `enhancements`: for new features and extensions to old features

* `refactoring`: for better ways to code existing features

* `tests`: for new or better test cases

* `bugfix`: for solutions to existing issues

* `miscellaneous`: for anything else

4. We're naively zealous fans of *Test-Driven Development*, so please start by creating a separate `test_xyz.py` script for any coding changes, and document your tests (and any new code) clearly enough that they'll tell us everything we need to know about your rationale and implementation approach.

5. When you're ready and any new code passes all your/our tests, create a *Pull Request* from one of your branches (above) back to the `main` branch of this repository.

If you'd be kind enough to follow that approach it will speed things on their way and cause less brain-ache for us, thanks!

| AWSOM | /AWSOM-0.14.tar.gz/AWSOM-0.14/README.md | README.md |

##########
AWS Scout2
##########

.. image:: https://travis-ci.org/nccgroup/Scout2.svg?branch=master
    :target: https://travis-ci.org/nccgroup/Scout2

.. image:: https://coveralls.io/repos/github/nccgroup/Scout2/badge.svg?branch=master
    :target: https://coveralls.io/github/nccgroup/Scout2

.. image:: https://badge.fury.io/py/AWSScout2.svg
    :target: https://badge.fury.io/py/AWSScout2
    :align: right

***********
Description
***********

Scout2 is a security tool that lets AWS administrators assess their
environment's security posture. Using the AWS API, Scout2 gathers configuration
data for manual inspection and highlights high-risk areas automatically. Rather
than poring over dozens of pages on the web, Scout2 supplies a clear view of
the attack surface automatically.

**Note:** Scout2 is stable and actively maintained, but a number of features and
internals may change. As such, please bear with us as we find time to work on,
and improve, the tool. Feel free to report a bug with details (*e.g.* console
output using the "--debug" argument), request a new feature, or send a pull
request.

************
Installation
************

Install via `pip`_:

::

    $ pip install awsscout2

Install from source:

::

    $ git clone https://github.com/nccgroup/Scout2
    $ cd Scout2
    $ pip install -r requirements.txt
    $ python setup.py install

************
Requirements
************

Computing resources
-------------------

Scout2 is a multi-threaded tool that fetches and stores your AWS account's configuration settings in memory during
runtime. It is expected that the tool will run with no issues on any modern laptop or equivalent VM.

**Running Scout2 in a VM with limited computing resources such as a t2.micro instance is not intended and will likely
result in the process being killed.**

Python
------

Scout2 is written in Python and supports the following versions:

* 2.7

* 3.3

* 3.4

* 3.5

* 3.6

AWS Credentials
---------------

To run Scout2, you will need valid AWS credentials (*e.g.* Access Key ID and Secret Access Key).

The role, or user account, associated with these credentials requires read-only access for all resources in a number of
services, including but not limited to CloudTrail, EC2, IAM, RDS, Redshift, and S3.

The following AWS Managed Policies can be attached to the principal in order to grant necessary permissions:

* ReadOnlyAccess

* SecurityAudit

Compliance with AWS' Acceptable Use Policy
------------------------------------------

Use of Scout2 does not require AWS users to complete and submit the AWS
Vulnerability / Penetration Testing Request Form. Scout2 only performs AWS API
calls to fetch configuration data and identify security gaps, which is not
considered security scanning as it does not impact AWS' network and
applications.

Usage
-----

After performing a number of AWS API calls, Scout2 will create a local HTML report and open it in the default browser.

Using a computer already configured to use the AWS CLI, boto3, or another AWS SDK, you can run Scout2 with the
following command:

::

    $ Scout2

**Note:** EC2 instances with an IAM role fit in this category.

If multiple profiles are configured in your .aws/credentials and .aws/config files, you may specify which credentials
to use with the following command:

::

    $ Scout2 --profile <PROFILE_NAME>

If you have a CSV file containing the API access key ID and secret, you may run Scout2 with the following command:

::

    $ Scout2 --csv-credentials <CREDENTIALS.CSV>

**********************
Advanced documentation
**********************

The following command will provide the list of available command line options:

::

    $ Scout2 --help

For further details, check out our Wiki pages at https://github.com/nccgroup/Scout2/wiki.

*******
License
*******

GPLv2: See LICENSE.

.. _pip: https://pip.pypa.io/en/stable/index.html

| AWSScout2 | /AWSScout2-3.2.1.tar.gz/AWSScout2-3.2.1/README.rst | README.rst |

# AWS External Account Scanner

> Xenos is Greek for stranger.

AWSXenos will list all the trust relationships in all the IAM roles and S3 buckets in an AWS account, and give you a breakdown of all the accounts that have trust relationships to your account. It will also highlight whether or not the trusts have an [external ID](https://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_create_for-user_externalid.html).

This tool reports against the [Trusted Relationship Technique](https://attack.mitre.org/techniques/T1199/) of the ATT&CK Framework.

* For the "known" accounts list, AWSXenos uses a modified version of [known AWS Account IDs](https://github.com/rupertbg/aws-public-account-ids).

* For the Org accounts list, AWSXenos queries AWS Organizations.

* AWS services are classified separately.

* Everything else is classified as an unknown account.
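The classification above can be sketched as a small function. This is a simplified illustration, not the AWSXenos implementation (which parses principals with `policyuniverse`'s `ARN` class). It follows the order `Scan.collate_findings` uses: service principal first, then Organization accounts, then known accounts. The helper names and the plain string parsing are assumptions made for the sketch.

```python
from typing import Optional, Set


def account_id_from_principal(principal: str) -> Optional[str]:
    """Extract the 12-digit account ID from an ARN or a bare account ID (hypothetical helper)."""
    if principal.isdigit() and len(principal) == 12:
        return principal
    # ARNs look like arn:partition:service:region:account-id:resource
    parts = principal.split(":")
    if len(parts) >= 6 and parts[0] == "arn" and parts[4].isdigit():
        return parts[4]
    return None


def classify_principal(principal: str, known: Set[str], org: Set[str]) -> str:
    """Bucket a trust-policy principal into one of the four report categories."""
    # Service principals such as config.amazonaws.com
    if principal.endswith(".amazonaws.com"):
        return "aws_services"
    account_id = account_id_from_principal(principal)
    if account_id in org:
        return "org_accounts"
    if account_id in known:
        return "known_accounts"
    return "unknown_accounts"
```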

## Example

## Why

IAM Access Analyzer falls short because:

1. You need to enable it in every region.

2. Identified external entities might be known entities, e.g. a trusted third-party vendor or a vendor you no longer trust. An account number on its own is seldom useful.

3. The zone of trust is fixed to the AWS organisation, so you won’t know whether a trust between sandbox->prod has been established.

4. It does not identify AWS service principals. This matters mainly because of [Wiz's AWS Config, et al. vulnerabilities](http://i.blackhat.com/USA21/Wednesday-Handouts/us-21-Breaking-The-Isolation-Cross-Account-AWS-Vulnerabilities.pdf).
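To see why service principals need their own category, it helps to look at how a trust policy names them: the `Principal` element is either `"*"` or a map from principal type (`AWS`, `Service`, `Federated`, ...) to a string or a list. The sketch below groups the principals of a policy's `Allow` statements by that type. It is a hypothetical helper for illustration only; it ignores `NotPrincipal` and conditions.

```python
import json
from typing import Any, Dict, List


def _as_list(value: Any) -> List[Any]:
    # Statement, Principal values, etc. may be a single item or a list
    return value if isinstance(value, list) else [value]


def principals_by_type(policy_json: str) -> Dict[str, List[str]]:
    """Group the principals of Allow statements by principal type."""
    policy = json.loads(policy_json)
    grouped: Dict[str, List[str]] = {}
    for statement in _as_list(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal", {})
        if principal == "*":
            # A bare "*" principal means anyone
            grouped.setdefault("*", []).append("*")
            continue
        for kind, values in principal.items():
            grouped.setdefault(kind, []).extend(_as_list(values))
    return grouped
```

With this grouping, anything under `Service` (for example `config.amazonaws.com`) can be reported separately from the account ARNs listed under `AWS`.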

## How to run

### CLI

```sh
pip install AWSXenos
awsxenos --reporttype HTML -w report.html
awsxenos --reporttype JSON -w report.json
```

The first command writes an HTML report and the second a JSON report; each report is also printed to stdout.

See the [example report](example/example.html).

### Library

```python
from awsxenos.scan import Scan
from awsxenos.report import Report

# The scan calls IAM, S3 and Organizations with the default boto3 credentials
s = Scan()
r = Report(s.findings, s.known_accounts_data)
json_summary = r.JSON_report()
html_summary = r.HTML_report()
```
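The JSON report can be post-processed with the standard library. The sketch below relies on the keys that `Report._summarise` emits in `report.py` (`"unknown_accounts"`, and `"ARN"` and `"external_id"` per entry); the helper name `trusts_without_external_id` is hypothetical. It lists the resources that trust an unknown account without requiring an external ID.

```python
import json
from typing import List


def trusts_without_external_id(json_summary: str) -> List[str]:
    """Return the sorted, de-duplicated ARNs trusted by unknown accounts without an external ID."""
    summary = json.loads(json_summary)
    return sorted({
        entry["ARN"]
        for entry in summary.get("unknown_accounts", [])
        if not entry.get("external_id", False)
    })
```

Usage, assuming `r` is the `Report` built above: `trusts_without_external_id(r.JSON_report())`.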

### IAM Permissions

The following IAM permissions are required to run a scan:

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Action": [
                "iam:ListRoles",
                "organizations:ListAccounts",
                "s3:ListAllMyBuckets",
                "s3:GetBucketPolicy",
                "s3:GetBucketAcl"
            ],
            "Effect": "Allow",
            "Resource": "*"
        }
    ]
}
```
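Before attaching a policy, you can check that it covers these actions. The sketch below is a simplified checker, not an IAM policy evaluator: it treats action names as case-insensitive glob patterns (IAM supports `*` and `?` wildcards in actions) and deliberately ignores `Deny`, `NotAction`, `Resource` and `Condition`. `REQUIRED_ACTIONS` mirrors the list above; `missing_actions` is a hypothetical helper.

```python
import fnmatch
from typing import Any, Dict, Iterable, List

# The actions listed above, matching the API calls made in scan.py
REQUIRED_ACTIONS = [
    "iam:ListRoles",
    "organizations:ListAccounts",
    "s3:ListAllMyBuckets",
    "s3:GetBucketPolicy",
    "s3:GetBucketAcl",
]


def _as_list(value: Any) -> List[Any]:
    return value if isinstance(value, list) else [value]


def missing_actions(policy: Dict[str, Any], required: Iterable[str] = REQUIRED_ACTIONS) -> List[str]:
    """Return the required actions that no Allow statement in the policy matches."""
    # Lowercase everything because IAM action names are case-insensitive
    patterns = [
        action.lower()
        for statement in _as_list(policy.get("Statement", []))
        if statement.get("Effect") == "Allow"
        for action in _as_list(statement.get("Action", []))
    ]
    return [
        action for action in required
        if not any(fnmatch.fnmatchcase(action.lower(), pattern) for pattern in patterns)
    ]
```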

## Development

```sh
python3 -m venv env
source env/bin/activate
pip install -r requirements.txt
```

## I want to add more known accounts

Create a PR or raise an issue. Contributions are welcome.

## Features

- [x] IAM Roles

- [x] S3 Bucket Policies and ACLs

- [x] Use as library

- [x] HTML and JSON output

- [x] Supports AWS Services

## TODO

- [ ] Add support for more services with resource policies, e.g. Secrets Manager, KMS, SNS, SQS, Lambda

- [ ] Add support for Cognito, RAM

- [ ] Add support for VPCE

| AWSXenos | /AWSXenos-0.0.2.tar.gz/AWSXenos-0.0.2/README.md | README.md |

from collections import defaultdict
from typing import List, Dict, DefaultDict
import json

from jinja2 import Environment, FileSystemLoader  # type: ignore
from policyuniverse.arn import ARN  # type: ignore

from awsxenos.finding import AccountType, Finding
from awsxenos import package_path


class Report:
    def __init__(self, findings: DefaultDict[str, AccountType], account_info: DefaultDict[str, Dict]) -> None:
        self.summary = self._summarise(findings, account_info)

    def _summarise(
        self, findings: DefaultDict[str, AccountType], account_info: DefaultDict[str, Dict]
    ) -> DefaultDict[str, List]:
        summary = defaultdict(list)
        for resource, accounttype in findings.items():
            # Refactor
            # for account_type, principal in finding
            if accounttype.known_accounts:
                for finding in accounttype.known_accounts:
                    role_arn = ARN(finding.principal)
                    summary["known_accounts"].append(
                        {
                            "ARN": resource,
                            "principal": accounttype.known_accounts,
                            "external_info": account_info[role_arn.account_number],
                            "external_id": finding.external_id,
                        }
                    )
            if accounttype.org_accounts:
                for finding in accounttype.org_accounts:
                    role_arn = ARN(finding.principal)
                    summary["org_accounts"].append(
                        {
                            "ARN": resource,
                            "principal": accounttype.org_accounts,
                            "external_info": account_info[role_arn.account_number],
                        }
                    )
            if accounttype.aws_services:
                for finding in accounttype.aws_services:
                    role_arn = ARN(finding.principal)
                    summary["aws_services"].append(
                        {
                            "ARN": resource,
                            "principal": accounttype.aws_services,
                            "external_info": account_info[role_arn.tech],
                        }
                    )
            if accounttype.unknown_accounts:
                for finding in accounttype.unknown_accounts:
                    role_arn = ARN(finding.principal)
                    summary["unknown_accounts"].append(
                        {
                            "ARN": resource,
                            "principal": accounttype.unknown_accounts,
                            "external_info": account_info[role_arn.account_number],
                            "external_id": finding.external_id,
                        }
                    )
        return summary

    def JSON_report(self) -> str:
        """Return the Findings in JSON format

        Returns:
            str: Return the Findings in JSON format
        """
        return json.dumps(self.summary, indent=4, default=str)

    def HTML_report(self) -> str:
        """Generate an HTML report based on the template.html

        Returns:
            str: return HTML
        """
        jinja_env = Environment(loader=FileSystemLoader(package_path.resolve().parent))
        template = jinja_env.get_template("template.html")
        return template.render(summary=self.summary) | AWSXenos | /AWSXenos-0.0.2.tar.gz/AWSXenos-0.0.2/awsxenos/report.py | report.py |

import argparse
from collections import defaultdict
from typing import Any, Optional, Dict, List, DefaultDict, Set
import json
import sys

import boto3  # type: ignore
from botocore.exceptions import ClientError  # type: ignore
from policyuniverse.arn import ARN  # type: ignore
from policyuniverse.policy import Policy  # type: ignore
from policyuniverse.statement import Statement, ConditionTuple  # type: ignore

from awsxenos.finding import AccountType, Finding
from awsxenos.report import Report
from awsxenos import package_path


class Scan:
    def __init__(self, exclude_service: Optional[bool] = True, exclude_aws: Optional[bool] = True) -> None:
        self.known_accounts_data = defaultdict(dict)  # type: DefaultDict[str, Dict[Any, Any]]
        self.findings = defaultdict(AccountType)  # type: DefaultDict[str, AccountType]
        self._buckets = self.list_account_buckets()
        self.roles = self.get_roles(exclude_service, exclude_aws)
        self.accounts = self.get_all_accounts()
        self.bucket_policies = self.get_bucket_policies()
        self.bucket_acls = self.get_bucket_acls()
        for resource in ["roles", "bucket_policies", "bucket_acls"]:
            if resource != "bucket_acls":
                self.findings.update(self.collate_findings(self.accounts, getattr(self, resource)))
            else:
                self.findings.update(self.collate_acl_findings(self.accounts, getattr(self, resource)))

    def get_org_accounts(self) -> DefaultDict[str, Dict]:
        """Get Account Ids from the AWS Organization

        Returns:
            DefaultDict: Key of Account Ids. Value of other Information
        """
        accounts = defaultdict(dict)  # type: DefaultDict[str, Dict]
        orgs = boto3.client("organizations")
        paginator = orgs.get_paginator("list_accounts")
        try:
            account_iterator = paginator.paginate()
            for account_resp in account_iterator:
                for account in account_resp["Accounts"]:
                    accounts[account["Id"]] = account
            return accounts
        except Exception as e:
            print("[!] - Failed to get organization accounts")
            print(e)
        return accounts

    def get_bucket_acls(self) -> DefaultDict[str, List[Dict[Any, Any]]]:
        """Get a dictionary of buckets and their ACL grants from the AWS Account

        Returns:
            DefaultDict[str, List[Dict[Any, Any]]]: Key of BucketARN, Value of the list of Grants
        """
        bucket_acls = defaultdict(str)
        buckets = self._buckets
        s3 = boto3.client("s3")
        for bucket in buckets["Buckets"]:
            bucket_arn = f'arn:aws:s3:::{bucket["Name"]}'
            try:
                bucket_acls[bucket_arn] = s3.get_bucket_acl(Bucket=bucket["Name"])["Grants"]
            except ClientError as e:
                if e.response["Error"]["Code"] == "AccessDenied":
                    # Record a placeholder grant so the unreadable bucket still appears in the findings
                    bucket_acls[bucket_arn] = [
                        {
                            "Grantee": {"DisplayName": "AccessDenied", "ID": "AccessDenied", "Type": "CanonicalUser"},
                            "Permission": "FULL_CONTROL",
                        }
                    ]
                else:
                    print(e)
                    continue
        return bucket_acls

    def get_bucket_policies(self) -> DefaultDict[str, Dict[Any, Any]]:
        """Get a dictionary of buckets and their policies from the AWS Account

        Returns:
            DefaultDict[str, str]: Key of BucketARN, Value of PolicyDocument
        """
        bucket_policies = defaultdict(str)
        buckets = self._buckets
        s3 = boto3.client("s3")
        for bucket in buckets["Buckets"]:
            bucket_arn = f'arn:aws:s3:::{bucket["Name"]}'
            try:
                bucket_policies[bucket_arn] = json.loads(s3.get_bucket_policy(Bucket=bucket["Name"])["Policy"])
            except ClientError as e:
                if e.response["Error"]["Code"] == "AccessDenied":
                    # Record a placeholder policy (111122223333 is the AWS documentation example account)
                    # so the unreadable bucket still appears in the findings
                    bucket_policies[bucket_arn] = {
                        "Version": "2012-10-17",
                        "Statement": [
                            {
                                "Sid": "AccessDeniedOnResource",
                                "Effect": "Allow",
                                "Principal": {"AWS": ["arn:aws:iam::111122223333:root"]},
                                "Action": ["s3:*"],
                                "Resource": f"{bucket_arn}",
                            }
                        ],
                    }
                    continue
                elif e.response["Error"]["Code"] == "NoSuchBucketPolicy":
                    continue
                else:
                    print(e)
                    continue
        return bucket_policies

    def get_roles(
        self, exclude_service: Optional[bool] = True, exclude_aws: Optional[bool] = True
    ) -> DefaultDict[str, Dict[Any, Any]]:
        """Get a dictionary of roles and their policies from the AWS Account

        Args:
            exclude_service (Optional[bool], optional): exclude roles starting with /service-role/. Defaults to True.
            exclude_aws (Optional[bool], optional): exclude roles starting with /aws-service-role/. Defaults to True.

        Returns:
            DefaultDict[str, str]: Key of RoleNames, Value of AssumeRolePolicyDocument
        """
        roles = defaultdict(str)
        iam = boto3.client("iam")
        paginator = iam.get_paginator("list_roles")
        role_iterator = paginator.paginate()
        for role_resp in role_iterator:
            for role in role_resp["Roles"]:
                if role["Path"] == "/service-role/" and exclude_service:
                    continue
                elif role["Path"].startswith("/aws-service-role/") and exclude_aws:
                    continue
                else:
                    roles[role["Arn"]] = role["AssumeRolePolicyDocument"]
        return roles

    def list_account_buckets(self) -> Dict[str, Dict[Any, Any]]:
        s3 = boto3.client("s3")
        return s3.list_buckets()

    def get_all_accounts(self) -> DefaultDict[str, Set]:
        """Get all known accounts and the accounts in the AWS Organization

        Returns:
            DefaultDict[str, Set]: Key of account type. Value account ids
        """
        accounts = defaultdict(set)  # type: DefaultDict[str, Set]
        with open(f"{package_path.resolve().parent}/accounts.json", "r") as f:
            accounts_file = json.load(f)
        for account in accounts_file:
            self.known_accounts_data[account["id"]] = account
        accounts["known_accounts"] = set(self.known_accounts_data.keys())
        # Populate Org accounts
        org_accounts = self.get_org_accounts()
        aws_canonical_user = self._buckets["Owner"]
        # Add to the set of org_accounts
        accounts["org_accounts"] = set(org_accounts.keys())
        accounts["org_accounts"].add(aws_canonical_user["ID"])
        # Combine the metadata (the dict union operator requires Python 3.9+)
        self.known_accounts_data[aws_canonical_user["ID"]] = {"owner": aws_canonical_user["DisplayName"]}
        self.known_accounts_data = self.known_accounts_data | org_accounts  # type: ignore
        return accounts

    def collate_acl_findings(
        self, accounts: DefaultDict[str, Set], resources: DefaultDict[str, List[Dict[Any, Any]]]
    ) -> DefaultDict[str, AccountType]:
        """Combine all accounts with all the acls to classify findings

        Args:
            accounts (DefaultDict[str, Set]): Key of account type. Value account ids (and canonical user ids)
            resources (DefaultDict[str, List[Dict[Any, Any]]]): Key of BucketARN. Value list of ACL Grants

        Returns:
            DefaultDict[str, AccountType]: Key of ARN, Value of AccountType
        """
        findings = defaultdict(AccountType)  # type: DefaultDict[str, AccountType]
        for resource, grants in resources.items():
            for grant in grants:
                if grant["Grantee"]["ID"] == self._buckets["Owner"]["ID"]:
                    continue  # Don't add if the ACL is of the same account
                elif grant["Grantee"]["ID"] in accounts["known_accounts"]:
                    findings[resource].known_accounts.append(Finding(principal=grant["Grantee"]["ID"], external_id=True))
                elif grant["Grantee"]["ID"] in accounts["org_accounts"]:
                    findings[resource].org_accounts.append(Finding(principal=grant["Grantee"]["ID"], external_id=True))
                else:
                    findings[resource].unknown_accounts.append(
                        Finding(principal=grant["Grantee"]["ID"], external_id=True)
                    )
        return findings

    def collate_findings(
        self, accounts: DefaultDict[str, Set], resources: DefaultDict[str, Dict[Any, Any]]
    ) -> DefaultDict[str, AccountType]:
        """Combine all accounts with all the resources to classify findings

        Args:
            accounts (DefaultDict[str, Set]): Key of account type. Value account ids
            resources (DefaultDict[str, Dict[Any, Any]]): Key ResourceIdentifier. Value Dict PolicyDocument

        Returns:
            DefaultDict[str, AccountType]: Key of ARN, Value of AccountType
        """
        findings = defaultdict(AccountType)  # type: DefaultDict[str, AccountType]
        for resource, policy_document in resources.items():
            try:
                policy = Policy(policy_document)
            except Exception:
                print(policy_document)
                continue
            for unparsed_principal in policy.whos_allowed():
                try:
                    principal = ARN(unparsed_principal.value)  # type: Any
                except Exception as e:
                    print(e)
                    findings[resource].known_accounts.append(Finding(principal=unparsed_principal, external_id=True))
                    continue
                # Check if Principal is an AWS Service
                if principal.service:
                    findings[resource].aws_services.append(Finding(principal=principal.arn, external_id=True))
                # Check against org_accounts
                elif principal.account_number in accounts["org_accounts"]:
                    findings[resource].org_accounts.append(Finding(principal=principal.arn, external_id=True))
                # Check against known external accounts
                elif (
                    principal.account_number in accounts["known_accounts"]
                    or ConditionTuple(category="saml-endpoint", value="https://signin.aws.amazon.com/saml")
                    in policy.whos_allowed()
                ):
                    sts_set = False
                    for pstate in policy.statements:
                        if "sts" in pstate.action_summary():
                            try:
                                conditions = [
                                    k.lower() for k in list(pstate.statement["Condition"]["StringEquals"].keys())
                                ]
                                has_external_id = "sts:externalid" in conditions
                            except Exception:
                                # The statement has no Condition/StringEquals block
                                has_external_id = False
                            findings[resource].known_accounts.append(
                                Finding(principal=principal.arn, external_id=has_external_id)
                            )
                            sts_set = True
                            break
                    if not sts_set:
                        findings[resource].known_accounts.append(Finding(principal=principal.arn, external_id=False))
                # Unknown Account
                else:
                    sts_set = False
                    for pstate in policy.statements:
                        if "sts" in pstate.action_summary():
                            try:
                                conditions = [
                                    k.lower() for k in list(pstate.statement["Condition"]["StringEquals"].keys())
                                ]
                                has_external_id = "sts:externalid" in conditions
                            except Exception:
                                # The statement has no Condition/StringEquals block
                                has_external_id = False
                            findings[resource].unknown_accounts.append(
                                Finding(principal=principal.arn, external_id=has_external_id)
                            )
                            sts_set = True
                            break
                    if not sts_set:
                        findings[resource].unknown_accounts.append(Finding(principal=principal.arn, external_id=False))
        return findings


def cli():
    parser = argparse.ArgumentParser(description="Scan an AWS Account for external trusts")
    parser.add_argument(
        "--reporttype",
        dest="reporttype",
        action="store",
        default="all",
        help="Type of report to generate. JSON or HTML",
    )
    parser.add_argument(
        "--include_service_roles",
        dest="service_roles",
        action="store_true",
        default=False,
        help="Include service roles in the report",
    )
    parser.add_argument(
        "--include_aws_service_roles",
        dest="aws_service_roles",
        action="store_true",
        default=False,
        help="Include AWS roles in the report",
    )
    parser.add_argument(
        "-w",
        "--write-output",
        dest="write_output",
        action="store",
        default=False,
        help="Path to write output",
    )
    args = parser.parse_args()
    reporttype = args.reporttype
    service_roles = args.service_roles
    aws_service_roles = args.aws_service_roles
    write_output = args.write_output
    # Scan() takes exclude_* flags, so invert the --include_* options
    s = Scan(exclude_service=not service_roles, exclude_aws=not aws_service_roles)
    r = Report(s.findings, s.known_accounts_data)
    if reporttype.lower() == "json":
        summary = r.JSON_report()
    elif reporttype.lower() == "html":
        summary = r.HTML_report()
    else:
        # Any other value, including the default "all", falls back to JSON
        summary = r.JSON_report()
    if write_output:
        with open(f"{write_output}", "w") as f:
            f.write(summary)
    sys.stdout.write(summary)


if __name__ == "__main__":
    cli() | AWSXenos | /AWSXenos-0.0.2.tar.gz/AWSXenos-0.0.2/awsxenos/scan.py | scan.py |

import inspect
import types

__all__ = ["aliases", "expose", "make_callable", "AWSpiderPlugin"]

EXPOSED_FUNCTIONS = {}
CALLABLE_FUNCTIONS = {}
MEMOIZED_FUNCTIONS = {}
FUNCTION_ALIASES = {}


def aliases(*args):
    def decorator(f):
        FUNCTION_ALIASES[id(f)] = args
        return f
    return decorator


def expose(func=None, interval=0, name=None, memoize=False):
    if func is not None:
        EXPOSED_FUNCTIONS[id(func)] = {"interval":interval, "name":name}
        return func
    def decorator(f):
        EXPOSED_FUNCTIONS[id(f)] = {"interval":interval, "name":name}
        return f
    return decorator


def make_callable(func=None, interval=0, name=None, memoize=False):
    if func is not None:
        CALLABLE_FUNCTIONS[id(func)] = {"interval":interval, "name":name}
        return func
    def decorator(f):
        CALLABLE_FUNCTIONS[id(f)] = {"interval":interval, "name":name}
        return f
    return decorator


class AWSpiderPlugin(object):

    def __init__(self, spider):
        self.spider = spider
        check_method = lambda x:isinstance(x[1], types.MethodType)
        instance_methods = filter(check_method, inspect.getmembers(self))
        for instance_method in instance_methods:
            instance_id = id(instance_method[1].__func__)
            if instance_id in EXPOSED_FUNCTIONS:
                self.spider.expose(
                    instance_method[1],
                    interval=EXPOSED_FUNCTIONS[instance_id]["interval"],
                    name=EXPOSED_FUNCTIONS[instance_id]["name"])
                if instance_id in FUNCTION_ALIASES:
                    for name in FUNCTION_ALIASES[instance_id]:
                        self.spider.expose(
                            instance_method[1],
                            interval=EXPOSED_FUNCTIONS[instance_id]["interval"],
                            name=name)
            if instance_id in CALLABLE_FUNCTIONS:
                self.spider.expose(
                    instance_method[1],
                    interval=CALLABLE_FUNCTIONS[instance_id]["interval"],
                    name=CALLABLE_FUNCTIONS[instance_id]["name"])
                if instance_id in FUNCTION_ALIASES:
                    # Register the method once more under each alias name
                    for name in FUNCTION_ALIASES[instance_id]:
                        self.spider.expose(
                            instance_method[1],
                            interval=CALLABLE_FUNCTIONS[instance_id]["interval"],
                            name=name)

    def setReservationFastCache(self, uuid, data):
        return self.spider.setReservationFastCache(uuid, data)

    def setReservationCache(self, uuid, data):
        return self.spider.setReservationCache(uuid, data)

    def getPage(self, *args, **kwargs):
        return self.spider.getPage(*args, **kwargs) | AWSpider | /AWSpider-0.3.2.12.tar.gz/AWSpider-0.3.2.12/awspider/plugin.py | plugin.py |

from twisted.internet.defer import DeferredList
from twisted.web.client import HTTPClientFactory, _parse
from twisted.internet import reactor
import xml.etree.cElementTree as ET
import logging
logger = logging.getLogger("main")
import random
import socket


def getPage( url, method='GET', postdata=None, headers=None, agent="AWSpider", timeout=60, cookies=None, followRedirect=1 ):
    scheme, host, port, path = _parse(url)
    factory = HTTPClientFactory(
        url,
        method=method,
        postdata=postdata,
        headers=headers,
        agent=agent,
        timeout=timeout,
        cookies=cookies,
        followRedirect=followRedirect
    )
    if scheme == 'https':
        from twisted.internet import ssl
        contextFactory = ssl.ClientContextFactory()
        reactor.connectSSL(host, port, factory, contextFactory, timeout=timeout)
    else:
        reactor.connectTCP(host, port, factory, timeout=timeout)
    return factory.deferred


class NetworkAddressGetter():

    local_ip = None
    public_ip = None

    def __init__( self ):
        self.ip_functions = [self.getDomaintools, self.getIPPages]
        random.shuffle(self.ip_functions)

    def __call__( self ):
        d = self.getAmazonIPs()
        d.addCallback( self._getAmazonIPsCallback )
        d.addErrback( self.getPublicIP )
        return d

    def getAmazonIPs( self ):
        logger.debug( "Getting local IP from Amazon." )
        a = getPage( "http://169.254.169.254/2009-04-04/meta-data/local-ipv4", timeout=5 )
        logger.debug( "Getting public IP from Amazon." )
        b = getPage( "http://169.254.169.254/2009-04-04/meta-data/public-ipv4", timeout=5 )
        d = DeferredList([a,b], consumeErrors=True)
        return d

    def _getAmazonIPsCallback( self, data ):
        if data[0][0] == True:
            self.local_ip = data[0][1]
            logger.debug( "Got local IP %s from Amazon." % self.local_ip )
        else:
            logger.debug( "Could not get local IP from Amazon." )
        if data[1][0] == True:
            public_ip = data[1][1]
            logger.debug( "Got public IP %s from Amazon." % public_ip )
            response = {}
            if self.local_ip is not None:
                response["local_ip"] = self.local_ip
            response["public_ip"] = public_ip
            return response
        else:
            logger.debug( "Could not get public IP from Amazon." )
            raise Exception( "Could not get public IP from Amazon." )

    def getPublicIP( self, error=None ):
        if len(self.ip_functions) > 0:
            func = self.ip_functions.pop()
            d = func()
            d.addCallback( self._getPublicIPCallback )
            d.addErrback( self.getPublicIP )
            return d
        else:
            logger.error( "Unable to get public IP address. Check your network connection" )
            response = {}
            if self.local_ip is not None:
                response["local_ip"] = self.local_ip
            else:
                response["local_ip"] = socket.gethostbyname(socket.gethostname())
            return response

    def _getPublicIPCallback( self, public_ip ):
        response = {}
        response["public_ip"] = public_ip
        if self.local_ip is not None:
            response["local_ip"] = self.local_ip
        else:
            response["local_ip"] = socket.gethostbyname(socket.gethostname())
        return response

    def getIPPages(self):
        logger.debug( "Getting public IP from ippages.com." )
        d = getPage( "http://www.ippages.com/xml/", timeout=5 )
        d.addCallback( self._getIPPagesCallback )
        return d

    def _getIPPagesCallback(self, data ):
        domaintools_xml = ET.XML( data )
        public_ip = domaintools_xml.find("ip").text
        logger.debug( "Got public IP %s from ippages.com." % public_ip )
        return public_ip

    def getDomaintools(self):
        logger.debug( "Getting public IP from domaintools.com." )
        d = getPage( "http://ip-address.domaintools.com/myip.xml", timeout=5 )
        d.addCallback( self._getDomaintoolsCallback )
        return d

    def _getDomaintoolsCallback(self, data):
        domaintools_xml = ET.XML( data )
        public_ip = domaintools_xml.find("ip_address").text
        logger.debug( "Got public IP %s from domaintools.com." % public_ip )
        return public_ip


def getNetworkAddress():
    n = NetworkAddressGetter()
    d = n()
    d.addCallback( _getNetworkAddressCallback )
    return d


def _getNetworkAddressCallback( data ):
    return data


if __name__ == "__main__":
    import logging.handlers
    handler = logging.StreamHandler()
    formatter = logging.Formatter("%(levelname)s: %(message)s %(pathname)s:%(lineno)d")
    handler.setFormatter(formatter)
    logger.addHandler(handler)
    logger.setLevel(logging.DEBUG)
    reactor.callWhenRunning( getNetworkAddress )
    reactor.run() | AWSpider | /AWSpider-0.3.2.12.tar.gz/AWSpider-0.3.2.12/awspider/networkaddress.py | networkaddress.py |

import cPickle

import twisted.python.failure

import datetime

import dateutil.parser

import hashlib

import logging

import time

import copy

from twisted.internet.defer import maybeDeferred

from .requestqueuer import RequestQueuer

from .unicodeconverter import convertToUTF8, convertToUnicode

from .exceptions import StaleContentException

class ReportedFailure(twisted.python.failure.Failure):

pass

# A UTC class.

class CoordinatedUniversalTime(datetime.tzinfo):

ZERO = datetime.timedelta(0)

def utcoffset(self, dt):

return self.ZERO

def tzname(self, dt):

return "UTC"

def dst(self, dt):

return self.ZERO

UTC = CoordinatedUniversalTime()

LOGGER = logging.getLogger("main")

class PageGetter:

def __init__(self,

s3,

aws_s3_http_cache_bucket,

time_offset=0,

rq=None):

"""

Create an S3 based HTTP cache.

**Arguments:**

* *s3* -- S3 client object.

* *aws_s3_http_cache_bucket* -- S3 bucket to use for the HTTP cache.

**Keyword arguments:**

* *rq* -- Request Queuer object. (Default ``None``)

"""

self.s3 = s3

self.aws_s3_http_cache_bucket = aws_s3_http_cache_bucket

self.time_offset = time_offset

if rq is None:

self.rq = RequestQueuer()

else:

self.rq = rq

def clearCache(self):

"""

Clear the S3 bucket containing the S3 cache.

"""

d = self.s3.emptyBucket(self.aws_s3_http_cache_bucket)

return d

def getPage(self,

url,

method='GET',

postdata=None,

headers=None,

agent="AWSpider",

timeout=60,

cookies=None,

follow_redirect=1,

prioritize=False,

hash_url=None,

cache=0,

content_sha1=None,

confirm_cache_write=False):

"""

Make a cached HTTP Request.

**Arguments:**

* *url* -- URL for the request.

**Keyword arguments:**

* *method* -- HTTP request method. (Default ``'GET'``)

* *postdata* -- Dictionary of strings to post with the request.

(Default ``None``)

* *headers* -- Dictionary of strings to send as request headers.

(Default ``None``)

* *agent* -- User agent to send with request. (Default

``'AWSpider'``)

* *timeout* -- Request timeout, in seconds. (Default ``60``)

* *cookies* -- Dictionary of strings to send as request cookies.

(Default ``None``).

* *follow_redirect* -- Boolean switch to follow HTTP redirects.

(Default ``True``)

* *prioritize* -- Move this request to the front of the request

queue. (Default ``False``)

* *hash_url* -- URL string used to indicate a common resource.

Example: "http://digg.com" and "http://www.digg.com" could both

use hash_url, "http://digg.com" (Default ``None``)

* *cache* -- Cache mode. ``1``, immediately return contents of

cache if available. ``0``, check resource, return cache if not

stale. ``-1``, ignore cache. (Default ``0``)

* *content_sha1* -- SHA-1 hash of content. If this matches the

hash of data returned by the resource, raises a

StaleContentException.

* *confirm_cache_write* -- Wait to confirm cache write before returning.

"""

request_kwargs = {

"method":method.upper(),

"postdata":postdata,

"headers":headers,

"agent":agent,

"timeout":timeout,

"cookies":cookies,

"follow_redirect":follow_redirect,

"prioritize":prioritize}

cache = int(cache)

if cache not in [-1,0,1]:

raise Exception("Unknown caching mode.")

if not isinstance(url, str):

url = convertToUTF8(url)

if hash_url is not None and not isinstance(hash_url, str):

hash_url = convertToUTF8(hash_url)

# Create request_hash to serve as a cache key from

# either the URL or user-provided hash_url.

if hash_url is None:

request_hash = hashlib.sha1(cPickle.dumps([

url,

headers,

agent,

cookies])).hexdigest()

else:

request_hash = hashlib.sha1(cPickle.dumps([

hash_url,

headers,

agent,

cookies])).hexdigest()

if request_kwargs["method"] != "GET":

d = self.rq.getPage(url, **request_kwargs)

d.addCallback(self._checkForStaleContent, content_sha1, request_hash)

return d

if cache == -1:

# Cache mode -1. Bypass cache entirely.

LOGGER.debug("Getting request %s for URL %s." % (request_hash, url))

d = self.rq.getPage(url, **request_kwargs)

d.addCallback(self._returnFreshData,

request_hash,

url,

confirm_cache_write)

d.addErrback(self._requestWithNoCacheHeadersErrback,

request_hash,

url,

confirm_cache_write,

request_kwargs)

d.addCallback(self._checkForStaleContent, content_sha1, request_hash)

return d

elif cache == 0:

# Cache mode 0. Check cache, send cached headers, possibly use cached data.

LOGGER.debug("Checking S3 Head object request %s for URL %s." % (request_hash, url))

# Check if there is a cache entry, return headers.

d = self.s3.headObject(self.aws_s3_http_cache_bucket, request_hash)

d.addCallback(self._checkCacheHeaders,

request_hash,

url,

request_kwargs,

confirm_cache_write,

content_sha1)

d.addErrback(self._requestWithNoCacheHeaders,

request_hash,

url,

request_kwargs,

confirm_cache_write)

d.addCallback(self._checkForStaleContent, content_sha1, request_hash)

return d

elif cache == 1:

# Cache mode 1. Use cache immediately, if possible.

LOGGER.debug("Getting S3 object request %s for URL %s." % (request_hash, url))

d = self.s3.getObject(self.aws_s3_http_cache_bucket, request_hash)

d.addCallback(self._returnCachedData, request_hash)

d.addErrback(self._requestWithNoCacheHeaders,

request_hash,

url,

request_kwargs,

confirm_cache_write)

d.addCallback(self._checkForStaleContent, content_sha1, request_hash)

return d

def _checkCacheHeaders(self,

data,

request_hash,

url,

request_kwargs,

confirm_cache_write,

content_sha1):

LOGGER.debug("Got S3 Head object request %s for URL %s." % (request_hash, url))

http_history = {}

#if "content-length" in data["headers"] and int(data["headers"]["content-length"][0]) == 0:

# raise Exception("Zero Content length, do not use as cache.")

if "content-sha1" in data["headers"]:

http_history["content-sha1"] = data["headers"]["content-sha1"][0]

# Filter?

if "request-failures" in data["headers"]:

http_history["request-failures"] = data["headers"]["request-failures"][0].split(",")

if "content-changes" in data["headers"]:

http_history["content-changes"] = data["headers"]["content-changes"][0].split(",")

# If cached data is not stale, return it.

if "cache-expires" in data["headers"]:

expires = dateutil.parser.parse(data["headers"]["cache-expires"][0])

now = datetime.datetime.now(UTC)

if expires > now:

if "content-sha1" in http_history and http_history["content-sha1"] == content_sha1:

LOGGER.debug("Raising StaleContentException (1) on %s" % request_hash)

raise StaleContentException()

LOGGER.debug("Cached data %s for URL %s is not stale. Getting from S3." % (request_hash, url))

d = self.s3.getObject(self.aws_s3_http_cache_bucket, request_hash)

d.addCallback(self._returnCachedData, request_hash)

d.addErrback(

self._requestWithNoCacheHeaders,

request_hash,

url,

request_kwargs,

confirm_cache_write,

http_history=http_history)

return d

modified_request_kwargs = copy.deepcopy(request_kwargs)

# At this point, cached data may or may not be stale.

# If cached data has an etag header, include it in the request.

if "cache-etag" in data["headers"]:

modified_request_kwargs["etag"] = data["headers"]["cache-etag"][0]

# If cached data has a last-modified header, include it in the request.

if "cache-last-modified" in data["headers"]:

modified_request_kwargs["last_modified"] = data["headers"]["cache-last-modified"][0]

LOGGER.debug("Requesting %s for URL %s with etag and last-modified headers." % (request_hash, url))

# Make the request. A callback means a 20x response. An errback

# could be a 30x response, indicating the cache is not stale.

d = self.rq.getPage(url, **modified_request_kwargs)

d.addCallback(

self._returnFreshData,

request_hash,

url,

confirm_cache_write,

http_history=http_history)

d.addErrback(

self._handleRequestWithCacheHeadersError,

request_hash,

url,

request_kwargs,

confirm_cache_write,

data,

http_history,

content_sha1)

return d

def _returnFreshData(self,

data,

request_hash,

url,

confirm_cache_write,

http_history=None):

LOGGER.debug("Got request %s for URL %s." % (request_hash, url))

data["pagegetter-cache-hit"] = False

data["content-sha1"] = hashlib.sha1(data["response"]).hexdigest()

if http_history is not None and "content-sha1" in http_history:

if http_history["content-sha1"] == data["content-sha1"]:

return data

d = maybeDeferred(self._storeData,

data,

request_hash,

confirm_cache_write,

http_history=http_history)

d.addErrback(self._storeDataErrback, data, request_hash)

return d

def _requestWithNoCacheHeaders(self,

error,

request_hash,

url,

request_kwargs,

confirm_cache_write,

http_history=None):

try:

error.raiseException()

except StaleContentException, e:

LOGGER.debug("Raising StaleContentException (2) on %s" % request_hash)

raise StaleContentException()

except Exception, e:

pass

# No header stored in the cache. Make the request.

LOGGER.debug("Unable to find header for request %s on S3, fetching from %s." % (request_hash, url))

d = self.rq.getPage(url, **request_kwargs)

d.addCallback(

self._returnFreshData,

request_hash,

url,

confirm_cache_write,

http_history=http_history)

d.addErrback(

self._requestWithNoCacheHeadersErrback,

request_hash,

url,

confirm_cache_write,

request_kwargs,

http_history=http_history)

return d

    def _requestWithNoCacheHeadersErrback(self,
                                          error,
                                          request_hash,
                                          url,
                                          confirm_cache_write,
                                          request_kwargs,
                                          http_history=None):
        LOGGER.error(error.value.__dict__)
        LOGGER.error("Unable to get request %s for URL %s.\n%s" % (
            request_hash,
            url,
            error))
        if http_history is None:
            http_history = {}
        # Record the time of this failure, keeping only the three most recent.
        if "request-failures" not in http_history:
            http_history["request-failures"] = [str(int(self.time_offset + time.time()))]
        else:
            http_history["request-failures"].append(str(int(self.time_offset + time.time())))
            http_history["request-failures"] = http_history["request-failures"][-3:]
        LOGGER.debug("Writing data for failed request %s to S3." % request_hash)
        headers = {}
        headers["request-failures"] = ",".join(http_history["request-failures"])
        d = self.s3.putObject(
            self.aws_s3_http_cache_bucket,
            request_hash,
            "",
            content_type="text/plain",
            headers=headers)
        if confirm_cache_write:
            d.addCallback(self._requestWithNoCacheHeadersErrbackCallback, error)
            return d
        return error

    def _requestWithNoCacheHeadersErrbackCallback(self, data, error):
        return error

    def _handleRequestWithCacheHeadersError(self,
                                            error,
                                            request_hash,
                                            url,
                                            request_kwargs,
                                            confirm_cache_write,
                                            data,
                                            http_history,
                                            content_sha1):
        if error.value.status == "304":
            if http_history is not None and "content-sha1" in http_history and http_history["content-sha1"] == content_sha1:
                LOGGER.debug("Raising StaleContentException (3) on %s" % request_hash)
                raise StaleContentException()
            LOGGER.debug("Request %s for URL %s hasn't been modified since it was last downloaded. Getting data from S3." % (request_hash, url))
            d = self.s3.getObject(self.aws_s3_http_cache_bucket, request_hash)
            d.addCallback(self._returnCachedData, request_hash)
            d.addErrback(
                self._requestWithNoCacheHeaders,
                request_hash,
                url,
                request_kwargs,
                confirm_cache_write,
                http_history=http_history)
            return d
        else:
            if http_history is None:
                http_history = {}
            # Record the time of this failure, keeping only the three most recent.
            if "request-failures" not in http_history:
                http_history["request-failures"] = [str(int(self.time_offset + time.time()))]
            else:
                http_history["request-failures"].append(str(int(self.time_offset + time.time())))
                http_history["request-failures"] = http_history["request-failures"][-3:]
            LOGGER.debug("Writing data for failed request %s to S3. %s" % (request_hash, error))
            headers = {}
            for key in data["headers"]:
                headers[key] = data["headers"][key][0]
            headers["request-failures"] = ",".join(http_history["request-failures"])
            d = self.s3.putObject(
                self.aws_s3_http_cache_bucket,
                request_hash,
                data["response"],
                content_type=data["headers"]["content-type"][0],
                headers=headers)
            if confirm_cache_write:
                d.addCallback(self._handleRequestWithCacheHeadersErrorCallback, error)
                return d
            return ReportedFailure(error)

    def _handleRequestWithCacheHeadersErrorCallback(self, data, error):
        return ReportedFailure(error)

    def _returnCachedData(self, data, request_hash):
        LOGGER.debug("Got request %s from S3." % (request_hash))
        data["pagegetter-cache-hit"] = True
        data["status"] = 304
        data["message"] = "Not Modified"
        if "content-sha1" in data["headers"]:
            data["content-sha1"] = data["headers"]["content-sha1"][0]
            del data["headers"]["content-sha1"]
        else:
            data["content-sha1"] = hashlib.sha1(data["response"]).hexdigest()
        # Restore the original HTTP caching headers, which _storeData saves
        # on S3 under "cache-" prefixed names.
        if "cache-expires" in data["headers"]:
            data["headers"]["expires"] = data["headers"]["cache-expires"]
            del data["headers"]["cache-expires"]
        if "cache-etag" in data["headers"]:
            data["headers"]["etag"] = data["headers"]["cache-etag"]
            del data["headers"]["cache-etag"]
        if "cache-last-modified" in data["headers"]:
            data["headers"]["last-modified"] = data["headers"]["cache-last-modified"]
            del data["headers"]["cache-last-modified"]
        return data

    def _storeData(self,
                   data,
                   request_hash,
                   confirm_cache_write,
                   http_history=None):
        if len(data["response"]) == 0:
            return self._storeDataErrback(Failure(exc_value=Exception("Response data is of length 0")), data, request_hash)
        #data["content-sha1"] = hashlib.sha1(data["response"]).hexdigest()
        if http_history is None:
            http_history = {}
        if "content-sha1" not in http_history:
            http_history["content-sha1"] = data["content-sha1"]
        if "content-changes" not in http_history:
            http_history["content-changes"] = []
        # Record when the content changed, keeping the ten most recent timestamps.
        if data["content-sha1"] != http_history["content-sha1"]:
            http_history["content-changes"].append(str(int(self.time_offset + time.time())))
            http_history["content-changes"] = http_history["content-changes"][-10:]
        LOGGER.debug("Writing data for request %s to S3." % request_hash)
        headers = {}
        http_history["content-changes"] = [x for x in http_history["content-changes"] if len(x) > 0]
        headers["content-changes"] = ",".join(http_history["content-changes"])
        headers["content-sha1"] = data["content-sha1"]
        # Responses marked no-cache are returned without being written to S3.
        if "cache-control" in data["headers"]:
            if "no-cache" in data["headers"]["cache-control"][0]:
                return data
        if "expires" in data["headers"]:
            headers["cache-expires"] = data["headers"]["expires"][0]
        if "etag" in data["headers"]:
            headers["cache-etag"] = data["headers"]["etag"][0]
        if "last-modified" in data["headers"]:
            headers["cache-last-modified"] = data["headers"]["last-modified"][0]
        if "content-type" in data["headers"]:
            content_type = data["headers"]["content-type"][0]
        d = self.s3.putObject(
            self.aws_s3_http_cache_bucket,
            request_hash,
            data["response"],
            content_type=content_type,
            headers=headers)
        if confirm_cache_write:
            d.addCallback(self._storeDataCallback, data)
            d.addErrback(self._storeDataErrback, data, request_hash)
            return d
        return data

    def _storeDataCallback(self, data, response_data):
        return response_data

    def _storeDataErrback(self, error, response_data, request_hash):
        LOGGER.error("Error storing data for %s" % (request_hash))
        return response_data

    def _checkForStaleContent(self, data, content_sha1, request_hash):
        if "content-sha1" not in data:
            data["content-sha1"] = hashlib.sha1(data["response"]).hexdigest()
        if content_sha1 == data["content-sha1"]:
            LOGGER.debug("Raising StaleContentException (4) on %s" % request_hash)
            raise StaleContentException(content_sha1)
        else:
            return data | AWSpider | /AWSpider-0.3.2.12.tar.gz/AWSpider-0.3.2.12/awspider/pagegetter.py | pagegetter.py |

import codecs

#######################################################
#
# Based on Beautiful Soup's Unicode, Dammit
# http://www.crummy.com/software/BeautifulSoup/
#
#######################################################

# Autodetects character encodings.
# Download from http://chardet.feedparser.org/
try:
    import chardet
    # import chardet.constants
    # chardet.constants._debug = 1
except ImportError:
    chardet = None

# cjkcodecs and iconv_codec make Python know about more character encodings.
# Both are available from http://cjkpython.i18n.org/
# They're built in if you use Python 2.4.
try:
    import cjkcodecs.aliases
except ImportError:
    pass

try:
    import iconv_codec
except ImportError:
    pass

def convertToUnicode(s):
    s = UnicodeConverter(s).unicode
    if isinstance(s, unicode):
        return s
    else:
        return None

def convertToUTF8(s):
    s = UnicodeConverter(s).unicode
    if isinstance(s, unicode):
        return s.encode("utf-8")
    else:
        return None

class UnicodeConverter:
    """A class for detecting the encoding of a *ML document and
    converting it to a Unicode string. If the source encoding is
    windows-1252, can replace MS smart quotes with their HTML or XML
    equivalents."""

    # This dictionary maps commonly seen values for "charset" in HTML
    # meta tags to the corresponding Python codec names. It only covers