| sha (null) | last_modified (null) | library_name (stringclasses, 154 values) | text (stringlengths, 1 to 900k) | metadata (stringlengths, 2 to 348k) | pipeline_tag (stringclasses, 45 values) | id (stringlengths, 5 to 122) | tags (sequencelengths, 1 to 1.84k) | created_at (stringlengths, 25 to 25) | arxiv (sequencelengths, 0 to 201) | languages (sequencelengths, 0 to 1.83k) | tags_str (stringlengths, 17 to 9.34k) | text_str (stringlengths, 0 to 389k) | text_lists (sequencelengths, 0 to 722) | processed_texts (sequencelengths, 1 to 723) | tokens_length (sequencelengths, 1 to 723) | input_texts (sequencelengths, 1 to 61) | embeddings (sequencelengths, 768 to 768) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

null | null | transformers |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# text_classification_model

This model is a fine-tuned version of [dmis-lab/biobert-v1.1](https://huggingface.co/dmis-lab/biobert-v1.1) on an unspecified dataset (the Trainer recorded its name as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.5013

- Accuracy: 0.8046

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2
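For readers who want to reproduce the run, the hyperparameters above map roughly onto the following `transformers.TrainingArguments` keyword arguments. This is a sketch under stated assumptions: training ran on a single device (so the reported batch sizes are per-device sizes), per-epoch evaluation is inferred from the results table rather than recorded, and `output_dir` is a hypothetical placeholder.

```python
# Hyperparameters from the card, keyed by TrainingArguments field names.
# Assumption: one device, so the reported batch sizes are per-device sizes.
HPARAMS = {
    "learning_rate": 2e-5,
    "per_device_train_batch_size": 16,
    "per_device_eval_batch_size": 16,
    "seed": 42,
    "adam_beta1": 0.9,
    "adam_beta2": 0.999,
    "adam_epsilon": 1e-8,
    "lr_scheduler_type": "linear",
    "num_train_epochs": 2,
}


def make_training_args(output_dir: str = "text_classification_model"):
    """Build TrainingArguments from HPARAMS (transformers is imported lazily)."""
    from transformers import TrainingArguments

    return TrainingArguments(
        output_dir=output_dir,  # hypothetical; the card does not record it
        # Inferred from the per-epoch rows in the results table. Transformers 4.37
        # names this `evaluation_strategy`; later releases rename it `eval_strategy`.
        evaluation_strategy="epoch",
        **HPARAMS,
    )
```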

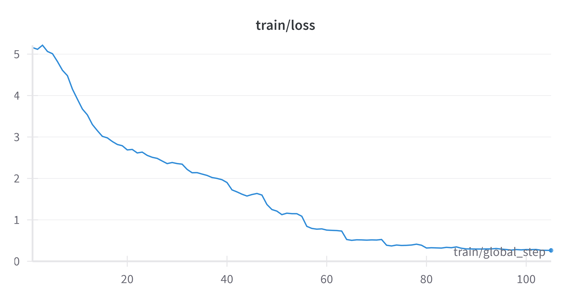

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| No log | 1.0 | 22 | 0.5339 | 0.7586 |
| No log | 2.0 | 44 | 0.5013 | 0.8046 |
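The step counts in this table pin down the learning-rate schedule. With 22 optimizer steps per epoch over 2 epochs (44 steps), the linear scheduler decays the learning rate from 2e-05 to zero. The sketch below reproduces that decay, assuming zero warmup steps because none are listed. It also back-solves the range of training-set sizes that yield 22 batches of 16, assuming a single device, no gradient accumulation, and a kept final partial batch.

```python
# Values taken from the card.
LEARNING_RATE = 2e-5
TRAIN_BATCH_SIZE = 16
NUM_EPOCHS = 2
STEPS_PER_EPOCH = 22                        # step 22 at epoch 1.0 in the table
TOTAL_STEPS = STEPS_PER_EPOCH * NUM_EPOCHS  # 44, matching the final row


def linear_lr(step: int, total_steps: int = TOTAL_STEPS,
              base_lr: float = LEARNING_RATE, warmup_steps: int = 0) -> float:
    """Linear warmup (assumed to be 0 steps) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


def implied_train_size_range(steps_per_epoch: int, batch_size: int) -> tuple[int, int]:
    """Smallest and largest dataset sizes n with ceil(n / batch_size) == steps_per_epoch."""
    return (steps_per_epoch - 1) * batch_size + 1, steps_per_epoch * batch_size


for step in (0, 11, 22, 33, 44):
    print(step, linear_lr(step))
print(implied_train_size_range(STEPS_PER_EPOCH, TRAIN_BATCH_SIZE))
```

Under these assumptions, the learning rate is 1e-05 at the end of epoch 1, and the training split held between 337 and 352 examples.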

### Framework versions

- Transformers 4.37.2

- Pytorch 2.2.0+cu118

- Datasets 2.16.1

- Tokenizers 0.15.1

| {"tags": ["generated_from_trainer"], "metrics": ["accuracy"], "base_model": "dmis-lab/biobert-v1.1", "model-index": [{"name": "text_classification_model", "results": []}]} | text-classification | DifeiT/text_classification_model | [

"transformers",

"safetensors",

"bert",

"text-classification",

"generated_from_trainer",

"base_model:dmis-lab/biobert-v1.1",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | 2024-02-07T17:52:09+00:00 | [] | [] | TAGS

#transformers #safetensors #bert #text-classification #generated_from_trainer #base_model-dmis-lab/biobert-v1.1 #autotrain_compatible #endpoints_compatible #region-us

| text\_classification\_model

===========================

This model is a fine-tuned version of dmis-lab/biobert-v1.1 on the None dataset.

It achieves the following results on the evaluation set:

* Loss: 0.5013

* Accuracy: 0.8046

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 2e-05

* train\_batch\_size: 16

* eval\_batch\_size: 16

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 2

### Training results

### Framework versions

* Transformers 4.37.2

* Pytorch 2.2.0+cu118

* Datasets 2.16.1

* Tokenizers 0.15.1

| [

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 2",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.37.2\n* Pytorch 2.2.0+cu118\n* Datasets 2.16.1\n* Tokenizers 0.15.1"

] | [

"TAGS\n#transformers #safetensors #bert #text-classification #generated_from_trainer #base_model-dmis-lab/biobert-v1.1 #autotrain_compatible #endpoints_compatible #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 2",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.37.2\n* Pytorch 2.2.0+cu118\n* Datasets 2.16.1\n* Tokenizers 0.15.1"

] | [

59,

98,

4,

33

] | [

"passage: TAGS\n#transformers #safetensors #bert #text-classification #generated_from_trainer #base_model-dmis-lab/biobert-v1.1 #autotrain_compatible #endpoints_compatible #region-us \n### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 2### Training results### Framework versions\n\n\n* Transformers 4.37.2\n* Pytorch 2.2.0+cu118\n* Datasets 2.16.1\n* Tokenizers 0.15.1"

] | [

-0.10944147408008575,

0.053713109344244,

-0.0012172600254416466,

0.09685907512903214,

0.18238595128059387,

0.020832300186157227,

0.13931429386138916,

0.09377682209014893,

-0.07848889380693436,

0.04008615016937256,

0.13206815719604492,

0.14133526384830475,

-0.008675121702253819,

0.16392108798027039,

-0.08909863233566284,

-0.21879731118679047,

0.025050809606909752,

0.02071434073150158,

-0.08834467828273773,

0.10643043369054794,

0.09624755382537842,

-0.14774082601070404,

0.08955550938844681,

-0.03434509411454201,

-0.207407146692276,

0.00881494302302599,

0.0553937703371048,

-0.05102277919650078,

0.14984570443630219,

0.024462778121232986,

0.15369917452335358,

0.03893576189875603,

0.10093061625957489,

-0.18052497506141663,

0.011437870562076569,

0.042021073400974274,

0.02648477628827095,

0.07708080112934113,

0.037301138043403625,

-0.017489928752183914,

0.05725602060556412,

-0.10364264994859695,

0.06894387304782867,

0.017666658386588097,

-0.13752369582653046,

-0.2031944841146469,

-0.058352235704660416,

-0.010801785625517368,

0.0787140280008316,

0.07358325272798538,

-0.010892292484641075,

0.14382968842983246,

-0.0729546993970871,

0.0944102331995964,

0.20459027588367462,

-0.2701003849506378,

-0.06430114805698395,

0.060392409563064575,

0.023324577137827873,

0.1002800241112709,

-0.11046892404556274,

-0.0037615918554365635,

0.07767703384160995,

0.017220722511410713,

0.12441705167293549,

-0.03553120419383049,

-0.05518724024295807,

0.002343076514080167,

-0.1426006555557251,

-0.0005174351390451193,

0.13404510915279388,

0.03724352642893791,

-0.05427277460694313,

-0.02378535084426403,

-0.06184786185622215,

-0.13202042877674103,

-0.053002506494522095,

-0.05237647518515587,

0.046896111220121384,

-0.04868428036570549,

-0.07995172590017319,

0.018487239256501198,

-0.09201040863990784,

-0.09484755992889404,

-0.04152214154601097,

0.21071873605251312,

0.032743990421295166,

-0.007539564743638039,

-0.019732248038053513,

0.10068050026893616,

-0.029322553426027298,

-0.1320481151342392,

0.014815191738307476,

0.014006175100803375,

-0.00897915568202734,

-0.0884924978017807,

-0.07716692984104156,

-0.048334330320358276,

0.02986922487616539,

0.12099963426589966,

-0.08950315415859222,

0.03600364923477173,

0.01634262502193451,

0.008918359875679016,

-0.10139903426170349,

0.1684676557779312,

-0.0327543206512928,

-0.0258408784866333,

0.02148263156414032,

0.07736220210790634,

-0.0022698857355862856,

0.013961223885416985,

-0.10193688422441483,

0.00019641190010588616,

0.14216648042201996,

0.02088511735200882,

-0.10290362685918808,

0.0860971137881279,

-0.04768868908286095,

-0.0018666052492335439,

0.010576008819043636,

-0.09318279474973679,

0.02219928614795208,

0.0043305689468979836,

-0.06172427535057068,

-0.05596868321299553,

0.015378701500594616,

0.01716172695159912,

0.03147584944963455,

0.10603844374418259,

-0.09269438683986664,

0.017550207674503326,

-0.08681812137365341,

-0.12800553441047668,

-0.021957794204354286,

-0.042389318346977234,

0.04347999766469002,

-0.12978413701057434,

-0.14203672111034393,

-0.019650088623166084,

0.026431744918227196,

-0.021515075117349625,

-0.008943391963839531,

-0.06419497728347778,

-0.07108835130929947,

0.014853970147669315,

-0.007146491203457117,

0.08230005204677582,

-0.05792856216430664,

0.11181183159351349,

0.08113070577383041,

0.08492080867290497,

-0.04523259773850441,

0.03567865118384361,

-0.11335788667201996,

0.024061355739831924,

-0.22633272409439087,

0.03245256096124649,

-0.05812952294945717,

0.07699701935052872,

-0.07123402506113052,

-0.07975713908672333,

0.012106812559068203,

0.005820432677865028,

0.07998047769069672,

0.12684284150600433,

-0.14418508112430573,

-0.061777085065841675,

0.18012897670269012,

-0.0921759381890297,

-0.1344652622938156,

0.09928448498249054,

-0.06801936030387878,

0.07480364292860031,

0.08894957602024078,

0.1834031641483307,

0.07122062891721725,

-0.09436396509408951,

0.02383040450513363,

-0.026409579440951347,

0.05848385766148567,

-0.018161416053771973,

0.05640963092446327,

0.0267308559268713,

-0.06756096333265305,

0.027944866567850113,

-0.05011734366416931,

0.06232248991727829,

-0.11001894623041153,

-0.06908006966114044,

-0.03447173908352852,

-0.13179911673069,

0.09532072395086288,

0.04908110201358795,

0.07920943200588226,

-0.12393853068351746,

-0.04151648283004761,

0.09390159696340561,

0.08193828910589218,

-0.06172265484929085,

0.002540494315326214,

-0.05530254542827606,

0.049597371369600296,

-0.06648841500282288,

-0.026741569861769676,

-0.16817978024482727,

-0.05255373567342758,

0.015340513549745083,

0.04574039950966835,

0.00004033983714180067,

-0.009098571725189686,

0.08820394426584244,

0.09360433369874954,

-0.07612961530685425,

-0.036884501576423645,

-0.010022558271884918,

0.022541483864188194,

-0.13563644886016846,

-0.19085825979709625,

0.01047260407358408,

-0.03670208156108856,

0.14667633175849915,

-0.2441888302564621,

0.037163570523262024,

-0.04167518764734268,

0.07537330687046051,

0.03990240395069122,

-0.006320870481431484,

-0.037152159959077835,

0.06952279061079025,

-0.03545098379254341,

-0.04864800348877907,

0.05043410882353783,

-0.01203925535082817,

-0.08098333328962326,

-0.05036576837301254,

-0.13813824951648712,

0.20611323416233063,

0.12068458646535873,

-0.09682420641183853,

-0.12776437401771545,

-0.02167893387377262,

-0.034498412162065506,

-0.011536805890500546,

-0.05715937167406082,

0.012261644937098026,

0.12502562999725342,

-0.031977150589227676,

0.14727851748466492,

-0.06989437341690063,

-0.014709606766700745,

0.021126138046383858,

-0.05938083305954933,

0.038372814655303955,

0.10522614419460297,

0.05773712322115898,

-0.12059011310338974,

0.13729991018772125,

0.15997649729251862,

-0.07133892923593521,

0.1307358592748642,

-0.029713621363043785,

-0.06010982021689415,

-0.016541296616196632,

0.001726074144244194,

0.004471072927117348,

0.10864102840423584,

-0.0879756361246109,

-0.002041374798864126,

0.0012930853990837932,

0.03475108742713928,

-0.005258531775325537,

-0.20824356377124786,

-0.04437149316072464,

0.049808427691459656,

-0.02962687984108925,

-0.0012958107981830835,

-0.03175896406173706,

0.008196007460355759,

0.1261981874704361,

0.008520365692675114,

-0.08047447353601456,

0.028357403352856636,

0.005453096237033606,

-0.09650448709726334,

0.2326478362083435,

-0.08493473380804062,

-0.1123005598783493,

-0.09199995547533035,

-0.06692710518836975,

-0.032092656940221786,

0.05514267459511757,

0.05572918429970741,

-0.10129750519990921,

-0.024450527504086494,

-0.07109130918979645,

0.0338052399456501,

0.04334910586476326,

0.049882616847753525,

0.006040588486939669,

0.0031205983832478523,

0.08937637507915497,

-0.0926060602068901,

-0.011365486308932304,

-0.05776748061180115,

-0.053323887288570404,

0.061247725039720535,

0.02101087011396885,

0.11927686631679535,

0.14184343814849854,

-0.04717878997325897,

-0.012255189009010792,

-0.03461328148841858,

0.25444555282592773,

-0.06457799673080444,

-0.03344396874308586,

0.11338948458433151,

-0.018093833699822426,

0.020262060686945915,

0.14821286499500275,

0.04923391714692116,

-0.12073425203561783,

0.05118073895573616,

0.01709880121052265,

-0.024112019687891006,

-0.1864570528268814,

-0.04284955561161041,

-0.018189460039138794,

-0.0235457681119442,

0.08104851096868515,

0.00032875960459932685,

0.028729287907481194,

0.07786870747804642,

0.030807314440608025,

0.07678067684173584,

-0.020389433950185776,

0.059017930179834366,

0.07763490825891495,

0.04024020582437515,

0.12397356331348419,

-0.03144150599837303,

-0.08991508185863495,

0.01921992562711239,

-0.02411729283630848,

0.19463585317134857,

0.018954474478960037,

0.06977277994155884,

0.0323481522500515,

0.14031986892223358,

0.011361903510987759,

0.09096373617649078,

0.021542860195040703,

-0.07325871288776398,

-0.0034972778521478176,

-0.048798661679029465,

-0.05156064033508301,

0.033712297677993774,

-0.104979507625103,

0.06849634647369385,

-0.1571032702922821,

0.014617995359003544,

0.05687861517071724,

0.18357312679290771,

0.06320270150899887,

-0.3373221457004547,

-0.10466318577528,

0.01694956235587597,

-0.010051094926893711,

-0.03657052293419838,

0.01876251958310604,

0.13176007568836212,

-0.06766627728939056,

0.017562415450811386,

-0.05771118402481079,

0.06515300273895264,

-0.020717047154903412,

0.06038397550582886,

0.015499471686780453,

0.08052708208560944,

-0.024586202576756477,

0.05432366952300072,

-0.3042301535606384,

0.2793554961681366,

0.012210153974592686,

0.06556405127048492,

-0.03780967742204666,

-0.024056198075413704,

0.029662681743502617,

0.12182503193616867,

0.05872020497918129,

-0.009794577956199646,

-0.0816820040345192,

-0.25857847929000854,

-0.03291274979710579,

0.03796238452196121,

0.12509401142597198,

-0.03750426322221756,

0.12067973613739014,

-0.027341321110725403,

-0.0010998295620083809,

0.09030421823263168,

-0.03803448751568794,

-0.10686028748750687,

-0.051484230905771255,

-0.03348612040281296,

0.012945868074893951,

0.033540260046720505,

-0.07689404487609863,

-0.09008171409368515,

-0.12332030385732651,

0.14220641553401947,

0.006214819382876158,

-0.015378865413367748,

-0.12370700389146805,

0.08555404841899872,

0.04773669317364693,

-0.08023428171873093,

0.044547706842422485,

0.021515965461730957,

0.07623177021741867,

0.033010274171829224,

-0.05450659990310669,

0.13603758811950684,

-0.0655127614736557,

-0.1781526803970337,

-0.07798083126544952,

0.09323669970035553,

0.03301475942134857,

0.05458112061023712,

0.012319988571107388,

0.01033119484782219,

0.0044071548618376255,

-0.07190921902656555,

0.03312186524271965,

-0.010622088797390461,

0.0541493259370327,

0.04495525360107422,

-0.08211244642734528,

-0.0021786990109831095,

-0.06927915662527084,

-0.02396918274462223,

0.17776599526405334,

0.297780841588974,

-0.08776199817657471,

-0.02129926159977913,

0.043650995939970016,

-0.059134211391210556,

-0.21653319895267487,

0.08894554525613785,

0.053849756717681885,

0.012121160514652729,

0.0522770993411541,

-0.1414755880832672,

0.12409757077693939,

0.0909690260887146,

-0.017719784751534462,

0.0910387933254242,

-0.25454992055892944,

-0.14038456976413727,

0.1300736963748932,

0.18365289270877838,

0.16742165386676788,

-0.15010781586170197,

-0.016715718433260918,

-0.045613765716552734,

-0.08707917481660843,

0.09077699482440948,

-0.09202206879854202,

0.1033034399151802,

-0.01270392443984747,

0.06278050690889359,

0.02649436518549919,

-0.04918475076556206,

0.12513813376426697,

-0.0018358483212068677,

0.1299515813589096,

-0.05811672657728195,

-0.03587581217288971,

0.008275458589196205,

-0.0555911511182785,

0.006941251456737518,

-0.04123333841562271,

0.04955550655722618,

-0.04250127449631691,

-0.03541916236281395,

-0.06391727924346924,

0.035054683685302734,

-0.030096497386693954,

-0.08096279203891754,

-0.030498169362545013,

0.03459034487605095,

0.03894248232245445,

-0.025111252442002296,

0.09888976812362671,

-0.01711418852210045,

0.17377015948295593,

0.10366731882095337,

0.08191465586423874,

-0.05310077220201492,

0.037362828850746155,

0.027515094727277756,

-0.027638491243124008,

0.05311397463083267,

-0.14523997902870178,

0.05440497770905495,

0.12668871879577637,

0.0070512848906219006,

0.13906927406787872,

0.08052156120538712,

-0.006918452680110931,

0.007764794863760471,

0.06947522610425949,

-0.16803422570228577,

-0.07592634111642838,

-0.018707675859332085,

-0.06512561440467834,

-0.11830834299325943,

0.07510421425104141,

0.1169174537062645,

-0.07880526781082153,

-0.0014482070691883564,

-0.042913150042295456,

-0.006208662409335375,

-0.050461892038583755,

0.21409302949905396,

0.07794249802827835,

0.05812203139066696,

-0.07844134420156479,

0.0452500656247139,

0.03187374770641327,

-0.05232816934585571,

0.0032691743690520525,

0.03438930958509445,

-0.10011693090200424,

-0.0399065837264061,

0.09293470531702042,

0.21144318580627441,

-0.04817677661776543,

-0.02658548392355442,

-0.1481412649154663,

-0.1338554471731186,

0.03606114909052849,

0.2073807269334793,

0.10772853344678879,

0.013466369360685349,

-0.005924009718000889,

0.010830284096300602,

-0.14224162697792053,

0.10971441119909286,

0.01849628984928131,

0.10111907869577408,

-0.1547643542289734,

0.17289574444293976,

-0.03544080629944801,

0.014903687871992588,

-0.045301403850317,

0.042208049446344376,

-0.14071819186210632,

0.002551877172663808,

-0.14835818111896515,

-0.028921348974108696,

-0.009597928263247013,

0.003390712197870016,

0.008998354896903038,

-0.07688302546739578,

-0.041871003806591034,

0.0022497614845633507,

-0.11201237887144089,

-0.007811462506651878,

0.03592488169670105,

0.04870067164301872,

-0.10575336962938309,

-0.05586298555135727,

0.021493513137102127,

-0.06583820283412933,

0.05665113404393196,

0.04225341975688934,

0.030601469799876213,

0.06402783840894699,

-0.18039238452911377,

0.013439156115055084,

0.08495180308818817,

-0.007136237807571888,

0.0591316893696785,

-0.0992807075381279,

-0.012164965271949768,

-0.0013019724283367395,

0.06921190768480301,

0.03157706558704376,

0.09674820303916931,

-0.12285931408405304,

0.008087694644927979,

-0.04216936230659485,

-0.05530915781855583,

-0.05417142063379288,

-0.002304220339283347,

0.07427043467760086,

-0.018157778307795525,

0.18111948668956757,

-0.10595612972974777,

0.021114764735102654,

-0.20948372781276703,

-0.011395052075386047,

-0.020164242014288902,

-0.11767257750034332,

-0.14222471415996552,

-0.061268970370292664,

0.06797051429748535,

-0.04016552492976189,

0.12408414483070374,

0.0034603846725076437,

0.07268661260604858,

0.028888950124382973,

-0.057374536991119385,

0.06592098623514175,

0.049189984798431396,

0.22791984677314758,

0.05299513787031174,

-0.05081650987267494,

0.03464484214782715,

0.08134470880031586,

0.12950441241264343,

0.09306518733501434,

0.1892467439174652,

0.16936533153057098,

-0.08003155887126923,

0.08958085626363754,

0.012182183563709259,

-0.04113255813717842,

-0.11338930577039719,

0.006892635021358728,

-0.0600421167910099,

0.043053336441516876,

-0.016288097947835922,

0.18478311598300934,

0.08301368355751038,

-0.15955188870429993,

0.01774722710251808,

-0.07066075503826141,

-0.08888113498687744,

-0.1111636534333229,

0.019713908433914185,

-0.10677595436573029,

-0.16524460911750793,

-0.010326463729143143,

-0.12074429541826248,

-0.011106397025287151,

0.09430275112390518,

-0.0010211615590378642,

-0.011070871725678444,

0.21120165288448334,

0.018921859562397003,

0.04328233376145363,

0.047798722982406616,

-0.004472021479159594,

-0.027789518237113953,

-0.07125243544578552,

-0.10124912112951279,

0.01261509396135807,

-0.02015545591711998,

0.019639333710074425,

-0.06816554814577103,

-0.07298167049884796,

0.03365177661180496,

-0.01116187870502472,

-0.11435437947511673,

0.014770505018532276,

0.034746699035167694,

0.03431622311472893,

0.024469122290611267,

0.01213701069355011,

-0.0016238611424341798,

-0.0048667145892977715,

0.23973706364631653,

-0.07336733490228653,

-0.08220797777175903,

-0.10190779715776443,

0.2968635857105255,

0.056785743683576584,

0.05357861891388893,

0.007695462089031935,

-0.09174983203411102,

0.035212527960538864,

0.22693172097206116,

0.1801627278327942,

-0.10174953937530518,

0.009756136685609818,

-0.03523220866918564,

-0.013595182448625565,

-0.02621130645275116,

0.11767565459012985,

0.10920900106430054,

-0.013167845085263252,

-0.08153272420167923,

-0.0391206257045269,

-0.031048519536852837,

-0.006851898971945047,

-0.03118930757045746,

0.04448593035340309,

0.05371248349547386,

0.027859695255756378,

-0.05896543338894844,

0.05645964667201042,

-0.03966822102665901,

-0.09456275403499603,

0.04876530542969704,

-0.19543996453285217,

-0.1341046541929245,

-0.03566019982099533,

0.06559237092733383,

-0.008533270098268986,

0.05935603752732277,

-0.03833581507205963,

-0.004726612940430641,

0.0512823686003685,

-0.02766638807952404,

-0.04454982653260231,

-0.08385512232780457,

0.06086578965187073,

-0.0851513147354126,

0.18500041961669922,

-0.03128710389137268,

0.05256910249590874,

0.12217538058757782,

0.03458341211080551,

-0.07562374323606491,

0.09914025664329529,

0.04126355051994324,

-0.09176097810268402,

0.04579070955514908,

0.11148159205913544,

-0.04996888339519501,

0.11220591515302658,

0.04785284399986267,

-0.1799127608537674,

0.026669692248106003,

-0.06564437597990036,

-0.0837085098028183,

-0.05238509550690651,

-0.04786420240998268,

-0.04691922292113304,

0.12872935831546783,

0.20321623980998993,

-0.03545892611145973,

0.04360802471637726,

-0.0484817773103714,

0.03462126478552818,

0.06825310736894608,

0.03988729044795036,

-0.06021851301193237,

-0.26811113953590393,

0.019299326464533806,

0.10951387882232666,

-0.028530731797218323,

-0.2805952727794647,

-0.08311720192432404,

-0.017992230132222176,

-0.041358236223459244,

-0.08075045794248581,

0.09241402894258499,

0.10346631705760956,

0.05906432121992111,

-0.06448473781347275,

-0.1474694162607193,

-0.07103075832128525,

0.18663744628429413,

-0.11860021948814392,

-0.12294918298721313

] |

null | null | transformers |

# Model Card for delli/mistral-7b-address-validator-merged

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]
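Here is a minimal loading sketch. It assumes the merged checkpoint loads with the standard `AutoModelForCausalLM` and `AutoTokenizer` classes, as the `mistral` and `text-generation` tags suggest. It also assumes the tokenizer ships a chat template, as the `conversational` tag suggests. The prompt wording in `build_messages` is hypothetical, because the card does not document an expected input format.

```python
# Sketch only: the prompt text below is a hypothetical placeholder.
MODEL_ID = "delli/mistral-7b-address-validator-merged"


def build_messages(address: str) -> list[dict[str, str]]:
    """Wrap an address in a single user turn for the tokenizer's chat template."""
    return [{"role": "user", "content": f"Validate this address: {address}"}]


def generate_reply(address: str, max_new_tokens: int = 64) -> str:
    """Download the model on first use and return its response for one address."""
    # Imported lazily so build_messages can be used without torch/transformers.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=dtype).to(device)

    # Assumes the tokenizer provides a chat template.
    input_ids = tokenizer.apply_chat_template(
        build_messages(address), add_generation_prompt=True, return_tensors="pt"
    ).to(model.device)
    output_ids = model.generate(input_ids, max_new_tokens=max_new_tokens, do_sample=False)
    # Decode only the newly generated tokens, not the echoed prompt.
    return tokenizer.decode(output_ids[0, input_ids.shape[-1]:], skip_special_tokens=True)
```

For example, `generate_reply("1600 Amphitheatre Parkway, Mountain View, CA 94043")` downloads the weights on first use and returns the model's text response. A GPU is strongly recommended for a 7B model.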

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]
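The calculator's estimate is essentially power draw × time × carbon intensity of the local grid. The sketch below applies that product and reports grams of CO2eq, the unit this section asks for. The inputs in the usage note are purely illustrative placeholders, not measurements for this model.

```python
def estimate_co2eq_grams(power_watts: float, hours: float, grid_g_per_kwh: float) -> float:
    """Rough CO2-equivalent estimate: energy used (kWh) times grid carbon intensity (g/kWh)."""
    if power_watts < 0 or hours < 0 or grid_g_per_kwh < 0:
        raise ValueError("inputs must be non-negative")
    energy_kwh = power_watts / 1000 * hours  # watts -> kilowatts, times hours
    return energy_kwh * grid_g_per_kwh
```

For example, a hypothetical 300 W GPU running for 10 hours on a grid that emits 400 g CO2eq/kWh gives about 1,200 g CO2eq.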

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

| {"library_name": "transformers", "tags": []} | text-generation | delli/mistral-7b-address-validator-merged | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | 2024-02-07T17:52:31+00:00 | [

"1910.09700"

] | [] | TAGS

#transformers #safetensors #mistral #text-generation #conversational #arxiv-1910.09700 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# Model Card for Model ID

## Model Details

### Model Description

This is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.

- Developed by:

- Funded by [optional]:

- Shared by [optional]:

- Model type:

- Language(s) (NLP):

- License:

- Finetuned from model [optional]:

### Model Sources [optional]

- Repository:

- Paper [optional]:

- Demo [optional]:

## Uses

### Direct Use

### Downstream Use [optional]

### Out-of-Scope Use

## Bias, Risks, and Limitations

### Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

## Training Details

### Training Data

### Training Procedure

#### Preprocessing [optional]

#### Training Hyperparameters

- Training regime:

#### Speeds, Sizes, Times [optional]

## Evaluation

### Testing Data, Factors & Metrics

#### Testing Data

#### Factors

#### Metrics

### Results

#### Summary

## Model Examination [optional]

## Environmental Impact

Carbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).

- Hardware Type:

- Hours used:

- Cloud Provider:

- Compute Region:

- Carbon Emitted:

## Technical Specifications [optional]

### Model Architecture and Objective

### Compute Infrastructure

#### Hardware

#### Software

[optional]

BibTeX:

APA:

## Glossary [optional]

## More Information [optional]

## Model Card Authors [optional]

## Model Card Contact

| [

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\nThis is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact"

] | [

"TAGS\n#transformers #safetensors #mistral #text-generation #conversational #arxiv-1910.09700 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\nThis is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact"

] | [

60,

6,

3,

82,

28,

3,

4,

9,

9,

10,

42,

20,

3,

4,

5,

9,

11,

13,

3,

12,

5,

4,

5,

3,

4,

9,

53,

9,

8,

6,

3,

14,

8,

7,

9,

4

] | [

"passage: TAGS\n#transformers #safetensors #mistral #text-generation #conversational #arxiv-1910.09700 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n# Model Card for Model ID## Model Details### Model Description\n\n\n\nThis is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:## Uses### Direct Use### Downstream Use [optional]### Out-of-Scope Use## Bias, Risks, and Limitations### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.## How to Get Started with the Model\n\nUse the code below to get started with the model.## Training Details### Training Data### Training Procedure#### Preprocessing [optional]#### Training Hyperparameters\n\n- Training regime:#### Speeds, Sizes, Times [optional]## Evaluation### Testing Data, Factors & Metrics#### Testing Data#### Factors#### Metrics### Results#### Summary## Model Examination [optional]## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:## Technical Specifications [optional]### Model Architecture and Objective### Compute Infrastructure#### Hardware#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:## Glossary [optional]## More Information [optional]## Model Card Authors [optional]## Model Card Contact"

] | [

-0.04571164771914482,

0.1637648642063141,

-0.005522117950022221,

0.017756497487425804,

0.09821303188800812,

0.01318030059337616,

0.06541220843791962,

0.1127115860581398,

-0.017605241388082504,

0.1127321794629097,

0.030432263389229774,

0.09820804744958878,

0.1134178638458252,

0.14702944457530975,

-0.003594378475099802,

-0.22472713887691498,

0.052083637565374374,

-0.12124937027692795,

-0.03241228312253952,

0.1181139275431633,

0.14941681921482086,

-0.09871039539575577,

0.07234785705804825,

-0.030714161694049835,

-0.01334790326654911,

-0.03167412802577019,

-0.05947697162628174,

-0.045681875199079514,

0.046136777848005295,

0.0657167062163353,

0.06853367388248444,

0.007354621775448322,

0.08972878009080887,

-0.2669793367385864,

0.019881360232830048,

0.06918594241142273,

-0.0025153355672955513,

0.07059336453676224,

0.06344282627105713,

-0.07033728063106537,

0.10271385312080383,

-0.051166124641895294,

0.1467856466770172,

0.08377711474895477,

-0.09116126596927643,

-0.18892322480678558,

-0.08764564990997314,

0.0990586131811142,

0.17651304602622986,

0.04750865325331688,

-0.024397386237978935,

0.09895956516265869,

-0.0878119245171547,

0.015860557556152344,

0.052259236574172974,

-0.07261253148317337,

-0.05407591536641121,

0.061004482209682465,

0.07816638052463531,

0.06616047024726868,

-0.12551534175872803,

-0.02998468652367592,

0.005221198312938213,

0.011705057695508003,

0.07518111169338226,

0.01836656779050827,

0.15222862362861633,

0.03479425609111786,

-0.12653809785842896,

-0.04834689199924469,

0.0983143299818039,

0.03359128534793854,

-0.043975554406642914,

-0.247073233127594,

-0.031072303652763367,

-0.026882093399763107,

-0.030029185116291046,

-0.038772210478782654,

0.04153512790799141,

-0.006745535880327225,

0.08434242010116577,

-0.0040448750369250774,

-0.07344388216733932,

-0.03874153643846512,

0.06087949126958847,

0.0669754296541214,

0.029331250116229057,

-0.013996441848576069,

0.010876164771616459,

0.11490162461996078,

0.10806918889284134,

-0.12199585139751434,

-0.05589085817337036,

-0.06492951512336731,

-0.08786392956972122,

-0.04284887760877609,

0.033410828560590744,

0.03509693965315819,

0.05435176193714142,

0.2536843419075012,

0.009815474040806293,

0.06126174330711365,

0.03745805472135544,

0.007310505956411362,

0.059651583433151245,

0.10812553018331528,

-0.05987109988927841,

-0.10409316420555115,

-0.02881651371717453,

0.08857584744691849,

0.006609630770981312,

-0.03354408219456673,

-0.05052083358168602,

0.05901389569044113,

0.021856583654880524,

0.11749778687953949,

0.08884359151124954,

0.00984770804643631,

-0.07126569002866745,

-0.06146538630127907,

0.19450126588344574,

-0.16384615004062653,

0.04264351725578308,

0.03702449053525925,

-0.039683789014816284,

-0.0003956064465455711,

0.011445282027125359,

0.01843930408358574,

-0.023893611505627632,

0.09238249063491821,

-0.05498874559998512,

-0.04001082479953766,

-0.1106586754322052,

-0.0339570976793766,

0.034455835819244385,

0.010122774168848991,

-0.03529255837202072,

-0.03252722695469856,

-0.08346389979124069,

-0.07506290078163147,

0.09339368343353271,

-0.07379438728094101,

-0.04854428768157959,

-0.018830472603440285,

-0.0752616599202156,

0.02326788194477558,

0.02032634988427162,

0.07736726850271225,

-0.023358777165412903,

0.04288764297962189,

-0.054010841995477676,

0.05824148654937744,

0.11001134663820267,

0.035365406423807144,

-0.05824809893965721,

0.06025301292538643,

-0.2382364422082901,

0.09637492895126343,

-0.07412451505661011,

0.05830197036266327,

-0.15449334681034088,

-0.02627694234251976,

0.04870045557618141,

0.0076532382518053055,

-0.009597796015441418,

0.13436771929264069,

-0.21578943729400635,

-0.026375943794846535,

0.16865074634552002,

-0.10160042345523834,

-0.06946627050638199,

0.05867103114724159,

-0.049256108701229095,

0.10817171633243561,

0.03891118988394737,

-0.025492025539278984,

0.06244310364127159,

-0.12527504563331604,

0.007147894706577063,

-0.04992884770035744,

-0.016554534435272217,

0.1592475026845932,

0.07294736802577972,

-0.07235062122344971,

0.07110220938920975,

0.025814544409513474,

-0.027441376820206642,

-0.04532165080308914,

-0.016039686277508736,

-0.10585595667362213,

0.014911207370460033,

-0.061168964952230453,

0.01876060478389263,

-0.020111115649342537,

-0.08977947384119034,

-0.028080428019165993,

-0.1748371720314026,

-0.026230180636048317,

0.085477814078331,

-0.007464459165930748,

-0.018854627385735512,

-0.11770102381706238,

0.008567224256694317,

0.044854406267404556,

0.006109896115958691,

-0.13499478995800018,

-0.04764661565423012,

0.027907660230994225,

-0.16220368444919586,

0.033779170364141464,

-0.05184612050652504,

0.05056280270218849,

0.026674345135688782,

-0.029802238568663597,

-0.025906935334205627,

0.022987615317106247,

0.006545235402882099,

-0.011514187790453434,

-0.24465326964855194,

-0.026841215789318085,

-0.026506783440709114,

0.166712686419487,

-0.20777921378612518,

0.03577128052711487,

0.08057375997304916,

0.15318496525287628,

0.011457439512014389,

-0.04087435454130173,

0.005527274217456579,

-0.06868630647659302,

-0.025992877781391144,

-0.05823420733213425,

-0.002480053110048175,

-0.03337050974369049,

-0.04843711107969284,

0.04469521716237068,

-0.1662919819355011,

-0.03491327911615372,

0.09593124687671661,

0.06427760422229767,

-0.13986408710479736,

-0.023568401113152504,

-0.03526119887828827,

-0.049809779971838,

-0.047768235206604004,

-0.06002878025174141,

0.11181395500898361,

0.058611296117305756,

0.04419868439435959,

-0.059296321123838425,

-0.07637067884206772,

-0.0028071242850273848,

-0.014342374168336391,

-0.01986078731715679,

0.097631074488163,

0.06816094368696213,

-0.1381729394197464,

0.09227006882429123,

0.09810956567525864,

0.07738673686981201,

0.09273158758878708,

-0.02444581687450409,

-0.08119411021471024,

-0.0471174530684948,

0.03257923200726509,

0.018235107883810997,

0.1276484578847885,

-0.027872784063220024,

0.04268912971019745,

0.0421174094080925,

-0.018595336005091667,

0.013991083949804306,

-0.08597505837678909,

0.033884208649396896,

0.02703946642577648,

-0.0159194003790617,

0.04745442420244217,

-0.037611253559589386,

0.024539871141314507,

0.08754327148199081,

0.04615016281604767,

0.033831849694252014,

0.015717241913080215,

-0.05243339762091637,

-0.10873834043741226,

0.1642032116651535,

-0.12759798765182495,

-0.22238075733184814,

-0.13922695815563202,

0.003997850697487593,

0.036267586052417755,

-0.01646288111805916,

0.002834152430295944,

-0.060960907489061356,

-0.12132686376571655,

-0.08726011961698532,

0.015815909951925278,

0.050406474620103836,

-0.0912260189652443,

-0.060087788850069046,

0.056193675845861435,

0.037736181169748306,

-0.14546552300453186,

0.01776101253926754,

0.04850281774997711,

-0.09700650721788406,

-0.004754792433232069,

0.07885372638702393,

0.06784981489181519,

0.17673011124134064,

0.018112216144800186,

-0.021776698529720306,

0.031116241589188576,

0.20988549292087555,

-0.13491620123386383,

0.11005933582782745,

0.13349974155426025,

-0.09236859530210495,

0.08153878152370453,

0.20252206921577454,

0.04006611555814743,

-0.09986240416765213,

0.032548144459724426,

0.02142537757754326,

-0.027797512710094452,

-0.2441972941160202,

-0.07161470502614975,

-0.004515932407230139,

-0.06051458790898323,

0.07499068230390549,

0.09190185368061066,

0.08272628486156464,

0.011750337667763233,

-0.09449771046638489,

-0.08492138236761093,

0.06362129002809525,

0.10420511662960052,

0.02181125245988369,

-0.009744768962264061,

0.09036174416542053,

-0.03286943957209587,

0.01948373205959797,

0.08554471284151077,

0.0038120283279567957,

0.18320275843143463,

0.051725953817367554,

0.19073979556560516,

0.07944851368665695,

0.06951095163822174,

0.012023290619254112,

0.011227634735405445,

0.018135491758584976,

0.03228217363357544,

-0.003646562807261944,

-0.08350840210914612,

-0.02080707624554634,

0.1153142973780632,

0.0672341138124466,

0.012952476739883423,

0.01729460060596466,

-0.04021955281496048,

0.08128432929515839,

0.18377035856246948,

-0.0093126455321908,

-0.177269846200943,

-0.06024068966507912,

0.07718996703624725,

-0.09723462164402008,

-0.09738315641880035,

-0.01454379502683878,

0.030975129455327988,

-0.1702532023191452,

0.025819219648838043,

-0.023134231567382812,

0.11114585399627686,

-0.13745717704296112,

-0.020040949806571007,

0.07143081724643707,

0.07336213439702988,

0.004178736824542284,

0.055973317474126816,

-0.16574905812740326,

0.1074945405125618,

0.007851972244679928,

0.06788748502731323,

-0.0949488952755928,

0.10003086179494858,

-0.002759356750175357,

-0.016956903040409088,

0.13766175508499146,

0.003847390878945589,

-0.0742180123925209,

-0.07706846296787262,

-0.08544620126485825,

-0.010016623884439468,

0.12665624916553497,

-0.13990990817546844,

0.08602021634578705,

-0.03789570555090904,

-0.04160536453127861,

-0.0009961887262761593,

-0.09994571655988693,

-0.11771732568740845,

-0.18694964051246643,

0.060274846851825714,

-0.13818500936031342,

0.030693015083670616,

-0.1080726683139801,

-0.033236145973205566,

-0.03044886700809002,

0.18898600339889526,

-0.23496590554714203,

-0.07289838045835495,

-0.14654842019081116,

-0.10314314812421799,

0.14515270292758942,

-0.05135014280676842,

0.0824703797698021,

-0.007518251892179251,

0.16955603659152985,

0.01909777894616127,

-0.024870775640010834,

0.09702518582344055,

-0.09090493619441986,

-0.19369281828403473,

-0.07736486196517944,

0.1553725302219391,

0.13563397526741028,

0.03274888917803764,

-0.0031351360958069563,

0.03731042891740799,

-0.016484085470438004,

-0.119691863656044,

0.016338739544153214,

0.17828133702278137,

0.06005066633224487,

0.02449444867670536,

-0.025351086631417274,

-0.12034450471401215,

-0.07065033912658691,

-0.028268499299883842,

0.030481377616524696,

0.1794593334197998,

-0.06955225765705109,

0.18364831805229187,

0.147920161485672,

-0.05845186114311218,

-0.20284810662269592,

0.01105605997145176,

0.03317207098007202,

-0.00011460785754024982,

0.025185899809002876,

-0.19945523142814636,

0.08448769152164459,

0.004838644526898861,

-0.0498092919588089,

0.1281348466873169,

-0.17351724207401276,

-0.14425379037857056,

0.07726620137691498,

0.03829115256667137,

-0.1926836371421814,

-0.12892304360866547,

-0.09138946235179901,

-0.04540696740150452,

-0.18867050111293793,

0.09461917728185654,

0.031194355338811874,

0.009373899549245834,

0.030387504026293755,

0.030604345723986626,

0.01938873715698719,

-0.04181704297661781,

0.1860174536705017,

-0.023930367082357407,

0.028327496722340584,

-0.08596936613321304,

-0.07190530747175217,

0.0391114242374897,

-0.05227291211485863,

0.07252339273691177,

-0.023452037945389748,

0.00719826715067029,

-0.09769386798143387,

-0.04156304895877838,

-0.03843177855014801,

0.01581472158432007,

-0.09648153930902481,

-0.08523351699113846,

-0.04445706307888031,

0.09780744463205338,

0.09553340077400208,

-0.03473082184791565,

-0.024805041030049324,

-0.07508285343647003,

0.04805302992463112,

0.19605006277561188,

0.17889533936977386,

0.03904116898775101,

-0.07846304774284363,

-0.0033101453445851803,

-0.010484009049832821,

0.04490501061081886,

-0.20383046567440033,

0.06269704550504684,

0.05393069609999657,

0.019165942445397377,

0.11697915196418762,

-0.01937638409435749,

-0.15321338176727295,

-0.07137971371412277,

0.062210626900196075,

-0.05747547000646591,

-0.19925202429294586,

0.008424095809459686,

0.062047190964221954,

-0.16446428000926971,

-0.045800499618053436,

0.046785544604063034,

-0.004990153945982456,

-0.03839265555143356,

0.022938871756196022,

0.09231305122375488,

0.0029900665394961834,

0.07426668703556061,

0.052022483199834824,

0.0835016593337059,

-0.1060708537697792,

0.07922257483005524,

0.08730976283550262,

-0.08381073921918869,

0.022620677947998047,

0.10530175268650055,

-0.061487648636102676,

-0.03560204058885574,

0.017662353813648224,

0.08361397683620453,

0.018624287098646164,

-0.03893670439720154,

0.014383325353264809,

-0.1065717563033104,

0.059272702783346176,

0.08645539730787277,

0.03302672877907753,

0.01618802361190319,

0.034192394465208054,

0.04655340686440468,

-0.06840039044618607,

0.122025266289711,

0.032824426889419556,

0.017204686999320984,

-0.035474274307489395,

-0.04102595895528793,

0.01851540431380272,

-0.03368416428565979,

-0.005532157141715288,

-0.03097093477845192,

-0.07835554331541061,

-0.015077406540513039,

-0.16520504653453827,

-0.009829589165747166,

-0.05936548113822937,

0.012285472825169563,

0.031714752316474915,

-0.034721489995718,

0.008415459655225277,

0.009580436162650585,

-0.07713334262371063,

-0.06541574746370316,

-0.01965213567018509,

0.0961783304810524,

-0.1606777459383011,

0.022340767085552216,

0.08350874483585358,

-0.12098895758390427,

0.09293801337480545,

0.01664864458143711,

-0.00869405921548605,

0.02654755860567093,

-0.1516905426979065,

0.03389517217874527,

-0.03324367105960846,

0.009356614202260971,

0.04251125827431679,

-0.2180858999490738,

-0.0012979574967175722,

-0.034122150391340256,

-0.06511902064085007,

-0.008563618175685406,

-0.035606082528829575,

-0.1133907288312912,

0.10431582480669022,

0.007158213295042515,

-0.08918852359056473,

-0.031932637095451355,

0.02896781638264656,

0.08660420775413513,

-0.02103978954255581,

0.1533614844083786,

-0.008595003746449947,

0.07452014833688736,

-0.16158120334148407,

-0.019116591662168503,

-0.0044966633431613445,

0.021838920190930367,

-0.020337330177426338,

-0.011089952662587166,

0.043057333678007126,

-0.02310733124613762,

0.1769370436668396,

-0.034001484513282776,

0.02080564945936203,

0.06879838556051254,

0.02382824197411537,

-0.03270673379302025,

0.10420172661542892,

0.04176081717014313,

0.020029285922646523,

0.016749408096075058,

0.0014026050921529531,

-0.04661702737212181,

-0.03435906395316124,

-0.1965997964143753,

0.07266207784414291,

0.15759599208831787,

0.09697116911411285,

-0.019108884036540985,

0.07821404188871384,

-0.0993313267827034,

-0.10917975008487701,

0.12915705144405365,

-0.04755320027470589,

-0.004375945311039686,

-0.07154709100723267,

0.13273866474628448,

0.14712604880332947,

-0.18722544610500336,

0.07334931939840317,

-0.07133730500936508,

-0.04749078303575516,

-0.10922681540250778,

-0.194550022482872,

-0.05630992352962494,

-0.049111537635326385,

-0.015855323523283005,

-0.04727233946323395,

0.07431400567293167,

0.05443255603313446,

0.007043207995593548,

-0.0018872307846322656,

0.06250270456075668,

-0.02979675866663456,

-0.004455813206732273,

0.033084239810705185,

0.06524696946144104,

0.012280851602554321,

-0.028982065618038177,

0.017169395461678505,

-0.009704679250717163,

0.04565926641225815,

0.06593092530965805,

0.0490880124270916,

-0.02946917712688446,

0.01301988959312439,

-0.040264759212732315,

-0.10370729863643646,

0.044506072998046875,

-0.02268853597342968,

-0.081757090985775,

0.15341326594352722,

0.023376943543553352,

0.008703592233359814,

-0.018961627036333084,

0.23797030746936798,

-0.07337556779384613,

-0.09915944188833237,

-0.14910556375980377,

0.10603363811969757,

-0.037726908922195435,

0.05897798761725426,

0.04798928648233414,

-0.10144850611686707,

0.018896711990237236,

0.1251462697982788,

0.16306589543819427,

-0.03724272549152374,

0.020064668729901314,

0.030806828290224075,

0.005520908627659082,

-0.035788439214229584,

0.04845234379172325,

0.06755134463310242,

0.16263099014759064,

-0.046816933900117874,

0.09447267651557922,

0.0011601726291701198,

-0.09597980976104736,

-0.03777771443128586,

0.10832508653402328,

-0.014584118500351906,

0.018404638394713402,

-0.059979453682899475,

0.11911186575889587,

-0.06456011533737183,

-0.2371375411748886,

0.062140509486198425,

-0.06866546720266342,

-0.13664314150810242,

-0.023452885448932648,

0.08483598381280899,

-0.011404541321098804,

0.028394777327775955,

0.07356005162000656,

-0.07185159623622894,

0.20126941800117493,

0.03666449710726738,

-0.05399559810757637,

-0.054549336433410645,

0.0827551931142807,

-0.09896446764469147,

0.27000707387924194,

0.015913790091872215,

0.048061735928058624,

0.1041264757514,

-0.008932216092944145,

-0.13759581744670868,

0.019727399572730064,

0.0954047441482544,

-0.10358903557062149,

0.041838936507701874,

0.19829733669757843,

-0.0014832824235782027,

0.1230277270078659,

0.07854447513818741,

-0.07668869197368622,

0.0473078191280365,

-0.08185897022485733,

-0.06852826476097107,

-0.0918748751282692,

0.10061057657003403,

-0.07712632417678833,

0.14169210195541382,

0.13906599581241608,

-0.05018797889351845,

0.011615060269832611,

-0.031394075602293015,

0.04402702674269676,

0.0006254917825572193,

0.10420145094394684,

0.002576707163825631,

-0.18477243185043335,

0.02472778968513012,

0.006634650751948357,

0.10846512019634247,

-0.15925930440425873,

-0.09642539173364639,

0.03936212509870529,

0.004935122560709715,

-0.06595125794410706,

0.1294470727443695,

0.055943287909030914,

0.043614063411951065,

-0.039108045399188995,

-0.036952149122953415,

-0.006302761845290661,

0.13504701852798462,

-0.1053730770945549,

0.002390247769653797

] |

null | null | spacy | | Feature | Description |

| --- | --- |

| **Name** | `en_pipeline_ner_model_4` |

| **Version** | `0.0.0` |

| **spaCy** | `>=3.7.2,<3.8.0` |

| **Default Pipeline** | `transformer`, `ner` |

| **Components** | `transformer`, `ner` |

| **Vectors** | 0 keys, 0 unique vectors (0 dimensions) |

| **Sources** | n/a |

| **License** | n/a |

| **Author** | [n/a]() |

### Label Scheme

<details>

<summary>View label scheme (4 labels for 1 components)</summary>

| Component | Labels |

| --- | --- |

| **`ner`** | `allergy_name`, `cancer`, `chronic_disease`, `treatment` |

</details>

### Accuracy

| Type | Score |

| --- | --- |

| `ENTS_F` | 76.70 |

| `ENTS_P` | 76.74 |

| `ENTS_R` | 76.67 |

| `TRANSFORMER_LOSS` | 655099.91 |

| `NER_LOSS` | 820705.40 | | {"language": ["en"], "tags": ["spacy", "token-classification"]} | token-classification | rame/en_pipeline_ner_model_4 | [

"spacy",

"token-classification",

"en",

"model-index",

"region:us"

] | 2024-02-07T17:53:07+00:00 | [] | [

"en"

] | TAGS

#spacy #token-classification #en #model-index #region-us

|

### Label Scheme

View label scheme (4 labels for 1 components)

### Accuracy

| [

"### Label Scheme\n\n\n\nView label scheme (4 labels for 1 components)",

"### Accuracy"

] | [

"TAGS\n#spacy #token-classification #en #model-index #region-us \n",

"### Label Scheme\n\n\n\nView label scheme (4 labels for 1 components)",

"### Accuracy"

] | [

21,

16,

5

] | [

"passage: TAGS\n#spacy #token-classification #en #model-index #region-us \n### Label Scheme\n\n\n\nView label scheme (4 labels for 1 components)### Accuracy"

] | [

-0.0629965215921402,

0.1127549558877945,

-0.0035688134375959635,

0.020475171506404877,

0.10941386967897415,

0.06543945521116257,

0.2395509034395218,

0.05881859362125397,

0.23315228521823883,

0.050546180456876755,

0.035238079726696014,

0.07008600234985352,

0.045243993401527405,

0.263205349445343,

-0.09855789691209793,

-0.25912654399871826,

0.08800046890974045,

-0.011709385551512241,

0.061339739710092545,

0.13040849566459656,

0.04597558081150055,

-0.15077102184295654,

0.06081318482756615,

-0.06474439054727554,

-0.23377834260463715,

0.019462455064058304,

0.012478584423661232,

-0.09941231459379196,

0.07105932384729385,

-0.055700480937957764,

0.2166791707277298,

0.03704650700092316,

0.0488293282687664,

-0.21853342652320862,

0.006961038336157799,

-0.02179640904068947,

-0.043409865349531174,

0.07565315812826157,

0.05987602844834328,

-0.02151237241923809,

-0.08126477897167206,

-0.06972235441207886,

0.06890376657247543,

0.047078780829906464,

-0.14771313965320587,

-0.12832365930080414,

-0.021124741062521935,

0.1172269806265831,

0.10345267504453659,

-0.07128811627626419,

-0.020044108852744102,

0.054196108132600784,

-0.09787078201770782,

0.06574584543704987,

0.1409544199705124,

-0.31780141592025757,

-0.00248851184733212,

0.2135266810655594,

-0.05709154158830643,

0.11749033629894257,

-0.042847875505685806,

0.13884171843528748,

0.11255859583616257,

-0.01418056059628725,

-0.021425170823931694,

-0.016048626974225044,

0.08750426769256592,

0.0225397739559412,

-0.12652936577796936,

-0.0755700096487999,

0.5208337306976318,

0.08621324598789215,

-0.030275540426373482,

-0.11447595059871674,

-0.07186107337474823,

-0.150767520070076,

-0.09402980655431747,

-0.04420548304915428,

0.05545538291335106,

0.005052806809544563,

0.12306582182645798,

0.1029614582657814,

-0.09189879149198532,

-0.04171714186668396,

-0.14007526636123657,

0.26023033261299133,

0.002765404526144266,

0.09592457115650177,

-0.18221217393875122,

0.011721187271177769,

-0.10990888625383377,

-0.0701146051287651,

0.03921227902173996,

-0.09617437422275543,

-0.10228899121284485,

-0.046767037361860275,

0.03423115983605385,

0.11132217943668365,

0.07235271483659744,

0.07743702828884125,

-0.02171197533607483,

0.05824723839759827,

-0.03004535101354122,

0.05682354420423508,

0.15125319361686707,

0.1797948181629181,

-0.031689099967479706,

-0.028811266645789146,

-0.05536315590143204,

-0.06843414157629013,

0.03400057554244995,

-0.042799871414899826,

-0.13218502700328827,

0.026495318859815598,

0.09848130494356155,

0.1085473820567131,

-0.08328228443861008,

-0.03431198000907898,

-0.1288781762123108,

-0.06635984778404236,

0.07488603889942169,

-0.12038165330886841,

0.019561003893613815,

-0.0023647071793675423,

-0.005154100712388754,

0.11204953491687775,

-0.14458084106445312,

-0.02124967612326145,

0.04426572471857071,

0.0018498738063499331,

-0.11425764113664627,

0.008885269984602928,

-0.014460807666182518,

-0.10038325190544128,

0.0019156528869643807,

-0.11302948743104935,

0.032213177531957626,

-0.03517122194170952,

-0.1180223673582077,

-0.00859137438237667,

-0.028855212032794952,

-0.07637713104486465,

0.017950614914298058,

-0.02928520366549492,

-0.0582575798034668,

-0.01633014716207981,

0.02470465749502182,

-0.054275911301374435,

-0.09066230058670044,

-0.013594737276434898,

-0.011551475152373314,

0.10902853310108185,

-0.05366065353155136,

0.035621024668216705,

-0.05428226292133331,

0.08306460082530975,

-0.20197319984436035,

0.04508884251117706,

-0.0691397562623024,

0.0849027931690216,

-0.06024731695652008,

-0.07593771815299988,

0.0217578262090683,

0.0010386473732069135,

-0.12731923162937164,

0.16644708812236786,

-0.20622028410434723,

-0.07564926892518997,

0.20652420818805695,

-0.18348082900047302,

-0.10889304429292679,

0.038103748112916946,

-0.004521177150309086,

0.06402774155139923,

0.08456556499004364,

0.1693429946899414,

-0.0014997667167335749,

-0.10260968655347824,

-0.013415055349469185,

0.09594006091356277,

-0.05491052567958832,

-0.02500947378575802,

0.09379595518112183,

0.0247876588255167,

-0.0071521359495818615,

0.04088488593697548,

0.06621330231428146,

-0.12745298445224762,

-0.07154490053653717,

-0.059119563549757004,

-0.016269836574792862,

0.021569810807704926,

0.07567098736763,

0.028460077941417694,

0.033695731312036514,

-0.05473762005567551,

0.019963817670941353,

0.01055237464606762,

0.053166650235652924,

0.026366740465164185,

-0.06747597455978394,

-0.046096526086330414,

0.1322956085205078,

-0.14790485799312592,

-0.06882842630147934,

-0.17548711597919464,

-0.18087124824523926,

0.05610192194581032,

0.05858113616704941,

0.013782133348286152,

0.168969064950943,

0.010925764217972755,

0.004736734554171562,

0.00934684183448553,

-0.02314884401857853,

0.0050194780342280865,

0.07848679274320602,

-0.056586142629384995,

-0.16833335161209106,

-0.05202233046293259,

-0.10370951890945435,

0.06763279438018799,

-0.03746706619858742,

0.013026319444179535,

0.18849658966064453,

0.06991005688905716,

0.053974367678165436,

0.047983989119529724,

0.05191269516944885,

0.02876236103475094,

-0.025574609637260437,

-0.044866837561130524,

0.0832766517996788,

-0.10133487731218338,

-0.052205801010131836,

-0.07887792587280273,

-0.1253003478050232,

0.10041849315166473,

0.13725978136062622,

-0.13619215786457062,

-0.04700793698430061,

-0.07362968474626541,

-0.011995785869657993,

0.005399442743510008,

-0.10918592661619186,

0.003282226389274001,

-0.07030165195465088,

-0.027009248733520508,

0.0362655334174633,

-0.08074366301298141,

-0.024509768933057785,

0.034440118819475174,

-0.027143774554133415,

-0.16165883839130402,

0.10482843965291977,

-0.026797421276569366,

-0.20369654893875122,

0.1561061441898346,

0.25011971592903137,

0.19331912696361542,

0.0965486541390419,

-0.038412172347307205,

-0.036731258034706116,

-0.03808433935046196,

-0.012585306540131569,

-0.09400312602519989,

0.1799236238002777,

-0.1847221851348877,

-0.04176001995801926,

0.06102627143263817,

0.048408545553684235,

0.014471020549535751,

-0.1940488964319229,

0.0032003303058445454,

-0.02705218456685543,

-0.042314913123846054,

-0.08343712240457535,

-0.040769439190626144,

0.022866275161504745,

0.13133691251277924,

0.05275994911789894,

-0.18165427446365356,

0.06942607462406158,

-0.05328456684947014,

-0.058905716985464096,

0.15342184901237488,

-0.08790675550699234,

-0.2607112228870392,

-0.1321117877960205,

-0.09695693105459213,

-0.08170688897371292,

0.053103383630514145,

-0.026049582287669182,

-0.1221856102347374,

-0.04161803424358368,

0.03181920200586319,

-0.03143416717648506,

-0.15791521966457367,

-0.030441422015428543,

-0.02375221997499466,

0.08804883807897568,

-0.1463676244020462,

-0.03281635791063309,

-0.10361362993717194,

-0.08881634473800659,

0.08846709877252579,

0.109955795109272,

-0.17878031730651855,

0.0656815767288208,

0.30231374502182007,

-0.01682211272418499,

0.09849884361028671,

-0.007908549159765244,

0.1263122707605362,

-0.08478435128927231,

0.03434423357248306,

0.102773517370224,

0.043746642768383026,

0.03921223804354668,

0.2606252133846283,

0.07646768540143967,

-0.15783065557479858,

-0.04413260892033577,

-0.050116196274757385,

-0.11250732094049454,

-0.1371503621339798,

-0.11949079483747482,

-0.047457531094551086,

0.0018151853000745177,

0.04173680767416954,

0.021884718909859657,

0.027724772691726685,

0.05979352071881294,

0.032658208161592484,

-0.010304611176252365,

0.03817787021398544,

0.047241613268852234,

0.08965195715427399,

-0.0770988017320633,

0.09295348823070526,

-0.04419315978884697,

-0.07711686939001083,

0.07554645836353302,

0.11415284126996994,

0.1947050243616104,

0.19520893692970276,

0.05366821214556694,

0.08315850794315338,

-0.008946546353399754,

0.1494431346654892,

0.08043286204338074,

0.1458728164434433,

-0.024984760209918022,

-0.026519525796175003,

-0.0607585683465004,

0.008731319569051266,

0.056777339428663254,

-0.007761273067444563,

-0.07124421000480652,

-0.08041974902153015,

-0.07592456787824631,

0.08818446099758148,

-0.00402224063873291,

0.2683714032173157,

-0.22317084670066833,

0.014325995929539204,

0.1264825314283371,

0.08657713234424591,

-0.07659213989973068,

0.08995836973190308,

0.035323332995176315,

-0.10990273207426071,

0.0604853518307209,

-0.015346257016062737,

0.11201559752225876,

-0.1258959174156189,

0.009451201185584068,

-0.06256631016731262,

-0.046079572290182114,

-0.02384765073657036,

0.08621480315923691,

-0.057491641491651535,

0.32044050097465515,

0.04048808664083481,

-0.05771759897470474,

-0.0750327780842781,

-0.005863197147846222,

0.008971141651272774,

0.25033485889434814,

0.21518391370773315,

0.042560648173093796,

-0.22389960289001465,

-0.22780710458755493,

-0.024198587983846664,

-0.022732695564627647,

0.17322656512260437,

-0.05266254395246506,

0.04015713185071945,

0.018581973388791084,

0.0026184506714344025,

-0.03398444503545761,

0.02748211845755577,

-0.031158771365880966,

-0.024752674624323845,

0.03245184198021889,

0.08539164066314697,

-0.09668367356061935,

-0.007438742555677891,

-0.0648895800113678,

-0.15202893316745758,

0.13488507270812988,

0.000039352311432594433,

-0.11223579943180084,

-0.09655392915010452,

-0.0024250110145658255,

0.09870228171348572,

-0.019588764756917953,

-0.025776077061891556,

-0.058460723608732224,

0.15039412677288055,

0.013788600452244282,

-0.0933091938495636,

0.11571164429187775,

-0.035254642367362976,

-0.031234735623002052,

-0.06324572116136551,

0.16843536496162415,

-0.04176986590027809,

-0.01776798628270626,

0.06187696382403374,

0.07659410685300827,

-0.01941763050854206,

-0.11150353401899338,

0.11919628828763962,

-0.008797447197139263,

0.03596549853682518,

0.3197479546070099,

-0.10335519909858704,

-0.13108688592910767,

-0.045769188553094864,

0.08548711985349655,

0.11065994203090668,

0.24135228991508484,

-0.08199042826890945,

0.07414133846759796,

0.0649099051952362,

-0.03023097850382328,

-0.17827677726745605,

-0.024937152862548828,

-0.15955850481987,

0.01741856336593628,

-0.017146704718470573,

-0.07503925263881683,

0.1552862823009491,

0.024803558364510536,

-0.051573943346738815,

0.09173392504453659,

-0.2225973904132843,

-0.05328788608312607,

0.19856737554073334,

0.0514913946390152,

0.20914073288440704,

-0.06807520240545273,

-0.11668818444013596,

-0.06808353960514069,

-0.15781031548976898,

0.1404138207435608,

-0.0052726310677826405,

0.10044469684362411,

-0.046283286064863205,

0.011080998927354813,

0.03344593569636345,

-0.009972160682082176,

0.22414728999137878,

0.148310124874115,

0.09784389287233353,

0.03122054785490036,

-0.2104639858007431,

0.19675104320049286,

-0.0058021266013383865,

-0.00042371219024062157,

0.20054872334003448,

0.010687658563256264,