sha (string, length 40) | text (string, length 1 to 13.4M) | id (string, length 2 to 117) | tags (sequence, length 1 to 7.91k) | created_at (string, length 25) | metadata (string, length 2 to 875k) | last_modified (string, length 25) | arxiv (sequence, length 0 to 25) | languages (sequence, length 0 to 7.91k) | tags_str (string, length 17 to 159k) | text_str (string, length 1 to 447k) | text_lists (sequence, length 0 to 352) | processed_texts (sequence, length 1 to 353) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

d20b701b465db13f05b495c191abf0e4a0e02065 |

This is a dataset created using [vector-io](https://github.com/ai-northstar-tech/vector-io)

| aintech/vdf_PC_ANN_Fashion-MNIST_d784_euclidean | [

"vdf",

"vector-io",

"vector-dataset",

"vector-embeddings",

"region:us"

] | 2024-01-09T18:05:42+00:00 | {"tags": ["vdf", "vector-io", "vector-dataset", "vector-embeddings"], "dataset_info": {"features": [{"name": "id", "dtype": "string"}, {"name": "vector", "sequence": "float64"}], "splits": [{"name": "train", "num_bytes": 62838890, "num_examples": 10000}], "download_size": 5101858, "dataset_size": 62838890}, "configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}]} | 2024-01-10T12:12:56+00:00 | [] | [] | TAGS

#vdf #vector-io #vector-dataset #vector-embeddings #region-us

|

This is a dataset created using vector-io

| [] | [

"TAGS\n#vdf #vector-io #vector-dataset #vector-embeddings #region-us \n"

] |

4ef97d413d69dea3949358ce9a899162f3891b72 | # Dataset Card for "ultrafeedback_quality_binarized"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | jan-hq/ultrafeedback_quality_binarized | [

"region:us"

] | 2024-01-09T18:29:00+00:00 | {"configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}, {"split": "test", "path": "data/test-*"}]}], "dataset_info": {"features": [{"name": "source", "dtype": "string"}, {"name": "prompt", "dtype": "string"}, {"name": "chosen", "list": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "chosen-rating", "dtype": "float64"}, {"name": "chosen-model", "dtype": "string"}, {"name": "rejected", "list": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "rejected-rating", "dtype": "float64"}, {"name": "rejected-model", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 654240429.3032981, "num_examples": 139196}, {"name": "test", "num_bytes": 72697036.69670185, "num_examples": 15467}], "download_size": 396128426, "dataset_size": 726937466.0}} | 2024-01-09T18:29:37+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "ultrafeedback_quality_binarized"

More Information needed | [

"# Dataset Card for \"ultrafeedback_quality_binarized\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"ultrafeedback_quality_binarized\"\n\nMore Information needed"

] |

f96e32e2a1a64ab3b9aab8d67b9259a87e5203b9 | # Dataset Card for "parallel-pt-nl-pl"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | gowitheflowlab/parallel-pt-nl-pl | [

"region:us"

] | 2024-01-09T18:46:06+00:00 | {"configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}], "dataset_info": {"features": [{"name": "sentence1", "dtype": "string"}, {"name": "sentence2", "dtype": "string"}, {"name": "label", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 210221145.70946357, "num_examples": 1201407}], "download_size": 140654042, "dataset_size": 210221145.70946357}} | 2024-01-09T18:46:42+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "parallel-pt-nl-pl"

More Information needed | [

"# Dataset Card for \"parallel-pt-nl-pl\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"parallel-pt-nl-pl\"\n\nMore Information needed"

] |

e71a5b30eb0db9954addf9836cd99966afdb3a7b | # LegalPT

LegalPT aggregates the maximum amount of publicly available legal data in Portuguese, drawing from varied sources including legislation, jurisprudence, legal articles, and government documents.

## Dataset Details

The dataset is composed of six corpora:

[Ulysses-Tesemõ](https://github.com/ulysses-camara/ulysses-tesemo), [MultiLegalPile (PT)](https://arxiv.org/abs/2306.02069v2), [ParlamentoPT](http://arxiv.org/abs/2305.06721),

[Iudicium Textum](https://www.inf.ufpr.br/didonet/articles/2019_dsw_Iudicium_Textum_Dataset.pdf), [Acordãos TCU](https://link.springer.com/chapter/10.1007/978-3-030-61377-8_46), and

[DataSTF](https://legalhackersnatal.wordpress.com/2019/05/09/mais-dados-juridicos/).

- **MultiLegalPile**: a multilingual corpus of legal texts comprising 689 GiB of data, covering 24 languages in 17 jurisdictions. The corpus is separated by language, and the Portuguese subset contains 92 GiB of data (13.76 billion words). This subset includes the jurisprudence of the Court of Justice of São Paulo (CJPG), appeals from the [5th Regional Federal Court (BRCAD-5)](https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0272287), the Portuguese subset of legal documents from the European Union, known as [EUR-Lex](https://eur-lex.europa.eu/homepage.html), and a filtered subset of legal documents from [MC4](http://arxiv.org/abs/2010.11934).

- **Ulysses-Tesemõ**: a legal corpus in Brazilian Portuguese, composed of 2.2 million documents, totaling about 26 GiB of text obtained from 96 different data sources. These sources encompass legal and legislative documents, academic papers, news, and related comments. The data was collected through web scraping of government websites.

- **ParlamentoPT**: a corpus for training language models in European Portuguese. The data was collected from the Portuguese government portal and consists of 2.6 million documents of transcriptions of debates in the Portuguese Parliament.

- **Iudicium Textum**: consists of rulings, votes, and reports from the Supreme Federal Court (STF) of Brazil, published between 2010 and 2018. The dataset contains 1GiB of data extracted from PDFs.

- **Acordãos TCU**: an open dataset from the Tribunal de Contas da União (Brazilian Federal Court of Accounts), containing 600,000 documents obtained by web scraping government websites. The documents span from 1992 to 2019.

- **DataSTF**: a dataset of monocratic decisions from the Superior Court of Justice (STJ) in Brazil, containing 700,000 documents (5GiB of data).

### Dataset Description

- **Curated by:** [More Information Needed]

- **Funded by:** [More Information Needed]

- **Language(s) (NLP):** Brazilian Portuguese (pt-BR)

- **License:** [Creative Commons Attribution 4.0 International Public License](https://creativecommons.org/licenses/by/4.0/deed.en)

### Dataset Sources

- **Repository:** https://github.com/eduagarcia/roberta-legal-portuguese

- **Paper:** [More Information Needed]

## Dataset Structure

<!-- This section provides a description of the dataset fields, and additional information about the dataset structure such as criteria used to create the splits, relationships between data points, etc. -->

[More Information Needed]
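As a rough orientation only: the repository metadata lists, for every configuration, a `text` field plus a nested `meta.dedup` struct with `exact_norm` and `minhash` entries, each carrying an `is_duplicate` flag. The sketch below assumes records shaped like that metadata and shows one way to keep only non-duplicate documents. The helper name `keep_unique` and the sample records are hypothetical, and the commented `load_dataset` call is an untested assumption about how the `all` configuration might be streamed.

```python
# Hypothetical helper: filter records using the dedup flags listed in the
# repository metadata (meta -> dedup -> {exact_norm, minhash} -> is_duplicate).

def keep_unique(records, method="minhash"):
    """Yield records whose meta.dedup.<method>.is_duplicate flag is False."""
    for record in records:
        if not record["meta"]["dedup"][method]["is_duplicate"]:
            yield record


# Made-up records that mimic the field layout; real rows hold more fields.
sample = [
    {"text": "doc A", "meta": {"dedup": {
        "exact_norm": {"is_duplicate": False},
        "minhash": {"is_duplicate": False}}}},
    {"text": "doc A (near copy)", "meta": {"dedup": {
        "exact_norm": {"is_duplicate": False},
        "minhash": {"is_duplicate": True}}}},
]

unique_docs = list(keep_unique(sample))

# Assumption, not verified here: with the Hugging Face `datasets` library
# the same filter could be applied to a streamed split, for example
#   from datasets import load_dataset
#   ds = load_dataset("eduagarcia/LegalPT", "all", split="train", streaming=True)
#   unique_stream = keep_unique(ds)
```

Passing `method="exact_norm"` would filter on the exact-match flag instead of the MinHash one.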

## Data Collection and Processing

LegalPT is deduplicated using [MinHash algorithm](https://dl.acm.org/doi/abs/10.5555/647819.736184) and [Locality Sensitive Hashing](https://dspace.mit.edu/bitstream/handle/1721.1/134231/v008a014.pdf?sequence=2&isAllowed=y), following the approach of [Lee et al. (2022)](http://arxiv.org/abs/2107.06499).

We used 5-grams and a signature of size 256, treating two documents as duplicates if their Jaccard similarity exceeded 0.7.
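The parameters above can be illustrated with a minimal pure-Python MinHash sketch. This is not the authors' pipeline: it assumes word-level 5-grams, uses seeded BLAKE2b hashes in place of random permutations, and compares signatures pairwise instead of bucketing them with LSH. All function names are hypothetical.

```python
import hashlib
import re

NGRAM = 5          # n-gram size stated in the card (word-level is an assumption)
NUM_PERM = 256     # signature size stated in the card
THRESHOLD = 0.7    # Jaccard similarity threshold stated in the card


def shingles(text, n=NGRAM):
    """Return the set of word n-grams of a document."""
    tokens = re.findall(r"\w+", text.lower())
    if len(tokens) < n:
        return {" ".join(tokens)} if tokens else set()
    return {" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def _seeded_hash(shingle, seed):
    """64-bit hash of a shingle; each seed acts as one 'permutation'."""
    data = f"{seed}:{shingle}".encode("utf-8")
    return int.from_bytes(hashlib.blake2b(data, digest_size=8).digest(), "big")


def minhash_signature(text, num_perm=NUM_PERM):
    """Signature = minimum hash value over all shingles, once per seed."""
    items = shingles(text)
    if not items:
        return [0] * num_perm
    return [min(_seeded_hash(s, seed) for s in items) for seed in range(num_perm)]


def estimated_jaccard(sig_a, sig_b):
    """Fraction of matching signature positions estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def is_duplicate(text_a, text_b, threshold=THRESHOLD):
    """Flag a pair whose estimated similarity exceeds the threshold."""
    sig_a, sig_b = minhash_signature(text_a), minhash_signature(text_b)
    return estimated_jaccard(sig_a, sig_b) > threshold


if __name__ == "__main__":
    doc_a = "o tribunal decidiu por unanimidade negar provimento ao recurso da parte autora"
    doc_b = "a comissão aprovou o parecer sobre o projeto de lei apresentado pelo governo federal"
    print(is_duplicate(doc_a, doc_a), is_duplicate(doc_a, doc_b))
```

At corpus scale, comparing every pair is infeasible, which is why the signatures are normally split into bands and hashed into buckets (the LSH step) so that only documents sharing a bucket are compared.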

Duplicate rates found by the MinHash-LSH algorithm for the LegalPT corpus:

| **Corpus** | **Documents** | **Docs. after deduplication** | **Duplicates (%)** |
|--------------------------|:--------------:|:-----------------------------:|:------------------:|
| Ulysses-Tesemõ | 2,216,656 | 1,737,720 | 21.61 |
| MultiLegalPile (PT) | | | |
| CJPG | 14,068,634 | 6,260,096 | 55.50 |
| BRCAD-5 | 3,128,292 | 542,680 | 82.65 |
| EUR-Lex (Caselaw) | 104,312 | 78,893 | 24.37 |
| EUR-Lex (Contracts) | 11,581 | 8,511 | 26.51 |
| EUR-Lex (Legislation) | 232,556 | 95,024 | 59.14 |
| Legal MC4 | 191,174 | 187,637 | 1.85 |
| ParlamentoPT | 2,670,846 | 2,109,931 | 21.00 |
| Iudicium Textum | 198,387 | 153,373 | 22.69 |
| Acordãos TCU | 634,711 | 462,031 | 27.21 |
| DataSTF | 737,769 | 310,119 | 57.97 |
| **Total (LegalPT)** | **24,194,918** | **11,946,015** | **50.63** |
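The percentages in the table above follow from the document counts as 100 × (before − after) / before. A small sketch that recomputes the totals from the per-corpus rows (the dictionary layout and function name are just for illustration):

```python
def duplicate_rate(before, after):
    """Percentage of documents removed by deduplication."""
    return 100.0 * (before - after) / before


# (documents, documents after deduplication), copied from the table above.
counts = {
    "Ulysses-Tesemõ": (2_216_656, 1_737_720),
    "CJPG": (14_068_634, 6_260_096),
    "BRCAD-5": (3_128_292, 542_680),
    "EUR-Lex (Caselaw)": (104_312, 78_893),
    "EUR-Lex (Contracts)": (11_581, 8_511),
    "EUR-Lex (Legislation)": (232_556, 95_024),
    "Legal MC4": (191_174, 187_637),
    "ParlamentoPT": (2_670_846, 2_109_931),
    "Iudicium Textum": (198_387, 153_373),
    "Acordãos TCU": (634_711, 462_031),
    "DataSTF": (737_769, 310_119),
}

total_before = sum(before for before, _ in counts.values())
total_after = sum(after for _, after in counts.values())
total_rate = round(duplicate_rate(total_before, total_after), 2)

for name, (before, after) in counts.items():
    print(f"{name}: {duplicate_rate(before, after):.2f}%")
print(f"Total: {total_before} -> {total_after} ({total_rate}%)")
```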

## Citation

```bibtex

@InProceedings{garcia2024_roberlexpt,

author="Garcia, Eduardo A. S.

and Silva, N{\'a}dia F. F.

and Siqueira, Felipe

and Gomes, Juliana R. S.

and Albuquerque, Hidelberg O.

and Souza, Ellen

and Lima, Eliomar

and De Carvalho, André",

title="RoBERTaLexPT: A Legal RoBERTa Model pretrained with deduplication for Portuguese",

booktitle="Computational Processing of the Portuguese Language",

year="2024",

publisher="Association for Computational Linguistics"

}

```

## Acknowledgment

This work has been supported by the AI Center of Excellence (Centro de Excelência em Inteligência Artificial – CEIA) of the Institute of Informatics at the Federal University of Goiás (INF-UFG). | eduagarcia/LegalPT | [

"task_categories:text-generation",

"size_categories:10M<n<100M",

"language:pt",

"license:cc-by-4.0",

"legal",

"arxiv:2306.02069",

"arxiv:2305.06721",

"arxiv:2010.11934",

"arxiv:2107.06499",

"region:us"

] | 2024-01-09T19:03:24+00:00 | {"language": ["pt"], "license": "cc-by-4.0", "size_categories": ["10M<n<100M"], "task_categories": ["text-generation"], "tags": ["legal"], "dataset_info": [{"config_name": "acordaos_tcu", "features": [{"name": "id", "dtype": "int64"}, {"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}], "splits": [{"name": "train", "num_bytes": 3494790013, "num_examples": 634711}], "download_size": 1653039356, "dataset_size": 3494790013}, {"config_name": "all", "features": [{"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}, {"name": "source", "dtype": "string"}, {"name": "orig_id", "dtype": "int64"}, {"name": "id", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 7047806791, "num_examples": 1399648}], "download_size": 3783112421, "dataset_size": 7047806791}, {"config_name": "datastf", "features": [{"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": 
"int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}, {"name": "id", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 3699382656, "num_examples": 737769}], "download_size": 1724245648, "dataset_size": 3699382656}, {"config_name": "iudicium_textum", "features": [{"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}, {"name": "id", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 896139675, "num_examples": 198387}], "download_size": 408025309, "dataset_size": 896139675}, {"config_name": "mlp_pt_BRCAD-5", "features": [{"name": "id", "dtype": "int64"}, {"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}], "splits": [{"name": "train", "num_bytes": 20311710293, "num_examples": 3128292}], "download_size": 9735599974, "dataset_size": 20311710293}, {"config_name": 
"mlp_pt_CJPG", "features": [{"name": "id", "dtype": "int64"}, {"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}], "splits": [{"name": "train", "num_bytes": 63201157801, "num_examples": 14068634}], "download_size": 30473107046, "dataset_size": 63201157801}, {"config_name": "mlp_pt_eurlex-caselaw", "features": [{"name": "id", "dtype": "int64"}, {"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}], "splits": [{"name": "train", "num_bytes": 1499601545, "num_examples": 104312}], "download_size": 627235870, "dataset_size": 1499601545}, {"config_name": "mlp_pt_eurlex-contracts", "features": [{"name": "id", "dtype": "int64"}, {"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": 
"int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}], "splits": [{"name": "train", "num_bytes": 467200973, "num_examples": 11581}], "download_size": 112805426, "dataset_size": 467200973}, {"config_name": "mlp_pt_eurlex-legislation", "features": [{"name": "id", "dtype": "int64"}, {"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}], "splits": [{"name": "train", "num_bytes": 5669271303, "num_examples": 232556}], "download_size": 1384571339, "dataset_size": 5669271303}, {"config_name": "mlp_pt_legal-mc4", "features": [{"name": "id", "dtype": "int64"}, {"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}], "splits": [{"name": "train", "num_bytes": 4483889482, "num_examples": 191174}], "download_size": 2250422592, "dataset_size": 4483889482}, {"config_name": "parlamento-pt", "features": [{"name": "id", "dtype": "int64"}, {"name": "text", "dtype": "string"}, {"name": "meta", "struct": [{"name": "dedup", "struct": [{"name": "exact_norm", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, 
{"name": "cluster_size", "dtype": "int64"}, {"name": "exact_hash_idx", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}]}, {"name": "minhash", "struct": [{"name": "cluster_main_idx", "dtype": "int64"}, {"name": "cluster_size", "dtype": "int64"}, {"name": "is_duplicate", "dtype": "bool"}, {"name": "minhash_idx", "dtype": "int64"}]}]}]}], "splits": [{"name": "train", "num_bytes": 2867291543, "num_examples": 2670846}], "download_size": 1319479156, "dataset_size": 2867291543}], "configs": [{"config_name": "acordaos_tcu", "data_files": [{"split": "train", "path": "acordaos_tcu/train-*"}]}, {"config_name": "all", "data_files": [{"split": "train", "path": "all/train-*"}]}, {"config_name": "datastf", "data_files": [{"split": "train", "path": "datastf/train-*"}]}, {"config_name": "iudicium_textum", "data_files": [{"split": "train", "path": "iudicium_textum/train-*"}]}, {"config_name": "mlp_pt_BRCAD-5", "data_files": [{"split": "train", "path": "mlp_pt_BRCAD-5/train-*"}]}, {"config_name": "mlp_pt_CJPG", "data_files": [{"split": "train", "path": "mlp_pt_CJPG/train-*"}]}, {"config_name": "mlp_pt_eurlex-caselaw", "data_files": [{"split": "train", "path": "mlp_pt_eurlex-caselaw/train-*"}]}, {"config_name": "mlp_pt_eurlex-contracts", "data_files": [{"split": "train", "path": "mlp_pt_eurlex-contracts/train-*"}]}, {"config_name": "mlp_pt_eurlex-legislation", "data_files": [{"split": "train", "path": "mlp_pt_eurlex-legislation/train-*"}]}, {"config_name": "mlp_pt_legal-mc4", "data_files": [{"split": "train", "path": "mlp_pt_legal-mc4/train-*"}]}, {"config_name": "parlamento-pt", "data_files": [{"split": "train", "path": "parlamento-pt/train-*"}]}]} | 2024-02-09T16:36:38+00:00 | [

"2306.02069",

"2305.06721",

"2010.11934",

"2107.06499"

] | [

"pt"

] | TAGS

#task_categories-text-generation #size_categories-10M<n<100M #language-Portuguese #license-cc-by-4.0 #legal #arxiv-2306.02069 #arxiv-2305.06721 #arxiv-2010.11934 #arxiv-2107.06499 #region-us

| LegalPT

=======

LegalPT aggregates the maximum amount of publicly available legal data in Portuguese, drawing from varied sources including legislation, jurisprudence, legal articles, and government documents.

Dataset Details

---------------

The dataset is composed of six corpora:

Ulysses-Tesemõ, MultiLegalPile (PT), ParlamentoPT,

Iudicium Textum, Acordãos TCU, and

DataSTF.

* MultiLegalPile: a multilingual corpus of legal texts comprising 689 GiB of data, covering 24 languages in 17 jurisdictions. The corpus is separated by language, and the Portuguese subset contains 92 GiB of data (13.76 billion words). This subset includes the jurisprudence of the Court of Justice of São Paulo (CJPG), appeals from the 5th Regional Federal Court (BRCAD-5), the Portuguese subset of legal documents from the European Union, known as EUR-Lex, and a filtered subset of legal documents from MC4.

* Ulysses-Tesemõ: a legal corpus in Brazilian Portuguese, composed of 2.2 million documents, totaling about 26 GiB of text obtained from 96 different data sources. These sources encompass legal and legislative documents, academic papers, news, and related comments. The data was collected through web scraping of government websites.

* ParlamentoPT: a corpus for training language models in European Portuguese. The data was collected from the Portuguese government portal and consists of 2.6 million documents of transcriptions of debates in the Portuguese Parliament.

* Iudicium Textum: consists of rulings, votes, and reports from the Supreme Federal Court (STF) of Brazil, published between 2010 and 2018. The dataset contains 1GiB of data extracted from PDFs.

* Acordãos TCU: an open dataset from the Tribunal de Contas da União (Brazilian Federal Court of Accounts), containing 600,000 documents obtained by web scraping government websites. The documents span from 1992 to 2019.

* DataSTF: a dataset of monocratic decisions from the Superior Court of Justice (STJ) in Brazil, containing 700,000 documents (5GiB of data).

### Dataset Description

* Curated by:

* Funded by:

* Language(s) (NLP): Brazilian Portuguese (pt-BR)

* License: Creative Commons Attribution 4.0 International Public License

### Dataset Sources

* Repository: URL

* Paper:

Dataset Structure

-----------------

Data Collection and Processing

------------------------------

LegalPT is deduplicated using MinHash algorithm and Locality Sensitive Hashing, following the approach of Lee et al. (2022).

We used 5-grams and a signature of size 256, treating two documents as duplicates if their Jaccard similarity exceeded 0.7.

Duplicate rates found by the MinHash-LSH algorithm for the LegalPT corpus:

Acknowledgment

--------------

This work has been supported by the AI Center of Excellence (Centro de Excelência em Inteligência Artificial – CEIA) of the Institute of Informatics at the Federal University of Goiás (INF-UFG).

| [

"### Dataset Description\n\n\n* Curated by:\n* Funded by:\n* Language(s) (NLP): Brazilian Portuguese (pt-BR)\n* License: Creative Commons Attribution 4.0 International Public License",

"### Dataset Sources\n\n\n* Repository: URL\n* Paper:\n\n\nDataset Structure\n-----------------\n\n\nData Collection and Processing\n------------------------------\n\n\nLegalPT is deduplicated using MinHash algorithm and Locality Sensitive Hashing, following the approach of Lee et al. (2022).\n\n\nWe used 5-grams and a signature of size 256, considering two documents to be identical if their Jaccard Similarity exceeded 0.7.\n\n\nDuplicate rate found by the Minhash-LSH algorithm for the LegalPT corpus:\n\n\n\nAcknowledgment\n--------------\n\n\nThis work has been supported by the AI Center of Excellence (Centro de Excelência em Inteligência Artificial – CEIA) of the Institute of Informatics at the Federal University of Goiás (INF-UFG)."

] | [

"TAGS\n#task_categories-text-generation #size_categories-10M<n<100M #language-Portuguese #license-cc-by-4.0 #legal #arxiv-2306.02069 #arxiv-2305.06721 #arxiv-2010.11934 #arxiv-2107.06499 #region-us \n",

"### Dataset Description\n\n\n* Curated by:\n* Funded by:\n* Language(s) (NLP): Brazilian Portuguese (pt-BR)\n* License: Creative Commons Attribution 4.0 International Public License",

"### Dataset Sources\n\n\n* Repository: URL\n* Paper:\n\n\nDataset Structure\n-----------------\n\n\nData Collection and Processing\n------------------------------\n\n\nLegalPT is deduplicated using MinHash algorithm and Locality Sensitive Hashing, following the approach of Lee et al. (2022).\n\n\nWe used 5-grams and a signature of size 256, considering two documents to be identical if their Jaccard Similarity exceeded 0.7.\n\n\nDuplicate rate found by the Minhash-LSH algorithm for the LegalPT corpus:\n\n\n\nAcknowledgment\n--------------\n\n\nThis work has been supported by the AI Center of Excellence (Centro de Excelência em Inteligência Artificial – CEIA) of the Institute of Informatics at the Federal University of Goiás (INF-UFG)."

] |

f49945e2ff49770328f685873ea1dfb00cda218b |

# REBUS

REBUS: A Robust Evaluation Benchmark of Understanding Symbols

[**Paper**](https://arxiv.org/abs/2401.05604) | [**🤗 Dataset**](https://huggingface.co/datasets/cavendishlabs/rebus) | [**GitHub**](https://github.com/cvndsh/rebus) | [**Website**](https://cavendishlabs.org/rebus/)

## Introduction

Recent advances in large language models have led to the development of multimodal LLMs (MLLMs), which take both image data and text as an input. Virtually all of these models have been announced within the past year, leading to a significant need for benchmarks evaluating the abilities of these models to reason truthfully and accurately on a diverse set of tasks. When Google announced Gemini Pro (Gemini Team et al., 2023), they displayed its ability to solve rebuses—wordplay puzzles which involve creatively adding and subtracting letters from words derived from text and images. The diversity of rebuses allows for a broad evaluation of multimodal reasoning capabilities, including image recognition, multi-step reasoning, and understanding the human creator's intent.

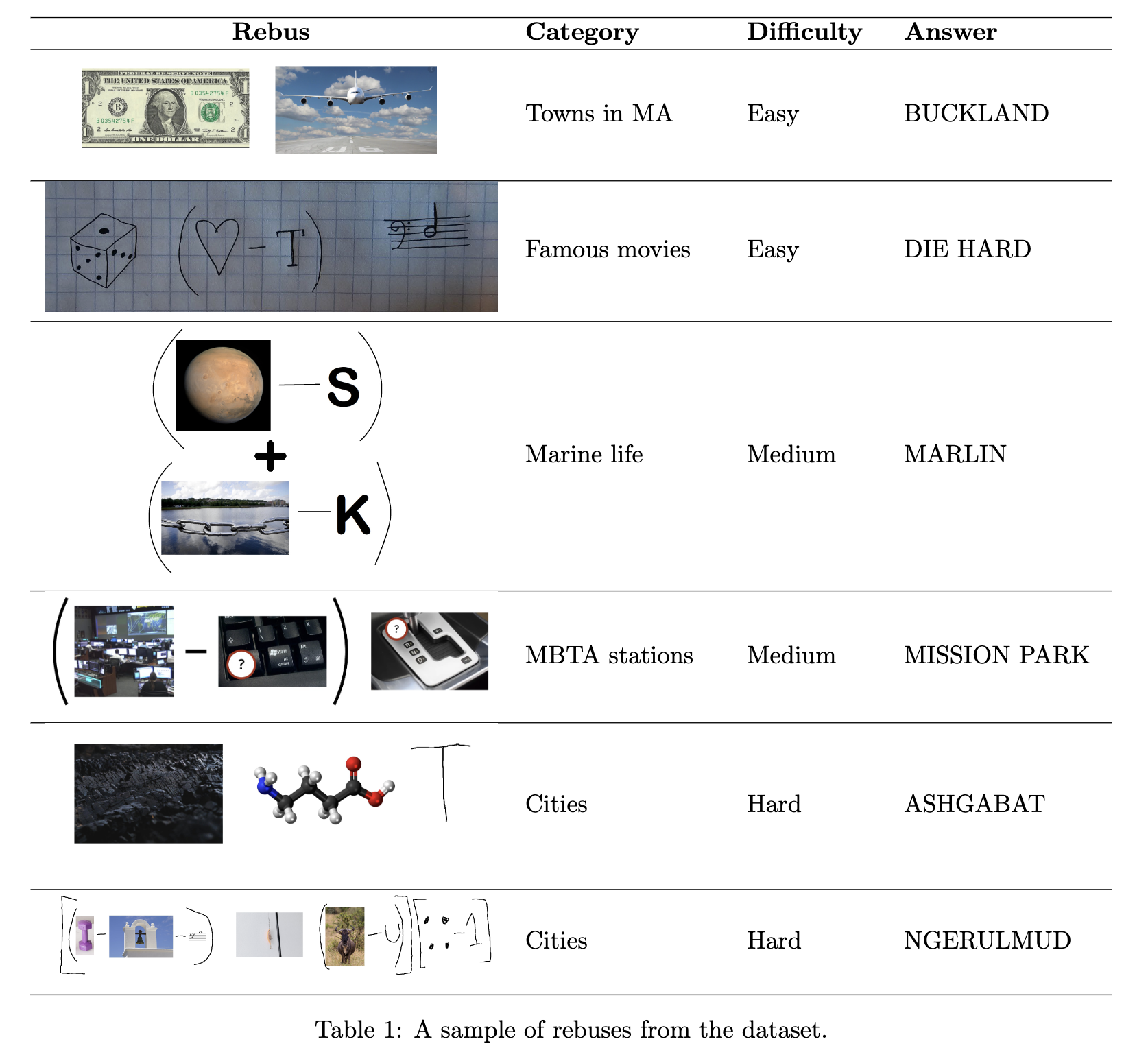

We present REBUS: a collection of 333 hand-crafted rebuses spanning 13 diverse categories, including hand-drawn and digital images created by nine contributors. Samples are presented in the table below. Notably, GPT-4V, the most powerful model we evaluated, answered only 24% of puzzles correctly, highlighting the poor capabilities of MLLMs in new and unexpected domains to which human reasoning generalizes with comparative ease. Open-source models perform even worse, with a median accuracy below 1%. We notice that models often give faithless explanations, fail to change their minds after an initial approach doesn't work, and remain highly uncalibrated on their own abilities.

## Evaluation results

| Model | Overall | Easy | Medium | Hard |
| ----------------- | ------------- | ------------- | ------------- | ------------ |
| GPT-4V | **24.0** | **33.0** | **13.2** | **7.1** |
| Gemini Pro | 13.2 | 19.4 | 5.3 | 3.6 |
| LLaVa-1.5-13B | 1.8 | 2.6 | 0.9 | 0.0 |
| LLaVa-1.5-7B | 1.5 | 2.6 | 0.0 | 0.0 |
| BLIP2-FLAN-T5-XXL | 0.9 | 0.5 | 1.8 | 0.0 |
| CogVLM | 0.9 | 1.6 | 0.0 | 0.0 |
| QWEN | 0.9 | 1.6 | 0.0 | 0.0 |
| InstructBLIP | 0.6 | 0.5 | 0.9 | 0.0 |
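The claim that open-source models have a median accuracy below 1% can be checked against the Overall column above. The sketch assumes that GPT-4V and Gemini Pro are the only proprietary models in the table; that grouping is not stated explicitly in the card.

```python
from statistics import median

# Overall accuracy (%) per model, copied from the table above.
overall = {
    "GPT-4V": 24.0,
    "Gemini Pro": 13.2,
    "LLaVa-1.5-13B": 1.8,
    "LLaVa-1.5-7B": 1.5,
    "BLIP2-FLAN-T5-XXL": 0.9,
    "CogVLM": 0.9,
    "QWEN": 0.9,
    "InstructBLIP": 0.6,
}

# Assumption: these two are the proprietary models; the rest are open-source.
proprietary = {"GPT-4V", "Gemini Pro"}

open_source_scores = [acc for model, acc in overall.items() if model not in proprietary]
open_source_median = median(open_source_scores)

print(f"Median open-source accuracy: {open_source_median:.1f}%")
```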

| cavendishlabs/rebus | [

"arxiv:2401.05604",

"region:us"

] | 2024-01-09T19:10:22+00:00 | {"dataset_info": {"features": [{"name": "Filename", "dtype": "string"}, {"name": "Solution", "dtype": "string"}, {"name": "Also accept", "dtype": "string"}, {"name": "Theme", "dtype": "string"}, {"name": "Difficulty", "dtype": "string"}, {"name": "Exact spelling?", "dtype": "string"}, {"name": "Specific reference", "dtype": "string"}, {"name": "Reading?", "dtype": "string"}, {"name": "Attribution", "dtype": "string"}, {"name": "Author", "dtype": "string"}, {"name": "image", "dtype": "image"}], "splits": [{"name": "train", "num_bytes": 51545282.0, "num_examples": 333}], "download_size": 47656838, "dataset_size": 51545282.0}, "configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}]} | 2024-01-12T01:30:58+00:00 | [

"2401.05604"

] | [] | TAGS

#arxiv-2401.05604 #region-us

| REBUS

=====

REBUS: A Robust Evaluation Benchmark of Understanding Symbols

Paper | Dataset | GitHub | Website

Introduction

------------

Recent advances in large language models have led to the development of multimodal LLMs (MLLMs), which take both image data and text as an input. Virtually all of these models have been announced within the past year, leading to a significant need for benchmarks evaluating the abilities of these models to reason truthfully and accurately on a diverse set of tasks. When Google announced Gemini Pro (Gemini Team et al., 2023), they displayed its ability to solve rebuses—wordplay puzzles which involve creatively adding and subtracting letters from words derived from text and images. The diversity of rebuses allows for a broad evaluation of multimodal reasoning capabilities, including image recognition, multi-step reasoning, and understanding the human creator's intent.

We present REBUS: a collection of 333 hand-crafted rebuses spanning 13 diverse categories, including hand-drawn and digital images created by nine contributors. Samples are presented in the table below. Notably, GPT-4V, the most powerful model we evaluated, answered only 24% of puzzles correctly, highlighting the poor capabilities of MLLMs in new and unexpected domains to which human reasoning generalizes with comparative ease. Open-source models perform even worse, with a median accuracy below 1%. We notice that models often give faithless explanations, fail to change their minds after an initial approach doesn't work, and remain highly uncalibrated on their own abilities.

!image

Evaluation results

------------------

| [] | [

"TAGS\n#arxiv-2401.05604 #region-us \n"

] |

25046c986975a77f226ae59deeb12df4f86a3a40 | # Dataset Card for "module_pairwise_dataset_neg_from_pos_pool_v3"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | zhan1993/module_pairwise_dataset_neg_from_pos_pool_v3 | [

"region:us"

] | 2024-01-09T20:41:36+00:00 | {"configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}], "dataset_info": {"features": [{"name": "eval_task", "dtype": "string"}, {"name": "sources_texts", "dtype": "string"}, {"name": "positive_expert_names", "dtype": "string"}, {"name": "negative_expert_names", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 258629092, "num_examples": 120865}], "download_size": 28424463, "dataset_size": 258629092}} | 2024-01-09T20:41:40+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "module_pairwise_dataset_neg_from_pos_pool_v3"

More Information needed | [

"# Dataset Card for \"module_pairwise_dataset_neg_from_pos_pool_v3\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"module_pairwise_dataset_neg_from_pos_pool_v3\"\n\nMore Information needed"

] |

4bf71c546082f351dd4abcd3850202bff4671c47 |

### Overview

DPO dataset meant to enhance Python coding abilities.

This dataset uses the excellent https://huggingface.co/datasets/Vezora/Tested-22k-Python-Alpaca dataset as the "chosen" responses, given this dataset was already tested and validated.

The "rejected" values were generated with a mix of airoboros-l2-13b-3.1 and bagel-7b-v0.1.

The rejected values may actually be perfectly fine, but the assumption here is that they are generally of lower quality than their chosen counterparts. Items with duplicate code blocks were removed.
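The card does not say how duplicate code blocks were detected. One possible reading, sketched below, is that a pair is dropped when the rejected response contains exactly the same fenced code as the chosen one, since such a pair carries no preference signal. The `prompt` field name, the helper names and the fence-matching regex are all hypothetical.

```python
import re

FENCE = "`" * 3  # a Markdown code fence, built here to avoid nesting fences
CODE_BLOCK = re.compile(FENCE + r"[^\n]*\n(.*?)" + FENCE, re.DOTALL)


def code_blocks(text):
    """Return the stripped contents of every fenced code block in a response."""
    return tuple(block.strip() for block in CODE_BLOCK.findall(text))


def drop_identical_code(items):
    """Keep only pairs whose chosen and rejected code blocks differ."""
    kept = []
    for item in items:
        chosen = code_blocks(item["chosen"])
        rejected = code_blocks(item["rejected"])
        if chosen and chosen == rejected:
            continue  # same code on both sides: no preference signal
        kept.append(item)
    return kept


# Made-up examples that only illustrate the record shape.
same = {
    "prompt": "Print one.",
    "chosen": f"{FENCE}python\nprint(1)\n{FENCE}",
    "rejected": f"Sure:\n{FENCE}python\nprint(1)\n{FENCE}",
}
different = {
    "prompt": "Print one.",
    "chosen": f"{FENCE}python\nprint(1)\n{FENCE}",
    "rejected": f"{FENCE}python\nprint(2)\n{FENCE}",
}

filtered = drop_identical_code([same, different])
```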

### Contribute

If you're interested in new functionality/datasets, take a look at [bagel repo](https://github.com/jondurbin/bagel) and [airoboros](https://github.com/jondurbin/airoboros) and either make a PR or open an issue with details.

To help me with the fine-tuning costs, dataset generation, etc., please use one of the following:

- https://bmc.link/jondurbin

- ETH 0xce914eAFC2fe52FdceE59565Dd92c06f776fcb11

- BTC bc1qdwuth4vlg8x37ggntlxu5cjfwgmdy5zaa7pswf | jondurbin/py-dpo-v0.1 | [

"language:code",

"license:cc-by-4.0",

"region:us"

] | 2024-01-09T20:57:21+00:00 | {"language": ["code"], "license": "cc-by-4.0"} | 2024-01-11T10:16:18+00:00 | [] | [

"code"

] | TAGS

#language-code #license-cc-by-4.0 #region-us

|

### Overview

A DPO dataset meant to enhance Python coding abilities.

This dataset uses the excellent URL dataset as the "chosen" responses, given this dataset was already tested and validated.

The "rejected" values were generated with a mix of airoboros-l2-13b-3.1 and bagel-7b-v0.1.

The rejected values may actually be perfectly fine, but the assumption here is that they are generally of lower quality than their chosen counterparts. Items with duplicate code blocks were removed.

### Contribute

If you're interested in new functionality/datasets, take a look at bagel repo and airoboros and either make a PR or open an issue with details.

To help me with the fine-tuning costs, dataset generation, etc., please use one of the following:

- URL

- ETH 0xce914eAFC2fe52FdceE59565Dd92c06f776fcb11

- BTC bc1qdwuth4vlg8x37ggntlxu5cjfwgmdy5zaa7pswf | [

"### Overview\n\nDPO dataset meant to enhance python coding abilities.\n\nThis dataset uses the excellent URL dataset as the \"chosen\" responses, given this dataset was already tested and validated.\n\nThe \"rejected\" values were generated with a mix of airoboros-l2-13b-3.1 and bagel-7b-v0.1.\n\nThe rejected values may actually be perfectly fine, but the assumption here is that the values are generally a lower quality than the chosen counterpart. Items with duplicate code blocks were removed.",

"### Contribute\n\nIf you're interested in new functionality/datasets, take a look at bagel repo and airoboros and either make a PR or open an issue with details.\n\nTo help me with the fine-tuning costs, dataset generation, etc., please use one of the following:\n\n- URL\n- ETH 0xce914eAFC2fe52FdceE59565Dd92c06f776fcb11\n- BTC bc1qdwuth4vlg8x37ggntlxu5cjfwgmdy5zaa7pswf"

] | [

"TAGS\n#language-code #license-cc-by-4.0 #region-us \n",

"### Overview\n\nDPO dataset meant to enhance python coding abilities.\n\nThis dataset uses the excellent URL dataset as the \"chosen\" responses, given this dataset was already tested and validated.\n\nThe \"rejected\" values were generated with a mix of airoboros-l2-13b-3.1 and bagel-7b-v0.1.\n\nThe rejected values may actually be perfectly fine, but the assumption here is that the values are generally a lower quality than the chosen counterpart. Items with duplicate code blocks were removed.",

"### Contribute\n\nIf you're interested in new functionality/datasets, take a look at bagel repo and airoboros and either make a PR or open an issue with details.\n\nTo help me with the fine-tuning costs, dataset generation, etc., please use one of the following:\n\n- URL\n- ETH 0xce914eAFC2fe52FdceE59565Dd92c06f776fcb11\n- BTC bc1qdwuth4vlg8x37ggntlxu5cjfwgmdy5zaa7pswf"

] |

7b630ac15135be066dbdb96b5edb44ac56f41dc7 | # Dataset Card for "cai-conversation-dev1704834920"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | vwxyzjn/cai-conversation-dev1704834920 | [

"region:us"

] | 2024-01-09T21:18:42+00:00 | {"dataset_info": {"features": [{"name": "index", "dtype": "int64"}, {"name": "prompt", "dtype": "string"}, {"name": "init_prompt", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "init_response", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "critic_prompt", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "critic_response", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "revision_prompt", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "revision_response", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "messages", "list": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "chosen", "list": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "rejected", "list": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}], "splits": [{"name": "train_sft", "num_bytes": 273314, "num_examples": 64}, {"name": "train_prefs", "num_bytes": 255493, "num_examples": 64}], "download_size": 266824, "dataset_size": 528807}, "configs": [{"config_name": "default", "data_files": [{"split": "train_sft", "path": "data/train_sft-*"}, {"split": "train_prefs", "path": "data/train_prefs-*"}]}]} | 2024-01-09T21:18:44+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "cai-conversation-dev1704834920"

More Information needed | [

"# Dataset Card for \"cai-conversation-dev1704834920\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"cai-conversation-dev1704834920\"\n\nMore Information needed"

] |

6b3d377e6c4a206c0290d15f061f84417998f4e8 | # Dataset Card for "parallel-9"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | gowitheflowlab/parallel-9 | [

"region:us"

] | 2024-01-09T21:41:44+00:00 | {"configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}], "dataset_info": {"features": [{"name": "sentence1", "dtype": "string"}, {"name": "sentence2", "dtype": "string"}, {"name": "label", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 649296909.6590501, "num_examples": 3322980}], "download_size": 428488796, "dataset_size": 649296909.6590501}} | 2024-01-09T21:54:40+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "parallel-9"

More Information needed | [

"# Dataset Card for \"parallel-9\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"parallel-9\"\n\nMore Information needed"

] |

8b02cb3ea33e74861fcc4d3c7b266f83b3b8c6b9 | # FLORES-200 EN-EL with prompts for translation by LLMs

Based on the [FLORES-200](https://huggingface.co/datasets/Muennighoff/flores200) dataset.

Publication:

@article{nllb2022,

author = {NLLB Team, Marta R. Costa-jussà, James Cross, Onur Çelebi, Maha Elbayad, Kenneth Heafield, Kevin Heffernan, Elahe Kalbassi, Janice Lam, Daniel Licht, Jean Maillard, Anna Sun, Skyler Wang, Guillaume Wenzek, Al Youngblood, Bapi Akula, Loic Barrault, Gabriel Mejia Gonzalez, Prangthip Hansanti, John Hoffman, Semarley Jarrett, Kaushik Ram Sadagopan, Dirk Rowe, Shannon Spruit, Chau Tran, Pierre Andrews, Necip Fazil Ayan, Shruti Bhosale, Sergey Edunov, Angela Fan, Cynthia Gao, Vedanuj Goswami, Francisco Guzmán, Philipp Koehn, Alexandre Mourachko, Christophe Ropers, Safiyyah Saleem, Holger Schwenk, Jeff Wang},

title = {No Language Left Behind: Scaling Human-Centered Machine Translation},

year = {2022}

}

Number of examples: 1012

## FLORES-200 for EN to EL with 0-shot prompts

Contains 2 prompt variants:

- EN:\n\[English Sentence\]\nEL:

- English:\n\[English Sentence\]\nΕλληνικά:

## FLORES-200 for EL to EN with 0-shot prompts

Contains 2 prompt variants:

- EL:\n\[Greek Sentence\]\nEN:

- Ελληνικά:\n\[Greek Sentence\]\nEnglish:
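
As an illustration of the prompt layouts listed above, here is a small sketch that builds both variants for either direction. The function name `build_prompts` and the `direction` strings are hypothetical, and the sketch assumes the short EL-to-EN variant ends with the target label `EN:`, mirroring the EN-to-EL one.

```python
def build_prompts(sentence, direction="en2el"):
    """Return the two 0-shot prompt variants for one source sentence.

    `direction` is a hypothetical switch: "en2el" or "el2en".
    """
    if direction == "en2el":
        labels = [("EN", "EL"), ("English", "Ελληνικά")]
    elif direction == "el2en":
        # Assumption: the short variant closes with the target label "EN:".
        labels = [("EL", "EN"), ("Ελληνικά", "English")]
    else:
        raise ValueError("direction must be 'en2el' or 'el2en'")
    # Source label, newline, sentence, newline, target label.
    return [f"{src}:\n{sentence}\n{tgt}:" for src, tgt in labels]
```

Each prompt ends with the target-language label so that the model's continuation is the translation itself.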

## How to load datasets

```python
from datasets import load_dataset

input_file = 'flores200.en2el.test.0-shot.json'

dataset = load_dataset(
    'json',
    data_files=input_file,
    field='examples',
    split='train'
)
```

## How to generate translation results with different configurations

```python
from multiprocessing import cpu_count

# NOTE: `config` (generation settings) and `config_name` (the key under which
# results are stored) must be defined before the call to `dataset.map` below.

def generate_translations(datapoint, config, config_name):
    for idx, variant in enumerate(datapoint["prompts_results"]):
        # REPLACE generate WITH ACTUAL FUNCTION WHICH TAKES GENERATION CONFIG
        result = generate(variant["prompt"], config=config)
        # Store this configuration's output next to the prompt variant.
        datapoint["prompts_results"][idx].update({config_name: result})
    return datapoint

dataset = dataset.map(
    function=generate_translations,
    fn_kwargs={"config": config, "config_name": config_name},
    keep_in_memory=False,
    num_proc=min(len(dataset), cpu_count()),
)
```

## How to push updated datasets to hub

```python
from huggingface_hub import HfApi

input_file = "flores200.en2el.test.0-shot.json"
model_name = "meltemi-v0.2"

# Insert the model name before the extension, e.g. "...0-shot.meltemi-v0.2.json".
output_file = input_file.replace(".json", ".{}.json".format(model_name))

dataset.to_json(
    output_file,
    force_ascii=False,
    indent=4,
    orient="index",
)

api = HfApi()
api.upload_file(
    path_or_fileobj=output_file,
    path_in_repo="results/{}/{}".format(model_name, output_file),
    repo_id="ilsp/flores200-en-el-prompt",
    repo_type="dataset",
)
```

| ilsp/flores200_en-el | [

"task_categories:translation",

"size_categories:1K<n<10K",

"language:en",

"language:el",

"license:cc-by-sa-4.0",

"region:us"

] | 2024-01-09T21:50:20+00:00 | {"language": ["en", "el"], "license": "cc-by-sa-4.0", "size_categories": ["1K<n<10K"], "task_categories": ["translation"], "dataset_info": {"features": [{"name": "en", "dtype": "string"}, {"name": "el", "dtype": "string"}], "splits": [{"name": "validation", "num_bytes": 406555, "num_examples": 997}, {"name": "test", "num_bytes": 427413, "num_examples": 1012}], "download_size": 481524, "dataset_size": 833968}, "configs": [{"config_name": "default", "data_files": [{"split": "validation", "path": "data/validation-*"}, {"split": "test", "path": "data/test-*"}]}]} | 2024-01-23T14:37:02+00:00 | [] | [

"en",

"el"

] | TAGS

#task_categories-translation #size_categories-1K<n<10K #language-English #language-Modern Greek (1453-) #license-cc-by-sa-4.0 #region-us

| # FLORES-200 EN-EL with prompts for translation by LLMs

Based on FLORES-200 dataset.

Publication:

@article{nllb2022,

author = {NLLB Team, Marta R. Costa-jussà, James Cross, Onur Çelebi, Maha Elbayad, Kenneth Heafield, Kevin Heffernan, Elahe Kalbassi, Janice Lam, Daniel Licht, Jean Maillard, Anna Sun, Skyler Wang, Guillaume Wenzek, Al Youngblood, Bapi Akula, Loic Barrault, Gabriel Mejia Gonzalez, Prangthip Hansanti, John Hoffman, Semarley Jarrett, Kaushik Ram Sadagopan, Dirk Rowe, Shannon Spruit, Chau Tran, Pierre Andrews, Necip Fazil Ayan, Shruti Bhosale, Sergey Edunov, Angela Fan, Cynthia Gao, Vedanuj Goswami, Francisco Guzmán, Philipp Koehn, Alexandre Mourachko, Christophe Ropers, Safiyyah Saleem, Holger Schwenk, Jeff Wang},

title = {No Language Left Behind: Scaling Human-Centered Machine Translation},

year = {2022}

}

Number of examples : 1012

## FLORES-200 for EN to EL with 0-shot prompts

Contains 2 prompt variants:

- EN:\n\[English Sentence\]\nEL:

- English:\n\[English Sentence\]\nΕλληνικά:

## FLORES-200 for EL to EN with 0-shot prompts

Contains 2 prompt variants:

- EL:\n\[Greek Sentence\]\nEN:

- Ελληνικά:\n\[Greek Sentence\]\nEnglish:

## How to load datasets

## How to generate translation results with different configurations

## How to push updated datasets to hub

| [

"# FLORES-200 EN-EL with prompts for translation by LLMs\nBased on FLORES-200 dataset.\n\nPublication:\n@article{nllb2022,\n author = {NLLB Team, Marta R. Costa-jussà, James Cross, Onur Çelebi, Maha Elbayad, Kenneth Heafield, Kevin Heffernan, Elahe Kalbassi, Janice Lam, Daniel Licht, Jean Maillard, Anna Sun, Skyler Wang, Guillaume Wenzek, Al Youngblood, Bapi Akula, Loic Barrault, Gabriel Mejia Gonzalez, Prangthip Hansanti, John Hoffman, Semarley Jarrett, Kaushik Ram Sadagopan, Dirk Rowe, Shannon Spruit, Chau Tran, Pierre Andrews, Necip Fazil Ayan, Shruti Bhosale, Sergey Edunov, Angela Fan, Cynthia Gao, Vedanuj Goswami, Francisco Guzmán, Philipp Koehn, Alexandre Mourachko, Christophe Ropers, Safiyyah Saleem, Holger Schwenk, Jeff Wang},\n title = {No Language Left Behind: Scaling Human-Centered Machine Translation},\n year = {2022}\n}\n\nNumber of examples : 1012",

"## FLORES-200 for EN to EL with 0-shot prompts\nContains 2 prompt variants:\n- EN:\\n\\[English Sentence\\]\\nEL:\n- English:\\n\\[English Sentence\\]\\nΕλληνικά:",

"## FLORES-200 for EL to EN with 0-shot prompts\nContains 2 prompt variants:\n- EL:\\n\\[Greek Sentence\\]\\nEL:\n- Ελληνικά:\\n\\[Greek Sentence\\]\\nEnglish:",

"## How to load datasets",

"## How to generate translation results with different configurations",

"## How to push updated datasets to hub"

] | [

"TAGS\n#task_categories-translation #size_categories-1K<n<10K #language-English #language-Modern Greek (1453-) #license-cc-by-sa-4.0 #region-us \n",

"# FLORES-200 EN-EL with prompts for translation by LLMs\nBased on FLORES-200 dataset.\n\nPublication:\n@article{nllb2022,\n author = {NLLB Team, Marta R. Costa-jussà, James Cross, Onur Çelebi, Maha Elbayad, Kenneth Heafield, Kevin Heffernan, Elahe Kalbassi, Janice Lam, Daniel Licht, Jean Maillard, Anna Sun, Skyler Wang, Guillaume Wenzek, Al Youngblood, Bapi Akula, Loic Barrault, Gabriel Mejia Gonzalez, Prangthip Hansanti, John Hoffman, Semarley Jarrett, Kaushik Ram Sadagopan, Dirk Rowe, Shannon Spruit, Chau Tran, Pierre Andrews, Necip Fazil Ayan, Shruti Bhosale, Sergey Edunov, Angela Fan, Cynthia Gao, Vedanuj Goswami, Francisco Guzmán, Philipp Koehn, Alexandre Mourachko, Christophe Ropers, Safiyyah Saleem, Holger Schwenk, Jeff Wang},\n title = {No Language Left Behind: Scaling Human-Centered Machine Translation},\n year = {2022}\n}\n\nNumber of examples : 1012",

"## FLORES-200 for EN to EL with 0-shot prompts\nContains 2 prompt variants:\n- EN:\\n\\[English Sentence\\]\\nEL:\n- English:\\n\\[English Sentence\\]\\nΕλληνικά:",

"## FLORES-200 for EL to EN with 0-shot prompts\nContains 2 prompt variants:\n- EL:\\n\\[Greek Sentence\\]\\nEL:\n- Ελληνικά:\\n\\[Greek Sentence\\]\\nEnglish:",

"## How to load datasets",

"## How to generate translation results with different configurations",

"## How to push updated datasets to hub"

] |

ddc0edd55a8a3b082cc88f56409ca4f91c6aa1f3 | # Dataset Card for "cai-conversation-dev1704836562"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | vwxyzjn/cai-conversation-dev1704836562 | [

"region:us"

] | 2024-01-09T21:53:37+00:00 | {"dataset_info": {"features": [{"name": "index", "dtype": "int64"}, {"name": "prompt", "dtype": "string"}, {"name": "init_prompt", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "init_response", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "critic_prompt", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "critic_response", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "revision_prompt", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "revision_response", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "messages", "list": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "chosen", "list": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "rejected", "list": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}], "splits": [{"name": "train_sft", "num_bytes": 265134, "num_examples": 64}, {"name": "train_prefs", "num_bytes": 247352, "num_examples": 64}], "download_size": 263052, "dataset_size": 512486}, "configs": [{"config_name": "default", "data_files": [{"split": "train_sft", "path": "data/train_sft-*"}, {"split": "train_prefs", "path": "data/train_prefs-*"}]}]} | 2024-01-09T21:53:39+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "cai-conversation-dev1704836562"

More Information needed | [

"# Dataset Card for \"cai-conversation-dev1704836562\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"cai-conversation-dev1704836562\"\n\nMore Information needed"

] |

9fd657c9c8120af8358a422cf777c86dbb231a7c | # Dataset Card for "parallel-all"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | gowitheflowlab/parallel-all | [

"region:us"

] | 2024-01-09T21:56:56+00:00 | {"configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}], "dataset_info": {"features": [{"name": "sentence1", "dtype": "string"}, {"name": "sentence2", "dtype": "string"}, {"name": "label", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 817156947.0942363, "num_examples": 4102854}], "download_size": 536400953, "dataset_size": 817156947.0942363}} | 2024-01-09T22:09:40+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "parallel-all"

More Information needed | [

"# Dataset Card for \"parallel-all\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"parallel-all\"\n\nMore Information needed"

] |

e751bcc90f4266cd682c959c4a2af77a9aebe469 |

Total train samples: 168397

Total test samples: 49233

Total tasks: 7

| Task | Train | Test |

| ---- | ----- | ---- |

|reference_number_association_without_question_boxes/2023-01-01|11481|3756|

|reference_numbers/2023-01-01|12739|3974|

|reference_number_association_with_question_boxes/2023-01-01|11481|3756|

|table_cell_incremental_without_question_boxes/2023-01-01|22884|10566|

|table_cell_incremental_with_question_boxes/2023-01-01|17986|6079|

|table_header_with_question_boxes/2023-01-01|80278|17362|

|key_value/2023-01-01|11548|3740|

Total artifact_qids: 15860
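
The per-task counts can be checked against the stated totals with a few lines of Python. This is only a consistency check over the numbers in the table above; the variable names are arbitrary.

```python
# (train, test) counts per task, copied from the table above.
counts = {
    "reference_number_association_without_question_boxes/2023-01-01": (11481, 3756),
    "reference_numbers/2023-01-01": (12739, 3974),
    "reference_number_association_with_question_boxes/2023-01-01": (11481, 3756),
    "table_cell_incremental_without_question_boxes/2023-01-01": (22884, 10566),
    "table_cell_incremental_with_question_boxes/2023-01-01": (17986, 6079),
    "table_header_with_question_boxes/2023-01-01": (80278, 17362),
    "key_value/2023-01-01": (11548, 3740),
}

train_total = sum(train for train, _ in counts.values())
test_total = sum(test for _, test in counts.values())

print(len(counts), train_total, test_total)  # 7 168397 49233
```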

| looppayments/question_answering_token_classification_addendum | [

"region:us"

] | 2024-01-09T22:19:32+00:00 | {"pretty_name": "Question Answering Token Classification"} | 2024-02-11T00:04:56+00:00 | [] | [] | TAGS

#region-us

| Total train samples: 168397

Total test samples: 49233

Total tasks: 7

Task: reference\_number\_association\_without\_question\_boxes/2023-01-01, Train: 11481, Test: 3756

Task: reference\_numbers/2023-01-01, Train: 12739, Test: 3974

Task: reference\_number\_association\_with\_question\_boxes/2023-01-01, Train: 11481, Test: 3756

Task: table\_cell\_incremental\_without\_question\_boxes/2023-01-01, Train: 22884, Test: 10566

Task: table\_cell\_incremental\_with\_question\_boxes/2023-01-01, Train: 17986, Test: 6079

Task: table\_header\_with\_question\_boxes/2023-01-01, Train: 80278, Test: 17362

Task: key\_value/2023-01-01, Train: 11548, Test: 3740

Task: Total artifact\_qids: 15860, Train: , Test:

| [] | [

"TAGS\n#region-us \n"

] |

186ec54deb42b0ece6ca5565db1e3b31dc87ed37 | # Dataset Card for "mmlu-abstract_algebra-neg-prepend-verbal"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | joey234/mmlu-abstract_algebra-neg-prepend-verbal | [

"region:us"

] | 2024-01-10T00:09:24+00:00 | {"configs": [{"config_name": "default", "data_files": [{"split": "dev", "path": "data/dev-*"}, {"split": "test", "path": "data/test-*"}]}], "dataset_info": {"features": [{"name": "question", "dtype": "string"}, {"name": "choices", "sequence": "string"}, {"name": "answer", "dtype": {"class_label": {"names": {"0": "A", "1": "B", "2": "C", "3": "D"}}}}, {"name": "negate_openai_prompt", "struct": [{"name": "content", "dtype": "string"}, {"name": "role", "dtype": "string"}]}, {"name": "neg_question", "dtype": "string"}, {"name": "fewshot_context", "dtype": "string"}, {"name": "ori_prompt", "dtype": "string"}, {"name": "fewshot_context_neg", "dtype": "string"}, {"name": "fewshot_context_ori", "dtype": "string"}, {"name": "neg_prompt", "dtype": "string"}], "splits": [{"name": "dev", "num_bytes": 5843, "num_examples": 5}, {"name": "test", "num_bytes": 554556, "num_examples": 100}], "download_size": 90058, "dataset_size": 560399}} | 2024-01-10T05:14:13+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "mmlu-abstract_algebra-neg-prepend-verbal"

More Information needed | [

"# Dataset Card for \"mmlu-abstract_algebra-neg-prepend-verbal\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"mmlu-abstract_algebra-neg-prepend-verbal\"\n\nMore Information needed"

] |

194f772681ecf1bb14fe891227c1ac50d1677cc3 | # Dataset Card for "paraphrase_collections_enhanced"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | xwjzds/paraphrase_collections_enhanced | [

"region:us"

] | 2024-01-10T01:06:53+00:00 | {"dataset_info": {"features": [{"name": "input", "dtype": "string"}, {"name": "output", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 37345974, "num_examples": 243754}], "download_size": 22571420, "dataset_size": 37345974}} | 2024-01-10T01:06:56+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "paraphrase_collections_enhanced"

More Information needed | [

"# Dataset Card for \"paraphrase_collections_enhanced\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"paraphrase_collections_enhanced\"\n\nMore Information needed"

] |

7406ce7439851d2ee395c9e0f7bce444d25ae1e8 | # Dataset Card for Indian Sweets

| VishalMysore/Hindi_Mithai | [

"language:hi",

"license:apache-2.0",

"region:us"

] | 2024-01-10T01:37:24+00:00 | {"language": ["hi"], "license": "apache-2.0"} | 2024-01-10T14:56:41+00:00 | [] | [

"hi"

] | TAGS

#language-Hindi #license-apache-2.0 #region-us

| # Dataset Card for Indian Sweets

| [

"# Dataset Card for Indian Sweets"

] | [

"TAGS\n#language-Hindi #license-apache-2.0 #region-us \n",

"# Dataset Card for Indian Sweets"

] |

de4f517a81d79f63d33f4eb01ade2065a7ea397e | # Open-Orca-FLAN-50K-Synthetic-5-Models Dataset Card

### Dataset Summary

The Open-Orca-FLAN-50K-Synthetic-5-Models dataset is a large-scale, synthetic dataset based on 50K filtered examples from [Open-Orca/Flan](https://huggingface.co/datasets/Open-Orca/FLAN). It contains 50,000 examples, each consisting of a prompt, a completion, and the corresponding task. Additionally, it includes model-generated responses from five different models: [ignos-Mistral-T5-7B-v1](https://huggingface.co/ignos/Mistral-T5-7B-v1), [cognAI-lil-c3po](https://huggingface.co/cognAI/lil-c3po), [viethq188-Rabbit-7B-DPO-Chat](https://huggingface.co/viethq188/Rabbit-7B-DPO-Chat), [cookinai-DonutLM-v1](https://huggingface.co/cookinai/DonutLM-v1), and [v1olet-v1olet-merged-dpo-7B](https://huggingface.co/v1olet/v1olet_merged_dpo_7B). This dataset is particularly useful for research in natural language understanding, language model comparison, and AI-generated text analysis.

### Supported Tasks

- **Natural Language Understanding:** The dataset can be used to train models to understand and generate human-like text.

- **Model Comparison:** Researchers can compare the performance of different language models using this dataset.

- **CoE Router Reward Modeling:** The responses from the 5 models can be used to train a routing mechanism that selects a model for a given query.

- **Text Generation:** It's suitable for training and evaluating models on text generation tasks.

### Languages

The dataset is primarily in English.

## Dataset Structure

### Data Instances

A typical data instance comprises the following fields:

- `prompt`: The input prompt (string).

- `completion`: The expected completion of the prompt (string).

- `task`: The specific task or category the example belongs to (string).

- Model-generated responses from five different models, each in a separate field.

### Data Fields

- `prompt`: A string containing the input prompt.

- `completion`: A string containing the expected response or completion to the prompt.

- `task`: A string indicating the type of task.

- `ignos-Mistral-T5-7B-v1`: Model-generated response from ignos-Mistral-T5-7B-v1.

- `cognAI-lil-c3po`: Model-generated response from cognAI-lil-c3po.

- `viethq188-Rabbit-7B-DPO-Chat`: Model-generated response from viethq188-Rabbit-7B-DPO-Chat.

- `cookinai-DonutLM-v1`: Model-generated response from cookinai-DonutLM-v1.

- `v1olet-v1olet-merged-dpo-7B`: Model-generated response from v1olet-v1olet-merged-dpo-7B.
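
To show how these fields might be used together, here is a rough sketch that gathers the five model responses from one row and derives a naive routing label. The column names come from the list above; the helper names and the use of `difflib` string similarity as a stand-in for a real quality score are assumptions for illustration only, not part of the dataset or its intended reward model.

```python
from difflib import SequenceMatcher

# The five response columns listed in the card.
MODEL_COLUMNS = [
    "ignos-Mistral-T5-7B-v1",
    "cognAI-lil-c3po",
    "viethq188-Rabbit-7B-DPO-Chat",
    "cookinai-DonutLM-v1",
    "v1olet-v1olet-merged-dpo-7B",
]


def model_responses(row):
    """Return a dict mapping each model column to its response for this row."""
    return {name: row[name] for name in MODEL_COLUMNS}


def closest_model(row):
    """Hypothetical router label: the model whose response is most similar
    to the reference `completion` (character-level similarity ratio)."""
    def similarity(name):
        return SequenceMatcher(None, row["completion"], row[name]).ratio()

    return max(MODEL_COLUMNS, key=similarity)
```

Rows can be plain dicts, for example those yielded by `load_dataset("kz919/open-orca-flan-50k-synthetic-5-models", split="train")` from the `datasets` library. A real router would likely replace the similarity ratio with a task-aware metric or a learned reward.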

### Data Splits

The dataset is not divided into separate training, validation, and test sets. All 50,000 examples are in a single `train` split, intended for evaluation and comparison purposes.

## Dataset Creation

### Curation Rationale

This dataset was curated to provide a diverse and extensive set of prompts and completions, along with responses from various state-of-the-art language models, for comprehensive evaluation and comparison in language understanding and generation tasks.

### Source Data

#### Initial Data Collection and Normalization

Data was synthetically generated, ensuring a wide variety of prompts, tasks, and model-generated responses.

#### Who are the source language producers?

The prompts and completions are from a known dataset, and the responses are produced by the specified language models.

### Annotations

The dataset does not include manual annotations. The responses are generated by the models listed.

### Personal and Sensitive Information

Since the dataset is synthetic, it does not contain any personal or sensitive information.

## Considerations for Using the Data

### Social Impact of Dataset

This dataset contributes to the advancement of natural language processing by providing a rich source for model comparison and analysis.

### Discussion of Biases

As the dataset is generated by AI models, it may inherit biases present in those models. Users should be aware of this when analyzing the data.

### Other Known Limitations

The effectiveness of the dataset is contingent on the quality and diversity of the synthetic data and the responses generated by the models.

### Licensing Information

Please refer to the repository for licensing information.

### Citation Information

```

@inproceedings{open-orca-flan-50k-synthetic-5-models,

title={Open-Orca-FLAN-50K-Synthetic-5-Models},

author={Kaizhao Liang}

}

``` | kz919/open-orca-flan-50k-synthetic-5-models | [

"task_categories:text-generation",

"size_categories:10K<n<100K",

"language:en",

"license:apache-2.0",

"region:us"

] | 2024-01-10T02:08:52+00:00 | {"language": ["en"], "license": "apache-2.0", "size_categories": ["10K<n<100K"], "task_categories": ["text-generation"], "pretty_name": "synthetic open-orca flan", "dataset_info": {"features": [{"name": "prompt", "dtype": "string"}, {"name": "completion", "dtype": "string"}, {"name": "task", "dtype": "string"}, {"name": "ignos-Mistral-T5-7B-v1", "dtype": "string"}, {"name": "cognAI-lil-c3po", "dtype": "string"}, {"name": "viethq188-Rabbit-7B-DPO-Chat", "dtype": "string"}, {"name": "cookinai-DonutLM-v1", "dtype": "string"}, {"name": "v1olet-v1olet-merged-dpo-7B", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 103557970, "num_examples": 50000}], "download_size": 47451297, "dataset_size": 103557970}, "configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}]} | 2024-01-12T20:37:03+00:00 | [] | [

"en"

] | TAGS

#task_categories-text-generation #size_categories-10K<n<100K #language-English #license-apache-2.0 #region-us

| # Open-Orca-FLAN-50K-Synthetic-5-Models Dataset Card

### Dataset Summary

The Open-Orca-FLAN-50K-Synthetic-5-Models dataset is a large-scale, synthetic dataset based on 50K filtered examples from Open-Orca/Flan. It contains 50,000 examples, each consisting of a prompt, a completion, and the corresponding task. Additionally, it includes model-generated responses from five different models: ignos-Mistral-T5-7B-v1, cognAI-lil-c3po, viethq188-Rabbit-7B-DPO-Chat, cookinai-DonutLM-v1, and v1olet-v1olet-merged-dpo-7B. This dataset is particularly useful for research in natural language understanding, language model comparison, and AI-generated text analysis.

### Supported Tasks

- Natural Language Understanding: The dataset can be used to train models to understand and generate human-like text.

- Model Comparison: Researchers can compare the performance of different language models using this dataset.

- CoE Router Reward Modeling: The responses from the 5 models can be used to train a routing mechanism that selects a model for a given query.

- Text Generation: It's suitable for training and evaluating models on text generation tasks.

### Languages

The dataset is primarily in English.

## Dataset Structure

### Data Instances

A typical data instance comprises the following fields:

- 'prompt': The input prompt (string).

- 'completion': The expected completion of the prompt (string).

- 'task': The specific task or category the example belongs to (string).

- Model-generated responses from five different models, each in a separate field.

### Data Fields

- 'prompt': A string containing the input prompt.

- 'completion': A string containing the expected response or completion to the prompt.

- 'task': A string indicating the type of task.

- 'ignos-Mistral-T5-7B-v1': Model-generated response from ignos-Mistral-T5-7B-v1.

- 'cognAI-lil-c3po': Model-generated response from cognAI-lil-c3po.

- 'viethq188-Rabbit-7B-DPO-Chat': Model-generated response from viethq188-Rabbit-7B-DPO-Chat.

- 'cookinai-DonutLM-v1': Model-generated response from cookinai-DonutLM-v1.

- 'v1olet-v1olet-merged-dpo-7B': Model-generated response from v1olet-v1olet-merged-dpo-7B.

### Data Splits

The dataset is not split into traditional training, validation, and test sets. It contains 50,000 examples in a single batch, designed for evaluation and comparison purposes.

## Dataset Creation

### Curation Rationale

This dataset was curated to provide a diverse and extensive set of prompts and completions, along with responses from various state-of-the-art language models, for comprehensive evaluation and comparison in language understanding and generation tasks.

### Source Data

#### Initial Data Collection and Normalization

Data was synthetically generated, ensuring a wide variety of prompts, tasks, and model-generated responses.

#### Who are the source language producers?

The prompts and completions are from a known dataset, and the responses are produced by the specified language models.

### Annotations

The dataset does not include manual annotations. The responses are generated by the models listed.

### Personal and Sensitive Information

Since the dataset is synthetic, it does not contain any personal or sensitive information.

## Considerations for Using the Data

### Social Impact of Dataset

This dataset contributes to the advancement of natural language processing by providing a rich source for model comparison and analysis.

### Discussion of Biases

As the dataset is generated by AI models, it may inherit biases present in those models. Users should be aware of this when analyzing the data.

### Other Known Limitations

The effectiveness of the dataset is contingent on the quality and diversity of the synthetic data and the responses generated by the models.

### Licensing Information

Please refer to the repository for licensing information.

| [

"# Open-Orca-FLAN-50K-Synthetic-5-Models Dataset Card",

"### Dataset Summary\n\nThe Open-Orca-FLAN-50K-Synthetic-5-Models dataset is a large-scale, synthetic dataset based on 50K filtered examples from Open-Orca/Flan . It contains 50,000 examples, each consisting of a prompt, a completion, and the corresponding task. Additionally, it includes model-generated responses from five different models: ignos-Mistral-T5-7B-v1, cognAI-lil-c3po, viethq188-Rabbit-7B-DPO-Chat, cookinai-DonutLM-v1, and v1olet-v1olet-merged-dpo-7B. This dataset is particularly useful for research in natural language understanding, language model comparison, and AI-generated text analysis.",

"### Supported Tasks\n\n- Natural Language Understanding: The dataset can be used to train models to understand and generate human-like text.\n- Model Comparison: Researchers can compare the performance of different language models using this dataset.\n- CoE Router Reward Modeling: The responses from the 5 models can be used to train the routing mechanism given a query\n- Text Generation: It's suitable for training and evaluating models on text generation tasks.",

"### Languages\n\nThe dataset is primarily in English.",

"## Dataset Structure",

"### Data Instances\n\nA typical data instance comprises the following fields:\n- 'prompt': The input prompt (string).\n- 'completion': The expected completion of the prompt (string).\n- 'task': The specific task or category the example belongs to (string).\n- Model-generated responses from five different models, each in a separate field.",

"### Data Fields\n\n- 'prompt': A string containing the input prompt.\n- 'completion': A string containing the expected response or completion to the prompt.\n- 'task': A string indicating the type of task.\n- 'ignos-Mistral-T5-7B-v1': Model-generated response from ignos-Mistral-T5-7B-v1.\n- 'cognAI-lil-c3po': Model-generated response from cognAI-lil-c3po.\n- 'viethq188-Rabbit-7B-DPO-Chat': Model-generated response from viethq188-Rabbit-7B-DPO-Chat.\n- 'cookinai-DonutLM-v1': Model-generated response from cookinai-DonutLM-v1.\n- 'v1olet-v1olet-merged-dpo-7B': Model-generated response from v1olet-v1olet-merged-dpo-7B.",

"### Data Splits\n\nThe dataset is not split into traditional training, validation, and test sets. It contains 50,000 examples in a single batch, designed for evaluation and comparison purposes.",

"## Dataset Creation",

"### Curation Rationale\n\nThis dataset was curated to provide a diverse and extensive set of prompts and completions, along with responses from various state-of-the-art language models, for comprehensive evaluation and comparison in language understanding and generation tasks.",

"### Source Data",

"#### Initial Data Collection and Normalization\n\nData was synthetically generated, ensuring a wide variety of prompts, tasks, and model-generated responses.",

"#### Who are the source language producers?\n\nThe prompts and completions are from a known dataset, and the responses are produced by the specified language models.",

"### Annotations\n\nThe dataset does not include manual annotations. The responses are generated by the models listed.",

"### Personal and Sensitive Information\n\nSince the dataset is synthetic, it does not contain any personal or sensitive information.",

"## Considerations for Using the Data",

"### Social Impact of Dataset\n\nThis dataset contributes to the advancement of natural language processing by providing a rich source for model comparison and analysis.",

"### Discussion of Biases\n\nAs the dataset is generated by AI models, it may inherit biases present in those models. Users should be aware of this when analyzing the data.",

"### Other Known Limitations\n\nThe effectiveness of the dataset is contingent on the quality and diversity of the synthetic data and the responses generated by the models.",

"### Licensing Information\n\nPlease refer to the repository for licensing information."

] | [

"TAGS\n#task_categories-text-generation #size_categories-10K<n<100K #language-English #license-apache-2.0 #region-us \n",

"# Open-Orca-FLAN-50K-Synthetic-5-Models Dataset Card",

"### Dataset Summary\n\nThe Open-Orca-FLAN-50K-Synthetic-5-Models dataset is a large-scale, synthetic dataset based on 50K filtered examples from Open-Orca/Flan . It contains 50,000 examples, each consisting of a prompt, a completion, and the corresponding task. Additionally, it includes model-generated responses from five different models: ignos-Mistral-T5-7B-v1, cognAI-lil-c3po, viethq188-Rabbit-7B-DPO-Chat, cookinai-DonutLM-v1, and v1olet-v1olet-merged-dpo-7B. This dataset is particularly useful for research in natural language understanding, language model comparison, and AI-generated text analysis.",

"### Supported Tasks\n\n- Natural Language Understanding: The dataset can be used to train models to understand and generate human-like text.\n- Model Comparison: Researchers can compare the performance of different language models using this dataset.\n- CoE Router Reward Modeling: The responses from the 5 models can be used to train the routing mechanism given a query\n- Text Generation: It's suitable for training and evaluating models on text generation tasks.",

"### Languages\n\nThe dataset is primarily in English.",

"## Dataset Structure",

"### Data Instances\n\nA typical data instance comprises the following fields:\n- 'prompt': The input prompt (string).\n- 'completion': The expected completion of the prompt (string).\n- 'task': The specific task or category the example belongs to (string).\n- Model-generated responses from five different models, each in a separate field.",

"### Data Fields\n\n- 'prompt': A string containing the input prompt.\n- 'completion': A string containing the expected response or completion to the prompt.\n- 'task': A string indicating the type of task.\n- 'ignos-Mistral-T5-7B-v1': Model-generated response from ignos-Mistral-T5-7B-v1.\n- 'cognAI-lil-c3po': Model-generated response from cognAI-lil-c3po.\n- 'viethq188-Rabbit-7B-DPO-Chat': Model-generated response from viethq188-Rabbit-7B-DPO-Chat.\n- 'cookinai-DonutLM-v1': Model-generated response from cookinai-DonutLM-v1.\n- 'v1olet-v1olet-merged-dpo-7B': Model-generated response from v1olet-v1olet-merged-dpo-7B.",

"### Data Splits\n\nThe dataset is not split into traditional training, validation, and test sets. It contains 50,000 examples in a single batch, designed for evaluation and comparison purposes.",

"## Dataset Creation",

"### Curation Rationale\n\nThis dataset was curated to provide a diverse and extensive set of prompts and completions, along with responses from various state-of-the-art language models, for comprehensive evaluation and comparison in language understanding and generation tasks.",

"### Source Data",

"#### Initial Data Collection and Normalization\n\nData was synthetically generated, ensuring a wide variety of prompts, tasks, and model-generated responses.",

"#### Who are the source language producers?\n\nThe prompts and completions are from a known dataset, and the responses are produced by the specified language models.",

"### Annotations\n\nThe dataset does not include manual annotations. The responses are generated by the models listed.",

"### Personal and Sensitive Information\n\nSince the dataset is synthetic, it does not contain any personal or sensitive information.",

"## Considerations for Using the Data",

"### Social Impact of Dataset\n\nThis dataset contributes to the advancement of natural language processing by providing a rich source for model comparison and analysis.",

"### Discussion of Biases\n\nAs the dataset is generated by AI models, it may inherit biases present in those models. Users should be aware of this when analyzing the data.",

"### Other Known Limitations\n\nThe effectiveness of the dataset is contingent on the quality and diversity of the synthetic data and the responses generated by the models.",

"### Licensing Information\n\nPlease refer to the repository for licensing information."

] |

e95858d016a7159c9e5cb7273ed3bb8ffef777e7 | # Dataset Card for "nft_prediction_all_with_dates"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | hongerzh/nft_prediction_all_with_dates | [

"region:us"

] | 2024-01-10T02:45:19+00:00 | {"configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}, {"split": "validation", "path": "data/validation-*"}, {"split": "test", "path": "data/test-*"}]}], "dataset_info": {"features": [{"name": "image", "dtype": "image"}, {"name": "label", "dtype": "float64"}, {"name": "time", "dtype": "float64"}, {"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 5747708188.67, "num_examples": 29339}, {"name": "validation", "num_bytes": 1910519375.185, "num_examples": 9777}, {"name": "test", "num_bytes": 2129490317.38, "num_examples": 9780}], "download_size": 9022605212, "dataset_size": 9787717881.235}} | 2024-01-10T03:51:44+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "nft_prediction_all_with_dates"

More Information needed | [

"# Dataset Card for \"nft_prediction_all_with_dates\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"nft_prediction_all_with_dates\"\n\nMore Information needed"

] |

84892d5711e2f6996463bb5a9941f47bd21e96c1 | # tokenspace directory

This directory contains utilities for the purpose of browsing the

"token space" of CLIP ViT-L/14

Primary tools are:

* "calculate-distances.py": allows command-line browsing of words and their neighbours

* "graph-embeddings.py": plots graph of full values of two embeddings

## (clipmodel,cliptextmodel)-calculate-distances.py

Loads the generated embeddings, reads in a word, calculates "distance" to every

embedding, and then shows the closest "neighbours".

Running this requires the files "embeddings.safetensors" and "dictionary",

in matching format.

You will need to rename or copy appropriate files for this as mentioned below.

Note that SD models use cliptextmodel, NOT clipmodel
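
The neighbour lookup described above can be sketched roughly as follows. This is a minimal illustration, not the actual script: it assumes plain Euclidean distance (the README only says "distance"), and it uses a tiny in-memory word list and made-up 3-dimensional vectors in place of the real "dictionary" and "embeddings.safetensors" files. The function name `nearest_neighbours` is hypothetical.

```python
import math


def nearest_neighbours(word, dictionary, embeddings, k=3):
    """Return the k (word, distance) pairs closest to `word`.

    `dictionary` and `embeddings` must be aligned: row i of
    `embeddings` is the vector for `dictionary[i]`.
    """
    target = embeddings[dictionary.index(word)]
    scored = [
        (math.dist(target, vec), other)  # Euclidean distance (an assumption)
        for other, vec in zip(dictionary, embeddings)
        if other != word
    ]
    scored.sort()
    return [(other, dist) for dist, other in scored[:k]]


# Toy stand-ins for the real files; the values are invented for illustration.
dictionary = ["cat", "kitten", "dog", "car"]
embeddings = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.5, 0.5, 0.0],
    [0.0, 0.0, 1.0],
]

for other, dist in nearest_neighbours("cat", dictionary, embeddings):
    print(f"{other}: {dist:.3f}")
```

With the real data, each row would have 768 values and the lookup would run over every word in the dictionary.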

## graph-textmodels.py

Shows the difference between the same word, embedded by CLIPTextModel

vs CLIPModel

## graph-embeddings.py

Run the script. It will ask you for two text strings.

Once you enter both, it will plot the graph and display it for you

Note that this tool does not require any of the other files; just that you

have the requisite python modules installed. (pip install -r requirements.txt)

### embeddings.safetensors

You can either copy one of the provided files, or generate your own.

See generate-embeddings.py for that.

Note that you must always use the "dictionary" file that matches your embeddings file.
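
One way to guard against a mismatched pair is a quick sanity check before doing any lookups. The sketch below is only an illustration: `check_alignment` is a hypothetical helper, not part of this directory, and it works on plain Python lists rather than on a loaded safetensors tensor. It can only catch size mismatches; two files with the same number of rows but different word order would still pass.

```python
def check_alignment(words, embeddings, dim=768):
    """Raise ValueError if the word list and embedding rows cannot match.

    Expects one embedding row per dictionary word, each of length `dim`.
    """
    if len(words) != len(embeddings):
        raise ValueError(
            f"dictionary has {len(words)} words but embeddings has "
            f"{len(embeddings)} rows"
        )
    for index, row in enumerate(embeddings):
        if len(row) != dim:
            raise ValueError(f"row {index} has {len(row)} values, expected {dim}")


# Toy data: two words, two rows of 768 zeros each.
check_alignment(["cat", "dog"], [[0.0] * 768, [0.0] * 768])
```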

### embeddings.allids.safetensors

DO NOT USE THIS ONE for programs that expect a matching dictionary.

This one is purely numeric based.

Its intention is more for research datamining, but it does have a matching

graph front end, graph-byid.py

### dictionary

Make sure to always use the dictionary file that matches your embeddings file.

The "dictionary.fullword" file is pulled from fullword.json, which is distilled from "full words"

present in the ViT-L/14 CLIP model's provided token dictionary, called "vocab.json".

Thus there are only around 30,000 words in it

If you want to use the provided "embeddings.safetensors.huge" file, you will want to use the matching

"dictionary.huge" file, which has over 300,000 words

This huge file comes from the linux "wamerican-huge" package, which delivers it under

/usr/share/dict/american-english-huge

There also exists an "american-insane" package.

## generate-embeddings.py

Generates the "embeddings.safetensors" file, based on the "dictionary" file present.

Takes a few minutes to run, depending on size of the dictionary

The shape of the embeddings tensor is

[number-of-words][768]

Note that yes, it is possible to directly pull a tensor from the CLIP model,

using keyname of text_model.embeddings.token_embedding.weight

This will NOT GIVE YOU THE RIGHT DISTANCES!

This is why we calculate and then store the embeddings actually

generated by the CLIP process.

## fullword.json

This file contains a collection of "one word, one CLIP token id" pairings.

The file was taken from vocab.json, which is part of multiple SD models in huggingface.co

The file was optimized for what people are actually going to type as words.

First all the non-(/w) entries were stripped out.

Then all the garbage punctuation and foreign characters were stripped out.

Finally, the actual (/w) was stripped out, for ease of use.
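
The three filtering steps above could look roughly like this. It is a sketch under stated assumptions, not the script that produced fullword.json: it assumes "(/w)" refers to the `</w>` end-of-word suffix used in CLIP's vocab.json, it approximates "garbage punctuation and foreign characters" as "anything that is not an ASCII letter", and the token ids in the toy vocabulary are invented. The name `distill_full_words` is hypothetical.

```python
import json

END_OF_WORD = "</w>"  # assumption: the marker the README writes as "(/w)"


def distill_full_words(vocab):
    """Keep only whole-word tokens made of ASCII letters, minus the suffix."""
    full_words = {}
    for token, token_id in vocab.items():
        # Step 1: drop tokens that are not marked as a complete word.
        if not token.endswith(END_OF_WORD):
            continue
        # Step 3: strip the marker itself.
        word = token[: -len(END_OF_WORD)]
        # Step 2: drop punctuation and non-ASCII entries (approximation).
        if word.isascii() and word.isalpha():
            full_words[word] = token_id
    return full_words


# Toy vocabulary; the ids are made up for illustration.
toy_vocab = {
    "cat</w>": 101,
    "ca": 102,
    "!</w>": 103,
    "über</w>": 104,
    "dog</w>": 105,
}

print(json.dumps(distill_full_words(toy_vocab)))
```

Only the entries for "cat" and "dog" survive here: "ca" is a word fragment, "!" is punctuation, and "über" contains a non-ASCII character.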

| ppbrown/tokenspace | [

"region:us"

] | 2024-01-10T02:54:05+00:00 | {} | 2024-01-26T04:25:37+00:00 | [] | [] | TAGS

#region-us

| # tokenspace directory

This directory contains utilities for the purpose of browsing the

"token space" of CLIP ViT-L/14

Primary tools are:

* "URL": allows command-line browsing of words and their neighbours

* "URL": plots graph of full values of two embeddings

## (clipmodel,cliptextmodel)-URL

Loads the generated embeddings, reads in a word, calculates "distance" to every

embedding, and then shows the closest "neighbours".