text

stringlengths 226

34.5k

|

|---|

Rock, Paper, Scissor, Spock, Lizard in python, Player 2 automatically wins

Question: for an exercise we need to recreate the game played by the members of the

bigbang theory: Rock, Paper, Scissor, Spock, Lizard. I managed to recreate it

almost completely, the only problem is: Player 2 automatically wins. Can

someone tell me where I need to change the code and also explain why?

import sys

t = len(sys.argv)

if(t < 2 or t > 3):

print("Usage: rpsls.py symbool1 symbool2")

exit()

i = 1

while (i > 0):

a = sys.argv[1]

b = sys.argv[2]

a = a.lower()

b = b.lower()

if(a != "rock" and a != "paper" and a != "scissor" and a != "lizard" and a != "spock"):

print("What's that? please use a real symbol!")

elif(b != "rock" and b != "paper" and b != "scissor" and b != "lizard" and b != "spock"):

print("What's that? please use a real symbol!")

else:

if (a == "paper" and b == "scissor"):

s = True

i = 0

else:

s = False

i = 0

if(a == "paper" and b == "rock"):

s = True

i = 0

else:

s = False

i = 0

if(a == "rock" and b == "lizard"):

s = True

i = 0

else:

s = False

i = 0

if(a == "lizard" and b == "spock"):

s = True

i = 0

else:

s = False

i = 0

if(a == "spock" and b == "scissors"):

s = True

i = 0

else:

s = False

i = 0

if(a == "scissor" and b == "lizard"):

s = True

i = 0

else:

s = False

i = 0

if(a == "lizard" and b == "paper"):

s = True

i = 0

else:

s = False

i = 0

if(a == "paper" and b == "spock"):

s = True

i = 0

else:

s = False

i = 0

if(a == "spock" and b == "rock"):

s = True

i = 0

else:

s = False

i = 0

if(a == "rock" and b == "scissor"):

s = True

i = 0

else:

s = False

i = 0

if(a == b):

print("It's a tie!")

i = 0

exit()

if(s == True):

print("Player 1 wins!")

if(s == False):

print("Player 2 wins!")

Answer: Each of your if statements has an else. Only one of the if statements can be

true, so that means that all the other else statements are evaluated. The

result of that is that the last else statement - which sets s to False - will

"win", so player 2 wins.

You should drop all your else statements, and restructure your code as a

series of `if...elif...` blocks:

if a == "paper" and b == "scissor":

s = True

i = 0

elif a == "paper" and b == "rock":

(Note, if conditions don't need parentheses.)

|

Linking library in python

Question: I want to use the Cantera library in python. I have been using it for C++ and

I am linking my adding these couple lines to my makefile:

CANT_LIB = $HOME/usr/local/Cantera201/lib/

CANT_INC = $HOME/usr/local/Cantera201/include/ -I $HOME/usr/local/Cantera201/include/cantera \

with `CANT_LIB` and `CANT_INC` being called when compiling.

I have very limited experience with python. Is there an equivalent to linking

libraries in python? I have tried adding the cantera path to `PYTHONPATH` but

it did not work. I am working on a Linux server on which I do not have access

to super user and `python 2.6.6`.

Answer: You need to install Cantera's Python module to use it, the raw C/C++ libraries

aren't enough. If you install using [the directions on their

website](http://www.cantera.org/docs/sphinx/html/install.html) it should be

installed to the appropriate Python `site-packages` directory automatically,

and [available for use with just `import

cantera`](http://www.cantera.org/docs/sphinx/html/cython/migrating.html#importing-

the-python-module).

|

Broke Python on Mac by uninstalling Python wrong, how to get Modules to work again?

Question: I'm trying to use Python with the Twisted framework, and have been struggling

to get it running.

I've got some dirt simple python code:

from twisted.internet import reactor

reactor.run()

Buy when I run `python server.py` I get back:

>

> File "server.py", line 1, in <module>

> from twisted.internet import reactor File

> "/Library/Python/2.7/site-packages/twisted/__init__.py", line 53, in

> <module>

> _checkRequirements() File "/Library/Python/2.7/site-

> packages/twisted/__init__.py", line 37, in

> _checkRequirements

> raise ImportError(required + ": no module named zope.interface.")

> ImportError: Twisted requires zope.interface 3.6.0 or later: no module

> named zope.interface.

>

My first assumption was to run `pip install zope.interface`

Unfortunately, all I get from this is:

>

> Requirement already satisfied (use --upgrade to upgrade):

> zope.interface in

>

> /System/Library/Frameworks/Python.framework/Versions/2.7/Extras/lib/python

> Requirement already satisfied (use --upgrade to upgrade): setuptools

> in

>

> /System/Library/Frameworks/Python.framework/Versions/2.7/Extras/lib/python

> (from zope.interface)

>

I also tried `easy_install zope.interface`

But that yields similar results:

>

> Searching for zope.interface

> Best match: zope.interface 4.1.1

> zope.interface 4.1.1 is already the active version in easy-install.pth

>

> Using

> /System/Library/Frameworks/Python.framework/Versions/2.7/Extras/lib/python

> Processing dependencies for zope.interface

> Finished processing dependencies for zope.interface

>

Since apparently zope is installed on my machine, I'm thinking that the

problem could possibly be with the python I've got installed. I installed

Python3 with brew earlier this year, and I think I may have messed up the

python configuration. Any ideas?

**Update** The default python that is on mac should work for everything I was

trying to do above, I verified this on another mac computer. The problem I was

having was that I had corrupted my default python image that came with my mac.

By following the post below I was able to create working virtualenv again for

my python.

Answer: You should do all of your Python work in a

[virtualenv](https://virtualenv.readthedocs.org/en/latest/). If you were to

make a fresh virtualenv for your Twisted development, and always activate it

before working on it, you would have much tighter control over what gets

installed and it would be a lot easier to diagnose problems. So please make a

new virtualenv and `pip install twisted` in it, and then this problem should

go away.

|

Finding the mode of a list using ONLY loops and creating lists in python

Question: I have been trying to figure out how to find the mode of a list **WITHOUT**

using sorting functions nor importing any modules for quite awhile now...

Here's what I have so far:

d = {}

def mode():

for i in mode:

if i in d:

d[i] += 1

else:

d[i] = 1

return

How do I find the output such as the following:

print(mode([1, 5, 6, 1, 1, 2]))

[1]

print(mode([5, 6, 7, 8, 9]))

[5, 6, 7, 8, 9]

Thanks a bunch!

Answer: You need to count the occurrences in your dict and extract the `max` based on

the value returning the list itself if there is no mode.

def mode(l):

d= {}

for i in l:

d.setdefault(i, 0)

d[i] += 1

mx = max(d,key=d.get)

return d[mx] if d[mx] > 1 else l

|

BioPython AlignIO ValueError says strings must be same length?

Question: Input fasta-format text file:

<http://www.jcvi.org/cgi-

bin/tigrfams/DownloadFile.cgi?file=/opt/www/www_tmp/tigrfams/fa_alignment_PF00205.txt>

#!/usr/bin/python

from Bio import AlignIO

seq_file = open('/path/to/fa_alignment_PF00205.txt')

alignment = AlignIO.read(seq_file, "fasta")

Error:

ValueError: Sequences must all be the same length

The input sequences shouldn't have to be the same length since on ClustalOmega

you can align sequences of differing lengths.

This also doesn't work...gets the same error:

alignment = AlignIO.parse(seq_file,"fasta")

for record in alignment:

print(record.id)

**Does anybody who is familiar with BioPython know how to get around this to

align sequences from fasta files?**

Answer: Pad the sequence that is too short and write the records to to a temporary

FASTA file. Than your alignments works as expected:

from Bio import AlignIO

from Bio import SeqIO

from Bio import Seq

import os

input_file = '/path/to/fa_alignment_PF00205.txt'

records = SeqIO.parse(input_file, 'fasta')

records = list(records) # make a copy, otherwise our generator

# is exhausted after calculating maxlen

maxlen = max(len(record.seq) for record in records)

# pad sequences so that they all have the same length

for record in records:

if len(record.seq) != maxlen:

sequence = str(record.seq).ljust(maxlen, '.')

record.seq = Seq.Seq(sequence)

assert all(len(record.seq) == maxlen for record in records)

# write to temporary file and do alignment

output_file = '{}_padded.fasta'.format(os.path.splitext(input_file)[0])

with open(output_file, 'w') as f:

SeqIO.write(records, f, 'fasta')

alignment = AlignIO.read(output_file, "fasta")

print alignment

This outputs:

SingleLetterAlphabet() alignment with 104 rows and 275 columns

TKAAIELIADHQ.......LTVLADLLVHRLQ..AVKELEALLA...QAL SP|A2VGF0.1/208-339

LQELASVINQHE...KV..MLFCGHGCR...Y..AVEEVMALAK...EDL SP|A3D4X6.1/190-319

IKKIAQAIEKAK...KP..VICAGGGVINS.N..ASEELLTLSR...KEL SP|A3DID9.1/192-327

IDEAAEAINKAE...RP..VILAGGGVSIA.G..ANKELFEFAT...QLL SP|A3DIY4.1/192-327

IEKAIELINSSQ...RP..FICSGGGVISS.E..ASEELIQFAE...KIL SP|A4XHS0.1/191-326

IKRAVEAIENSQ...RP..VICSGGGVIAS.R..ASDELKILVE...SEI SP|A4XIL5.1/194-328

VRQAARIIMESE...RP..VIYAGGGVRIS.G..AAPELLELSE...RAL SP|A5D4V9.1/192-327

LQALAQRILRAQ...RP..VIITGDEIVKS.D..ALQAAADFAS...LQL SP|A5ECG1.1/192-328

VEKAVELLWSAR...RV..LVISGRGAR...G..AGPELIGLLD...RAM SP|A5EDH4.1/198-324

IQKAARLIETAE...KP..VIIAGHGVNIS.G..ANEELKTLAE...KSL SP|A5FR34.1/193-328

LDALARDLDSAA...RV..TIYAGIGAR...G..AAARVVQLAG...EAL SP|A5FTR0.1/189-317

VADVAALLRAAR...RP..VIVAGGGVIHSG...AEERLATFAA...DAL SP|A5G0X6.1/217-351

IAEAVSALKGAK...RP..IIYTGGGLINS.GPESAELIVQLAK...RAL SP|A5G2E1.1/199-336

LKKAAEIINRAK...RP..LIYAGGGITLA.G..ASAELRALAA...ALL SP|A5GC69.1/192-327

CRDIVGKLLQSH...RP..VVLGGTGVRLS.R..TEQRLLALVE...DVF SP|A5W0I1.1/200-336

LDQAALKLAAAE...RP..MIIAGGGA..L.H..AAEQLAQLSA...AGL SP|A5W220.1/196-326

LQRAADILNTGH...KV..AILVGAGAL...Q..ATEQVIAIAE...RAL SP|A5W364.1/198-328

IRKAAEMLLAAK...RP..VVYSGGGVILG.G..GSEALTEIAK...SEM SP|A5W954.1/196-331

...

LTELQERLANAQ...RP..VVILGGSRWSD.A..AVQQFTRFAE...... SP|Q220C3.1/190-328

|

GAE/P transaction using static method

Question: I'm trying to figure out how to organize app engine code with transactions.

Currently I have a separate python file with all my transaction functions. For

transactions that are closely related to entities, I was wondering if it made

sense to use a `@staticmethod` for the transaction.

Here is a simple example:

class MyEntity(ndb.Model):

n = ndb.IntegerProperty(default=0)

@staticmethod

@ndb.transactional # does the order of the decorators matter?

def increment_n(my_entity_key):

my_entity = my_entity_key.get()

my_entity.n += 1

my_entity.put()

def do_something(self):

MyEntity.increment_n(self.key)

It would be nice to have `increment_n` associated with the entity definition,

but I have never seen anyone do this so I was wondering if this would be a bad

idea.

MY SOLUTION:

Following Brent's answer, I've implemented this:

class MyEntity(ndb.Model):

n = ndb.IntegerProperty(default=0)

@staticmethod

@ndb.transactional

def increment_n_transaction(my_entity_key):

my_entity = my_entity_key.get()

my_entity.increment_n()

def increment_n(self):

self.n += 1

self.put()

This way I can keep entity related code all in one place and I can easily use

the transactional version or not as needed.

Answer: Yes, it makes sense to use a `@staticmethod` in this case, since the function

doesn't use a class or an instance (`self`).

And yes, the order of decorators is important, as noted in @Kekito's later

answer.

|

Time Looping Python

Question: How can I write code to loop in time python? I want this code loop 10 minutes

in python.

msList =[]

msg = str(raw_input('Input Data :'))

msgList.append(msg)

I do not wanna use `crontab` because I want this code looping in my program.

Answer: Use the `sleep` function from the `time` module. This should do what you're

asking:

from time import sleep

msList =[]

while True:

msg = str(raw_input('Input Data :'))

msgList.append(msg)

sleep(600)

|

Insert into MySQl database after reading csv file?

Question: I have a csv file like this:

[email protected], 01-05-2014

[email protected], 01-05-2014

[email protected], 01-05-2014

[email protected], 01-05-2014

I need to read the above csv file and extract domain name and also the count

of emails address by domain name and date as well. All these things I need to

insert into MySQL database but somehow I am stuck how to insert into MySQL

database after iterating the list I got.

Query will be like this:

INSERT INTO domains(domain_name, cnt, date_of_entry) VALUES (%s, %s, %s);

Below is the code

#!/usr/bin/python

import fileinput

import csv

import os

import sys

import MySQLdb

from collections import defaultdict

lst = defaultdict(list)

d_lst = defaultdict(list)

# ======================== Defined Functions ======================

def get_file_path(filename):

currentdirpath = os.getcwd()

# get current working directory path

filepath = os.path.join(currentdirpath, filename)

return filepath

# ===========================================================

def read_CSV(filepath):

domain_list = []

domain_date_list = []

sorted_domain_list_bydate = defaultdict(list)

with open(filepath, 'rb') as csvfile:

reader = csv.reader(csvfile)

for row in reader:

# insert the 1st & 2nd column of the CSV file into a set called input_list

email = row[0].strip().lower()

date = row[1].strip()

domain_date_list.append([date, email[ email.find("@") : ]])

domain_list.append(email[ email.find("@") : ])

for k, v in domain_date_list:

sorted_domain_list_bydate[k].append(v)

# remove duplicates from domain list

domain_list = list(set(domain_list))

return sorted_domain_list_bydate, domain_list

# ===========================================================

def update_DB(lst):

# open a database connection

db = MySQLdb.connect(host="localhost", # your host, usually localhost

user="root", # your username

passwd="abcdef1234", # your password

db="test") # name of the data base

cur = db.cursor()

a = []

for k, v in lst.items():

# now what should I do here?

# this is what I am confuse

db.commit()

db.close()

# ==========================================================

# ======================= main program =======================================

path = get_file_path('emails.csv')

[lst, d_lst] = read_CSV(path) # read the input file

update_DB(lst) # insert data into domains table

I am confuse in `update_DB` method.

Answer: I am not sure why you have such a complicated program for a simple task. Lets

start from the top:

1. You need to first organize your data correctly by domain, date and then count.

import csv

from collections import defuaultdict, Counter

domain_counts = defaultdict(Counter)

with open('somefile.csv') as f:

reader = csv.reader(f)

for row in reader:

domain_counts[row[0].split('@')[1].strip()][row[1]] += 1

2. Next, you need to insert each _row_ correctly in the database:

db = MySQLdb.connect(...)

cur = db.cursor()

q = 'INSERT INTO domains(domain_name, cnt, date_of_entry) VALUES(%s, %s, %s)'

for domain, data in domain_counts.iteritems():

for email_date, email_count in data.iteritems():

cur.execute(q, (domain, email_count, email_date))

db.commit()

* * *

As your dates are not being inserted correctly, try this updated query

instead:

q = """INSERT INTO

domains(domain_name, cnt, date_of_entry)

VALUES(%s, %s, STR_TO_DATE(%s, '%d-%m-%Y'))"""

|

Python connection to Oracle database

Question: I am writing a Python script to fetch and update some data on a remote oracle

database from a Linux server. I would like to know how can I connect to remote

oracle database from the server.

Do I necessarily need to have an oracle client installed on my server or any

connector can be used for the same?

And also if I use `cx_Oracle` module in Python, is there any dependency that

has to be fulfilled for making it work?

Answer: You have to Install Instance_client for cx_oracle driver to interact with

remote oracle server

<http://www.oracle.com/technetwork/database/features/instant-

client/index-097480.html>.

Use SQLAlchemy (Object Relational Mapper) to make the connection and interact

with Oracle Database.

The below code you can refer for oracle DB connection.

> > > from sqlalchemy import create_engine

>>>

>>> from sqlalchemy.orm import sessionmaker

>>>

>>> engine = create_engine('oracle+cx_oracle://test_user:test_user@ORACSG')

>>>

>>> session_factory = sessionmaker(bind=engine, autoflush=False)

>>>

>>> session = session_factory()

>>>

>>> res = session.execute("select * from emp");

>>>

>>> print res.fetchall()

|

Why Response.Cookies is empty?

Question: When I run this python script

import requests

main_page_request = requests.get("http://carkit.kg/")

cookie = main_page_request.cookies.get("csrftoken", "")

I'm getting proper result, but when I run this code at C#:

string url = @"http://carkit.kg";

HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);

HttpWebResponse response = (HttpWebResponse)request.GetResponse();

Debug.Log(response.Cookies["csrftoken"]); // prints "Null"

it says that response.Cookies is empty. What is the problem?

Answer: You have to add a cookie container to the request. Then it returns the cookie:

CookieContainer c = new CookieContainer();

HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);

request.CookieContainer = c;

HttpWebResponse response = (HttpWebResponse)request.GetResponse();

Console.WriteLine(response.Cookies["csrftoken"]);

// prints "csrftoken=E1iRIi7cQvxvJcnSgOgaEP3XPxTHRUfT"

|

sending data using post in python to php with variable

Question: I have this script and I want to replace the date `2015-05-01` (from and to)

with the current date. I am thinking of using **import datetime** and

something like this (I am new to python):

from StringIO import StringIO

import urllib

import urllib2

url = 'http://fme.discomap.eea.europa.eu/fmedatastreaming/AirQuality/AirQualityUTDExport.fmw'

data = "POSTDATA=FromDate=2015-05-01&ToDate=2015-06-01&Countrycode=&InsertedSinceDate=&UpdatedSinceDate=&Pollutant=PM10&Namespace=&Format=XML&UserToken= "

req = urllib2.Request(url, data)

response = urllib2.urlopen(req)

the_page = response.read()

print the_page

**New script**

from StringIO import StringIO

import urllib

import urllib2

import datetime

i = datetime.datetime.now() // gets the date

url = 'http://fme.discomap.eea.europa.eu/fmedatastreaming/AirQuality/AirQualityUTDExport.fmw'

data = "POSTDATA=FromDate="i&ToDate="i"&Countrycode=&InsertedSinceDate=&UpdatedSinceDate=&Pollutant=PM10&Namespace=&Format=XML&UserToken=" //i replaced the fixed date with the variable i

req = urllib2.Request(url, data)

response = urllib2.urlopen(req)

the_page = response.read()

print the_page

Answer: I don't mean to sound harsh, but being new to a language is no excuse for not

learning the language's syntax (quite on the contrary). This line:

data = "POSTDATA=FromDate="i&ToDate="i"&Countrycode=&InsertedSinceDate=&UpdatedSinceDate=&Pollutant=PM10&Namespace=&Format=XML&UserToken="

is obviously broken and raises a SyntaxError:

bruno@bigb:~/Work/playground$ python

Python 2.7.3 (default, Jun 22 2015, 19:33:41)

>>> data = "POSTDATA=FromDate="i&ToDate="i"&Countrycode=&InsertedSinceDate=&UpdatedSinceDate=&Pollutant=PM10&Namespace=&Format=XML&UserToken="

File "<stdin>", line 1

data = "POSTDATA=FromDate="i&ToDate="i"&Countrycode=&InsertedSinceDate=&UpdatedSinceDate=&Pollutant=PM10&Namespace=&Format=XML&UserToken="

^

SyntaxError: invalid syntax

>>>

In this statement the rhs expression actually begins with :

* `"POSTDATA=FromDate="` which is a legal literal string

* `i&ToDate` which is parsed as "`i`" (identifier) "`&`" (operator) "`ToDate`" (identifier)

The mere juxtaposition of a literal string and an identifier (without an

operator) is actually illegal:

bruno@bigb:~/Work/playground$ python

Python 2.7.3 (default, Jun 22 2015, 19:33:41)

>>> i = 42

>>> "foo" i

File "<stdin>", line 1

"foo" i

^

SyntaxError: invalid syntax

>>>

Obviously what you want here is string concatenation, which is expressed by

the `add` ("`+`") operator, so it should read:

"POSTDATA=FromDate=" + i

Then since "&ToDate" is supposed to be a string literal instead of an operator

and a variable you'd have to quote it:

"POSTDATA=FromDate=" + i + "&ToDate="

Then concatenate the current date again:

"POSTDATA=FromDate=" + i + "&ToDate=" + i + "etc..."

Now in your code `i` (not how I would have named a date BTW but anyway) is a

`datetime` object, not a string, so now you'll get a `TypeError` because you

cannot concatenate a string with anything else than a string (hopefully - it

wouldn't make any sense).

FWIW what you want here is not a `datetime` object but the textual ("string")

representation of the date in the "YYYY-MM-DD" format. You can get this from

the `datetime` object using it's `strftime()` method:

today = datetime.datetime.now().strftime("%Y-%m-%d)

Now you have a string that you can concatenate:

data = "POSTDATA=FromDate=" + today + "&ToDate=" + today + "etc..."

This being said:

* this kind of operation is usually done using [string formatting](https://docs.python.org/2/library/string.html#string-formatting)

* and as Ekrem Dogan mentionned, the simplest solution here is to use a higher-level package that will take care of all the boring details of HTTP requests - `requests` being the de facto standard.

|

Killing a Python program using ctrl C

Question: I have an assignment in school which i can't get around and i'm stuck with.

the assignment is to build a program that infinitly spews out random numbers

in a EasyGUI messagebox ( Yeah i know EasyGUI is old xD )

this is my source code:

import easygui

while True:

easygui.msgbox(random.randint(-100, 100))

The problem is that when i run this i can't get out of it. I should be allowed

to use ctrl+C but that doesn't work. Am i missing something?

Thank you in advance!

Answer: using signalhandlers does not seem to be a trivial task when it comes to

easygui, if you can work with quitting when `x` is pressed you can do the

following:

while True:

e = easygui.msgbox(random.randint(-100, 100))

if e is None:

break

`e` will either be a string `"OK"` if you press ok or None if `x` is pressed

so it is probably the simplest way to quit and end the loop.

|

Unable to run python script from shell but able to run it from eclipse(PyDev)

Question: Python version:2.7 OS: CentOS

I have a python project with multiple files spread across different

directories. I am able to run this through Eclipse(PyDev). However I am unable

to run it from linux shell.

The directory structure looks like this:

Projectrepo

|

|

__|__

src conf

| |

| |

buildexec.py |

|

script_variables, list_of_scripts

`buildexec.py` is my main script. `script_variables` and `list_of_scripts` are

two modules which I am referencing from buildexec.py.

I have included `from conf.script_variables import *` in my main script and it

is working fine when I run it on eclipse. But, when I try to run it on shell,

I get an error

`'Traceback (most recent call last): File "buildexec.py", line 6, in <module>

from conf.script_variables import * ImportError: No module named

conf.script_variables'`

I have added PYTHONPATH=/usr/bin/python2.7 and have exported it..

Also, in the main script, I have added

`sys.path.append('/home/tejas/Projectrepo/conf')` before importing the

modules.

Answer: Was a simple solution! My pythonpath was pointing to the default directory

/usr/bin/python2.7. I added the location of the user defined modules also in

the python path using `export

PYTHONPATH=/usr/bin/python2.7:/home/tejas/Projectrepo/conf`

|

write chinese words in csv file using python2.7

Question: I am trying to write Chinese words like 花花公子昊天鞋类专营店 in a CSV file in python,

but not able to do it. I tried solution given here("[issues with writing

Chinese to csv file in

Python](http://stackoverflow.com/questions/29442448/issues-with-writing-

chinese-to-csv-file-in-python)"). Any help will be appreciated.

Answer: The module `unicodecsv` helps with that (you can install that with **pip**):

import unicodecsv

w = unicodecsv.writer(open("test.csv", "w"))

w.writerow((u"花花公子昊天鞋类专营店", 78.10))

del w

The resulting csv file opens succesfully in OpenOffice.

You can also read it back in Python:

r = unicodecsv.reader(open("test.csv", "rb"))

for row in r:

print row[0], row[1]

And when run, it should print:

(user@motoom) ~/Prj/python $ python chinesecsv.py

花花公子昊天鞋类专营店 78.1

|

Parse lines into individual segments - python

Question: I'm new to python and having issues working with a text file. The text file

structure being used is shown. What I'm trying to do is first split the two

polylines into their own variable and then split each variable into individual

coordinates. The end goal is to have it structured as:

polyline 1:

[###, ###] [###, ###]

polyline 2:

[###, ###] [###, ###]

Text file structure:

Polyline;

1: ###,###; ###,###

2: ###,###; ###,###; ###,###

The code I've tried is just working with a single line. While I've been able

to split the single line, I have not been able to move to the next step which

is to split the line further.

f=open('txt.txt', 'r')

pl = []

for line in f.read().split('\n'):

if (line.find('1: ') !=-1):

ln = line.split('1: ')

print ln

f.close()

What is the best way to split the line to the end state?

Answer: First of all you can use `with ... as` statement to open a file which will

close the file at the end of block , secondly you don't have to read the file

and split with `\n` just use a for loop to loop over your file object.

Also for checking the start with digit number you can us regex and in this

case you can use `re.match` function, then you can split the line with `;` and

using a list comprehension split another parts with `,` :

import re

with open('txt.txt') as f:

for line in f:

if re.match(r'\d:.*',line):

ln = [var.split(',') for var in line.split(';')]

print ln

|

Mongodb lack of precision incrementing floats

Question: I have a problem because Mongodb doesn't seem to maintain precision when

incrementing floats. For example, the following should yield `2.0`:

from decimal import Decimal # for python precision

for i in range(40):

db.test.update({}, {'$inc': {'count': float(Decimal(1) / 20)}}, upsert=True)

print db.test.find_one()['count']

2.000000000000001

How can I get around this issue?

Answer: Unfortunately, you can't -- at least not directly. Mongo stores floating-point

numbers as double-precision IEEE floats

(<https://en.wikipedia.org/wiki/IEEE_floating_point>), and those rounding

errors are inherent to the format.

I'm noticing you're using Decimals in your code -- they're converted to Python

floats (which are doubles) before being sent to the DB. If you want to keep

your true decimal precision, you'll have to store your numbers as stringified

Decimals, which means you'll also have to give up Mongo's number-handling

facilities such as `$inc`.

It is, sadly, a tradeoff you'll be confronted to in most databases and

programming languages: IEEE floating-point numbers is the format CPUs natively

deal with, and any attempts to stray away from them (to use arbitrary-

precision decimals like `decimal.Decimal`) come with a big performance and

usability penalty.

|

how to fetch all the documents of the mongodb collection in python

Question: I want to fetch all the documents of the some collection of MongoDB and print

certain attributes within each document. But I am unable to do so.

My code:

import csv

from pymongo import MongoClient

db_client = MongoClient()

db = db_client.pg_new_sw_cur

db_collection_users = db.pg_new_sw

#with open("pg_details_new.csv",'w') as f:

for post in db_collection_users.find():

print post['user_name']

print "==========================="

It is printing nothing on the screen after running it when the collection

consists of thousands of objects/documents when viewed using robomongo.

I am new to MongoDB. So, please help me.

Answer: It would be a wild guess, but I suspect that you have specified an incorrect

collection name:

db_collection_users = db.pg_new_sw

Recheck that `pg_new_sw` actually exists in your local `MongoDB` server

instance and contains documents inside.

|

Unique Template Class without __LINE__ or __COUNTER__ Macros

Question: First, let me start with what I'm trying to do. I'm working on a library that

makes embedding a Python interpreter in C++ code a bit easier, and I'd like to

leverage some C++11 features. I'm using std::functions quite heavily, which is

a problem since Python makes heavy use of classic function pointers.

I've been using fredbaba's solution found at

<http://stackoverflow.com/a/18422878>

which may not be a good idea, but the reasoning seems sound; for every

std::function you'd like a pointer too, you need to create some class or

struct with a static function that invokes the std::function of interest, and

any class function pointers can point to the static function.

However, that means you've got to ensure every struct created is unique; the

poster from that thread uses a unique integer as an identifier, which is

cumbersome. In my code I use the LINE macro (COUNTER never seems to work), but

that of courses forces me to put everything in one file to avoid line number

conflicts.

I've found similar questions asked but none of them really do it; in order for

this to work the identifier has to be a compile time constant, and many of the

solutions I've found fail in this regard.

Is this possible? Can I cheat the system and get pointers to my

std::functions? If you're wondering why I need to... I take a std::function

that wraps some C++ function, capture it in yet another function, then store

that in a std::list. Then I create a function pointer to each list element and

put them in a Python Module I create.

// Store these where references are safe

using PyFunc = std::function<PyObject *(PyObject *, PyObject *)>;

std::list<PyFunc> lst_ExposedFuncs;

...

// Expose some R fn(Args...){ ... return R(); }

template <size_t idx, typename R, typename ... Args>

static void Register_Function(std::string methodName, std::function<R(Args...)> fn, std::string docs = "")

{

// Capture the function you'd like to expose in a PyFunc

PyFunc pFn = [fn](PyObject * s, PyObject * a)

{

// Convert the arguments to a std::tuple

std::tuple<Args...> tup;

convert(a, tup);

// Invoke the function with a tuple

R rVal = call<R>(fn, tup);

// Convert rVal to some PyObject and return

return alloc_pyobject(rVal);

};

// Use the unique idx here, where I'll need the function pointer

lst_ExposedFunctions.push_back(pFn);

PyCFunction fnPtr = get_fn_ptr<idx>(lst_ExposedFunctions.back());

}

From there on I actually do something with fnPtr, but it's not important.

Is this crazy? Can I even capture a function like that?

John

Answer: I do something vaguely similar for a similar use case (actually a class

factory for use with a JNI). My technique is to use a `template struct` on an

`unsigned` with a specialisation for 0: Each `struct` contains a function -

you could adapt this to a `static` member for which a pointer would be valid.

I also show how you could call a function `foo` with a parameter dependent on

a particular specialisation (not sure you need this, but included just in

case).

extern bar* foo(const unsigned& id); // The function that gets called

template<unsigned N> struct Registrar

{

static bar* func()

{

return foo(N - 1);

}

private:

Registrar<N - 1> m_next; // Instantiate the next one.

};

template<> struct Registrar<0> // To block the recursion

{

};

namespace

{

Registrar</*ToDo - total number here*/> TheRegistrar;

}

`TheRegistrar` is pretty much a metasyntactic variable which ensures that a

given number of specialisations, and hence `static` functions are created.

(For various technical reasons I have to instantiate my templates in reverse

order: if you don't need that then you can adjust accordingly.)

I imagine it's the interplay between `func` and `foo` that you'll need to

adapt to your needs. Each `N`, of course, is that _compile-time_ constant that

you seek.

|

Python - Control window with pywinauto while the window is minimized or hidden

Question: **What I'm trying to do:**

I'm trying to create a script in python with pywinauto to automatically

install notepad++ in the background (hidden or minimized), notepad++ is just

an example since I will edit it to work with other software.

**Problem:**

The problem is that I want to do it while the installer is hidden or

minimized, but if I move my mouse the script will stop working.

**Question:**

How can I execute this script and make it work, while the notepad++ installer

is hidden or minimized.

**This is my code so far** :

import sys, os, pywinauto

pwa_app = pywinauto.application.Application()

app = pywinauto.Application().Start(r'npp.6.8.3.Installer.exe')

Wizard = app['Installer Language']

Wizard.NextButton.Click()

Wizard = app['Notepad++ v6.8.3 Setup']

Wizard.Wait('visible')

Wizard['Welcome to the Notepad++ v6.8.3 Setup'].Wait('ready')

Wizard.NextButton.Click()

Wizard['License Agreement'].Wait('ready')

Wizard['I &Agree'].Click()

Wizard['Choose Install Location'].Wait('ready')

Wizard.Button2.Click()

Wizard['Choose Components'].Wait('ready')

Wizard.Button2.Click()

Wizard['Create Shortcut on Desktop'].Wait('enabled').CheckByClick()

Wizard.Install.Click()

Wizard['Completing the Notepad++ v6.8.3 Setup'].Wait('ready', timeout=30)

Wizard['CheckBox'].Wait('enabled').Click()

Wizard.Finish.Click()

Wizard.WaitNot('visible')

Answer: The problem is here:

Wizard['Create Shortcut on Desktop'].Wait('enabled').CheckByClick()

`CheckByClick()` uses `ClickInput()` method that moves real mouse cursor and

performs a realistic click.

Use `Check()` method instead.

[EDIT] If the installer doesn't handle BM_SETCHECK properly the workaround may

look so:

checkbox = Wizard['Create Shortcut on Desktop'].Wait('enabled')

if checkbox.GetCheckState() != pywinauto.win32defines.BST_CHECKED:

checkbox.Click()

I will fix it in the next pywinauto release by creating methods `CheckByClick`

and `CheckByClickInput` respectively.

* * *

[EDIT 2] I tried your script with my fix and it works perfectly (and very

fast) with and without mouse moves. Win7 x64, 32-bit Python 2.7, pywinauto

0.5.3, run as administrator.

import sys, os, pywinauto

app = pywinauto.Application().Start(r'npp.6.8.3.Installer.exe')

Wizard = app['Installer Language']

Wizard.Minimize()

Wizard.NextButton.Click()

Wizard = app['Notepad++ v6.8.3 Setup']

Wizard.Wait('visible')

Wizard.Minimize()

Wizard['Welcome to the Notepad++ v6.8.3 Setup'].Wait('ready')

Wizard.NextButton.Click()

Wizard.Minimize()

Wizard['License Agreement'].Wait('ready')

Wizard['I &Agree'].Click()

Wizard.Minimize()

Wizard['Choose Install Location'].Wait('ready')

Wizard.Button2.Click()

Wizard.Minimize()

Wizard['Choose Components'].Wait('ready')

Wizard.Button2.Click()

Wizard.Minimize()

checkbox = Wizard['Create Shortcut on Desktop'].Wait('enabled')

if checkbox.GetCheckState() != pywinauto.win32defines.BST_CHECKED:

checkbox.Click()

Wizard.Install.Click()

Wizard['Completing the Notepad++ v6.8.3 Setup'].Wait('ready', timeout=30)

Wizard.Minimize()

Wizard['CheckBox'].Wait('enabled').Click()

Wizard.Finish.Click()

Wizard.WaitNot('visible')

|

scipy.io.savemat How to save global variables?

Question: I'm trying to use Python like one would do in Matlab. Basically I have some

Python code for which I have run and it has generated some global variables.

Say, a = 5 b = 3

I would like to save these to a .mat file , that will be openable by Matlab.

The goal is to be able to see the global variables in Matlab, just as one

would when saving to a .mat file in Matlab.

I've seen examples where savemat is used to save dictionaries/arrays, but not

where it saves the global variables.

How may I do so? Is this something that scipy just cannot do?

Thanks.

Answer: You most probably don't want to save globals, but locals (though the variables

are local to the interpreter). You can access and update them through the

`locals()` and `globals()` functions.

From there you can use your preferred method of storage, such as

[pickle](https://docs.python.org/3/library/pickle.html),

[marshal](https://docs.python.org/3/library/marshal.html),

[json](https://docs.python.org/3/library/json.html) or others, depending on

which level of security you want and which object types you have at hand.

However, be wary that messing with those functions may get you into some

trouble, since they also report functions and some internal variables.

However, if you intend to (like Matlab) just load and store variables through

the GUI, you may want to check the **[Spyder

IDE](http://pythonhosted.org/spyder/)** ([GitHub

project](https://github.com/spyder-ide/spyder)). It is a very nice IDE, geared

toward scientific usage and very similar (in appearance) to Matlab's old

interface. Most importantly, it offers a tab/pane named "**Variable explorer**

" which tracks what you want to store, and have both a "**Save data as...** "

and "**Import data** " buttons and the "Save data as..." dialog supports

saving to Spyder data files (_.spydata), Matlab files (_.mat) and HDF5 (*.h5).

If you want to do this programatically, you may check how Spyder does it.

|

randint in Python 3.5 doesn't work

Question: I am a new to Python, and I ran into some issues when developing a _D &D Dice_

program.

import random

print("Hi,here you can roll all D´n´D Dice´s!")

dice=input("What dice do u want?D4,D6,D8,D10,D12,D20 or D100?")

if dice=="D4" or "d4":

print(random.randint(1,4))

elif dice=="D6" or "d6":

print(random.randint(1,6))

elif dice=="D8" or "d8":

print(random.randint(1,8))

elif dice=="D10"or"d10":

print(random.randint(1,10))

elif dice=="D12"or"d12":

print(random.randint(1,12))

elif dice=="D20"or"d20":

print(random.randint(1,20))

elif dice=="D100"or"d100":

print(random.randint(1,100))

else: print("No?Ok!")

When I run the program and enter the number of dice, it always enter the first

`if` statement.

Answer: You want to write:

if dice=="D4" or dice=="d4":

instead of:

if dice=="D4" or "d4":

`"d4"` by itself has a (true) boolean value because it isn't an empty string.

|

Select option from dynamic dropdown list using selenium

Question: I am trying to fill out the dropdown menus found on this

[homepage](http://www.tirerack.com/content/tirerack/desktop/en/homepage.html)

using Python and the selenium package. To Select Make I am using the following

code

from selenium import webdriver

from selenium.webdriver import ActionChains

from selenium.webdriver.support.select import Select

driver = webdriver.Firefox()

driver.implicitly_wait(5)

driver.get('http://www.tirerack.com/content/tirerack/desktop/en/homepage.html')

button = driver.find_element_by_tag_name('button')

ActionChains(driver).click(button).perform()

select_make = driver.find_element_by_id('vehicle-make')

Select(select_make).select_by_value("BMW")

However this does not seem to actually "Select the BMW" option. I tried to

follow the method explained in

[this](http://sqa.stackexchange.com/questions/1355/what-is-the-correct-way-to-

select-an-option-using-seleniums-python-webdriver) post. Can someone show me

what I am doing wrong?

Answer: From the question you linked to the accepted answer iterates over the options

and finds the matching text.

select_make = driver.find_element_by_id('vehical-make')

for option in select_make.find_elements_by_tag_name('option'):

if option.text == 'BMW':

option.click() # select() in earlier versions of webdriver

break

Running this in Java I got the message that the element is not visible, so I

forced it:

WebDriver driver = new FirefoxDriver();

driver.get("http://www.tirerack.com/content/tirerack/desktop/en/homepage.html");

Thread.sleep(3000);

driver.findElement(By.tagName("button")).click();

WebElement select_make = driver.findElement(By.id("vehicle-make"));

select_make.click();

JavascriptExecutor js = (JavascriptExecutor) driver;

String jsDisplay = "document.getElementById(\"vehicle-make\").style.display=\"block\"";

js.executeScript(jsDisplay, select_make);

for (WebElement option : select_make.findElements(By.tagName("option"))) {

System.out.println(option.getText());

if ("BMW".equals(option.getText())) {

option.click();

break;

}

}

If you add the JavascriptExecutor lines (in python) I think it will work.

|

Python Tkinter: Reading Serial Values

Question: I would like to read serial values into my Tkinter GUI. Either into a text

window or eventually to a text label widget which updates every second or so.

The issue I am having is with the queue class. The error I am getting is:

AttributeError: 'Applcation' object has no attribute 'queue'

Here is my code:

#!/usr/bin/env python

from tkinter import *

from tkinter import messagebox

from time import sleep

import picamera

import os

import serial

import sys

import RPi.GPIO as GPIO

import _thread

import threading

import random

import queue

# Setup GPIO pin(s)

GPIO.setmode(GPIO.BCM)

GPIO.setwarnings(False)

GPIO.setup(18, GPIO.OUT)

GPIO.output(18, False)

#==============================================================

# Declaration of Constants

# none used

#==============================================================

class SerialThread(threading.Thread):

def __init__(self, queue):

threading.Thread.__init__(self)

self.queue = queue.Queue()

def read_sensor_values(self):

ser = serial.Serial('/dev/ttyUSB0', 9600)

while True:

if ser.inWaiting:

text = ser.readline(s.inWaiting)

self.queue.put(text)

self.pressures_txt.insert(0.0,values)

class Application(Frame):

""" GUI Application for taking photos. """

def __init__(self, master):

super(Application, self).__init__(master)

self.grid()

self.create_widgets()

self.setup_camera()

def create_widgets(self):

Checkbutton( self, text = "Read Pressure Values", variable = self.mode2, command = self.process_serial, bg = 'white').grid(row = 4, column = 0, sticky = W+E+N+S)

# create text field to display pressure values from arduino

self.pressures_txt = Text(self, height = 3, wrap = WORD)

self.pressures_txt.grid(row=9, column = 0, columnspan =3)

def process_serial(self):

#self.text.delete(1.0, END)

while self.queue.qsize():

try:

self.text.insert(END, self.queue.get())

self.pressures_txt.insert(0.0, self.queue.get())

except queue.Empty:

pass

self.after('1000', self.process_serial)

#................. end of method: read_sensor_values ................

#=================================================================

# main

#=================================================================

root = Tk() # Create the GUI root object

root.title("Control V1.0")

app = Application(root) # Create the root application window

root.mainloop()

The code I have posted is an abbreviated version of the entire program. I have

removed sections that are supposedly irrelevant. I am running in python3. I

suspect I may have an error in indentation but I am not certain.

I am using the code from the following link for my serial reading class:

<https://www.daniweb.com/programming/software-

development/threads/496499/using-a-checkbutton-to-import-serial-data-into-

python-tkinter>

Answer: The problem is that the `self.queue` in `read_sensor_values` belongs to a

`SerialThread` object. When you say `self.queue` in the `process_serial`

method of your `Application` object, it refers to a non-existent `queue`

attribute of the `Application` object. Perhaps you ought to make the

`SerialThread` object an attribute of your `Application` object. Then

`process_serial` could refer to `self.serial.queue` or whatever you name it.

|

generic scoring module using python pandas

Question: Hi I'm trying to develop a generic scoring module for grading students based

on variety of attributes. I'm trying to develop a generic method using python

pandas Input: An input data frame with student ID and UG Major and attributes

for scoring (I called df_input) An input ref. data frame that contains scoring

params

Process: Based on the variable type, developing a process to calculate scores

for each attribute

Output: Input data frame with added cols that capture the attribute score

Example:

df_input

+

------------+-----------+----+------------+-----+------+

| STUDENT_ID | UG_MAJOR | C1 | C2 | C3 | C4 |

+------------+-----------+----+------------+-----+------+

| 123 | MATH | A | 8000-10000 | 12% | 9000 |

| 234 | ALL_OTHER | B | 1500-2000 | 10% | 1500 |

| 345 | ALL_OTHER | A | 2800-3000 | 8% | 2300 |

| 456 | ALL_OTHER | A | 8000-10000 | 12% | 3200 |

| 980 | ALL_OTHER | C | 1000-2500 | 15% | 2700 |

+------------+-----------+----+------------+-----+------+

df_ref +

---------+---------+---------+

| REF_COL | REF_VAL | REF_SCR |

+---------+---------+---------+

| C1 | A | 10 |

| C1 | B | 20 |

| C1 | C | 30 |

| C1 | NULL | 0 |

| C1 | MISSING | 0 |

| C1 | A | 20 |

| C1 | B | 30 |

| C1 | C | 40 |

| C1 | NULL | 10 |

| C1 | MISSING | 10 |

| C2 | <1000 | 0 |

| C2 | >1000 | 20 |

| C2 | >7000 | 30 |

| C2 | >9500 | 40 |

| C2 | MISSING | 0 |

| C2 | NULL | 0 |

| C3 | <3% | 5 |

| C3 | >3% | 10 |

| C3 | >5% | 100 |

| C3 | >7% | 200 |

| C3 | >10% | 300 |

| C3 | NULL | 0 |

| C3 | MISSING | 0 |

| C4 | <5000 | 10 |

| C4 | >5000 | 20 |

| C4 | >10000 | 30 |

| C4 | >15000 | 40 |

+---------+---------+---------+

+------------+-----------+----+------------+-----+------+--------+--------+--------+---------+

| Req.Output | | | | | | | | | |

+------------+-----------+----+------------+-----+------+--------+--------+--------+---------+

| STUDENT_ID | UG_MAJOR | C1 | C2 | C3 | C4 | C1_SCR | C2_SCR | C3_SCR | TOT_SCR |

| 123 | MATH | A | 8000-10000 | 12% | 9000 | | | | |

| 234 | ALL_OTHER | B | 1500-2000 | 10% | 1500 | | | | |

| 345 | ALL_OTHER | A | 2800-3000 | 8% | 2300 | | | | |

| 456 | ALL_OTHER | A | 8000-10000 | 12% | 3200 | | | | |

| 980 | ALL_OTHER | C | 1000-2500 | 15% | 2700 | | | | |

+------------+-----------+----+------------+-----+------+--------+--------+--------+---------+

I want to see if any thing like a function be developed to accomplish this

Thank you Pari

Answer: If I understand the question correctly, you are trying to store a collection

of rules in `df_ref` that are to be applied to `df_input` to generate scores.

While this certainly can be done, you should make sure that your rules are

well defined. This would also guide you in writing the corresponding scoring

function.

For instance, suppose one of the students gets a value of `10000` in column

`C3`. `10000` is larger than `1000`, `7000` and `9500`. This means that the

score is ambiguous. Suppose you want to choose the highest of all scores from

this particular column. Then, you need another table specifying the choice

rule for each column when multiple scores are selected.

Second, you should think about the type of Python variable stored in 'REF_VAL'

column. If `>7000` is a string, you would have to do extra work to determine

the score. Consider storing this as `7000` instead and specifying comparison

operator elsewhere.

Finally, looking at your current rules, there seems to be a pattern. Each

score is associated with `NULL`, `MISSING` or a range cutoff. This can be

captured as follows:

import pandas as pd

import numpy as np

from itertools import dropwhile

# stores values and scores for special values and cutoff values

sample_range_rule = {

'MISSING' : 0,

'NULL' : 0,

'VALS' : [

(0, 0),

(10, 50),

(70, 75),

(90, 100),

(100, 100)

]

}

# takes a dict with rules and produces a scoring function

def getScoringFunction(range_rule):

def score(val):

if val == 'MISSING':

return range_rule['MISSING']

elif val == 'NULL':

return range_rule['NULL']

else:

return dropwhile(lambda (cutoff, score): cutoff < val,

range_rule['VALS']).next()[1]

return score

sample_scoring_function = getScoringFunction(sample_range_rule)

for test_value in ['MISSING', 'NULL', 0, 12, 55, 66, 99]:

print 'Input', test_value,

print 'Output', sample_scoring_function(test_value)

After you have a dict specifying a rule for every column, you can do the

following:

`df['Ck_SCR'] = df['Ck'].apply(getScoringFunction(Ck_dct))`

Converting pandas DataFrame with two columns to a dict of this form should not

be to difficult.

|

MySQL special chars lost when queried in Python. (But works with Django.)

Question: I have a MySQL database that is utf8 encoded. It contains a row with a value

"sauté". Note the accent over the e.

When I select this data using a Django application then it correctly detects

the é. However, when I execute it as a Python program then this information

(the special e) seems to be lost.

I've tried various combinations of encode and decode and "from **future**

import unicode_literals" and putting a "u" in front of the sauté string and

putting a u in front of the query string. No luck. How can I get this info

correctly out of my database (with Python) and test for it?

# Connection when excute as .py: (Django is usual in settings.py)

cursor = MySQLdb.connect(host='<xxx>',user="<xxx>", passwd="<xxx>", db="<xxx>",

unix_socket = 'path/mysql.sock'

).cursor()

# Same code in Django and Python execution:

cursor.execute(select line from my_table where id = 27)

results = cursor.fetchall()

for r in results:

line = r[0]

if re.search("sauté", line):

do_something() # Should get here, but only with Django

Answer: Thanks to JD Vangsness (in the comments) for the answer.

I needed to add charset='utf8' to the connection parameters.

cursor = MySQLdb.connect(host='<xxx>',user="<xxx>", charset='utf8', passwd="<xxx>", db="<xxx>", unix_socket = 'path/mysql.sock').cursor()

And then I also needed to make the comparison string unicode:

u"sauté"

|

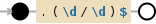

IGNORECASE errors in Python's re.Scanner?

Question: There is hidden but well known

[functionality](http://code.activestate.com/recipes/457664-hidden-scanner-

functionality-in-re-module/) in re module

import re

def s_ident(scanner, token): return token

def s_operator(scanner, token): return "op%s" % token

def s_float(scanner, token): return float(token)

def s_int(scanner, token): return int(token)

scanner = re.Scanner([

(r"[a-zA-Z]\w*", s_ident),

(r"\d+\.\d*", s_float),

(r"\d+", s_int),

(r"=|\+|-|\*|/", s_operator),

(r"\s+", None),

])

print scanner.scan("Sum = 3*foo + 312.50 + bar")

# (['Sum', 'op=', 3, 'op*', 'foo', 'op+', 312.5, 'op+', 'bar'], '')

I want to use IGNORECASE flag here but it seems it does not work:

import re

def s_ident(scanner, token): return token

def s_operator(scanner, token): return "op%s" % token

def s_float(scanner, token): return float(token)

def s_int(scanner, token): return int(token)

scanner = re.Scanner([

(r"(?i)[a-z]\w*", s_ident),

(r"\d+\.\d*", s_float),

(r"\d+", s_int),

(r"=|\+|-|\*|/", s_operator),

(r"\s+", None),

])

print scanner.scan("Sum = 3*foo + 312.50 + bar")

# ([], 'Sum = 3*foo + 312.50 + bar')

Is it a issue of the Scanner or error in my code? Is it possible to implement

non-case-sensitive matching using Scanner?

This issue was initially reproduced on Python 2.7.9.

Expected value: (['Sum', 'op=', 3, 'op*', 'foo', 'op+', 312.5, 'op+', 'bar'],

'')

Actual value: ([], 'Sum = 3*foo + 312.50 + bar')

Answer: You can pass the `flags` parameter to the constructor.

scanner = re.Scanner([

(r"[a-z]\w*", s_ident),

(r"\d+\.\d*", s_float),

(r"\d+", s_int),

(r"=|\+|-|\*|/", s_operator),

(r"\s+", None),

], flags=re.IGNORECASE)

Source for `Scanner`:

<https://github.com/python/cpython/blob/master/Lib/re.py#L345>

|

Django - Autocomplete_Light's "Add Another" popup declares: "'initial' is an invalid keyword argument for this function"

Question: I am working on getting the "add another" popup to work with `django-

autocomplete_light`.

Following along in the docs:

<http://django-autocomplete-light.readthedocs.org/en/latest/addanother.html>

I have set up my URLs:

import autocomplete_light.shortcuts as al

from AlmondKing.FinancialLogs import models

from AlmondKing.FinancialLogs import forms

urlpatterns = [

url(r'^branches/autocreate/$', al.CreateView.as_view(

model=models.CompanyBranch, form_class=forms.CompanyBranch),

name='branch_autocreate'),

]

and my autocomplete_light_registry.py

al.register(CompanyBranch,

search_fields=['^branch_name'],

attrs={

'placeholder': 'Branch',

'data-autocomplete-minimum-characters': 1,

},

widget_attrs={

'data-widget-maximum-values': 1,

'class': 'modern-style',

},

add_another_url_name='company:branch_autocreate',

)

However, when I click the plus sign to add a new related object, I get the

following error:

> TypeError at /company/branches/autocreate/

>

> 'initial' is an invalid keyword argument for this function

I've been trying to find a way to do this for a while and I'm so close!

Now, I am hoping someone can read the traceback and help me understand what

went wrong:

Environment:

Request Method: GET

Request URL: http://localhost:8000/company/branches/autocreate/?_popup=1&winName=id_branch

Django Version: 1.8.2

Python Version: 3.4.3

Installed Applications:

('django.contrib.admin',

'django.contrib.auth',

'django.contrib.contenttypes',

'django.contrib.sessions',

'django.contrib.messages',

'django.contrib.staticfiles',

'AlmondKing.InventoryLogs',

'AlmondKing.FinancialLogs',

'AlmondKing.AKGenius',

'autocomplete_light')

Installed Middleware:

('django.contrib.sessions.middleware.SessionMiddleware',

'django.middleware.common.CommonMiddleware',

'django.middleware.csrf.CsrfViewMiddleware',

'django.contrib.auth.middleware.AuthenticationMiddleware',

'django.contrib.auth.middleware.SessionAuthenticationMiddleware',

'django.contrib.messages.middleware.MessageMiddleware',

'django.middleware.clickjacking.XFrameOptionsMiddleware',

'django.middleware.security.SecurityMiddleware',

'AlmondKing.AKGenius.middleware.RequireLoginMiddleware')

Traceback:

File "C:\Users\Adam\Envs\AlmondKing\lib\site-packages\django\core\handlers\base.py" in get_response

132. response = wrapped_callback(request, *callback_args, **callback_kwargs)

File "C:\Users\Adam\Envs\AlmondKing\lib\site-packages\django\views\generic\base.py" in view

71. return self.dispatch(request, *args, **kwargs)

File "C:\Users\Adam\Envs\AlmondKing\lib\site-packages\django\views\generic\base.py" in dispatch

89. return handler(request, *args, **kwargs)

File "C:\Users\Adam\Envs\AlmondKing\lib\site-packages\django\views\generic\edit.py" in get

245. return super(BaseCreateView, self).get(request, *args, **kwargs)

File "C:\Users\Adam\Envs\AlmondKing\lib\site-packages\django\views\generic\edit.py" in get

205. form = self.get_form()

File "C:\Users\Adam\Envs\AlmondKing\lib\site-packages\django\views\generic\edit.py" in get_form

74. return form_class(**self.get_form_kwargs())

File "C:\Users\Adam\Envs\AlmondKing\lib\site-packages\django\db\models\base.py" in __init__

480. raise TypeError("'%s' is an invalid keyword argument for this function" % list(kwargs)[0])

Exception Type: TypeError at /company/branches/autocreate/

Exception Value: 'initial' is an invalid keyword argument for this function

Answer: Try this:

add_another_url_name='company:branch_autocreate',

add_another_url_name='branch_autocreate',

|

Python File handling: standard input and output

Question:

import sys

fileobject=open('file.txt','w')

fileobject.write(sys.stdin.readline())

Cat

In the above code, shouldn't cat be in file after execution? However, when I

run it, I find file empty. If my code is wrong, can someone explain how

`sys.stdin.read()` and `sys.stdout.write()` work and their uses?

Answer: You need to close the file

import sys

fileobject = open('file.txt', 'w')

fileobject.write(sys.stdin.readline())

Cat

fileobject.close()

If you want to see the updated file content before closing the file or exiting

the program you can use flush():

fileobject.flush()

Check this [StackOverflow

Question](http://stackoverflow.com/questions/14555402/understanding-stdin-

stdout-stderr%20question) for standard input/output

|

DHT Sensor Python script error

Question: I have a sensor type DHT22 connected to a raspberry. I have written a script

in python but when I run it I get errors

#!/usr/bin/python

import MySQLdb

import subprocess

import re

import sys

import time

import datetime

import Adafruit_DHT

conn = MySQLdb.connect("localhost","zeus","gee3g673r","logi")

while(True):

date = time.strftime("%d/%m/%Y")

clock = time.strftime("%H:%M")

#output = subprocess.check_output(["/usr/bin/AdafruitDHT.py 2302", "4"]);

output = Adafruit_DHT.read_retry(Adafruit_DHT.AM2302, 4)

matches = re.search("Temp =\s+([0-9.]+)", output)

if (not matches):

time.sleep(0)

continue

temp = float(matches.group(1))

matches = re.search("Hum =\s+([0-9.]+)", output)

if (not matches):

time.sleep(0)

continue

humidity = float(matches.group(1))

# MYSQL DATA Processing

c = conn.cursor()

c.execute("INSERT INTO data_th (date, clock, temp, hum) VALUES (%s, %s,%s, %s)",(date, clock, temp, humidity))

#print "DB Loaded"

time.sleep(360)

This is the error encountered on running the script:

root@raspberrypi:/home# ./hdt.py

Traceback (most recent call last):

File "./dht.py", line 22, in <module>

matches = re.search("Temp =\s+([0-9.]+)", output)

File "/usr/lib/python2.7/re.py", line 142, in search

return _compile(pattern, flags).search(string)

TypeError: expected string or buffer

Answer: Adafruit_DHT.read_retry() does not return string. re.search expects string as

second parameter.

Please have a look at code below (taken from

[Adafruit_Python_DHT/examples](https://github.com/adafruit/Adafruit_Python_DHT/blob/master/examples/AdafruitDHT.py)):

# Try to grab a sensor reading. Use the read_retry method which will retry up

# to 15 times to get a sensor reading (waiting 2 seconds between each retry).

humidity, temperature = Adafruit_DHT.read_retry(sensor, pin)

# Un-comment the line below to convert the temperature to Fahrenheit.

# temperature = temperature * 9/5.0 + 32

# Note that sometimes you won't get a reading and

# the results will be null (because Linux can't

# guarantee the timing of calls to read the sensor).

# If this happens try again!

if humidity is not None and temperature is not None:

print 'Temp={0:0.1f}* Humidity={1:0.1f}%'.format(temperature, humidity)

else:

print 'Failed to get reading. Try again!'

sys.exit(1)

|

a "Pygame error", not able to open .wav file

Question: I have a problem with my Pygame program. I need help. The wav file is in the

same directory as the python file. I run It in terminal- Python3:

import pygame.mixer

sounds = pygame.mixer

sounds.init()

def wait_finish(channel):

while channel.get_busy():

pass

asked = 0

true = 0

false = 0

choice = str(input("Push 1 for true, 2 for false, 0 to end"))

while choice != '0':

if choice == '1':

asked = asked + 1

true = true + 1

s = sounds.Sound("correct.wav")

wait_finish(s.play())

if choice == '2':

asked = asked + 1

false = false + 1

s = sounds.Sound("wrong.wav")

wait_finish(s.play())

choice = str(input("Push 1 for true, 2 for false, 0 to end"))

print ("you asked" +str(asked) + "questions")

print ("there were" +str(false) + "wrong answers")

print ("and" + str(true) + "correct answers")

...it throws- pygame.error: Unable to open file 'correct.wav'

Answer: Rather than have a discussion in the comments section I'll post this.

Once `def wait_finish(channnel):` has been altered to `def

wait_finish(channel):` and the issue with `sounds.Sound` rather than

`sounds.Sounds` has been resolved, the program works fine with normal .wav

files on my machine.

I am convinced that the error of Sound or Sounds for calling the wrong.wav

file to be played would explain the "unable to open file wrong.wav" message.

If pygame doesn't like something about the file it may well not play it and

this is where a line like:

sounds.pre_init(frequency=22050, size=-16, channels=2, buffer=4096)

(called before `sounds.init()`) might come into play.

(NB you might have to use a different buffer option as I am testing with

pygame for python 2.7)

On my box, if pygame doesn't like or cannot find the file, I get no error at

all but the speakers click when the call to play is made.

All I can suggest at this point is that you try an entirely different wav file

to the current one you are using for wrong answers and see if it makes a

difference. Just for the record, I changed your wait_finish function to :

def wait_finish(channel):

while channel.get_busy():

pygame.time.Clock().tick(10)

|

Merging tables from two different databases (Python)

Question: I have two databases `Database1.db` and `Database2.db`. The databases contain

tables with **matching names and matching columns** (and the Primary key is

the 'Date' column in both). The only difference between the two is that the

entries in Database1 are from 2013 and the entries in Database2 are from 2014.

I would like to merge these two databases so that all the 2013 and 2014 data

ends up **in one table** in a third database (let's call it Database3.db).

To be clear, here is what the databases I'm working with currently contain and

what I want the third resulting database to contain:

Database1.db:

Table Name: GERMANY_BERLIN

Date Morning Day Evening Night

01.01.2013 0.5 0.2 0.2 0.1

02.01.2013 0.4 0.3 0.1 0.2

...

Database2.db:

Table Name: GERMANY_BERLIN

Date Morning Day Evening Night

01.01.2014 0.6 0.2 0.1 0.1

02.01.2014 0.5 0.2 0.3 0.0

...

I would like to have create a resulting Database3 with the following data:

Database2.db:

Table Name: GERMANY_BERLIN

Date Morning Day Evening Night

01.01.2013 0.5 0.2 0.2 0.1

02.01.2013 0.4 0.3 0.1 0.2

01.01.2014 0.6 0.2 0.1 0.1

02.01.2014 0.5 0.2 0.3 0.0

...

I haven't been able to find anything directly helpful on this online yet

(perhaps JOINS could be used somehow?

bhttp://www.tutorialspoint.com/sqlite/sqlite_using_joins.htm) so any

suggestions would be greatly appreciated!

PS. SQLite has been used to create the existing databases and is the database-

related Python library that I'm most familiar with

Answer: You can easly export a .db into a csv ([How to export sqlite to CSV in Python

without being formatted as a

list?](http://stackoverflow.com/questions/10522830/how-to-export-sqlite-to-

csv-in-python-without-being-formatted-as-a-list)) and import it again into .db

([Importing a CSV file into a sqlite3 database table using

Python](http://stackoverflow.com/questions/2887878/importing-a-csv-file-into-

a-sqlite3-database-table-using-python)) for the step 1 just append the result

to the same cvs file.

|

Python 3 CSV not writing

Question: When I open my csv file I see nothing. Is this the right way to build a csv

file? Just trying to learn it all. Thanks for all your help.

import csv

from urllib.request import urlopen

from bs4 import BeautifulSoup

html = urlopen("http://shop.nordstrom.com/c/designer-handbags?dept=8000001&origin=topnav#category=b60133547&type=category&color=&price=&brand=&stores=&instoreavailability=false&lastfilter=&sizeFinderId=0&resultsmode=&segmentId=0&page=1&partial=1&pagesize=100&contextualsortcategoryid=0")

nordHandbags = BeautifulSoup(html)

bagList = nordHandbags.findAll("a", {"class":"title"})

f = csv.writer(open("./nordstrom.csv", "w"))

f.writerow(["Product Title"])

for title in bagList:

productTitles = title.contents[0]

f.writerow([productTitles])

Answer: Really hard to see how you could fail to have at least a `"Product Title"`

header in that file. Are you checking the file _after_ you have tgerminated

the Python interpreter? This, because there is no explicit close of the file

in that code, and until it is closed, its contents may be cached in memory.

More Pythonic, and avoiding this problem, is

with open("./nordstrom.csv", "w") as csvfile:

f = csv.writer( csvfile)

f.writerow(["Product Title"])

# etc.

pass # close the with block, csvfile is now closed.

Also (grasping at straws) are you opening the file with a text editor to check

it, or just using the `type` command in Windows cmd.exe? Because, if the file

doesn't contain an explicit LF, the `C:\wherever\ >`prompt may overwrite the

header before you see it.

|

How to authenticate in django app via C#?

Question: I have a python script:

import requests

main_page_request = requests.get("http://carkit.kg/")

csrf_cookie = main_page_request.cookies.get("csrftoken", "")

r = requests.post("http://carkit.kg/", data={u'username': u'admin', u'password': u'admin', 'csrfmiddlewaretoken': csrf_cookie }, cookies={'csrftoken': csrf_cookie})

print r.url

carkit.kg/ - is a login url in django app. Script prints one url if

authentication succeed and another in other case. I tried to rewrite this

script in C# (Unity3D game):

//get token

string url = "http://carkit.kg";

HttpWebRequest tokenRequest = (HttpWebRequest)WebRequest.Create(url);

tokenRequest.CookieContainer = new CookieContainer();

HttpWebResponse tokenResponse = (HttpWebResponse)tokenRequest.GetResponse();

String token = tokenResponse.Cookies["csrftoken"].ToString().Split('=')[1];

//login

HttpWebRequest loginRequest = (HttpWebRequest)WebRequest.Create(url);

loginRequest.Method = "POST";

loginRequest.CookieContainer = new CookieContainer();

loginRequest.ContentType = "application/x-www-form-urlencoded";

loginRequest.CookieContainer.Add(new Cookie("csrftoken", token, "/", "carkit.kg"));

String postData = "username=" + tempEmail;

postData += "&password=" + tempPass;

postData += "&csrfmiddlewaretoken=" + token;

byte[] data = Encoding.ASCII.GetBytes(postData);

loginRequest.ContentLength = data.Length + 1;

Debug.Log(data.Length);

loginRequest.Timeout = 3000;

String encoded = System.Convert.ToBase64String(System.Text.Encoding.GetEncoding("ISO-8859-1").GetBytes(tempEmail + ":" + tempPass));

loginRequest.Headers.Add("Authorization", "Basic " + encoded);

loginRequest.GetRequestStream().Write(data, 0, data.Length);

loginRequest.PreAuthenticate=true;

HttpWebResponse authResponse = (HttpWebResponse)loginRequest.GetResponse();

But I'm getting error 411 or timed out request. How to get the same result in

C# code as in python?

Answer: I setted [django rest api url](http://www.django-rest-framework.org/api-

guide/authentication/) as simple post request destination and django returned

token in data.

|

How to tell python to reload module whenever it sees import statement

Question: I'm writing a program to manage the flow of processing data. User can write

code of several method to process data, called 'flow code', and tell my

program to compare the result of each method. The code is executed by `exec`.

There's also a git repo containing functions to call, and user can import them

in the 'flow code'.

My question is, because user can change repo between each execution, how to

tell python to reload modules when it sees an import statement in 'flow code'?

My current solution is that, before executing a line, check type of all local

variable of 'flow code' and reload it if the type is `ModuleType`. However,

this is very inefficient. Is there any better solution, like hooks?

Someone said `imputil` can make hooks, but the documentation says:

> Deprecated since version 2.6: The imputil module has been removed in Python

> 3.

And I'm using python 2.7

Answer: Use reload method.

e.g.

>>> import test

>>> test.a

1

>>> test.a = 3

>>> test.a

3

>>> reload(test)

<module 'test' from 'test.pyc'>

>>> test.a

1

>>>

|

a simple python code that astonishingly ignores if condition & statement

Question: I have a really simple code here. But the problem is really bothering me. In

line no 7, there is a if-condition; astonishingly this if condition is never

addressed! I ran the program and checked multiple times using python version

2.7. It behaves as if the if-condition and its statements (along with break)

is inside the comment and is simply ignored.

from random import randint

random_number = randint(1, 10)

guesses_left = 3

while (guesses_left>=1):

print (random_number)

guess = raw_input('Guess ?')

if guess == random_number: #this statement is never checked?

print ('You win!')

break

guesses_left -= 1

else:

print ('You lose.')

Answer:

if guess == random_number: #this statement is never checked?

It _is_ being checked, but it’s just _always_ false.

`guess` is a _string_ since it comes from `raw_input` but `random_number` is

an int coming from `randint`. Since a string can never be equal to an int, the

expression is always false.

You need to convert the input into an int first: `int(guess)`. But make sure

you check for errors when the user does not enter a number.

|

creating daemon using Python libtorrent for fetching meta data of 100k+ torrents

Question: I am trying to fetch meta data of around 10k+ torrents per day using python

libtorrent.

This is the current flow of code

1. Start libtorrent Session.

2. Get total counts of torrents we need metadata for uploaded within last 1 day.

3. get torrent hashes from DB in chunks

4. create magnet link using those hashes and add those magnet URI's in the session by creating handle for each magnet URI.

5. sleep for a second while Meta Data is fetched and keep checking whether meta data s found or not.

6. If meta data is received add it in DB else check if we have been looking for meta data for around 10 minutes , if yes then remove the handle i.e. dont look for metadata no more for now.

7. do above indefinitely. and save session state for future.

so far I have tried this.

#!/usr/bin/env python

# this file will run as client or daemon and fetch torrent meta data i.e. torrent files from magnet uri

import libtorrent as lt # libtorrent library

import tempfile # for settings parameters while fetching metadata as temp dir

import sys #getting arguiments from shell or exit script

from time import sleep #sleep

import shutil # removing directory tree from temp directory

import os.path # for getting pwd and other things

from pprint import pprint # for debugging, showing object data

import MySQLdb # DB connectivity

import os

from datetime import date, timedelta

session = lt.session(lt.fingerprint("UT", 3, 4, 5, 0), flags=0)

session.listen_on(6881, 6891)

session.add_extension('ut_metadata')

session.add_extension('ut_pex')

session.add_extension('smart_ban')

session.add_extension('metadata_transfer')

session_save_filename = "/magnet2torrent/magnet_to_torrent_daemon.save_state"