repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

transformers | 18,658 | closed | Update run_clm_flax.py from single TPU worker to multiple TPU workers | The current code only works on a single TPU worker. If there are multiple TPU workers, the data needs to be split across the workers first and then sharded to the local devices. The same issue applies to T5 language modeling with Flax: https://github.com/google/flax/discussions/2017
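A minimal sketch of the two-level split described above, written in plain Python so it runs without a TPU. The helper names `split_for_host` and `shard_for_local_devices` are hypothetical and are not taken from `run_clm_flax.py`; in a real JAX program the host index, host count, and local device count would typically come from `jax.process_index()`, `jax.process_count()`, and `jax.local_device_count()`.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_for_host(batch: Sequence[T], host_index: int, host_count: int) -> List[T]:
    """Return the contiguous slice of the global batch that belongs to one host."""
    if len(batch) % host_count != 0:
        raise ValueError("global batch size must be divisible by the number of hosts")
    per_host = len(batch) // host_count
    start = host_index * per_host
    return list(batch[start:start + per_host])


def shard_for_local_devices(host_batch: Sequence[T], local_device_count: int) -> List[List[T]]:
    """Cut a per-host batch into one equally sized chunk per local device."""
    if len(host_batch) % local_device_count != 0:
        raise ValueError("per-host batch size must be divisible by the local device count")
    per_device = len(host_batch) // local_device_count
    return [
        list(host_batch[i * per_device:(i + 1) * per_device])
        for i in range(local_device_count)
    ]


# Illustrative numbers only: 2 hosts with 4 local devices each, global batch of 16.
global_batch = list(range(16))
host_batch = split_for_host(global_batch, host_index=1, host_count=2)
shards = shard_for_local_devices(host_batch, local_device_count=4)
```

Each host only touches its own slice, so no example is processed twice across workers.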

# What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 08-17-2022 00:50:41 | 08-17-2022 00:50:41 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_18658). All of your documentation changes will be reflected on that endpoint.<|||||>WDYT @sanchit-gandhi?<|||||>Hey @congyingxia and sorry for the delay!

We try to keep these examples as simple as possible. In that spirit, we have limited the example scripts to single host training (v3-8). Unfortunately, scaling up to multi-host training is non-trivial: it requires another driver VM to keep the TPU hosts synced and execute commands across TPUs in parallel. For this reason, we have currently omitted multi-host training/inference, and instead focussed on single host training. If you're interested in running training/inference on a pod, I would suggest looking at the repo https://github.com/huggingface/bloom-jax-inference, which details how inference for LLMs can be scaled up to an arbitrary number of TPU devices with MP + DP.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 18,657 | closed | Fix for issue #12182 to ensure that the tutorial for zero shot distillation works | # What does this PR do?

The code for training models via zero shot distillation was breaking because the .map() function was removing the _labels_ field from the dataset object. This PR fixes the issue by changing the way the tokenizer is called via the map() function.

Fixes [https://github.com/huggingface/transformers/issues/12182](https://github.com/huggingface/transformers/issues/12182)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [X] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [X] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed.

@patil-suraj

@VictorSanh


| 08-16-2022 21:17:28 | 08-16-2022 21:17:28 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_18657). All of your documentation changes will be reflected on that endpoint.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 18,656 | closed | BigBird inference: same input data gives different outputs | ### System Info

- pytorch==1.10.2

- transformers==4.20.1

- python=3.9.7

-

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [x] My own task or dataset (give details below)

### Reproduction

```

from transformers import (BigBirdForQuestionAnswering, BigBirdTokenizer)
import torch

device = "cuda:0" if torch.cuda.is_available() else "cpu"
tokenizer = BigBirdTokenizer.from_pretrained("model path ")
model = BigBirdForQuestionAnswering.from_pretrained("model path")

def question_answer(model,tokenizer,text, question, handle_impossible_answer=False):
    encoded_inputs = tokenizer(question, text, return_tensors="pt").to(device)
    start_positions = torch.tensor([1]).to((device), non_blocking=True)
    end_positions = torch.tensor([3]).to((device), non_blocking=True)
    bb_model = model.to((device), non_blocking=True)
    with torch.no_grad():
        outputs = bb_model(
            **encoded_inputs,
            start_positions=start_positions,
            end_positions=end_positions,
            output_attentions=True)
    print(outputs)

##note this is a sample input chunks:
chunks = ["Scikit-learn is a free software machine learning library for the Python programming language.",
          "It features various classification, regression and clustering algorithms including support-vector machines", "sklearn is a lib"]
question = "what is sklearn?"

for chunk in chunks:
    print(question_answer(chunk, question,handle_impossible_answer=False))
    print("-----------------------------------------------------")
    print(question_answer(chunk, question,handle_impossible_answer=False))
    print("------xxxxxxx-----------------------------------------------")

```

### Expected behavior

For the exact same input data, the output tensors should be the same on every run.

However, the tensors in the 2nd, 3rd, and later runs differ from those in the 1st run.

run1 :

```

BigBirdForQuestionAnsweringModelOutput(loss=tensor(1000012.9375), start_logits=tensor([[-1.3296e+00, -1.0000e+06, -1.0000e+06, ..., -1.1439e+01,

-1.0714e+01, -1.0375e+01]]), end_logits=tensor([[-3.0393e+00, -1.0000e+06, -1.0000e+06, ..., -8.3824e+00,

-7.1354e+00, -5.7355e+00]]), pooler_output=None, hidden_states=None, attentions=(tensor([[[[1.7520e-01, 3.3716e-03, 1.7215e-03, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[3.4175e-01, 4.7108e-02, 2.2092e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[6.5068e-02, 6.3478e-01, 1.1994e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...,

[3.0409e-03, 6.9234e-05, 1.2098e-04, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[6.5139e-03, 5.8579e-05, 4.8735e-05, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[1.9445e-02, 2.0696e-04, 2.2188e-04, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00]],

[[2.0401e-02, 9.3263e-04, 1.9891e-03, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[1.7520e-02, 1.4839e-02, 1.2475e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[7.2214e-02, 3.1718e-02, 1.0765e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...,

[2.3348e-03, 6.1570e-04, 8.4744e-04, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...

[9.8621e-02, 1.7792e-06, 4.8031e-06, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00]]]])))

```

run 2:

```

BigBirdForQuestionAnsweringModelOutput(loss=tensor(1000012.9375), start_logits=tensor([[-1.3296e+00, -1.0000e+06, -1.0000e+06, ..., -1.1439e+01,

-1.0714e+01, -1.0375e+01]]), end_logits=tensor([[-3.0393e+00, -1.0000e+06, -1.0000e+06, ..., -8.3824e+00,

-7.1354e+00, -5.7355e+00]]), pooler_output=None, hidden_states=None, attentions=(tensor([[[[1.7520e-01, 3.3716e-03, 1.7215e-03, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[3.4175e-01, 4.7108e-02, 2.2092e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[6.5068e-02, 6.3478e-01, 1.1994e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...,

[3.0409e-03, 6.9234e-05, 1.2098e-04, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[6.5139e-03, 5.8579e-05, 4.8735e-05, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[1.9445e-02, 2.0696e-04, 2.2188e-04, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00]],

[[2.0401e-02, 9.3263e-04, 1.9891e-03, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[1.7520e-02, 1.4839e-02, 1.2475e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[7.2214e-02, 3.1718e-02, 1.0765e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...,

[2.3348e-03, 6.1570e-04, 8.4744e-04, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...

[1.1374e-05, 2.7021e-06, 4.4213e-07, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00]]]])))

```

run 3:

```

BigBirdForQuestionAnsweringModelOutput(loss=tensor(1000012.9375), start_logits=tensor([[-1.3296e+00, -1.0000e+06, -1.0000e+06, ..., -1.1439e+01,

-1.0714e+01, -1.0375e+01]]), end_logits=tensor([[-3.0393e+00, -1.0000e+06, -1.0000e+06, ..., -8.3824e+00,

-7.1354e+00, -5.7355e+00]]), pooler_output=None, hidden_states=None, attentions=(tensor([[[[1.7520e-01, 3.3716e-03, 1.7215e-03, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[3.4175e-01, 4.7108e-02, 2.2092e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[6.5068e-02, 6.3478e-01, 1.1994e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...,

[3.0409e-03, 6.9234e-05, 1.2098e-04, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[6.5139e-03, 5.8579e-05, 4.8735e-05, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[1.9445e-02, 2.0696e-04, 2.2188e-04, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00]],

[[2.0401e-02, 9.3263e-04, 1.9891e-03, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[1.7520e-02, 1.4839e-02, 1.2475e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[7.2214e-02, 3.1718e-02, 1.0765e-02, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...,

[2.3348e-03, 6.1570e-04, 8.4744e-04, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...

[1.1374e-05, 2.7021e-06, 4.4213e-07, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00]]]])))

```

Can you please look into this @ydshieh?

| 08-16-2022 16:11:39 | 08-16-2022 16:11:39 | @NautiyalAmit Sure!<|||||>@NautiyalAmit The provided code snippet has several issues that prevent it from running.

The "model path " is not a valid model name on the Hub, and I don't know which one you tried.

The call `print(question_answer(chunk, question,handle_impossible_answer=False))` has missing arguments which gives

```bash

Traceback (most recent call last):

File "/home/yih_dar_huggingface_co/transformers/run_bigbird.py", line 31, in <module>

print(question_answer(chunk, question,handle_impossible_answer=False))

TypeError: question_answer() missing 2 required positional arguments: 'text' and 'question'

```

Could you fix the code snippet, please 🙏 . Thank you.<|||||>Please find the code with the correct args:

```
from transformers import (BigBirdForQuestionAnswering, BigBirdTokenizer)
import torch

device = "cuda:0" if torch.cuda.is_available() else "cpu"
tokenizer = BigBirdTokenizer.from_pretrained("model path ")
model = BigBirdForQuestionAnswering.from_pretrained("model path")
device = "cuda:0" if torch.cuda.is_available() else "cpu"
model = model.to((device), non_blocking=True)

def question_answer(text, question, handle_impossible_answer=False):
    encoded_inputs = tokenizer(question, text, return_tensors="pt").to(device)
    start_positions = torch.tensor([1]).to((device), non_blocking=True)
    end_positions = torch.tensor([3]).to((device), non_blocking=True)
    # self.model.eval()
    bbmodel = model.to((device), non_blocking=True)
    with torch.no_grad():  # reduce memory consumption
        outputs = bbmodel(
            **encoded_inputs,
            start_positions=start_positions,
            end_positions=end_positions,
            output_attentions=True)
    print(outputs)

##note this is a sample input chunks:
chunks = ["Scikit-learn is a free software machine learning library for the Python programming language.",
          "It features various classification, regression and clustering algorithms including support-vector machines", "sklearn is a lib"]
question = "what is sklearn?"

for chunk in chunks:
    print(question_answer(chunk, question,handle_impossible_answer=False))
    print("-----------------------------------------------------")
    print(question_answer(chunk, question,handle_impossible_answer=False))
    print("------xxxxxxx-----------------------------------------------")
```

<|||||>Thanks @NautiyalAmit! The following 2 lines would still fail. Could you specify the exact checkpoint name you used? Thanks.

```python

tokenizer = BigBirdTokenizer.from_pretrained("model path ")

model = BigBirdForQuestionAnswering.from_pretrained("model path")

```<|||||>Hi @ydshieh , you can check on base checkpoint 0 from: https://console.cloud.google.com/storage/browser/bigbird-transformer/pretrain/bigbr_base?pageState=(%22StorageObjectListTable%22:(%22f%22:%22%255B%255D%22))&prefix=&forceOnObjectsSortingFiltering=false<|||||>@NautiyalAmit I would like to help if the code snippet is self-contained, which means it should be able to run directly. (In some special case, I agree some manual actions might be necessary).

Here the code snippet is incomplete (missing `model path `). Even the provided GCS link contains TF checkpoint files, which could not be loaded with the `.from_pretrained` method in `transformers` models.

As you found the issue, you must have something that could run on your side. Please try to help us debug more easily in order to investigate the issue you encountered.

I would guess you have used a model from [HuggingFace Hub](https://huggingface.co/models), with probably an official bigbird model checkpoint. But it would still be very nice if you can specify explicitly in the code snippet. Thank you.

<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 18,655 | closed | Generate: deprecate the use of model `config` as a source of defaults | EDIT: Updated with the discussion up to [2022/08/20](https://github.com/huggingface/transformers/issues/18655#issuecomment-1221047772)

## Why?

A confusing part of `generate` is how the defaults are set. When a certain argument is not specified, we attempt to fetch it from the model `config` file. This makes `generate` unpredictable and hard to fully document (the default values change for each model), as well as a major source of issues :hocho:

## How?

We have the following requirements:

1️⃣ The existing behavior can’t be removed, i.e., we must be able to use the model `config.json` as a source of generation parameters by default;

2️⃣ We do need per-model defaults -- some models are designed to do a certain thing (e.g. summarization), which requires a specific generation configuration.

3️⃣ Users must have full control over generate, with minimal hidden behavior.

Ideally, we also want to:

4️⃣ Have separation of concerns and use a new `generate_config.json` to parameterize generation;

In short, the plan changes the paradigm from “non-specified `generate` arguments are overridden by the [model] configuration file” to “`generate` arguments will override the [generate] configuration file, which is always used”. With proper documentation changes and logging/warnings, the user will be aware of what's being set for `generate`.

### Step 1: Define a new generate config file and class

Similar to the model config, we want a `.json` file to store the generation defaults. The class itself can be a very simplified version of `PretrainedConfig`, also with functionality to load/store from the hub.
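A minimal sketch of what such a class could look like, assuming plain local JSON files. The class name `GenerateConfig`, its fields, and the `save`/`load` helpers are hypothetical illustrations and not the `transformers` API; Hub upload/download is deliberately left out.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class GenerateConfig:
    """Hypothetical container for generation defaults (illustrative fields only)."""

    max_length: int = 20
    num_beams: int = 1
    do_sample: bool = False
    temperature: float = 1.0
    top_k: Optional[int] = None

    def save(self, path: str) -> None:
        # Store every field as JSON so the file can live next to the model weights.
        with open(path, "w", encoding="utf-8") as f:
            json.dump(asdict(self), f, indent=2, sort_keys=True)

    @classmethod
    def load(cls, path: str) -> "GenerateConfig":
        # Unknown keys raise a TypeError, which surfaces typos in the file early.
        with open(path, encoding="utf-8") as f:
            return cls(**json.load(f))


# Round trip through a temporary directory standing in for a model repo.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "generate_config.json")
    GenerateConfig(max_length=128, num_beams=4).save(path)
    restored = GenerateConfig.load(path)
```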

### Step 2: Integrate loading generate config file in `.from_pretrained()`

The generation configuration file should be loaded when initializing the model with a `from_pretrained()` method. A couple of things to keep in mind:

1. There will be a new `kwarg` in `from_pretrained`, `generate_config` (or `generation_config`? Leaning toward the former as it has the same name as the function);

2. It will default to `generate_config.json` (contrary to the model `config`, which defaults to `None`). This will allow users to set this argument to `None`, to load a model with an empty generate config. Some users have requested a feature like this;

3. Because the argument can take a path, it means that users can store/load multiple generate configs if they wish to do so (e.g. to use the same model for summarization, creative generation, factual question-answering, etc) 🚀

4. Only models that can run `generate` will attempt to load it;

5. If there is no `generate_config.json` in the repo, it will attempt to initialize the generate configuration from the model `config.json`. This means that this solution will not change any `generate` behavior and will NOT need a major release 👼

6. To keep the user in the loop, log ALL parameters set when loading the generation config file. Something like the snippet below.

7. Because this happens at `from_pretrained()` time, logging will only happen at most once and will not be verbose.

```

`facebook/opt-1.3b` generate configuration loaded from `generate_config.json`. The following generation defaults were set:

- max_length: 20

- foo: bar

- baz: qux

```
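The fallback and logging behavior listed above could be sketched roughly as follows, assuming the model repo is a local directory. The function `load_generation_defaults` and the `GENERATION_KEYS` tuple are hypothetical; the real set of generation parameters is larger.

```python
import json
import logging
import os
import tempfile
from typing import Any, Dict

logger = logging.getLogger(__name__)

# Assumed subset of keys treated as generation parameters; illustrative only.
GENERATION_KEYS = ("max_length", "num_beams", "do_sample", "temperature", "top_k")


def load_generation_defaults(repo_dir: str, filename: str = "generate_config.json") -> Dict[str, Any]:
    """Prefer the dedicated file; otherwise extract generation keys from config.json."""
    dedicated = os.path.join(repo_dir, filename)
    if os.path.isfile(dedicated):
        source = filename
        with open(dedicated, encoding="utf-8") as f:
            defaults = json.load(f)
    else:
        source = "config.json"
        with open(os.path.join(repo_dir, source), encoding="utf-8") as f:
            model_config = json.load(f)
        defaults = {k: model_config[k] for k in GENERATION_KEYS if k in model_config}

    # Log every default once, at load time, so nothing is set silently.
    lines = [f"generate configuration loaded from `{source}`. The following generation defaults were set:"]
    lines += [f"- {key}: {value}" for key, value in defaults.items()]
    logger.info("\n".join(lines))
    return defaults


with tempfile.TemporaryDirectory() as repo:
    # Legacy repo: only config.json exists, so generation keys are pulled from it.
    with open(os.path.join(repo, "config.json"), "w", encoding="utf-8") as f:
        json.dump({"hidden_size": 768, "max_length": 142, "num_beams": 4}, f)
    from_model_config = load_generation_defaults(repo)

    # Once the dedicated file exists, it takes precedence over config.json.
    with open(os.path.join(repo, "generate_config.json"), "w", encoding="utf-8") as f:
        json.dump({"max_length": 20}, f)
    from_generate_config = load_generation_defaults(repo)
```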

### Step 3: Generate uses the generate config class internally

Instead of using the configuration to override arguments when they are not set, overwrite a copy of the generation config at `generate` time. I.e. instead of:

```

arg = arg if arg is not None else self.config.arg

...

```

do

```

generate_config = self.generate_config.copy()

if arg is not None:
    generate_config.arg = arg

...

```

This change has three main benefits:

1. We can improve the readability of the code, as we gain the ability to pass configs around. E.g. [this function](https://github.com/huggingface/transformers/blob/30992ef0d911bdeca425969d210771118a5cd1ac/src/transformers/generation_utils.py#L674) won't need to take a large list of arguments nor to bother with their initialization.

2. `generate` argument validation *for each type of generation* can be built in simple functions that don't need ~30 arguments as input 🙃

3. The three frameworks (PT/TF/FLAX) can share functionality like argument validation, decreasing maintenance burden.
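A toy sketch of the copy-then-override pattern from Step 3, under the assumption that the generation config is a simple dataclass. `ToyGenerationConfig` and `ToyModel` are hypothetical stand-ins, and `generate` here only returns the resolved config instead of decoding.

```python
import copy
from dataclasses import dataclass, fields
from typing import Any, Optional


@dataclass
class ToyGenerationConfig:
    """Hypothetical stand-in for the generate config class."""

    max_length: int = 20
    top_k: Optional[int] = None
    temperature: float = 1.0


class ToyModel:
    def __init__(self, generate_config: ToyGenerationConfig) -> None:
        self.generate_config = generate_config

    def generate(self, **kwargs: Any) -> ToyGenerationConfig:
        # Work on a copy so per-call arguments never leak into the stored defaults.
        config = copy.deepcopy(self.generate_config)
        valid = {f.name for f in fields(config)}
        for name, value in kwargs.items():
            if name not in valid:
                raise TypeError(f"unknown generate argument: {name}")
            if value is not None:
                setattr(config, name, value)
        # A real implementation would run decoding with `config` here.
        return config


model = ToyModel(ToyGenerationConfig(max_length=64))
first = model.generate(top_k=50)
second = model.generate(temperature=0.7)
```

Because each call starts from a fresh copy, the `top_k` passed in the first call does not carry over to the second one.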

### Step 4: Document and open PRs with the generation config file

Rewrite part of the documentation to explain that a generation config is ALWAYS used (regardless of having defaults loaded from the hub or not). Open Hub PRs to pull generate-specific parameters from `config.json` to `generate_config.json`

## Pros/Cons

Pros:

- Better awareness -- any `generate` default will be logged to the screen when loading a generate-compatible model;

- Full control -- the users can choose NOT to load generation parameters or easily load a set of options from an arbitrary file;

- Enables more readable `generate` code;

- Enables sharing `generate`-related code across frameworks;

- Doesn't need a major release.

Cons:

- Pulling the generate parameters into their own files won't happen everywhere, as merging the changes described in step 4 is not feasible for all models (e.g. due to unresponsive model owners);

- Logging loaded defaults may not be enough to stop issues related to the default values, as the logs can be ignored;

- Another config file (and related code) to maintain. | 08-16-2022 15:31:25 | 08-16-2022 15:31:25 | cc @patrickvonplaten <|||||>I like the idea of using a `use_config_defaults` a lot - think that's a great additional safety mechanism to ensure it's possible to keep backward compatibility.

Also we were thinking about the idea of having a `generation_config.json` file that can optionally be passed to `generate` by the user and that includes all the default values that are set in the config at the moment. This would also make it easier to possibly have multiple different generation configs.

Some models like `bart-large`: https://huggingface.co/facebook/bart-large/blob/main/config.json#L45 always have certain generation parameters enabled by default and IMO it would be a smoother transition to help the user extract a `generation_config.json` from `config.json` and then always pass this config if present in the repo to `generate(...)` **instead** of forcing the user to always pass all those arguments to generate.

With the config, we could do something like the following automatically:

- User runs model repo with `generate`. We detect that no `generation_config.json` is present and that default generation params are used from `config.json`

- We throw a warning that states "no generation config detected, we strongly advise you to run the following code snippet on your repo to create a `generate_config.json` file"

- We keep all the generation params in `config.json` though to keep backwards compatibility with `use_config_defaults`

- However if a `generation_config.json` is present we always use this and do not look into the config

- We have to make an exception with `max_length=20` because it's always set and we don't want to create a `generation_config.json` for all models

Also happy to jump on a call to brainstorm about this a bit!<|||||>Fair point! 👍

From the comment above, let's consider the updated requirements:

1. Until `v5`, the default behavior can’t change, i.e., we will use the model `config.json` as a source of defaults;

2. From `v5` onwards, the default behavior is to use `generate_config.json` as a source of defaults;

3. The transition should be as smooth as possible — the users should be able to anticipate this transition, so nothing changes when we release the new major version;

4. We want to use defaults (many models are designed to do a certain thing) while also enabling power users to have full control over `generate`.

______________________

A solution that fits all requirements is the ability to specify where the defaults should be loaded from, with default paths controlled by us. With the aid of a script to create the new generation config file from the existing model config file, the transition should be smooth and users can anticipate any change.

E.g. if we have a `generation_config_file` flag, defaulting to `None` and where a path in the model repo can be specified, then we could:

- Set `generation_config_file="config.json"`, which would mimic the existing default behavior (and would be the default behavior for now);

- Set `generation_config_file="generation_config.json"`, which would use the new config file for generation (which would be the default behavior in the future);

- Set `generation_config_file` to ANY generation config path, so that power users can have multiple configurations for the same model;

- Set `generation_config_file=False` (or other non-`None` falsy value) to not use any configuration at all.
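The four cases in the list above could be resolved with a small helper along these lines. This is only a sketch of the proposal as worded in this comment: the function name `resolve_generation_config_file` is hypothetical, and the `None` branch encodes the "default behavior for now" (falling back to `config.json`).

```python
from typing import Optional, Union

LEGACY_FILE = "config.json"  # current source of generation defaults


def resolve_generation_config_file(
    generation_config_file: Union[str, bool, None] = None,
) -> Optional[str]:
    """Map the flag to the file that should supply generation defaults, or None for no file."""
    if generation_config_file is None:
        # Flag not set by the user: keep today's behavior.
        return LEGACY_FILE
    if not generation_config_file:
        # False (or another non-None falsy value): use no configuration at all.
        return None
    if isinstance(generation_config_file, str):
        # Any explicit path in the model repo, e.g. one file per task.
        return generation_config_file
    raise TypeError("generation_config_file must be a path, None, or a falsy value")


default_source = resolve_generation_config_file()
disabled = resolve_generation_config_file(False)
future_default = resolve_generation_config_file("generation_config.json")
```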

We seem to need two warnings ⚠️ :

1. [Needed because in `v5` we will be defaulting to a new config file, which may not exist in a user's model repo, and the model may have generation parameters in its config] If the configuration file does not exist, fall back to `config.json` and warn about it. We can quickly scan `config.json` to avoid raising a warning if it doesn't contain any generation argument;

2. [Needed because the default behavior will still be to use values from a config, and many users are not aware of it] If `generation_config_file` is not specifically set by the user, a warning should be raised if the config replaces any argument. Many configs don't replace any value.

Both warnings can be avoided by specifying the `generation_config_file` argument. They may be a bit verbose, but I think verbosity (which can be shut down easily) is preferable to magic confusing behavior.

The `max_length=20` default (and other similar defaults) can be easily added -- `max_length = max_length if max_length is not None else 20` after attempting to load the configs. We can add them to the argument's documentation (see below).

__________________________________

🤔 The only issue I have with this approach is that it is hell to document (similar to the current approach). Having "this argument defaults to X or to `config.argument`" for all arguments' documentation line is verbose and confusing, and users need to be aware that the configuration files play an important role.

My suggestion here would be to make `generation_config_file` the second argument of `generate` (after `input_ids`), so that it becomes immediately clear that `generate` argument defaults can be set through a file. Then, I would remove further references to the config in the docs, relying on the warnings to remind the user of what's going on. I think it is clear by now that long docs don't avoid simple issues :(

WDYT?

(P.S.: will edit the issue after we settle on an approach :) )<|||||>Cool, I think this is going into a very nice direction! A couple more questions to think about:

- Do we really want a `generate_config_file` keyword argument for `generate(...)` ? For me it would be much more intuitive to just have `config: Optional[Dict]` as an argument. This would then mean it requires the user to do one more step for a specific config:

```python

generate_config = ...  # load generation config from path

model.generate(input_ids, config=generate_config)

```

- We could add a `config` argument to the init of `GenerationMixin` which would make backwards compatibility then very easy:

- `from_pretrained(...)` would load either a `generation_config.json` or, if not present, a `config.json`, and then set it as `self.generation_config = config`, so every generation model would have access to `self.generation_config`. In `generate` we could then add a `self.generate_config = config if config is not None else self.generate_config` (the default one) and then overwrite `self.generate_config` once more if the user passes generate args into `generate` directly (e.g. `model.generate(top_k=top_k)`).

- Overall I think we cannot really get around the fact that we need to store a config inside the model somewhere, because loading a config **upon calling generate** seems problematic to me. E.g. `model.generate(..., generate_config="config.json")` would have to load a config, which opens up too many problems with internet connection etc.

- What type should `generation_config` be? Just a `dict` or let's maybe create a class for it (similar to `BloomConfig`). Creating its own class probably also helps with documentation

-> What do you think? <|||||>@patrickvonplaten Agreed, the argument name is a bit too long 😅 However, if we decide to go the `GenerationMixin.__init__` route, we can't pick `config` -- `PreTrainedModel`, which inherits from `GenerationMixin`, uses a `config` argument for the model config. Perhaps `generation_config`? We could then do `.from_pretrained(foo, generation_config=bar)`.

I love the ideas you gave around the config:

1. if it is part of the `__init__` and if we always attempt to load the new file format before falling back to the original config, it actually means we don't need to do a major release to build the final version of this updated configuration handling! No need to change defaults with a new release at all ❤️ ;

2. The idea of "arguments write into a config that is always used" as opposed to "config is used when no arguments are passed" is much clearer to explain. We gain the ability to pass config files around (as opposed to tens of arguments), and it also opens the door to exporting generation configurations;

3. Despite the above, we need to be careful with the overwrites: if a user calls `model.generate(top_k=top_k)` and then `model.generate(temperature=temperature)`, `top_k` should be the original config's `top_k`. Copies of objects are needed;

4. Agreed, having all downloads/file paths in the same place is helpful.

Regarding `dict` vs `class` -- I'd go with `class` (or perhaps a simpler `dataclass`). Much easier to document and enforce correctness, e.g. check if the right arguments are being used with a certain generation type.
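The copy-on-override behaviour from point 3 can be illustrated with a minimal sketch. Everything here is hypothetical: `GenerationConfigSketch`, `ModelSketch`, and `with_overrides` are illustrative names, not part of `transformers`, and only three of the generation arguments are modelled.

```python
import copy
from dataclasses import dataclass, fields


@dataclass
class GenerationConfigSketch:
    """Hypothetical stand-in for the proposed generation config class."""

    max_length: int = 20
    top_k: int = 50
    temperature: float = 1.0

    def with_overrides(self, **kwargs):
        """Return a modified copy; the stored defaults are never mutated."""
        valid = {f.name for f in fields(self)}
        unknown = set(kwargs) - valid
        if unknown:
            # A class (rather than a dict) makes this kind of check easy.
            raise ValueError(f"Unknown generation arguments: {sorted(unknown)}")
        new_config = copy.deepcopy(self)
        for name, value in kwargs.items():
            setattr(new_config, name, value)
        return new_config


class ModelSketch:
    """Hypothetical model that stores a default generation config."""

    def __init__(self, generation_config=None):
        self.generation_config = generation_config or GenerationConfigSketch()

    def generate(self, generation_config=None, **kwargs):
        base = generation_config if generation_config is not None else self.generation_config
        config = base.with_overrides(**kwargs)
        return config  # a real implementation would run decoding with `config`
```

With this structure, calling `generate(top_k=5)` and then `generate(temperature=0.7)` leaves the second call with the original `top_k`, because each call works on its own copy.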

__________________________

It seems like we are in agreement. Are there more issues we can anticipate?<|||||>Very nice summary @gante thanks for writing this all down - I agree with all the above points!

@LysandreJik @sgugger and maybe @thomwolf could you take a quick look here? I think @gante and I have now an actionable plan for `generate()` and would be ready to open a PR.

Before starting the PR, it would be nice if you could check if you generally agree with our comments here so that we're not totally on a different page before opening such a big PR. The PR will then take some time and require discussion, but I think we have a clear vision of what we want now<|||||>@patrickvonplaten @LysandreJik @sgugger @thomwolf -- I took the liberty of updating the issue at the top with the plan that originated from the discussion here (and also to structure the whole thing in my head better) :)<|||||>Thanks for the write-up! I think this is a much welcome change that will tremendously improve the way we use `generate`.

Writing down some thoughts below.

- Very personal, but I think `generation_config` sounds more explicit. `generate` is very understandable by us because we know what is the "generate method", but "generation config" sounds so much clearer to me than "generate config".

- Would the generate config class be able to load from other models? i.e., could we load a generation config specific to `bart-large-cnn` in `t5-large`? Would we enforce model-specificity, or would we allow this to work? How would we handle model-specific attributes (maybe there aren't any, there seems to be only RAG that has its own `generate` method)?

- Could we store multiple generation configs in the same repo? How would you handle a model that can have several generation configurations, for example a model such as a prefix-LM that could do both translation and summarization with the same checkpoint?

The biggest work here will likely be education & documentation. I think this will already make things much clearer, but I suppose the much awaited generate method doc rework will be an absolute requirement after this refactor!<|||||>Agreed, the biggest issue is and will be education and documentation. Hopefully, this will make the process easier 🙏

- Regarding one or multiple generation config classes: there are two arguments in `generate` that are used with a limited number of (encoder-decoder) models, `forced_bos_token_id` and `forced_eos_token_id`. Additionally, there is one argument, `encoder_no_repeat_ngram_size`, that is only used in encoder-decoder models (and has no effect on decoder-only). The remaining **36** arguments are usable by all models. IMO, having a single class would make documentation (the key issue) much simpler, and model<>arguments verification can be done in the function (as is done at present).

- Regarding multiple configs in the same repo: Yes, that would be doable, according to the plan above, by specifying different `generation_config` files. But @LysandreJik raised a good point, as the name of the files containing the defaults for different tasks may not be immediately obvious to the users, which implies more documentation pain. Perhaps we can take the chance here to bring `generate` closer to `pipeline`, which we know is user-friendly -- in the `pipeline`, [specific config parameters are loaded for each task](https://github.com/huggingface/transformers/blob/051311ff66e7b23bfcfc42bc514c969517323ce9/src/transformers/pipelines/base.py#L783) (here's an [example of a config with task-specific parameters](https://huggingface.co/facebook/bart-large-cnn/blob/main/config.json#L55)). We could use the exact same structure with the new `generation_config` files, where all task-specific arguments can be set this way, and `generate` could gain a new `task` argument. That way, there would be a higher incentive to set task-specific parameters that would work across the HF ecosystem (`generate` and `pipeline` for now, perhaps more in the future).

```python
from transformers import AutoModelForSeq2SeqLM

# With the `task` parameter, it is trivial to share the parameters for some desired behavior.
# When loading the model, the existence of task-specific options would be logged to the user.
model = AutoModelForSeq2SeqLM.from_pretrained("...")
input_prompt = ...
task_tokens = model.generate(**input_prompt, task="my_task")
# There would be an exception if `my_task` is not specified in the generation config file.

```<|||||>The plan looks good to me, but the devil will be in the details ;-) Looking forward to the PRs actioning this!<|||||>Closing -- `generation_config` is now the source of defaults for `.generate()` |

transformers | 18,654 | closed | Update TF fine-tuning docs | This PR updates the fine-tuning sidebar tutorial with modern TF methods that were added in the most recent release. | 08-16-2022 15:16:23 | 08-16-2022 15:16:23 | _The documentation is not available anymore as the PR was closed or merged._<|||||>@stevhliu is there a better way to format a link to the `prepare_tf_dataset` docs [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_18654/en/training#loading-data-as-a-tfdatadataset) than the way I did it here? It prints the whole `TFPreTrainedModel.prepare_tf_dataset` text, which looks a bit ugly on the page!<|||||>Yes, I believe you can just squiggly it!

[`~TFPreTrainedModel.prepare_tf_dataset`]<|||||>@stevhliu Thank you for the suggestions! I'll make more edits to incorporate the other bits and ping you again for a final look.<|||||>@sgugger @stevhliu I finished incorporating your edits and did some other cleanup. I also replaced `to_tf_dataset` in the other fine-tuning pages. I didn't touch the translations, though - should I edit those too?<|||||>@sgugger I tried those edits but it looked a little odd because there was no separate header for the PyTorch section. I added a `Train` header to the whole thing with a brief intro, and then a `Train with PyTorch Trainer` header inside that block, which I think works a little better and makes it easier for people to find what they want in the sidebar. Let me know what you think!<|||||>@sgugger :facepalm: I knew I'd regret pinging you before waiting for the job to finish and checking it myself. Fixed! |

transformers | 18,653 | closed | Generate: validate `model_kwargs` on FLAX (and catch typos in generate arguments) | # What does this PR do?

FLAX version of https://github.com/huggingface/transformers/pull/18261

Adds model_kwargs validation to FLAX generate, which also catches typos in the arguments. See the PR above for more details and an example of the error message users will see. | 08-16-2022 14:42:31 | 08-16-2022 14:42:31 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 18,652 | closed | Add cross entropy loss with stable custom gradient | # What does this PR do?

This PR adds Flax code for a cross entropy loss calculation with an additional term to stabilize gradients for bfloat16 training.

The loss function is authored by the T5X Authors (https://github.com/google-research/t5x/blob/90d74fa703075d8b9808ae572602bc48759f8bcc/t5x/losses.py#L25)

Also add 'z_loss' as training argument to the T5 Flax pre-training script.

If z_loss > 0, then an auxiliary loss equal to z_loss*log(z)^2

will be added to the cross entropy loss (z = softmax normalization constant).

The two uses of z_loss are:

1. To keep the logits from drifting too far from zero, which can cause

unacceptable roundoff errors in bfloat16.

2. To encourage the logits to be normalized log-probabilities.
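As a rough illustration of the auxiliary term described above, here is a minimal pure-Python sketch for a single example. It is not the T5X implementation (which works on batched arrays and defines a custom gradient); the function name `cross_entropy_with_z_loss` is an assumption made for this example.

```python
import math


def cross_entropy_with_z_loss(logits, target_index, z_loss=0.0):
    """Cross entropy for one example plus the auxiliary term z_loss * log(z)**2.

    `z` is the softmax normalization constant, i.e. sum(exp(logit)).
    """
    max_logit = max(logits)
    # log(z), computed with the log-sum-exp trick for numerical stability.
    log_z = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
    cross_entropy = log_z - logits[target_index]
    return cross_entropy + z_loss * log_z**2
```

Shifting all logits by a constant leaves the plain cross entropy unchanged but increases `log(z)`, so the auxiliary term penalizes logits that drift away from zero. When the logits are already normalized log-probabilities, `log(z)` is zero and the penalty vanishes.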

While the z_loss function is only added to the t5 flax pretraining script, this loss function might be interesting for

other flax pre-training scripts. I did not test this.

Finally, there is a (currently unused) function `compute_weighted_cross_entropy` with z_loss and label smoothing,

which might be useful for other flax training scripts as well.

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [*] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [*] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

No but I tested with the t5 flax norwegian script, bfloat16 and z_loss set to 1e-4, the setting used in t5 gin configs.

In my own tests I've seen the following consistently:

Without z_loss and bfloat16, the loss will either diverge or converge on a higher plateau than training with float32.

With z_loss and bfloat16, set to 1e-4, loss curves almost match curves with float32 training.

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

@patrickvonplaten, @patil-suraj

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

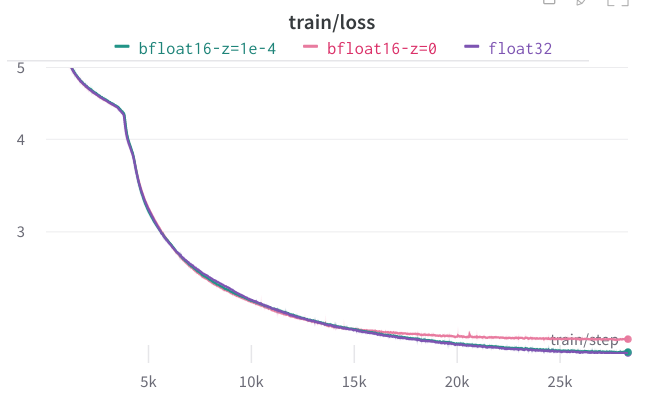

| 08-16-2022 14:32:43 | 08-16-2022 14:32:43 | float32 compared with bfloat16 without and with z_loss

<|||||>The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_18652). All of your documentation changes will be reflected on that endpoint.<|||||>Hey @yhavinga,

Thanks a lot for your PR. Could we maybe add this to the `examples/research_folder` instead of the official examples?

The reason is that we won't have time to maintain this example and we would need to check those loss curves on more than just Norwegian.

Would that be ok for you? <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored.<|||||>Apologies for the long delay.

In the meantime I've noticed that the term added to the loss (z_loss * jax.lax.square(log_z)) might in fact be (similar to) L2 regularization, and that this kind of regularization might already be available through Optax Adafactor's weight_decay_rate parameter. I currently do not have access to TRC so cannot test this, but thought it might be interesting to others training with bfloat16 and run_t5_mlm_flax.py if they hit this page. |

transformers | 18,651 | closed | Generate: validate `model_kwargs` on TF (and catch typos in generate arguments) | # What does this PR do?

TF version of https://github.com/huggingface/transformers/pull/18261

Adds `model_kwargs` validation to TF `generate`, which also catches typos in the arguments. See the PR above for more details and an example of the error message users will see.

Since TF had no dedicated file for `generate` tests, I took the liberty to create it and move some existing tests there (>70% of the diff is due to moving things around :) ). The test for this new check was also added there. | 08-16-2022 13:28:04 | 08-16-2022 13:28:04 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 18,650 | closed | Allow users to force TF availability | We have a user report that with custom Tensorflow builds and package names that `_tf_available` can return `False` even if `import tensorflow` succeeds, because the user's package name isn't in the [allowed list](https://github.com/huggingface/transformers/blob/02b176c4ce14340d26d42825523f406959c6c202/src/transformers/utils/import_utils.py#L63L75).

This is quite niche, so I don't want to do anything that could affect other users and workflows, but I added a `FORCE_TF_AVAILABLE` envvar that will skip version checks and just make sure TF is treated as available. @sgugger WDYT, or is there a better solution?
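A minimal sketch of how such an override could behave is shown below. It is an illustration only, not the code in `import_utils.py`: the function name `is_tf_available_sketch`, the set of accepted truthy values, and the simplified module lookup are all assumptions.

```python
import importlib.util
import os

# Assumed set of truthy strings; the real list may differ.
TRUE_VALUES = {"1", "ON", "YES", "TRUE"}


def is_tf_available_sketch(candidate_packages=("tensorflow",)):
    """Hypothetical availability check with an environment-variable override."""
    if os.environ.get("FORCE_TF_AVAILABLE", "").upper() in TRUE_VALUES:
        # Skip all package-name and version checks and trust the user.
        return True
    # Simplified lookup: only checks whether a module with that name can be found.
    return any(importlib.util.find_spec(name) is not None for name in candidate_packages)
```

Because the override is checked first, a custom build under an unlisted package name is treated as available, while the default behaviour for everyone else is unchanged.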

Fixed #18642 | 08-16-2022 12:28:29 | 08-16-2022 12:28:29 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Ah, additional nit: I'd document this somewhere.<|||||>@LysandreJik I think a lot of these envvars aren't documented anywhere - I can't see any documentation for USE_TF or USE_TORCH! Maybe we should make a separate docs PR with a list of envvars that `transformers` uses?<|||||>That would be fantastic :)

Thanks for your contribution, merging! |

transformers | 18,649 | closed | When resuming from checkpoint with Trainer using a streamed dataset, use the Datasets API to skip | ### Feature request

Huggingface Datasets has a feature where you can instantiate datasets in streaming mode, so you don't have to download the whole thing onto your machine. The API has a skip function. The Transformers Trainer doesn't use this, it just iterates through all the batches to be skipped.

I propose Trainer checks whether the given dataset is a Datasets one in streaming mode, and if so, it uses the skip function.

### Motivation

I've been using the C4 dataset in streaming mode because of its size. Whenever I resume from a checkpoint, it takes a long time to skip. Around an hour for 200k batches. With this change, it should be effectively instant, which would save me a lot of time.

### Your contribution

I can make the change if no one else wants to. In which case, I'd like to be assured that the change will be reviewed and merged in a reasonable timeframe rather than being lost in the sea of pull requests. | 08-16-2022 10:48:22 | 08-16-2022 10:48:22 | WDYT @lhoestq?<|||||>Unfortunately the `skip` function does the same. Though if you call `skip` before `map` it won't apply the preprocessing on the examples to skip and save time.

Therefore I don't think using `skip` would have a big impact here<|||||>Thanks for your insight @lhoestq . Is there a technical reason the function is implemented that way? I assume random access is supported, given that shuffling of enormous datasets is allowed and fast.<|||||>Random access is not supported for streaming datasets. Shuffling is approximated by shuffling the order of the dataset shards and using a shuffle buffer; see the documentation here: https://huggingface.co/docs/datasets/v2.4.0/en/stream#shuffle<|||||>Would it be possible to skip whole shards when a large number of samples need to be skipped?<|||||>In the general case we don't know in advance how many examples there are per shard (it depends on the data file format). In many cases we would need some extra metadata somewhere that says how many examples each shard contains.

For example the C4 dataset is made out of gzipped JSON Lines files - you don't know in advance how many examples each shard contains, because you need to uncompress the data and count the EOL.

For certain file formats like Parquet or Arrow however, the number of examples is known for free, as metadata included in the file itself. So maybe for those specific formats we could do something<|||||>Ah, this needs deeper changes than I thought then. Still, the metadata would be nice to have. It could even be generated lazily when people stream the dataset, to avoid a big upfront cost. Alternatively, the streamed dataset client could locally keep a cache of how many samples each shard it's iterated through contains. I'd prefer the former, so it doesn't matter if the cache is cleared or you switch machines.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |
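The shard-skipping idea raised in this thread could be sketched as follows, assuming per-shard example counts were available as metadata (which, as noted above, is not generally the case). The helper `plan_skip` is hypothetical and not part of the Datasets API.

```python
def plan_skip(shard_sizes, num_to_skip):
    """Return (shard_index, offset) of the first example to read after skipping.

    `shard_sizes` is an assumed list with the number of examples in each shard.
    Whole shards are skipped without being read; only `offset` examples in the
    returned shard still have to be iterated over.
    """
    remaining = num_to_skip
    for index, size in enumerate(shard_sizes):
        if remaining < size:
            return index, remaining
        remaining -= size
    # Every example was skipped.
    return len(shard_sizes), 0
```

For instance, with shards of 100, 50, and 200 examples, skipping 120 examples means reading starts in the second shard after 20 examples, so the first shard never needs to be downloaded or decompressed.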

transformers | 18,648 | closed | TF: Fix generation repetition penalty with XLA | # What does this PR do?

There was a dynamic shape being fetched as a static shape, causing issues from the 2nd generation iteration.

Fixes #18630 | 08-16-2022 10:34:17 | 08-16-2022 10:34:17 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 18,647 | closed | Fix cost condition in DetrHungarianMatcher and YolosHungarianMatcher to allow zero-cost | # What does this PR do?

Fixes costs condition in DetrHungarianMatcher and YolosHungarianMatcher. In https://github.com/huggingface/transformers/pull/16720 a bug was introduced while switching from asserts to conditions. Currently, any zero-cost will result in a ValueError:

```python

if class_cost == 0 or bbox_cost == 0 or giou_cost == 0:
    raise ValueError("All costs of the Matcher can't be 0")

```
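The snippet above raises as soon as any single cost is zero. Judging by the error message, the intended check is presumably that the costs are not all zero at once; a minimal sketch of that condition, using a hypothetical standalone helper `validate_matcher_costs`, could look like this:

```python
def validate_matcher_costs(class_cost, bbox_cost, giou_cost):
    """Hypothetical helper: reject only the case where every cost is zero."""
    if class_cost == 0 and bbox_cost == 0 and giou_cost == 0:
        raise ValueError("All costs of the Matcher can't be 0")
```

With `and`, a configuration such as `class_cost=1, bbox_cost=0, giou_cost=0` is accepted, which allows individual zero costs.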

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

@LysandreJik @sgugger based on reviewers of previous PR https://github.com/huggingface/transformers/pull/16720.

| 08-16-2022 09:41:41 | 08-16-2022 09:41:41 | _The documentation is not available anymore as the PR was closed or merged._<|||||>This looks reasonable given the error message! cc @NielsRogge |

transformers | 18,646 | closed | [bnb] Small improvements on utils | # What does this PR do?

Fixes a small typo in `bitsandbytes.py`, should address https://github.com/huggingface/blog/pull/463#discussion_r946067141

I will have to test it first and mark it as ready for review! | 08-16-2022 09:07:26 | 08-16-2022 09:07:26 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Can confirm the tests pass!<|||||>so will there always be just one module not to convert?

won't it be safer to have modules instead and work with the list?<|||||>I have proposed a small refactoring that includes:

- checking the list of modules to not convert instead of a single value.

- changing an error message as it confused some users. Check: https://github.com/TimDettmers/bitsandbytes/issues/10

The bnb slow tests are passing with this fix!<|||||>From https://github.com/huggingface/transformers/issues/18660 I also just added a commit to support having a custom list of the keys to ignore <|||||>Thanks a lot @stas00 !

There is no rush at all for this PR, we can definitely wait for @sgugger before moving forward with it <|||||>Can confirm the bnb slow tests are passing with the proposed fixes! Would love to have a final round of review 💪

cc @sgugger @stas00 <|||||>Can confirm the slow tests pass after rebasing on `main`, will merge once it's green! 🟢 |

transformers | 18,645 | closed | [BLOOM] Update doc with more details | # What does this PR do?

Addressing https://huggingface.co/bigscience/bloom/discussions/86

I think that we should add the full list of the trained languages on the documentation so that we can refer to that whenever any user will have any question related to the trained languages

cc @ydshieh @Muennighoff

| 08-16-2022 09:05:00 | 08-16-2022 09:05:00 | cc @cakiki <|||||>_The documentation is not available anymore as the PR was closed or merged._<|||||>I would remove it, as it doesn't matter for the architecture, which these docs explain afaict? It's just an artefact of the data the models are trained on, hence it's already on the pretrained model & dataset READMEs. If someone were to release a BLOOM architecture model trained on different languages, this would be confusing imo.

Also should probably say `Pre-trained BLOOM models were officially released in the following sizes:`, as theoretically it's available in whatever version/size someone wants, just need to train it from scratch

<|||||>Not sure if the model doc is restricted to the architecture.

- `T5` has `## Example scripts` section

- Some models include `training` and `generation` sections, for example, `t5` or `m2m_100`

- `Marian` has a `## Naming` section

But I agree that we can probably just have something similar to `Marian` doc:

```

Note: The list of languages used in training can be found [here](link to model card page).

```<|||||>Okay I agree with both points!

IMO we can just specify that this list of languages is only relevant for bloom models stated on the doc, and for custom bloom models one should refer to the corresponding model card.

<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 18,644 | closed | Change scheduled CIs to use torch 1.12.1 | # What does this PR do?

To align with CircleCI tests. | 08-16-2022 07:59:48 | 08-16-2022 07:59:48 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 18,643 | closed | `AttributeError: 'BertTokenizer' object has no attribute 'tokens_trie' | ### System Info

Google colab

### Who can help?

Anyone

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

Hi,

I have trained a model with `transformers==4.9.1`.

Now I want to run this saved model with the new version of transformers and I get this error: `AttributeError: 'BertTokenizer' object has no attribute 'tokens_trie'`.

Looking at the transformers code, `tokens_trie` has been added, which was not in previous versions if I am correct. How can I solve this problem on my side? And is there any possibility that this compatibility problem can be handled in newer versions of transformers?

### Expected behavior

None | 08-16-2022 07:33:54 | 08-16-2022 07:33:54 | @Narsil is the one who knows this best.

This is added in the PR #13220<|||||>@roetezadi How did you solve this ? |

transformers | 18,642 | closed | _tf_available for customized built tensorflow | ### System Info

n/a

### Who can help?

@Rocketknight1

### Information

- [ ] The official example scripts

- [x] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

```

File "virtualenv_mlu/lib/python3.8/site-packages/transformers/pipelines/base.py", line 212, in infer_framework_load_model

raise RuntimeError(

RuntimeError: At least one of TensorFlow 2.0 or PyTorch should be installed. To install TensorFlow 2.0, read the instructions at https://www.tensorflow.org/install/ To install PyTorch, read the instructions at https://pytorch.or

```

### Expected behavior

https://github.com/huggingface/transformers/blob/02b176c4ce14340d26d42825523f406959c6c202/src/transformers/utils/import_utils.py#L63L75

I built a tensorflow-xxu for our in-house accelerator and tried to run the transformers example.

I got the RuntimeError indicating that TF is not available.

Currently `_tf_available` has a hard-coded list of candidates.

I'm not sure if extending the existing list is a good idea.

Maybe adding some runtime flexibility would be better?

Thanks

Kevin | 08-16-2022 07:31:33 | 08-16-2022 07:31:33 | Hi @kevint324 I think it (extending the list ) is fine if you would work with a specific `transformers` version. But it would be a bit tedious if you want to use newer versions constantly.

cc @Rocketknight1 for the idea regarding `adding some runtime flexibility`.<|||||>Changed the tag to `Feature request` instead :-)<|||||>Hi @kevint324, I filed a PR that might resolve this, but I want to check with other maintainers that it's okay before I merge it. In the meantime, can you try it out? Just run `pip install git+https://github.com/huggingface/transformers.git@allow_force_tf_availability`, then set the environment variable `FORCE_TF_AVAILABLE=1` before running your code, and it should skip those checks now.<|||||>Yes, it works.

Thanks for the quick fix. |

transformers | 18,641 | open | Add TF VideoMAE | ### Feature request

Add the [VideoMAE](https://huggingface.co/docs/transformers/main/en/model_doc/videomae) model in TensorFlow.

### Motivation

There's an evident scarcity of SoTA and easy-to-use video models in TensorFlow. I believe having VideoMAE in TensorFlow would greatly benefit the community.

### Your contribution

I am willing to contribute the model. Please assign it to me.

@amyeroberts possible to assign this to me? | 08-16-2022 01:49:01 | 08-16-2022 01:49:01 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored.<|||||>Please reopen the issue as I am working on it. <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored.<|||||>It's taking more time as I am in a job switch. Please reopen it. I apologise for the inconvenience. |

transformers | 18,640 | closed | Finetune guide for semantic segmentation | This PR creates a finetune guide for semantic segmentation in the docs. Unlike previous finetune guides, this one will include:

* metrics for evaluation

* a section for how to use the model for inference

* an embedded Gradio demo

🚧 To do:

- [x] create section for inference

- [ ] create Gradio demo | 08-16-2022 01:16:17 | 08-16-2022 01:16:17 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 18,639 | closed | CLIP output doesn't match the official weight | ### System Info

I am using transformer 4.20.1.

I found that the official CLIP model outputs don't match the hugging face one.

### Who can help?

@NielsRogge @stevhliu

### Information

- [X] The official example scripts

- [ ] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

To install clip, run `pip install git+https://github.com/openai/CLIP.git`

```python

import torch
import clip
from PIL import Image
import requests

device = "cpu"
model, preprocess = clip.load("ViT-B/32", device=device)

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)
image = preprocess(image).unsqueeze(0).to(device)
text = clip.tokenize(["a photo of a cat", "a photo of a dog"]).to(device)

with torch.no_grad():
    clip_image_features = model.encode_image(image)
    clip_text_features = model.encode_text(text)
    logits_per_image, logits_per_text = model(image, text)
    probs = logits_per_image.softmax(dim=-1).cpu().numpy()

print("CLIP Label probs:", probs)

from transformers import CLIPProcessor, CLIPModel

model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

image = Image.open(requests.get(url, stream=True).raw)
inputs = processor(text=["a photo of a cat", "a photo of a dog"], images=image, return_tensors="pt", padding=True)

outputs = model(**inputs)
logits_per_image = outputs.logits_per_image
probs = logits_per_image.softmax(dim=1)

print("HF Label probs:", probs)

# Compare the text features of the two implementations.
print(clip_text_features.shape)
print(outputs.text_model_output.pooler_output.shape)
print((clip_text_features - outputs.text_model_output.pooler_output).abs().max())

assert torch.allclose(clip_text_features, outputs.text_model_output.pooler_output, atol=1e-5)

```

### Expected behavior

The feature and probability should be the same. | 08-15-2022 23:24:24 | 08-15-2022 23:24:24 | Hi @xvjiarui, actually the outputs of the original and HF implementations are the same, but you are comparing against the wrong tensor. Firstly, we can compare the code of the original and HF implementations (here we focus on the text features part).

```

# Original CLIP
def encode_image(self, image):
    return self.visual(image.type(self.dtype))

def encode_text(self, text):
    x = self.token_embedding(text).type(self.dtype)  # [batch_size, n_ctx, d_model]

    x = x + self.positional_embedding.type(self.dtype)
    x = x.permute(1, 0, 2)  # NLD -> LND
    x = self.transformer(x)
    x = x.permute(1, 0, 2)  # LND -> NLD
    x = self.ln_final(x).type(self.dtype)

    # x.shape = [batch_size, n_ctx, transformer.width]
    # take features from the eot embedding (eot_token is the highest number in each sequence)
    x = x[torch.arange(x.shape[0]), text.argmax(dim=-1)] @ self.text_projection

    return x

def forward(self, image, text):
    image_features = self.encode_image(image)
    text_features = self.encode_text(text)

    # normalized features
    image_features = image_features / image_features.norm(dim=1, keepdim=True)
    text_features = text_features / text_features.norm(dim=1, keepdim=True)

    # cosine similarity as logits
    logit_scale = self.logit_scale.exp()
    logits_per_image = logit_scale * image_features @ text_features.t()
    logits_per_text = logits_per_image.t()

    # shape = [global_batch_size, global_batch_size]
    return logits_per_image, logits_per_text

```

```

# HF CLIP
def forward(
    self,
    input_ids: Optional[torch.LongTensor] = None,
    pixel_values: Optional[torch.FloatTensor] = None,
    attention_mask: Optional[torch.Tensor] = None,
    position_ids: Optional[torch.LongTensor] = None,
    return_loss: Optional[bool] = None,
    output_attentions: Optional[bool] = None,
    output_hidden_states: Optional[bool] = None,
    return_dict: Optional[bool] = None,
) -> Union[Tuple, CLIPOutput]:
    # Use CLIP model's config for some fields (if specified) instead of those of vision & text components.
    output_attentions = output_attentions if output_attentions is not None else self.config.output_attentions
    output_hidden_states = (
        output_hidden_states if output_hidden_states is not None else self.config.output_hidden_states
    )
    return_dict = return_dict if return_dict is not None else self.config.use_return_dict

    vision_outputs = self.vision_model(
        pixel_values=pixel_values,
        output_attentions=output_attentions,
        output_hidden_states=output_hidden_states,
        return_dict=return_dict,
    )

    text_outputs = self.text_model(
        input_ids=input_ids,
        attention_mask=attention_mask,
        position_ids=position_ids,
        output_attentions=output_attentions,
        output_hidden_states=output_hidden_states,
        return_dict=return_dict,
    )

    image_embeds = vision_outputs[1]
    image_embeds = self.visual_projection(image_embeds)

    text_embeds = text_outputs[1]
    text_embeds = self.text_projection(text_embeds)

    # normalized features
    image_embeds = image_embeds / image_embeds.norm(p=2, dim=-1, keepdim=True)
    text_embeds = text_embeds / text_embeds.norm(p=2, dim=-1, keepdim=True)

    # cosine similarity as logits
    logit_scale = self.logit_scale.exp()
    logits_per_text = torch.matmul(text_embeds, image_embeds.t()) * logit_scale
    logits_per_image = logits_per_text.T

    loss = None
    if return_loss:
        loss = clip_loss(logits_per_text)

    if not return_dict:
        output = (logits_per_image, logits_per_text, text_embeds, image_embeds, text_outputs, vision_outputs)
        return ((loss,) + output) if loss is not None else output

    return CLIPOutput(
        loss=loss,
        logits_per_image=logits_per_image,
        logits_per_text=logits_per_text,
        text_embeds=text_embeds,
        image_embeds=image_embeds,
        text_model_output=text_outputs,
        vision_model_output=vision_outputs,
    )

```

As you can see, the output of the original CLIP `encode_text` method corresponds to `text_embeds = self.text_projection(text_embeds)` in the HF implementation, i.e. the projected embedding before normalization. You can modify your script like this to check it:

```

import torch
import clip
from PIL import Image
import requests
import numpy as np

device = "cpu"

# Original OpenAI CLIP
model, preprocess = clip.load("ViT-B/32", device=device)

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)
image = preprocess(image).unsqueeze(0).to(device)
text = clip.tokenize(["a photo of a cat", "a photo of a dog"]).to(device)

with torch.no_grad():
    clip_image_features = model.encode_image(image)
    clip_text_features = model.encode_text(text)

    logits_per_image, logits_per_text = model(image, text)
    org_probs = logits_per_image.softmax(dim=-1).cpu().numpy()

print("CLIP Label probs:", org_probs)  # one probability per text prompt

# HF CLIP (note: `model` now refers to the HF model)
from transformers import CLIPProcessor, CLIPModel

model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

image = Image.open(requests.get(url, stream=True).raw)
inputs = processor(
    text=["a photo of a cat", "a photo of a dog"],
    images=image,
    return_tensors="pt",
    padding=True,
)

outputs = model(**inputs)
logits_per_image = outputs.logits_per_image
hf_probs = logits_per_image.softmax(dim=1).detach().cpu().numpy()

print("HF Label probs:", hf_probs)  # one probability per text prompt

# The label probabilities of both implementations should match.
print(np.allclose(org_probs, hf_probs))

# `encode_text` should match the projected (not yet normalized) pooled text output.
print(
    torch.allclose(
        clip_text_features,
        model.text_projection(outputs.text_model_output.pooler_output).detach(),
        atol=1e-5,
    )
)

```<|||||>Hi @aRyBernAlTEglOTRO thanks for your reply.

I see. That makes sense. |

transformers | 18,638 | closed | Update run_clm_no_trainer.py and run_mlm_no_trainer.py | # What does this PR do?

Fixes an issue in selecting the `no_decay` parameters: we need to exclude "layer_norm.weight", not "LayerNorm.weight".

Fixes an issue where `resume_step` is not computed correctly when the user resumes training from a checkpoint with `gradient_accumulation_steps != 1`.
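
A minimal sketch of the `resume_step` arithmetic, assuming checkpoints are saved in folders named `step_{N}` where `N` counts optimizer updates rather than dataloader batches. The helper `resume_position` and its parameters are hypothetical and not part of the example scripts:

```python
def resume_position(checkpoint_name, gradient_accumulation_steps, batches_per_epoch):
    """Return (starting_epoch, resume_step), both measured in dataloader batches.

    Assumes `checkpoint_name` looks like "step_{N}", where N counts optimizer
    updates, each of which consumed `gradient_accumulation_steps` batches.
    """
    completed_updates = int(checkpoint_name.replace("step_", ""))
    # Convert optimizer updates back into dataloader batches.
    completed_batches = completed_updates * gradient_accumulation_steps
    starting_epoch = completed_batches // batches_per_epoch
    # Number of batches to skip inside the epoch where training resumes.
    resume_step = completed_batches - starting_epoch * batches_per_epoch
    return starting_epoch, resume_step


# 30 updates with 4 accumulation steps = 120 batches; with 100 batches per
# epoch, training resumes in epoch 1 after skipping 20 batches.
print(resume_position("step_30", 4, 100))  # (1, 20)
```

Without the multiplication by `gradient_accumulation_steps`, the same checkpoint would be read as 30 batches into epoch 0, so already-seen batches would be replayed.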

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

| 08-15-2022 21:49:16 | 08-15-2022 21:49:16 | _The documentation is not available anymore as the PR was closed or merged._<|||||>cc @muellerzr, would you like to take a look at this while Sylvain is away? |

transformers | 18,637 | closed | Update run_translation_no_trainer.py | # What does this PR do?

Fixes an issue in selecting the `no_decay` parameters: we need to exclude "layer_norm.weight", not "LayerNorm.weight".

Fixes an issue where `resume_step` is not computed correctly when the user resumes training from a checkpoint with `gradient_accumulation_steps != 1`.
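
The `no_decay` fix can be illustrated with a small sketch. The parameter names below are made up for illustration and `split_by_weight_decay` is a hypothetical helper. The point is that the substring match is case-sensitive, so for a model whose layer-norm modules are named `layer_norm`, the pattern `"LayerNorm.weight"` never matches:

```python
def split_by_weight_decay(param_names, no_decay):
    """Split parameter names into (decayed, not_decayed) by substring match."""
    decayed = [n for n in param_names if not any(nd in n for nd in no_decay)]
    not_decayed = [n for n in param_names if any(nd in n for nd in no_decay)]
    return decayed, not_decayed


# Illustrative names only; real models define their own.
names = [
    "encoder.block.0.attention.weight",
    "encoder.block.0.attention.bias",
    "encoder.block.0.layer_norm.weight",
]

# With the old pattern, the layer-norm weight still receives weight decay.
print(split_by_weight_decay(names, ["bias", "LayerNorm.weight"])[1])
# With the corrected pattern, it is excluded from weight decay.
print(split_by_weight_decay(names, ["bias", "layer_norm.weight"])[1])
```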

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

| 08-15-2022 21:23:27 | 08-15-2022 21:23:27 | _The documentation is not available anymore as the PR was closed or merged._<|||||>cc @muellerzr would you like to take a look at this while Sylvain is away?<|||||>@zhoutang776 I believe these two are the same, no? https://github.com/huggingface/transformers/pull/18638<|||||>> @zhoutang776 I believe these two are the same, no? #18638

Yes, I guess the other example files have the same problem, but I haven't checked any code besides these three files. <|||||>Okay! Will just merge this one then 😄 Thanks for the bugfix and nice find! |

transformers | 18,636 | open | Support output_scores in XLA TF generate | ### Feature request

Support output_scores in XLA TF generate.

### Motivation

Scores are critical and widely used in downstream applications of generation models, for example as confidence scores for thresholding or ranking. However, they are not yet supported in XLA TF generate.

### Your contribution

N/A | 08-15-2022 19:43:56 | 08-15-2022 19:43:56 | cc @gante @sgugger <|||||>Hey @rossbucky 👋 The support for returning scores with XLA is in our plans. Like other XLA-related changes, it requires rewriting that part of the code to work with static shapes, as opposed to lists of tensors that grow as generate runs.
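
To illustrate the static-shape constraint, here is a rough pure-Python sketch (no TensorFlow or XLA involved; `generate_scores_static` and the toy `toy_step_fn` are hypothetical). Instead of appending one score vector per step to a growing list, a buffer of fixed size `max_length` is allocated up front and filled in place:

```python
def generate_scores_static(step_fn, max_length, vocab_size):
    """Collect per-step scores in a buffer whose shape never changes."""
    # Pre-allocate [max_length, vocab_size]; unused rows stay at 0.0.
    scores = [[0.0] * vocab_size for _ in range(max_length)]
    for step in range(max_length):
        step_scores = step_fn(step)
        if step_scores is None:  # generation finished early
            break
        scores[step] = list(step_scores)  # write in place, no append
    return scores


def toy_step_fn(step):
    # Stand-in for a model forward pass: stop after two steps.
    return [float(step), float(step) + 0.5] if step < 2 else None


scores = generate_scores_static(toy_step_fn, max_length=4, vocab_size=2)
print(len(scores), scores[1], scores[3])  # 4 [1.0, 1.5] [0.0, 0.0]
```

The output always has `max_length` rows, however many steps actually ran, which is the property a compiled function needs. A real XLA implementation would also have to express the early exit without Python-level control flow.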