repo | number | state | title | body | created_at | closed_at | comments |
|---|---|---|---|---|---|---|---|

transformers | 21,974 | closed | [DETR, YOLOS] Fix device bug | # What does this PR do?

This PR fixes a device bug for DETR and YOLOS's `post_process_object_detection` methods, which currently give a device mismatch between CPU and CUDA when running the model on CUDA.

The PR also makes sure the postprocess methods are tested in the integration tests. | 03-06-2023 16:47:05 | 03-06-2023 16:47:05 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 21,973 | closed | GitHub Private Vulnerability reporting | ### Feature request

Enable Private vulnerability reporting in the GitHub repository, please.

https://docs.github.com/en/code-security/security-advisories/repository-security-advisories/configuring-private-vulnerability-reporting-for-a-repository

### Motivation

I may have identified a low-impact vulnerability, but I would like to report it privately via the usual channel and request a CVE in that case.

### Your contribution

I will submit the report once the feature is enabled. | 03-06-2023 16:13:51 | 03-06-2023 16:13:51 | cc @Michellehbn <|||||>Hi @Sim4n6, Thanks for reaching out to us! 🤗 We have a bug bounty program with HackerOne and would love for you to submit security vulnerability reports to https://hackerone.com/hugging_face. This is a private program so we will need to invite you. Do you happen to have an H1 username? Or you can send [email protected] an email and we'll send you an invite! <|||||>it is [Sim4n6](https://hackerone.com/sim4n6?type=user). No problem.<|||||>Invite sent! Thanks again! <|||||>Done. |

transformers | 21,972 | closed | Support for `Flax` Trainer | ### Feature request

A `Trainer` class for Flax, similar to the PyTorch and TensorFlow ones (the latter is being deprecated in v5).

### Motivation

The process of training HuggingFace models in `PyTorch` has become more accessible due to the availability of the `Trainer` class and extensive documentation and tutorials. Similarly, in `Tensorflow`, training models requires only a single line of code (`model.fit`), and it makes sense to deprecate the current trainer to avoid redundancy.

However, training `Flax` models currently requires boilerplate code similar to `PyTorch`, which a `Trainer` class would help eliminate. Making a Flax `Trainer` available would allow for really fast training on GPUs/TPUs and, with the support of weights conversion, one could instantly convert the model to `Tensorflow`/`PyTorch` for inference and deployment.

### Your contribution

I can submit the PR.

cc @sanchit-gandhi since it's related to flax. | 03-06-2023 16:08:17 | 03-06-2023 16:08:17 | This is also something we do not want to add or maintain for Flax, as researchers usually dislike Trainer classes very much.<|||||>Understood, thanks for such a quick reply :hugs: |

transformers | 21,971 | closed | No clear documentation for enabling padding in FeatureExtractionPipeline | ### System Info

- `transformers` version: 4.26.0

- Platform: Linux-4.19.0-23-cloud-amd64-x86_64-with-debian-10.13

- Python version: 3.7.12

- Huggingface_hub version: 0.12.0

- PyTorch version (GPU?): 1.13.1+cu116 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: <fill in>

### Who can help?

@Narsil

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

The documentation for the [FeatureExtractionPipeline](https://huggingface.co/docs/transformers/v4.26.1/en/main_classes/pipelines#transformers.FeatureExtractionPipeline) does not currently provide instructions on how to use the truncation and padding arguments.

These arguments can be passed in the `tokenize_kwargs` parameter, which is parsed by the [self._sanitize_parameters](https://github.com/huggingface/transformers/blob/v4.26.1/src/transformers/pipelines/feature_extraction.py#L58) method. While the `truncation` argument can also be passed as a separate keyword argument, the `padding` argument can only be recognized if it is included in `tokenize_kwargs`.

To improve clarity, the documentation should explicitly state that the `tokenize_kwargs` parameter exists for passing tokenizer arguments, and that only the `truncation` argument can be used as a separate keyword argument, while other tokenizer parameters should be included in `tokenize_kwargs`.
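
The behaviour described above can be pictured with a small stand-alone sketch. This is not the library's code: `sanitize_parameters` is a hypothetical, simplified function that only mirrors what this issue reports, namely that a top-level `truncation` is folded into the tokenizer arguments while a top-level `padding` is not.

```python
def sanitize_parameters(truncation=None, tokenize_kwargs=None, **other_kwargs):
    """Hypothetical stand-in for the pipeline's parameter handling (assumption, not the real method)."""
    tokenize_kwargs = dict(tokenize_kwargs or {})
    if truncation is not None:
        if "truncation" in tokenize_kwargs:
            raise ValueError("truncation was given both directly and in tokenize_kwargs")
        tokenize_kwargs["truncation"] = truncation
    # Anything else passed at the top level (e.g. padding) ends up in
    # other_kwargs and never reaches the tokenizer in this sketch.
    return tokenize_kwargs


top_level = sanitize_parameters(truncation=True, padding="max_length")
nested = sanitize_parameters(tokenize_kwargs={"padding": "max_length"})
print(top_level)  # {'truncation': True}
print(nested)     # {'padding': 'max_length'}
```

Under these assumptions, only the nested form carries `padding` through to the tokenizer, which matches the shapes reported in the reproduction.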

I can submit a PR to add the documentation if it sounds good to you!

### Expected behavior

The code below shows how padding should be used in FeatureExtractionPipeline.

```python

from transformers import AutoTokenizer, pipeline

example = "After stealing money from the bank vault, the bank robber was seen fishing on the Mississippi river bank."

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased", use_fast=False)

# No padding argument at all
pipeline_without_padding_as_an_argument = pipeline(
    "feature-extraction",
    model="bert-base-uncased",
    tokenizer=tokenizer,
    return_tensors=True,
)

# `padding` passed as a top-level keyword argument
pipeline_with_padding_as_an_argument = pipeline(
    "feature-extraction",
    model="bert-base-uncased",
    tokenizer=tokenizer,
    padding="max_length",
    return_tensors=True,
)

# `padding` passed inside `tokenize_kwargs`
pipeline_with_padding_in_kwarg = pipeline(
    "feature-extraction",
    model="bert-base-uncased",
    tokenizer=tokenizer,
    tokenize_kwargs={"padding": "max_length"},
    return_tensors=True,
)

print(
    pipeline_without_padding_as_an_argument(example).shape
)  # torch.Size([1, 22, 768])

print(
    pipeline_with_padding_as_an_argument(example).shape
)  # torch.Size([1, 22, 768]) padding = max_length not working

print(
    pipeline_with_padding_in_kwarg(example).shape
)  # torch.Size([1, 512, 768]) padding = max_length working

``` | 03-06-2023 15:12:33 | 03-06-2023 15:12:33 | I simplified a bit for future readers:

```python

from transformers import pipeline

example = "After stealing money from the bank vault, the bank robber was seen fishing on the Mississippi river bank."

pipeline_without_padding_as_an_argument = pipeline(
    "feature-extraction",
    model="bert-base-uncased",
    return_tensors=True,
)

pipeline_with_padding_in_kwarg = pipeline(
    "feature-extraction",
    model="bert-base-uncased",
    tokenize_kwargs={"padding": "max_length"},
    return_tensors=True,
)

```

Also please note that `padding: "max_length"` makes unnecessarily long tensors in most cases, slowing down the overall inference of your model.

I would strongly refrain from using it in a pipeline. `{"padding": True}` should be better.<|||||>That makes sense. Do we need to make it clear in the documentation how to use `tokenize_kwargs`?<|||||>PRs to make it clearer are welcome for sure! <|||||>@Narsil Submitted PR #22031 that adds the `tokenize_kwargs` definition in `FeatureExtractionPipeline`. Thanks!<|||||>Thanks, it looks great. |
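
To make the padding advice in the thread above concrete, here is a minimal, framework-free sketch of the two strategies. `pad_batch` is a hypothetical helper written for illustration, not a tokenizer API; it only assumes that `padding=True` pads to the longest sequence in the batch while `padding="max_length"` pads every sequence to a fixed length (512 here, matching the shape reported in the issue).

```python
def pad_batch(batch, padding, max_length=512, pad_id=0):
    """Pad lists of token ids (hypothetical helper, illustration only)."""
    if padding == "max_length":
        target = max_length                      # fixed length, regardless of the batch
    elif padding is True:
        target = max(len(seq) for seq in batch)  # longest sequence in this batch
    else:
        return [list(seq) for seq in batch]      # no padding requested
    return [list(seq) + [pad_id] * (target - len(seq)) for seq in batch]


batch = [list(range(22)), list(range(10))]
longest = pad_batch(batch, padding=True)
fixed = pad_batch(batch, padding="max_length")
print([len(seq) for seq in longest])  # [22, 22]
print([len(seq) for seq in fixed])    # [512, 512]
```

With the fixed length, the model processes 512 positions per input even when the longest real sequence has 22 tokens, which is the slowdown the comment warns about.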

transformers | 21,970 | closed | Unable to load a pretrained model | ### System Info

- `transformers` version: 4.18.0

- Platform: Linux-4.18.0-425.10.1.el8_7.x86_64-x86_64-with-glibc2.28

- Python version: 3.10.4

- Huggingface_hub version: 0.2.1

- PyTorch version (GPU?): 1.11.0 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: no

OSError: Can't load config for '~/Unixcoder/model/changesets_model'. If you were trying to load it from 'https://huggingface.co/models', make sure you don't have a local directory with the same name. Otherwise, make sure '~/Unixcoder/model/changesets_model' is the correct path to a directory containing a config.json file

I am trying to load a second model during the course of training the first model.

I am able to load the model the first time, but not the second time (even though all the config files are present).

I am trying to load the model downloaded from https://huggingface.co/microsoft/unixcoder-base/tree/main

### Who can help?

_No response_

### Information

- [X] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

1. load the model from downloaded version of https://huggingface.co/microsoft/unixcoder-base/tree/main

2. train the model on custom dataset

3. load a similar model that was pretrained on a different dataset

### Expected behavior

model is successfully loaded | 03-06-2023 14:42:22 | 03-06-2023 14:42:22 | Could you paste the code you are using?

```py

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("microsoft/unixcoder-base")

model = AutoModel.from_pretrained("microsoft/unixcoder-base")

```

works perfectly fine.<|||||>from transformers import (WEIGHTS_NAME, AdamW, get_linear_schedule_with_warmup,

RobertaConfig, RobertaModel, RobertaTokenizer)

tokenizer = RobertaTokenizer.from_pretrained(args.model_name_or_path)

config = RobertaConfig.from_pretrained(args.model_name_or_path)

model = RobertaModel.from_pretrained(args.model_name_or_path)

.............................

model = RobertaModel.from_pretrained('./changesets_model')

model = Model(model)

model.to(args.device)<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 21,969 | closed | Add check before int casting for PIL conversion | # What does this PR do?

Adds safeguards when images are converted to PIL images:

* Additional condition if inferring `do_rescale`

* Raise an error if values cannot be cast to `uint8`

The PIL library is used for resizing images in image processors. If not explicitly set, whether or not to rescale pixel values is inferred from the input type: if the input is float, the values are multiplied by 255. If the input image has values in the range 0-255 but is of floating type, these pixels are rescaled to values in [0, 65025]. This results in overflow errors when the array is cast to `uint8` [here](https://github.com/huggingface/transformers/blob/bc33fbf956eef62d0ba8d3cd67ee955ad5defcdb/src/transformers/image_transforms.py#L162) before converting to a `PIL.Image.Image`.
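
The arithmetic behind the overflow, and the kind of safeguard described above, can be sketched on a flat list of pixel values. This is a plain-Python illustration under stated assumptions, not the code from this PR: `infer_do_rescale` and `to_uint8_values` are hypothetical names, and the rule "rescale only floats that already lie in [0, 1]" is one possible reading of the additional condition.

```python
def infer_do_rescale(pixels):
    """Hypothetical rule: rescale only float values that already lie in [0, 1]."""
    return all(isinstance(p, float) for p in pixels) and all(0.0 <= p <= 1.0 for p in pixels)


def to_uint8_values(pixels):
    """Rescale if needed, then refuse values that do not fit in uint8."""
    if infer_do_rescale(pixels):
        pixels = [p * 255 for p in pixels]
    if any(p < 0 or p > 255 for p in pixels):
        raise ValueError("pixel values cannot be represented as uint8")
    return [int(p) for p in pixels]


float_0_255 = [0.0, 128.0, 255.0]
naive = [p * 255 for p in float_0_255]   # what dtype-only inference would do
print(max(naive))                        # 65025.0
print(to_uint8_values(float_0_255))      # [0, 128, 255]
print(to_uint8_values([0.0, 0.5, 1.0]))  # [0, 127, 255]
```

The first print shows where the 65025 upper bound comes from (255 * 255); the other two show that, with a range check in place, float images in either range end up as valid 8-bit values.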

Fixes #21915

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [x] Did you write any new necessary tests? | 03-06-2023 14:38:39 | 03-06-2023 14:38:39 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 21,968 | closed | [TF] Fix creating a PR while pushing in TF framework | # What does this PR do?

Fixes #21967, where models in TF can't push and open a PR. A test should probably be added | 03-06-2023 14:33:19 | 03-06-2023 14:33:19 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Yep, adding this<|||||>I had a question, the `create_pr` function parameter is not available in the corresponding `PyTorch` or `Flax` implementation, I was wondering if this is intended. <|||||>Not sure I follow, torch already has this parameter. |

transformers | 21,967 | closed | [TF] Can't open a pr when pushing | Creating a PR when uploading a model should work.

```python

>>> from transformers import FlaxT5ForConditionalGeneration, TFT5ForConditionalGeneration

>>> import jax.numpy as jnp

>>> model = FlaxT5ForConditionalGeneration.from_pretrained("./art/flan-ul2", dtype = jnp.bfloat16, from_pt = True)

>>> model.push_to_hub("google/flan-ul2", use_auth_token = "XXXXX",create_pr = True)

>>> del model

>>> model = TFT5ForConditionalGeneration.from_pretrained("./art/flan-ul2", from_pt = True)

>>> model.push_to_hub("google/flan-ul2", use_auth_token = "XXXXX",create_pr = True)

File "/home/arthur_huggingface_co/transformers/src/transformers/modeling_tf_utils.py", line 2986, in push_to_hub

self.create_model_card(**base_model_card_args)

TypeError: create_model_card() got an unexpected keyword argument 'create_pr'

```
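
The traceback above follows a common pattern: a keyword argument meant for one function is forwarded, via `**kwargs`, to another function that does not accept it. The sketch below reproduces that pattern with hypothetical, heavily simplified functions; the signatures are assumptions for illustration and are not the ones in `modeling_tf_utils.py`, nor is this necessarily how the fix was implemented.

```python
def create_model_card(output_dir, model_name):
    """Hypothetical, simplified signature: it does not know about `create_pr`."""
    return f"{output_dir}/README.md for {model_name}"


def push_to_hub_buggy(repo_id, **base_model_card_args):
    # `create_pr` is swallowed by **base_model_card_args and forwarded by mistake.
    return create_model_card(output_dir=repo_id, **base_model_card_args)


def push_to_hub_fixed(repo_id, create_pr=False, **base_model_card_args):
    # Naming `create_pr` explicitly keeps it out of the forwarded kwargs.
    card = create_model_card(output_dir=repo_id, **base_model_card_args)
    return card, create_pr


try:
    push_to_hub_buggy("org/model", model_name="m", create_pr=True)
except TypeError as err:
    print("buggy:", type(err).__name__)  # buggy: TypeError

print(push_to_hub_fixed("org/model", model_name="m", create_pr=True))
```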

| 03-06-2023 14:32:52 | 03-06-2023 14:32:52 | cc @gante |

transformers | 21,966 | closed | Use larger atol in `torch.allclose` for some tests | # What does this PR do?

Running CI against torch 2.0 (and therefore CUDA 11.7), some tests for `BridgeTowerModel` failed:

- test_disk_offload

- test_cpu_offload

- test_model_parallelism

With torch `1.13.1` (CUDA 11.6), the difference between `base_output` and `new_output` in these 3 tests is 0.0, whereas with torch `2.0` we get differences of `1e-7 ~ 3e-6`.

**Deep debugging reveals that the first non-zero difference occurs when `nn.MultiheadAttention` is called.**

This PR increases the atol to `1e-5` (the default one is `1e-8`) in `ModelTesterMixin`.

If we don't feel super good with this for all model tests, we can override these 3 tests in `BridgeTowerModelTest`.

(But when more models start using `nn.MultiheadAttention`, it's best to keep this larger value in the common testing file.)
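
Why a difference of `3e-6` fails with the default tolerance but passes with `atol=1e-5` follows from the closeness criterion, `|a - b| <= atol + rtol * |b|`. The sketch below re-implements that criterion in plain Python with the same default values as `torch.allclose` (`rtol=1e-5`, `atol=1e-8`); the sample values are made up for illustration.

```python
def allclose(a, b, rtol=1e-5, atol=1e-8):
    """Element-wise |a - b| <= atol + rtol * |b|, on plain Python sequences."""
    return all(abs(x - y) <= atol + rtol * abs(y) for x, y in zip(a, b))


base_output = [0.0, 0.25]   # illustrative values, not taken from the tests
new_output = [3e-6, 0.25]   # first entry differs by 3e-6

print(allclose(base_output, new_output))             # False
print(allclose(base_output, new_output, atol=1e-5))  # True
```

For outputs close to zero the relative term contributes almost nothing, so the absolute tolerance alone decides the comparison.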

| 03-06-2023 14:30:42 | 03-06-2023 14:30:42 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 21,965 | closed | [🛠️] Fix-whisper-breaking-changes | # What does this PR do?

Should fix the backward compatibility issue with `model.config.forced_decoder_ids = ...` and should help users who want to generate with timestamps.

Fixes #21937 and #21878 | 03-06-2023 10:20:34 | 03-06-2023 10:20:34 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Test are failing because we do not check the `model.config` updating and will look good! <|||||>The same should now be applied to both the `TF` and the `Flax` version as the overwriting of the `generate` function is also supported. Will open a follow up PR for these <|||||>For TF and flax #21334 |

transformers | 21,964 | closed | Add BridgeTowerForContrastiveLearning | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- text models: @ArthurZucker and @younesbelkada

- vision models: @amyeroberts

- speech models: @sanchit-gandhi

- graph models: @clefourrier

Library:

- flax: @sanchit-gandhi

- generate: @gante

- pipelines: @Narsil

- tensorflow: @gante and @Rocketknight1

- tokenizers: @ArthurZucker

- trainer: @sgugger

Integrations:

- deepspeed: HF Trainer: @stas00, Accelerate: @pacman100

- ray/raytune: @richardliaw, @amogkam

Documentation: @sgugger, @stevhliu and @MKhalusova

HF projects:

- accelerate: [different repo](https://github.com/huggingface/accelerate)

- datasets: [different repo](https://github.com/huggingface/datasets)

- diffusers: [different repo](https://github.com/huggingface/diffusers)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Maintained examples (not research project or legacy):

- Flax: @sanchit-gandhi

- PyTorch: @sgugger

- TensorFlow: @Rocketknight1

-->

| 03-06-2023 07:16:56 | 03-06-2023 07:16:56 | _The documentation is not available anymore as the PR was closed or merged._<|||||>@sgugger We have addressed all of your review in the latest commit. We have also added tests for BridgeTowerForContrastiveLearning. Would you please review the latest commit? We are looking forward to having this PR merged into main.

Thanks<|||||>Thanks for adding this model @abhiwand @tileintel ! Great to see it merged into the library :)

For the model tests, some of the configuration values in `BridgeTowerModelTester` result in large models being created and used in the test suite e.g. `vocab_size = 50265` set [here](https://github.com/huggingface/transformers/blob/bcc8d30affba29c594320fc80e4a4422fb850175/tests/models/bridgetower/test_modeling_bridgetower.py#L97), which results in periodic OOM errors in the CI runs.

Could you add a follow up PR for `BridgeTowerModelTester` and `BridgeTowerModelTest` to have smaller default values to create lighter tests? A good reference for this would be [CLIP](https://github.com/huggingface/transformers/blob/main/tests/models/clip/test_modeling_clip.py). Ideally we would also have a similar structure of test classes for the different modalities i.e. `BridgeTower[Text|Vision]ModelTest(er)`. |

transformers | 21,963 | closed | Fix bert issue | # What does this PR do?

This PR fixes a bug that a user can encounter while using generate and models that use gradient_checkpointing.

Fixes issue #21737 for Bert.


## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.(#21737)

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

@younesbelkada, @gante


| 03-06-2023 05:31:32 | 03-06-2023 05:31:32 | _The documentation is not available anymore as the PR was closed or merged._<|||||>@younesbelkada or @gante, Can anyone of you please help me with the error I am getting after running fix-copies. Running fix-copies made changes to multiple files(19) and now I am getting tests_torch, tests_torch_and_tf error on it.<|||||>Hi @saswatmeher

Thanks for the PR!

I would probably try:

1- `pip install --upgrade -e .["quality"]` and then run `make fix-copies`

Let us know if this works!<|||||>@younesbelkada It worked. Thanks! |

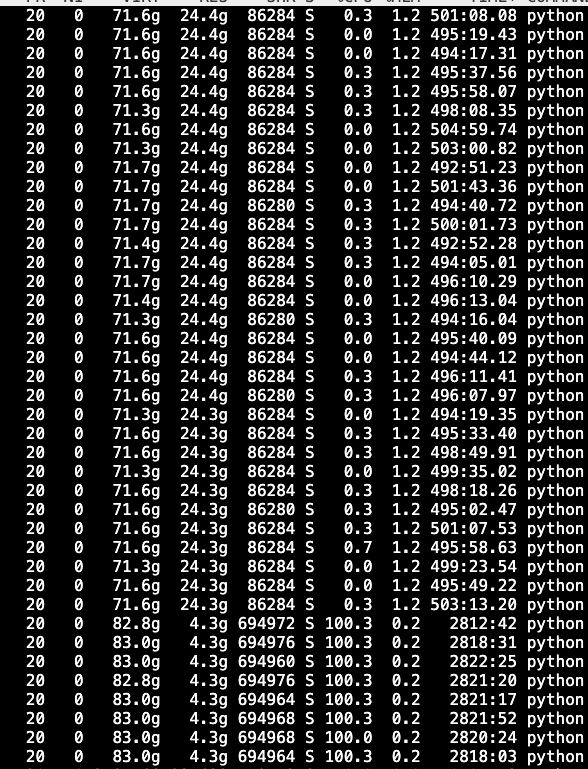

transformers | 21,962 | closed | use datasets streaming mode in trainer ddp mode cause memory leak | ### System Info

pytorch 1.11.0

py 3.8

cuda 11.3

transformers 4.26.1

datasets 2.9.0

### Who can help?

@sgugger

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

import os

import time

import datetime

import sys

import numpy as np

import random

import torch

from torch.utils.data import Dataset, DataLoader, random_split, RandomSampler, SequentialSampler,DistributedSampler,BatchSampler

torch.manual_seed(42)

from transformers import GPT2LMHeadModel, GPT2Tokenizer, GPT2Config, GPT2Model,DataCollatorForLanguageModeling,AutoModelForCausalLM

from transformers import AdamW, get_linear_schedule_with_warmup

hf_model_path ='./Wenzhong-GPT2-110M'

tokenizer = GPT2Tokenizer.from_pretrained(hf_model_path)

tokenizer.add_special_tokens({'pad_token': '<|pad|>'})

from datasets import load_dataset

gpus=8

max_len = 576

batch_size_node = 17

save_step = 5000

gradient_accumulation = 2

dataloader_num = 4

max_step = 351000*1000//batch_size_node//gradient_accumulation//gpus

#max_step = -1

print("total_step:%d"%(max_step))

import datasets

datasets.__version__

dataset = load_dataset("text", data_files="./gpt_data_v1/*",split='train',cache_dir='./dataset_cache',streaming=True)

print('load over')

shuffled_dataset = dataset.shuffle(seed=42)

print('shuffle over')

def dataset_tokener(example,max_lenth=max_len):

example['text'] = list(map(lambda x : x.strip()+'<|endoftext|>',example['text'] ))

return tokenizer(example['text'], truncation=True, max_length=max_lenth, padding="longest")

#return tokenizer(example[0], truncation=True, max_length=max_lenth, padding="max_length")

new_new_dataset = shuffled_dataset.map(dataset_tokener, batched=True, remove_columns=["text"])

print('map over')

configuration = GPT2Config.from_pretrained(hf_model_path, output_hidden_states=False)

model = AutoModelForCausalLM.from_pretrained(hf_model_path)

model.resize_token_embeddings(len(tokenizer))

seed_val = 42

random.seed(seed_val)

np.random.seed(seed_val)

torch.manual_seed(seed_val)

torch.cuda.manual_seed_all(seed_val)

from transformers import Trainer,TrainingArguments

import os

print("strat train")

training_args = TrainingArguments(output_dir="./test_trainer",

num_train_epochs=1.0,

report_to="none",

do_train=True,

dataloader_num_workers=dataloader_num,

local_rank=int(os.environ.get('LOCAL_RANK', -1)),

overwrite_output_dir=True,

logging_strategy='steps',

logging_first_step=True,

logging_dir="./logs",

log_on_each_node=False,

per_device_train_batch_size=batch_size_node,

warmup_ratio=0.03,

save_steps=save_step,

save_total_limit=5,

gradient_accumulation_steps=gradient_accumulation,

max_steps=max_step,

disable_tqdm=False,

data_seed=42

)

trainer = Trainer(

model=model,

args=training_args,

train_dataset=new_new_dataset,

eval_dataset=None,

tokenizer=tokenizer,

# Data collator will default to DataCollatorWithPadding, so we change it.

data_collator=DataCollatorForLanguageModeling(tokenizer,mlm=False),

#compute_metrics=compute_metrics if training_args.do_eval and not is_torch_tpu_available() else None,

#preprocess_logits_for_metrics=preprocess_logits_for_metrics

#if training_args.do_eval and not is_torch_tpu_available()

#else None,

)

trainer.train(resume_from_checkpoint=True)

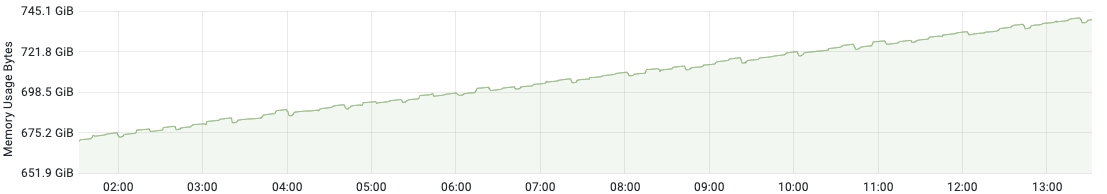

### Expected behavior

Use the training code above.

My dataset ./gpt_data_v1 has 1000 files; each file is 120 MB.

The start command is: python -m torch.distributed.launch --nproc_per_node=8 my_train.py

Here is the result:

Here is the memory usage monitor over 12 hours.

Every dataloader worker allocates over 24 GB of CPU memory.

According to the memory usage monitor over 12 hours, small amounts of memory are sometimes released, but total memory usage keeps increasing.

I think datasets streaming mode should not use so much memory, so there may be a memory leak somewhere.

| 03-06-2023 05:22:53 | 03-06-2023 05:22:53 | cc @lhoestq <|||||>Hi ! The axis 0 in your plot is time. Do you know how many training steps it corresponds to ?

FYI the `text` loading in `datasets` samples text files line by line (with a buffer of >10MB to avoid small IO calls).

Moreover .shuffle() uses a shuffle buffer of 1,000 examples, and batched `map` uses batches of 1,000 examples as well.

Therefore unless there is a major leak somewhere, `datasets` doesn't use much RAM in streaming mode.
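
The point about bounded memory can be illustrated with a generic buffer-based shuffle over a stream. This is a textbook-style sketch, not the `datasets` implementation; `buffered_shuffle` and its parameters are hypothetical names. At any time it holds at most `buffer_size` items, no matter how long the stream is.

```python
import random


def buffered_shuffle(iterable, buffer_size, seed=None):
    """Approximately shuffle a stream while keeping at most `buffer_size` items in memory."""
    rng = random.Random(seed)
    buffer = []
    for item in iterable:
        if len(buffer) < buffer_size:
            buffer.append(item)          # fill the buffer first
            continue
        idx = rng.randrange(buffer_size)
        yield buffer[idx]                # emit a random buffered item...
        buffer[idx] = item               # ...and replace it with the new one
    rng.shuffle(buffer)                  # flush what is left at the end
    yield from buffer


shuffled = list(buffered_shuffle(range(10), buffer_size=3, seed=42))
print(sorted(shuffled) == list(range(10)))  # True
```

With a buffer of 1,000 examples, as mentioned above, memory use from shuffling would stay roughly constant over the run under this scheme.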

Feel free to try profiling memory usage and check where the biggest source of memory comes from in the code, that would be super helpful to diagnose the potential memory leak and fix it.<|||||>> Hi ! The axis 0 in your plot is time. Do you know how many training steps it corresponds to ?

>

> FYI the `text` loading in `datasets` samples text files line by line (with a buffer of >10MB to avoid small IO calls). Moreover .shuffle() uses a shuffle buffer of 1,000 examples, and batched `map` uses batches of 1,000 examples as well.

>

> Therefore unless there is a major leak somewhere, `datasets` doesn't use much RAM in streaming mode.

>

> Feel free to try profiling memory usage and check where the biggest source of memory comes from in the code, that would be super helpful to diagnose the potential memory leak and fix it.

It is roughly between 500000 and 650000 steps. <|||||>> Hi ! The axis 0 in your plot is time. Do you know how many training steps it corresponds to ?

>

> FYI the `text` loading in `datasets` samples text files line by line (with a buffer of >10MB to avoid small IO calls). Moreover .shuffle() uses a shuffle buffer of 1,000 examples, and batched `map` uses batches of 1,000 examples as well.

>

> Therefore unless there is a major leak somewhere, `datasets` doesn't use much RAM in streaming mode.

>

> Feel free to try profiling memory usage and check where the biggest source of memory comes from in the code, that would be super helpful to diagnose the potential memory leak and fix it.

I tried using memory_profiler to profile memory, but it can only report the memory of the main thread. Do you know which tool can report the memory of the dataloader workers?<|||||>I haven't tried memory_profiler with multiprocessing, but you can already try iterating on the DataLoader without multiprocessing and check if you observe a memory leak.<|||||>> I haven't tried memory_profiler with multiprocessing, but you can already try iterating on the DataLoader without multiprocessing and check if you observe a memory leak.

With dataloader_num=0 there is no memory leak.<|||||>This could be an issue with the torch `DataLoader` then, or python multiprocessing.

On the `datasets` side this is the whole code that `yield` example if `num_worker > 0`:

https://github.com/huggingface/datasets/blob/c5ca1d86949ec3a5fdaec03b80500fb822bcfab4/src/datasets/iterable_dataset.py#L843

which is almost identical to the code without multiprocessing:

https://github.com/huggingface/datasets/blob/c5ca1d86949ec3a5fdaec03b80500fb822bcfab4/src/datasets/iterable_dataset.py#L937-L945

Could you check on another environment that you also observe the memory leak ?<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 21,961 | closed | Support customized vocabulary for decoding (in model.generate) | ### Feature request

Use case:

We are given a small list of tokens that is a subset of the whole vocabulary of the T5 tokenizer, for example ["put", "move", "pick", "up", "on", "in", "apple", "bag", ....]

When we decode using `model.generate()`, we want the model to output only sentences that consist of words in the above list (i.e., a limited vocabulary for beam search or sampling).

Maybe it is already supported in some way?

### Motivation

For some applications, we only want to decode sentences with a limited vocabulary instead of allowing open-ended generation.

### Your contribution

I'm not sure what the best way to add this feature is. If it is easy to limit the vocab for generate functions, I can help with a PR. | 03-06-2023 01:56:33 | 03-06-2023 01:56:33 | I have read this post: https://huggingface.co/blog/constrained-beam-search

But it seems that such constraints can only ensure that some tokens are part of the sentences; they cannot prevent other tokens from being selected during decoding. <|||||>Found this post, which uses `bad_word_list` set to the whole vocab minus the customized vocab:

https://stackoverflow.com/questions/63920887/whitelist-tokens-for-text-generation-xlnet-gpt-2-in-huggingface-transformers

Will have a try but sounds like a bit awkward to use. <|||||>cc @gante <|||||>Hey @yuchenlin 👋

My first approach would be to use `bad_word_list`, passing to it all but the tokens you want to use. It's a no-code approach, but perhaps not the most efficient computationally.

Alternatively, you can write your own processor class that sets to `-inf` the logits of all but the tokens you want to consider. To do it, you would have to:

1. Write your own class that implements the logic. You can see plenty of examples in [this file](https://github.com/huggingface/transformers/blob/main/src/transformers/generation/logits_process.py)

2. Use your class at generation time, e.g.

```py

tokens_to_keep = tokenizer(xxx) # xxx = list with your valid words

my_processor = MyLogitsProcessorClass(tokens_to_keep=tokens_to_keep)

model.generate(inputs, ..., logits_processor=LogitsProcessorList([my_processor]))

```
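As a rough illustration of step 1, the masking logic itself can be sketched in plain Python. This is only a sketch under assumptions: `mask_to_allowed` is a hypothetical helper that works on a plain list of floats, whereas a real processor class would apply the same idea to the `scores` tensor inside its `__call__(input_ids, scores)` method.

```python
import math


def mask_to_allowed(scores, allowed_ids):
    """Return a copy of `scores` with every token id outside `allowed_ids` set to -inf.

    `scores` is one row of next-token logits, indexed by token id.
    (Hypothetical helper for illustration, not part of transformers.)
    """
    allowed = set(allowed_ids)
    return [s if i in allowed else -math.inf for i, s in enumerate(scores)]


# Toy vocabulary of 5 tokens; only ids 1 and 3 may be generated.
logits = [0.5, 1.2, 3.0, -0.7, 2.1]
masked = mask_to_allowed(logits, allowed_ids=[1, 3])

# Greedy choice after masking: the best token among the allowed ones.
best = max(range(len(masked)), key=masked.__getitem__)
print(masked, best)
```

Setting the disallowed logits to `-inf` gives those tokens zero probability after the softmax, so both sampling and beam search stay inside the allowed set.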

I hope this short guide helps 🤗 <|||||>Hi @gante ,

Thanks a lot! Yeah, I have tried `bad_words_ids` (see example below) and found that the generated outputs are much worse than before, although they are indeed constrained to the given vocabulary. I was using beam search, and I'm not sure whether this happens because the vocab is so small that the normalization or some other step becomes unstable.

I will try the logit processor idea as well. Thank you! :D

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("google/flan-t5-small")
model = AutoModelForSeq2SeqLM.from_pretrained("google/flan-t5-small")

whitelist = ["move", "it", "pick", "up", "focus", "on"]
whitelist_ids = [tokenizer.encode(word)[0] for word in whitelist]
bad_words_ids=[[id] for id in range(tokenizer.vocab_size) if id not in whitelist_ids]

encoder_input_str = "Explain this concept to me: machine learning"
input_ids = tokenizer(encoder_input_str, return_tensors="pt").input_ids

outputs = model.generate(
    input_ids,
    num_beams=10,
    do_sample=False,
    num_return_sequences=1,
    no_repeat_ngram_size=1,
    remove_invalid_values=True,
    bad_words_ids = bad_words_ids,
)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

```<|||||>@yuchenlin haha yes, the quality of the output will likely decrease significantly, that is to be expected!

Instead of whitelisting words, consider the "soft-whitelisting" alternative: increase the odds of picking a token from the whitelist. You can easily implement this by changing the repetition penalty logits processor to boost the odds of certain tokens :)<|||||>Thanks a lot for the advice! I currently used a simpler method --- adding some random tokens (say 30% of the whole vocab) to the whitelist and it seems to help.

Will also try your idea soon! Thanks again! :D <|||||>Just in case you are interested in more diversity of these constraints, I wrote a whole package and paper about this idea: https://github.com/Hellisotherpeople/Constrained-Text-Generation-Studio<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 21,960 | closed | Add missing parameter definition in layoutlm config | Four parameters in the `LayoutLM` config were missing definitions. Added their definitions (copied from BertConfig).

# What does this PR do?

Fix docs, add parameter definition copying them from BertConfig

## Before submitting

- [X] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- text models: @ArthurZucker and @younesbelkada

- vision models: @amyeroberts

- speech models: @sanchit-gandhi

- graph models: @clefourrier

Library:

- flax: @sanchit-gandhi

- generate: @gante

- pipelines: @Narsil

- tensorflow: @gante and @Rocketknight1

- tokenizers: @ArthurZucker

- trainer: @sgugger

Integrations:

- deepspeed: HF Trainer: @stas00, Accelerate: @pacman100

- ray/raytune: @richardliaw, @amogkam

Documentation: @sgugger, @stevhliu and @MKhalusova

HF projects:

- accelerate: [different repo](https://github.com/huggingface/accelerate)

- datasets: [different repo](https://github.com/huggingface/datasets)

- diffusers: [different repo](https://github.com/huggingface/diffusers)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Maintained examples (not research project or legacy):

- Flax: @sanchit-gandhi

- PyTorch: @sgugger

- TensorFlow: @Rocketknight1

-->

| 03-06-2023 00:42:28 | 03-06-2023 00:42:28 | _The documentation is not available anymore as the PR was closed or merged._<|||||>cc @amyeroberts |

transformers | 21,959 | closed | Fix MinNewTokensLengthLogitsProcessor when used with a list of eos tokens | # What does this PR do?

MinNewTokensLengthLogitsProcessor is missing support for a list of eos token ids.

This PR adds the missing support, in the same way it was added in MinLengthLogitsProcessor.

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

@gante

| 03-05-2023 17:10:47 | 03-05-2023 17:10:47 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 21,958 | closed | Cannot get the model weight of T5 INT8 model with Transformers 4.26.1 | ### System Info

- `transformers` version: 4.26.1

- Platform: Linux-3.10.0-862.el7.x86_64-x86_64-with-glibc2.17

- Python version: 3.8.13

- Huggingface_hub version: 0.12.1

- PyTorch version (GPU?): 1.13.0+cpu

- Tensorflow version (GPU?): not installed (No)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: <No>

### Who can help?

@ArthurZucker @younesbelkada @sgu

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

The code is shown as follows:

```python

import torch
import transformers
from datasets import load_dataset

model_name = 't5-small'

model_fp32 = transformers.AutoModelForSeq2SeqLM.from_pretrained(
    model_name,
)

model_int8 = torch.ao.quantization.quantize_dynamic(
    model_fp32,
    {torch.nn.Linear},
    dtype=torch.qint8)

def get_example_inputs(model_name, dataset_name='sst2'):
    tokenizer = transformers.AutoTokenizer.from_pretrained(model_name)
    dataset = load_dataset(dataset_name, split='validation')
    text = dataset[0]['text'] if dataset_name=='lambada' else dataset[0]['sentence']
    input = tokenizer(text, padding='max_length', max_length=195, return_tensors='pt')
    example_inputs = input['input_ids'][0].to('cpu').unsqueeze(0)
    return example_inputs

example_inputs = get_example_inputs(model_name, dataset_name='lambada')

output = model_int8.generate(example_inputs)

print(output)

```

The error message is shown as follows,

[Issue Report.txt](https://github.com/huggingface/transformers/files/10905558/Issue.Report.txt)

### Expected behavior

The expected behavior of this example is to quantize the FP32 T5 model into INT8 format and then use the INT8 model to generate the output.

This code ran successfully with the previous version, Transformers 4.26.0. After the recent updates, it no longer runs.

We found that the error results from the fix for another issue: https://github.com/huggingface/transformers/issues/20287. This is probably because the fix reads the weight from `self.wo` as an attribute, but in the INT8 module that weight becomes a method, so it has to be accessed as `self.wo.weight()`. Please reconsider the previous fix for that issue to make it compatible. | 03-05-2023 08:14:46 | 03-05-2023 08:14:46 | Cool that this seems to have been fixed for you! Could you tell us what the solution was? (for future reference and other users who might stumble upon the same problem)<|||||>@ArthurZucker Hi Arthur, we have not fixed this issue yet. Could you please take a look? We just modified the previous description to make the problem clearer. <|||||>Hey! Is there a reason why you are not using `load_in_8bit = True`? If you install the `bitsandbytes` library, getting the 8-bit quantized version of the model is as easy as the following:

```python

import transformers

from datasets import load_dataset

model_name = 't5-small'

model = transformers.AutoModelForSeq2SeqLM.from_pretrained(model_name,load_in_8bit = True, device_map="auto")

```<|||||>Hello @XuhuiRen ,

Your script worked fine on the `main` branch of `transformers`

```python

import torch
import transformers
from datasets import load_dataset

model_name = 't5-small'

model_fp32 = transformers.AutoModelForSeq2SeqLM.from_pretrained(
    model_name,
)

model_int8 = torch.ao.quantization.quantize_dynamic(
    model_fp32,
    {torch.nn.Linear},
    dtype=torch.qint8
)

output = model_int8.generate(torch.LongTensor([[0, 1, 2, 3]]))

print(output)

```

Can you try it with:

```

pip install git+https://github.com/huggingface/transformers.git

```

For more context, the PR https://github.com/huggingface/transformers/pull/21843 solved your issue.<|||||>Hi @ArthurZucker and @younesbelkada, thanks a lot for your replies. Your solution works for my issue. |

transformers | 21,957 | closed | Update expected values in `XLMProphetNetModelIntegrationTest` | # What does this PR do?

After #21870, we also need to update some expected values in `XLMProphetNetModelIntegrationTest` (as has been done for `ProphetNetModelIntegrationTest` in that PR) | 03-05-2023 05:23:43 | 03-05-2023 05:23:43 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 21,956 | closed | [Generate] Fix gradient_checkpointing and use_cache bug for BLOOM | Fixes #21737 for Bloom.

## Before submitting

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a GitHub issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

## Who can review?

cc @younesbelkada @gante | 03-05-2023 02:41:51 | 03-05-2023 02:41:51 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 21,955 | closed | LLaMA Implementation | # What does this PR do?

Implementation of LLaMA models (https://arxiv.org/abs/2302.13971). Model weights can be requested [here](https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform). Weight conversion script is included.

Weights conversion can be run via:

```bash

python src/transformers/models/llama/convert_llama_weights_to_hf.py \
    --input_dir /path/to/downloaded/llama/weights \
    --model_size 7B \
    --output_dir /output/path

```

Models can then be loaded via:

```python

tokenizer = transformers.LLaMATokenizer.from_pretrained("/output/path/tokenizer/")

model = transformers.LLaMAForCausalLM.from_pretrained("/output/path/llama-7b/")

```

Example:

```python
batch = tokenizer(
    "The primary use of LLaMA is research on large language models, including",
    return_tensors="pt",
    add_special_tokens=False
)

batch = {k: v.cuda() for k, v in batch.items()}

generated = model.generate(batch["input_ids"], max_length=100)

print(tokenizer.decode(generated[0]))

```


Fixes https://github.com/huggingface/transformers/issues/21796

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

@ArthurZucker @younesbelkada


| 03-05-2023 00:05:39 | 03-05-2023 00:05:39 | does this work with int8?<|||||>> does this work with int8?

No idea! I haven't messed with int8 too much myself. It ought to be compatible with whatever is already supported in the HF models.<|||||>nice work! thanks for the upload and I hope it gets pulled<|||||>_The documentation is not available anymore as the PR was closed or merged._<|||||>It looks like the tests which are currently failing are unrelated to the LLaMA code, so this should be good to review/use.

If folks can try it out (particularly with the larger, sharded models) and see if there are any issues, that will be helpful!<|||||>> It looks like the tests which are currently failing are unrelated to the LLaMA code, so this should be good to review/use.

>

> If folks can try it out (particularly with the larger, sharded models) and see if there are any issues, that will be helpful!

At least the convert script seems to work fine. I was able to convert 7B through 30B. I do not have enough RAM to convert 65B.<|||||>Great work. Thanks for putting this together<|||||>After replacing the transformers version used by Kobold with this PR, I am able to load the shards as expected. I just can't generate anything yet because Kobold still needs some changes.

<|||||>> > does this work with int8?

>

> No idea! I haven't messed with int8 too much myself. It ought to be compatible with whatever is already supported in the HF models.

Int8 does not seem to work, but float16 is fine, in my hastily put-together test at https://github.com/zsc/llama_infer . Please leave a comment if you find something!<|||||>@zphang I'm not able to get something like `tokenizer = AutoTokenizer.from_pretrained("/data/llama/hf/7b/tokenizer/")` to work. Is this intentional or just leaving AutoTokenizer for future work?

What issue are you having / what is the error?<|||||>I have tested the code and these are my findings:

1. The conversion script works.

2. Loading the model works.

3. Loading the tokenizer with `transformers.LLaMATokenizer.from_pretrained` works.

4. Loading the tokenizer with `AutoTokenizer.from_pretrained` does not work and generates this error:

```

OSError: /tmp/converted/tokenizer/ does not appear to have a file named config.json. Checkout

'https://huggingface.co//tmp/converted/tokenizer//None' for available files.

```

5. The generated text seems to be incoherent. If I try these default values for the generation parameters:

```

model.generate(input_ids, eos_token_id=2, do_sample=True, temperature=1, top_p=1, typical_p=1, repetition_penalty=1, top_k=50, min_length=0, no_repeat_ngram_size=0, num_beams=1, penalty_alpha=0, length_penalty=1, early_stopping=False, max_new_tokens=200).cuda()

```

with this prompt:

```

Common sense questions and answers

Question: What color is the sky?

Factual answer:

```

I get

```

Common sense questions and answers

Question: What color is the sky?

Factual answer: Tags: python, django, django-models

Question: Using Django with multiple databases

I am attempting to use django with multiple databases, and I have the following code:

\begin{code}

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.sqlite3',

'NAME': ':memory:',

},

'db_one': {

'ENGINE': 'django.db.backends.sqlite3',

'NAME': 'db_one',

},

'db_two': {

'ENGINE': 'django.db.backends.sqlite3',

'NAME': 'db_two',

},

}

```

It seems to me that prompts are being completely ignored.

6. Loading in 8-bit mode with `load_in_8bit=True` works.<|||||>This is OK: `tokenizer = transformers.LLaMATokenizer.from_pretrained("/data/llama/hf/7b/tokenizer/")`

If using `tokenizer = AutoTokenizer.from_pretrained("/data/llama/hf/7b/tokenizer/")`, it complains that there is no "config.json".

```

OSError: /data/llama/hf/7b/tokenizer/ does not appear to have a file named config.json. Checkout

'https://huggingface.co//data/llama/hf/7b/tokenizer//None' for available files.

```

I then hacked around it by symlinking `/data/llama/hf/7b/tokenizer/special_tokens_map.json` to `/data/llama/hf/7b/tokenizer/config.json` and it works. So maybe just rename?

Anyway, can now happily play with LLaMA in Hugging Face world and thanks for the great work!<|||||>Thanks for the comments. Looks like the saved tokenizer doesn't work for `AutoTokenizer` but works if you directly instantiate from `LLaMATokenizer`. Maybe one of the HF folks can chime in on the best way to address that.

> The generated text seems to be incoherent. If I try these default values for the generation parameters:

Can you check the input_ids you're using to generate? The tokenizer currently adds both BOS and EOS tokens by default, and an EOS might cause the model to ignore your prompt.

Perhaps I can set EOS to not be added by default so it operates closer to expected behavior.<|||||>For this prompt:

```

'Common sense questions and answers\n\nQuestion: What color is the sky?\nFactual answer:'

```

these are the input_ids:

```

tensor([[ 1, 13103, 4060, 5155, 322, 6089, 13, 13, 16492, 29901,

1724, 2927, 338, 278, 14744, 29973, 13, 29943, 19304, 1234,

29901, 2]], device='cuda:0')

```

I do not know how to interpret these numbers, but if there is an EOS token in that tensor and that token is causing the text generation to derail, changing that default would be valuable.<|||||>1 is BOS and 2 is EOS. Can you try without the last input id?
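A minimal sketch of what "without the last input id" means, using plain Python lists in place of the tensor printed above. `strip_trailing_eos` is a hypothetical helper written for illustration; the default `eos_id=2` follows the ids quoted in this thread.

```python
def strip_trailing_eos(batch_ids, eos_id=2):
    """Drop the final id of each sequence when it equals `eos_id`.

    Hypothetical helper: sequences that do not end in EOS are returned unchanged.
    """
    return [seq[:-1] if seq and seq[-1] == eos_id else list(seq) for seq in batch_ids]


# The ids printed above: 1 (BOS) comes first, 2 (EOS) comes last.
input_ids = [[1, 13103, 4060, 5155, 322, 6089, 13, 13, 16492, 29901,
              1724, 2927, 338, 278, 14744, 29973, 13, 29943, 19304, 1234,
              29901, 2]]

trimmed = strip_trailing_eos(input_ids)
print(trimmed[0][0], trimmed[0][-1], len(trimmed[0]))
```

With the trailing EOS removed, the prompt no longer ends with an end-of-sequence marker, so the model continues the prompt instead of starting unrelated text.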

I also added an example in my PR message.<|||||>I confirm that doing this

```

input_ids = input_ids[:, :-1]

```

to remove the last input id before calling `model.generate(...)` causes the text generation to become coherent:

```

Common sense questions and answers

Question: What color is the sky?

Factual answer: The sky is blue. The sky is blue, and it is a fact that it is blue. The sky is indisputably blue.

```<|||||>Added a commit that should fix the tokenizer issues, and not add BOS and EOS by default.<|||||>Awesome, I confirm that the text generation is coherent by default now.

I still cannot load the tokenizer with `AutoTokenizer.from_pretrained`. The error has now changed to this:

```

File "/tmp/transformers/src/transformers/models/auto/tokenization_auto.py", line 694, in from_pretrained

tokenizer_class_py, tokenizer_class_fast = TOKENIZER_MAPPING[type(config)]

File "/tmp/transformers/src/transformers/models/auto/auto_factory.py", line 610, in __getitem__

raise KeyError(key)

KeyError: <class 'transformers.models.llama.configuration_llama.LLaMAConfig'>

```<|||||>> > does this work with int8?

>

> No idea! I haven't messed with int8 too much myself. It ought to be compatible with whatever is already supported in the HF models.

After the fix with EOS, int8 (bitsandbytes) looks decent. Example in https://github.com/zsc/llama_infer/blob/main/README.md<|||||>After https://github.com/huggingface/transformers/pull/21955/commits/459e2ac9f551650ced58deb1c65f06c3d483d606, `AutoTokenizer.from_pretrained` now works as expected.

<|||||>KoboldAI now works<|||||>I'd like to see a more memory-efficient conversion script, the current version loads everything into system memory which makes converting the 30B and 65B variants challenging on some systems<|||||>Yes, this is a quick and dirty version that loads everything into memory.

One issue is that the way the weights are sharded (for tensor parallelism) is orthogonal to the way that HF shards the weights (by layer). So either we have to load everything in at once, or we have to load/write multiple times. The latter would be slower but useful for folks with less memory.<|||||>Has anyone tested loading 65B with `accelerate` to load on multiple GPUs?<|||||>I can't load the 7B model to cuda with one A4000

should I just change the gpu?

<|||||>I'm observing some strange behavior with the tokenizer when encoding sequences beginning with a newline:

```

>>> t = AutoTokenizer.from_pretrained("llama_hf/tokenizer")

>>> res = t.encode("\nYou:")

>>> res

[29871, 13, 3492, 29901]

>>> t.decode(res)

'You:'

```

The newline seems to get lost somewhere along the way.

EDIT: Looking into this, it seems it might be the expected behavior of `sentencepiece`.<|||||>> Has anyone tested loading 65B with `accelerate` to load on multiple GPUs?

||fp16|int8(bitsandbytes)|
|--|--|--|
|V100|OK, 5xV100|Bad results, short generated sequences|
|A100|OK, 6xA100 when using "auto"|OK, 3xA100|

Yes, I currently have a 65B fp16 model running on 6xV100 (5x should be enough). My working code is at https://github.com/zsc/llama_infer/ . If you hit CUDA OOM due to a bad distribution of weights among cards, one thing worth trying is tweaking the device_map (`accelerate` seems to only count weights when enforcing the memory cap in device_map, so there is an art to setting a custom cap a little lower for every card, especially card 0).
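As a sketch of the per-card cap idea, the snippet below builds a `max_memory`-style dictionary with a lower cap for card 0. `build_max_memory` is a hypothetical helper, and the GiB values are arbitrary placeholders rather than tuned numbers.

```python
def build_max_memory(num_gpus, cap_gib, first_cap_gib):
    """Build per-GPU memory caps, with a lower cap for card 0.

    Hypothetical helper; returns a dict such as {0: "20GiB", 1: "28GiB", ...}.
    """
    caps = {i: f"{cap_gib}GiB" for i in range(num_gpus)}
    if num_gpus > 0:
        caps[0] = f"{first_cap_gib}GiB"
    return caps


# Placeholder numbers: leave extra headroom on card 0 for activations.
max_memory = build_max_memory(num_gpus=6, cap_gib=28, first_cap_gib=20)
print(max_memory)

# Assumed usage (not executed here): pass the dict next to device_map="auto", e.g.
# model = LLaMAForCausalLM.from_pretrained(path, device_map="auto", max_memory=max_memory)
```

Lowering the caps leaves room for activations and the generation cache, which the weight-only accounting described above does not cover.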

Strangely, int8 (LLM.int8 to be specific) for 65B model works like a charm on A100, but leads to bad results on V100 with abnormally short generated sequences.<|||||>> Strangely, int8 (LLM.int8 to be specific) for 65B model works like a charm on A100, but leads to bad results on V100 with abnormally short generated sequences.

I will have a look at this later next week. The V100 takes a different code path than the A100 because the V100 does not support Int8 tensor cores. I think that is the issue here. We will soon publish FP4 inference which should be more universal and easier to use.<|||||>Jumping on @thomasw21 comment, we sadly cannot accept any code licensed GPLv3 as it would taint the whole Transformers library under that license. This means that the modeling code should be copied from GPT-NeoX whenever possible (with Copied from statements) since I believe that this model is very close to it and that you should be super familiar with it @zphang ;-) , and that no parts of the modeling code should be copy-pasted from the original Llama code.

We also cannot attribute Copyright to Meta-AI /Meta in all those files, as attributing that copyright would admit the code in the PR is based on theirs and thus get us back to the license problem.<|||||>I tried quantizing LLaMa using [GPTQ](https://arxiv.org/abs/2210.17323).

I've confirmed that 4 or 3 bit quantization works well, at least on LLaMa 7b.

| Model([LLaMa-7B](https://arxiv.org/abs/2302.13971)) | Bits | group-size | Wikitext2 | PTB | C4 |
| --------- | ---- | ---------- | --------- | --------- | ------- |
| FP16 | 16 | - | 5.67 | 8.79 | 7.05 |
| RTN | 4 | - | 6.28 | 9.68 | 7.70 |
| [GPTQ](https://arxiv.org/abs/2210.17323) | 4 | 64 | **6.16** | **9.66** | **7.52** |
| RTN | 3 | - | 25.66 | 61.25 | 28.19 |
| [GPTQ](https://arxiv.org/abs/2210.17323) | 3 | 64 | **12.24** | **16.77** | **9.55** |

I have released the [code](https://github.com/qwopqwop200/GPTQ-for-LLaMa) for this.

<|||||>Running into an issue with the following code

```

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("models/facebook_LLaMA-7b")

output = [[v] for k, v in tokenizer.get_vocab().items() if any(c in str(k) for c in "<>[]")]

debug_output = tokenizer.batch_decode(output)

print(debug_output)

```

This is supposed to print only tokens that include one of the <>[] characters, but I am getting a wide range of tokens back at the beginning, which causes our code to affect way too many tokens. This includes tokens that are blatantly wrong, such as every single letter of the alphabet.

Is this to be expected with the kind of tokenizer that is used and do we have to find an alternative implementation or is something going wrong here on a deeper level?

If I try the same thing with a tokenizer from the usual models we run such as the GPT-J tokenizer this code runs properly.<|||||>> I tried quantizing LLaMa using [GPTQ](https://arxiv.org/abs/2210.17323). I've confirmed that 4 or 3 bit quantization works well, at least on LLaMa 7b.

>

> | Model([LLaMa-7B](https://arxiv.org/abs/2302.13971)) | Bits | group-size | Wikitext2 | PTB | C4 |
> | --------- | ---- | ---------- | --------- | --------- | ------- |
> | FP16 | 16 | - | 5.67 | 8.79 | 7.05 |
> | RTN | 4 | - | 6.28 | 9.68 | 7.70 |
> | [GPTQ](https://arxiv.org/abs/2210.17323) | 4 | 64 | **6.16** | **9.66** | **7.52** |
> | RTN | 3 | - | 25.66 | 61.25 | 28.19 |
> | [GPTQ](https://arxiv.org/abs/2210.17323) | 3 | 64 | **12.24** | **16.77** | **9.55** |
>
> I have released the [code](https://github.com/qwopqwop200/GPTQ-for-LLaMa) for this.

Do you plan to publish 4-bit quantized models on the hub? If not, I'd be more than happy to generate them and push them up.<|||||>> > I tried quantizing LLaMa using [GPTQ](https://arxiv.org/abs/2210.17323). I've confirmed that 4 or 3 bit quantization works well, at least on LLaMa 7b.
> >
> > | Model([LLaMa-7B](https://arxiv.org/abs/2302.13971)) | Bits | group-size | Wikitext2 | PTB | C4 |
> > | --------- | ---- | ---------- | --------- | --------- | ------- |
> > | FP16 | 16 | - | 5.67 | 8.79 | 7.05 |
> > | RTN | 4 | - | 6.28 | 9.68 | 7.70 |
> > | [GPTQ](https://arxiv.org/abs/2210.17323) | 4 | 64 | **6.16** | **9.66** | **7.52** |
> > | RTN | 3 | - | 25.66 | 61.25 | 28.19 |
> > | [GPTQ](https://arxiv.org/abs/2210.17323) | 3 | 64 | **12.24** | **16.77** | **9.55** |
> >
> > I have released the [code](https://github.com/qwopqwop200/GPTQ-for-LLaMa) for this.
>
> Do you plan to publish 4-bit quantized models on the hub? If not, I'd be more than happy to generate them and push them up.

There are no plans to publish a 4-bit quantization model yet.

And to get advantages in speed and memory, you need to change and use dedicated 3-bit CUDA Kernels.

I haven't even tested 3-bit CUDA Kernels yet.<|||||>Maybe this issue needs to be fixed?

[llama-int8](https://github.com/tloen/llama-int8) works,

but this

```

from transformers import LLaMaForCausalLM,LLaMaPreTrainedModel

model = LLaMaForCausalLM.from_pretrained("Z:/LLaMA/llama-7b-hf")

```

errors:

File [G:\pythons\huggingface\transformers\src\transformers\modeling_utils.py:2632](file:///G:/pythons/huggingface/transformers/src/transformers/modeling_utils.py:2632), in PreTrainedModel.from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

2622 if dtype_orig is not None:

2623 torch.set_default_dtype(dtype_orig)

2625 (

2626 model,

2627 missing_keys,

2628 unexpected_keys,

2629 mismatched_keys,

2630 offload_index,

2631 error_msgs,

-> 2632 ) = cls._load_pretrained_model(

2633 model,

2634 state_dict,

2635 loaded_state_dict_keys, # XXX: rename?

2636 resolved_archive_file,

2637 pretrained_model_name_or_path,

2638 ignore_mismatched_sizes=ignore_mismatched_sizes,

2639 sharded_metadata=sharded_metadata,

...

1268 return modules[name]

-> 1269 raise AttributeError("'{}' object has no attribute '{}'".format(

1270 type(self).__name__, name))

AttributeError: 'LLaMaLayerNorm' object has no attribute 'bias'

<|||||>#

> Maybe this issure need to be fixed?

>

> the [llama-int8](https://github.com/tloen/llama-int8) can works.

>

> but this

>

> ```

> from transformers import LLaMaForCausalLM,LLaMaPreTrainedModel

> model = LLaMaForCausalLM.from_pretrained("Z:/LLaMA/llama-7b-hf")

> ```

>

> errors:

>

> File G:\pythons\huggingface\transformers\src\transformers\modeling_utils.py:2632, in PreTrainedModel.from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs) 2622 if dtype_orig is not None: 2623 torch.set_default_dtype(dtype_orig) 2625 ( 2626 model, 2627 missing_keys, 2628 unexpected_keys, 2629 mismatched_keys, 2630 offload_index, 2631 error_msgs, -> 2632 ) = cls._load_pretrained_model( 2633 model, 2634 state_dict, 2635 loaded_state_dict_keys, # XXX: rename? 2636 resolved_archive_file, 2637 pretrained_model_name_or_path, 2638 ignore_mismatched_sizes=ignore_mismatched_sizes, 2639 sharded_metadata=sharded_metadata, ... 1268 return modules[name] -> 1269 raise AttributeError("'{}' object has no attribute '{}'".format( 1270 type(self).__name__, name))

>

> AttributeError: 'LLaMaLayerNorm' object has no attribute 'bias'

I'm not sure how that code even runs, the case doesn't match. You're importing `LLaMaForCausalLM` and the code provides `LLaMAForCausalLM`

Also, where did you pull the model from? Is that the copy from decapoda-research on the hub?<|||||>> Jumping on @thomasw21 comment, we sadly cannot accept any code licensed GPLv3 as it would taint the whole Transformers library under that license. This means that the modeling code should be copied from GPT-NeoX whenever possible (with Copied from statements) since I believe that this model is very close to it and that you should be super familiar with it @zphang ;-) , and that no parts of the modeling code should be copy-pasted from the original Llama code.

>

> We also cannot attribute Copyright to Meta-AI /Meta in all those files, as attributing that copyright would admit the code in the PR is based on theirs and thus get us back to the license problem.

Note that under Apache 2.0 the correct thing to do is credit _EleutherAI_ with the copyright. I have gone ahead and added the proper copyright headers, including a notice informing the reader that the code has been modified from its original version and where it was adapted from.

**Note that I have not changed the code itself in any way.** If it is currently the case that some of the code is based on the FairSeq implementation that still needs to be removed and rewritten using GPT-NeoX (whether the implementation in this library or the GPT-NeoX library itself) as a reference. However once all GPLv3 licensed code has been removed, the headers I added should be correct.

cc: @zphang @sgugger @thomasw21 for visibility.

It might be worth mentioning this in the documentation of the model, as I anticipate this will cause some people confusion.

> Also, where did you pull the model from? Is that the copy from decapoda-research on the hub?

@zoidbb this PR includes a script for converting the models from their original format to a HF-compliant one.<|||||>@henk717 your code doesn't work for me with any model. I consistently error on the line `debug_output = tokenizer.batch_decode(output)`<|||||>Thanks for your contribution! However, I find that the model usually ends up repeating the same sentence during inference. For example, by running the code below:

```

import transformers

import os

os.environ['CUDA_VISIBLE_DEVICES']='3'

config = transformers.AutoConfig.from_pretrained("./llama-7b-hf/llama-7b")

tokenizer = transformers.AutoTokenizer.from_pretrained("./llama-7b-hf/tokenizer")

model = transformers.AutoModelForCausalLM.from_pretrained("./llama-7b-hf/llama-7b", config=config).cuda()

batch = tokenizer(

["The primary use of LLaMA is research on large language models, including "],

return_tensors="pt"

)

batch = {k: v.cuda() for k, v in batch.items()}

input_ids = batch["input_ids"] # tensor([[1,...]])

generated = model.generate(input_ids, temperature=0.8, top_p=0.95, max_length=256).cuda()

print(tokenizer.decode(generated[0]))

```

**the LLaMA-7b-hf output is:**

**while the original LLaMA(7b) output is (temperature=0.8, top_p=0.95):**

It now seems that the two models behave quite differently from each other. Can they produce the same output given the same input and parameters?<|||||>After installing your llama branch, I can't import LLaMaTokenizer, as shown below:

ImportError: cannot import name 'LLaMaTokenizer' from 'transformers'

but importing LLaMaForCausalLM succeeds...

How could this happen?<|||||>Hey all! Great to see that the community is so fired up for this PR 🤗 🔥 ! @zphang thanks a lot for this contribution, don't hesitate to ping me once this is ready for review. In the meantime, let's all give him some time, as it was never stated that this should already work or compile!

Let's wait until then to report potential issues ! 😉 <|||||>@StellaAthena Fully in line with your change of copyright and also to explicitly mention in the doc page of the model that the code is based on GPT-NeoX and not the original Llama code. This can be put in the usual place we say: "This model was contributed by xxx. The original code can be found [here](yyy)."<|||||>> > Has anyone tested loading 65B with `accelerate` to load on multiple GPUs?

>

> | | fp16 | int8 (bitsandbytes) |
> |---|---|---|
> | V100 | OK, 5xV100 | Bad results, short generated sequences |
> | A100 | OK, 6xA100 when using "auto" | OK, 3xA100 |

> Yes, I currently have a 65B fp16 model running on 6xV100 (5x should be enough). My working code is at https://github.com/zsc/llama_infer/ . If you hit CUDA OOM errors due to a bad distribution of weights among cards, one thing worth trying is tweaking the device_map (`accelerate` seems to count only weights when enforcing the memory cap in device_map, so there is an art to setting a custom cap a little lower for every card, especially card 0).

>

> Strangely, int8 (LLM.int8 to be specific) for 65B model works like a charm on A100, but leads to bad results on V100 with abnormally short generated sequences.

CC: @zsc

Just a heads up on the issue with V100 and bitsandbytes: I've encountered somewhat odd and possibly related behavior with long inputs, but relaxing the quantization threshold with `BitsAndBytesConfig(llm_int8_threshold=5.0)` (which I believe is set to 6 by default according to the [doc](https://huggingface.co/docs/transformers/main/main_classes/quantization)) resolved the issue. Ref: https://github.com/huggingface/transformers/issues/21987
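
For anyone who wants to try both suggestions together, here is a minimal sketch, not a tested recipe. It assumes a `transformers` version that ships `BitsAndBytesConfig`, with `bitsandbytes` and `accelerate` installed. The helper names `build_max_memory` and `load_int8`, and the 28 GiB / 20 GiB caps, are made up for illustration and should be tuned to your cards.

```python
def build_max_memory(n_gpus, per_gpu_gib, first_gpu_gib):
    """Build per-device memory caps, with a lower cap for GPU 0.

    Hypothetical helper: GPU 0 gets a smaller budget because it tends to
    hold extra buffers besides the weights.
    """
    if n_gpus < 1:
        raise ValueError("need at least one GPU")
    caps = {i: f"{per_gpu_gib}GiB" for i in range(n_gpus)}
    caps[0] = f"{first_gpu_gib}GiB"
    return caps


def load_int8(model_path, n_gpus, per_gpu_gib=28, first_gpu_gib=20, threshold=5.0):
    """Load a causal LM in int8 with a custom outlier threshold (illustrative)."""
    # Imported lazily so build_max_memory can be used without transformers installed.
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig

    quant = BitsAndBytesConfig(load_in_8bit=True, llm_int8_threshold=threshold)
    return AutoModelForCausalLM.from_pretrained(
        model_path,
        device_map="auto",  # let accelerate place layers across the cards
        max_memory=build_max_memory(n_gpus, per_gpu_gib, first_gpu_gib),
        quantization_config=quant,
    )
```

Something like `load_int8("./llama-65b-hf", n_gpus=3)` would then be the entry point, where the path is a placeholder for wherever your converted checkpoint lives.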

<|||||>@zoidbb Thank you!!! I'm sorry, the error comes from the "thomas/llama" branch, not this one!<|||||>Heads up to folks already using this PR: I will be pushing some changes today to address the maintainers' feedback that will break compatibility. In particular, I will be making a major change to how the RoPE encodings are computed. Please pull and re-convert the weights when the branch is updated. Sorry for the inconvenience.<|||||>>

Thanks for the heads up <|||||>As mentioned above, I've pushed an update (previous git hash was: 6a17e7f) that introduces some breaking changes to incorporate the Transformers maintainers' feedback. (There are still some tweaks to go, but nothing that should be breaking from here.)

**Please rerun the weight conversion scripts.**

Major changes:

- Removal of Decoder class

- `add_bos` on by default. `add_eos` is still off.

- Reimplementation of RoPE based on NeoX version. This required a weight permutation (NeoX's RoPE slices the head_dims in half, the Meta implementation interleaves), which is performed in the conversion script. **Note**: the weights are still the same shape, so if you don't rerun the conversion script and only rename the keys, the weights will load but be completely incorrect.
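
To illustrate why the RoPE change needs a permutation, here is a small self-contained sketch. It is not the conversion script's code: it works on a single head vector rather than on projection weights, the function names are hypothetical, and the base of 10000 is the usual RoPE default assumed here. It shows that reordering an interleaved vector into the half-split layout and then applying the half-split rotation gives the same values as the interleaved rotation, just in the permuted order.

```python
import math


def rope_angles(pos, head_dim, base=10000.0):
    # One rotation angle per pair of dimensions.
    return [pos * base ** (-2.0 * i / head_dim) for i in range(head_dim // 2)]


def rope_interleaved(x, pos):
    """Rotate adjacent pairs (x[2i], x[2i+1]): the interleaved layout."""
    out = [0.0] * len(x)
    for i, angle in enumerate(rope_angles(pos, len(x))):
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s
        out[2 * i + 1] = a * s + b * c
    return out


def rope_half_split(x, pos):
    """Rotate pairs (x[i], x[i + d/2]): the half-split layout."""
    half = len(x) // 2
    out = [0.0] * len(x)
    for i, angle in enumerate(rope_angles(pos, len(x))):
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[i], x[i + half]
        out[i] = a * c - b * s
        out[i + half] = a * s + b * c
    return out


def interleaved_to_half_split(x):
    """Reorder [a0, b0, a1, b1, ...] into [a0, a1, ..., b0, b1, ...]."""
    return x[0::2] + x[1::2]


x = [float(v) for v in range(1, 9)]
lhs = rope_half_split(interleaved_to_half_split(x), pos=3)
rhs = interleaved_to_half_split(rope_interleaved(x, pos=3))
assert all(abs(u - v) < 1e-9 for u, v in zip(lhs, rhs))
```

Because queries and keys get the same reordering, their dot products are unchanged, which is why the shapes stay the same while unpermuted weights silently give wrong results.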

As before, please let me know if you run into any issues; it is great to have this many people trying and testing the implementation.<|||||>I'm running into issues with the model conversion on the latest update: loading the model fails (on the latest version of the pull request) and pytorch_model-00033-of-00033 is missing.

Using the custom KoboldAI loader it reports a key error, and using the official HF loader it mentions not all weights could be initialized correctly but does finish the loading process.<|||||>> Running into issues with the model conversion on the latest update, loading the model fails (On the latest version of the pull request git) and pytorch_model-00033-of-00033 is missing.

>

> Using the custom KoboldAI loader it reports a key error, and using the official HF loader it mentions not all weights could be initialized correctly but does finish the loading process.

Confirmed this is an issue on both 7B and 13B: the last file is missing both from disk and from the index file.<|||||>@zphang you have a typo in the line defining the filename for the last .bin file: you use n_layers instead of n_layers+1. This leads to part 32 being overwritten, instead of part 33 being written (in the case of 7B).<|||||>Hmm, now I am a little confused. There is an off-by-one error, but it is that the numbering goes from 0 to n_layers instead of 1 to n_layers+1. It ought to load just fine though, since it's consistent with the index JSON.
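
For reference, the intended 1-based naming can be sketched as below. This is an illustration only, not the conversion script's code, and `shard_filenames` is a hypothetical helper. The filename pattern follows the `pytorch_model-00033-of-00033` name reported above.

```python
def shard_filenames(n_layers):
    """Return 1-indexed shard names: one per layer plus one extra shard."""
    total = n_layers + 1  # the extra shard holds the embeddings and final norm
    return [
        f"pytorch_model-{i:05d}-of-{total:05d}.bin"
        for i in range(1, total + 1)
    ]


names = shard_filenames(32)  # 7B has 32 layers
assert names[0] == "pytorch_model-00001-of-00033.bin"
assert names[-1] == "pytorch_model-00033-of-00033.bin"
```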

(The +1 is for the embedding layers and final norm)<|||||>Pushed fix for off-by-one naming.<|||||>@zphang now I'm getting this error:

```

RuntimeError: Failed to import transformers.models.llama.tokenization_t5 because of the following error (look up to see its traceback):

No module named 'transformers.models.llama.tokenization_t5'

```<|||||>Try again now!<|||||>Seems to load, running the new model conversion through its paces. Thanks for your work.<|||||>I am not having any luck yet, my errors remain identical. For context I use AutoModelForCausalLM in the code.<|||||>> I am not having any luck yet, my errors remain identical. For context I use AutoModelForCausalLM in the code.

I'm also using that, and it's working fine. Did you make sure to grab the latest commits and install them? Also, you have to run the conversion again.<|||||>Late-night coding moment: my update script tricked me into thinking it was applying the update, but it was only cloning the repository instead of installing it (normally I run a different, more thorough script that does), so my last few attempts used the same old code.

The update fixed it. Our software also has some regexes to clean up output that were not being applied correctly before, because of an issue I did not manage to track down. On this new iteration of the fork that bug is fixed, so while I do not know what caused it on the old version, I am happy to report that the latest version is a functional improvement for us.<|||||>The model behaves much better with these latest changes; I was always confused why I either got long elaborate nonsense or really short answers when using it as a chatbot :P Thanks for your work @zphang, this is epic.<|||||>Hi, thanks for your great work. Can we fine-tune it yet?<|||||>@zphang there seems to be an issue when using generate with `num_return_sequences`.

Running this code:

```python