url (string, length 58-61) | repository_url (string, 1 value) | labels_url (string, length 72-75) | comments_url (string, length 67-70) | events_url (string, length 65-68) | html_url (string, length 46-51) | id (int64, 599M-1.83B) | node_id (string, length 18-32) | number (int64, 1-6.09k) | title (string, length 1-290) | labels (list) | state (string, 2 values) | locked (bool, 1 class) | milestone (dict) | comments (int64, 0-54) | created_at (string, length 20) | updated_at (string, length 20) | closed_at (string, length 20, nullable) | active_lock_reason (null) | body (string, length 0-228k, nullable) | reactions (dict) | timeline_url (string, length 67-70) | performed_via_github_app (null) | state_reason (string, 3 values) | draft (bool, 2 classes) | pull_request (dict) | is_pull_request (bool, 2 classes) | comments_text (sequence) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/2473 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2473/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2473/comments | https://api.github.com/repos/huggingface/datasets/issues/2473/events | https://github.com/huggingface/datasets/pull/2473 | 917,538,629 | MDExOlB1bGxSZXF1ZXN0NjY3MDU5MjI5 | 2,473 | Add Disfl-QA | [] | closed | false | null | 2 | 2021-06-10T16:18:00Z | 2021-07-29T11:56:19Z | 2021-07-29T11:56:18Z | null | Dataset: https://github.com/google-research-datasets/disfl-qa

To-Do: Update README.md and add YAML tags | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2473/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2473/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2473.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2473",

"merged_at": "2021-07-29T11:56:18Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2473.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2473"

} | true | [

"Sounds great! It'll make things easier for the user while accessing the dataset. I'll make some changes to the current file then.",

"I've updated with the suggested changes. Updated the README, YAML tags as well (not sure of Size category tag as I couldn't pass the path of `dataset_infos.json` for this dataset)\r\n"

] |

https://api.github.com/repos/huggingface/datasets/issues/35 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/35/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/35/comments | https://api.github.com/repos/huggingface/datasets/issues/35/events | https://github.com/huggingface/datasets/pull/35 | 611,413,731 | MDExOlB1bGxSZXF1ZXN0NDEyNjAyMTc0 | 35 | [Tests] fix typo | [] | closed | false | null | 0 | 2020-05-03T13:23:49Z | 2020-05-03T13:24:21Z | 2020-05-03T13:24:20Z | null | @lhoestq - currently the slow test fails with:

```

_____________________________________________________________________________________ DatasetTest.test_load_real_dataset_xnli _____________________________________________________________________________________

self = <tests.test_dataset_common.DatasetTest testMethod=test_load_real_dataset_xnli>, dataset_name = 'xnli'

@slow

def test_load_real_dataset(self, dataset_name):

with tempfile.TemporaryDirectory() as temp_data_dir:

> dataset = load(dataset_name, data_dir=temp_data_dir)

tests/test_dataset_common.py:153:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

../../python_bin/nlp/load.py:497: in load

dbuilder.download_and_prepare(**download_and_prepare_kwargs)

../../python_bin/nlp/builder.py:383: in download_and_prepare

self._download_and_prepare(dl_manager=dl_manager, download_config=download_config)

../../python_bin/nlp/builder.py:627: in _download_and_prepare

dl_manager=dl_manager, max_examples_per_split=download_config.max_examples_per_split,

../../python_bin/nlp/builder.py:431: in _download_and_prepare

split_generators = self._split_generators(dl_manager, **split_generators_kwargs)

../../python_bin/nlp/datasets/xnli/8bf4185a2da1ef2a523186dd660d9adcf0946189e7fa5942ea31c63c07b68a7f/xnli.py:95: in _split_generators

dl_dir = dl_manager.download_and_extract(_DATA_URL)

../../python_bin/nlp/utils/download_manager.py:246: in download_and_extract

return self.extract(self.download(url_or_urls))

../../python_bin/nlp/utils/download_manager.py:186: in download

self._record_sizes_checksums(url_or_urls, downloaded_path_or_paths)

../../python_bin/nlp/utils/download_manager.py:166: in _record_sizes_checksums

self._recorded_sizes_checksums[url] = get_size_checksum(path)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

path = ('', '/tmp/tmpkajlg9yc/downloads/c0f7773c480a3f2d85639d777e0e17e65527460310d80760fd3fc2b2f2960556.c952a63cb17d3d46e412ceb7dbcd656ce2b15cc9ef17f50c28f81c48a7c853b5')

def get_size_checksum(path: str) -> Tuple[int, str]:

"""Compute the file size and the sha256 checksum of a file"""

m = sha256()

> with open(path, "rb") as f:

E TypeError: expected str, bytes or os.PathLike object, not tuple

../../python_bin/nlp/utils/checksums_utils.py:81: TypeError

```

- the checksums probably need to be updated, no? We should also think about how to write a test for the checksums. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 1,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/35/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/35/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/35.diff",

"html_url": "https://github.com/huggingface/datasets/pull/35",

"merged_at": "2020-05-03T13:24:20Z",

"patch_url": "https://github.com/huggingface/datasets/pull/35.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/35"

} | true | [] |
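The traceback in the record above ends in `get_size_checksum`, which received a tuple where a single path was expected. A minimal sketch of such a helper with an early type check follows. Only the signature, docstring, `sha256()` call, and `open(path, "rb")` come from the traceback; the type guard, the chunked read, and the `demo` helper are assumptions added for illustration.

```python
import hashlib
import os
import tempfile
from typing import Tuple


def get_size_checksum(path: str) -> Tuple[int, str]:
    """Compute the file size and the sha256 checksum of a file."""
    # Assumption: fail early with a clear message if a tuple (or any
    # non-path object) is passed, which is the case shown in the traceback.
    if not isinstance(path, (str, bytes, os.PathLike)):
        raise TypeError(f"expected a single path, got {type(path).__name__}: {path!r}")
    m = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in 1 MiB chunks so large files are not held in memory at once.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            m.update(chunk)
    return os.path.getsize(path), m.hexdigest()


def demo() -> Tuple[int, str]:
    # Hypothetical helper: write a small temporary file and checksum it.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"hello")
        name = tmp.name
    try:
        return get_size_checksum(name)
    finally:
        os.remove(name)
```

Calling `get_size_checksum(("", "/tmp/some/file"))` raises `TypeError` before any file is opened, which is roughly the kind of check a checksum test could assert on.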

https://api.github.com/repos/huggingface/datasets/issues/4979 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4979/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4979/comments | https://api.github.com/repos/huggingface/datasets/issues/4979/events | https://github.com/huggingface/datasets/pull/4979 | 1,374,820,758 | PR_kwDODunzps4_CouM | 4,979 | Fix missing tags in dataset cards | [] | closed | false | null | 1 | 2022-09-15T16:51:03Z | 2022-09-22T12:37:55Z | 2022-09-15T17:12:09Z | null | Fix missing tags in dataset cards:

- amazon_us_reviews

- art

- discofuse

- indic_glue

- ubuntu_dialogs_corpus

This PR partially fixes the missing tags in dataset cards. Subsequent PRs will follow to complete this task.

Related to:

- #4833

- #4891

- #4896

- #4908

- #4921

- #4931 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4979/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4979/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4979.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4979",

"merged_at": "2022-09-15T17:12:09Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4979.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4979"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._"

] |

https://api.github.com/repos/huggingface/datasets/issues/5797 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5797/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5797/comments | https://api.github.com/repos/huggingface/datasets/issues/5797/events | https://github.com/huggingface/datasets/issues/5797 | 1,685,501,199 | I_kwDODunzps5kdrUP | 5,797 | load_dataset is case sensitive? | [] | open | false | null | 2 | 2023-04-26T18:19:04Z | 2023-04-27T11:56:58Z | null | null | ### Describe the bug

Is the `load_dataset()` function case sensitive?

### Steps to reproduce the bug

The following two calls behave completely differently.

1. load_dataset('mbzuai/bactrian-x','en')

2. load_dataset('MBZUAI/Bactrian-X','en')

### Expected behavior

Compare 1 and 2.

1 will download all 52 subsets, shell output:

```Downloading and preparing dataset json/MBZUAI--bactrian-X to xxx```

2 will only download a single subset, shell output:

```Downloading and preparing dataset bactrian-x/en to xxx```

### Environment info

Python 3.10.11

datasets Version: 2.11.0 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5797/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5797/timeline | null | null | null | null | false | [

"Hi @haonan-li, thank you for the report! It seems to be a bug on the [`huggingface_hub`](https://github.com/huggingface/huggingface_hub) side: there isn't even a dataset named `mbzuai/bactrian-x` on the Hub. I opened an [issue](https://github.com/huggingface/huggingface_hub/issues/1453) there.",

"I think `load_dataset(\"mbzuai/bactrian-x\")` shouldn't load at all and should raise an error, but because of [this fallback](https://github.com/huggingface/datasets/blob/main/src/datasets/load.py#L1194) to packaged loaders when no other options are applicable, it loads the dataset with the standard `json` loader instead of the custom loading script."

] |
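The second comment in the record above describes a fallback: when no loading script matches the requested name, a generic packaged loader is used instead. The toy sketch below illustrates that general pattern only. `SCRIPT_LOADERS`, `generic_json_loader`, and `resolve` are hypothetical names, not part of the `datasets` library, and the returned strings are not meant to reproduce the exact shell output quoted in the issue.

```python
from typing import Callable, Dict

# Hypothetical registry of datasets that ship a custom loading script.
# Keys are matched exactly, so the lookup is case-sensitive.
SCRIPT_LOADERS: Dict[str, Callable[[str], str]] = {
    "MBZUAI/Bactrian-X": lambda config: f"bactrian-x/{config}",
}


def generic_json_loader(repo_id: str) -> str:
    # Stand-in for a packaged loader: it ignores the config and
    # would read every data file in the repository.
    return "json/" + repo_id.replace("/", "--")


def resolve(repo_id: str, config: str) -> str:
    """Pick a loader for repo_id, falling back to the generic one."""
    loader = SCRIPT_LOADERS.get(repo_id)
    if loader is not None:
        # Exact match: the custom script honours the requested config.
        return loader(config)
    # No exact match: silently fall back instead of raising an error.
    return generic_json_loader(repo_id)
```

With this sketch, `resolve("MBZUAI/Bactrian-X", "en")` takes the script path, while `resolve("mbzuai/bactrian-x", "en")` silently takes the generic path. Raising an error in the fallback branch would be the stricter alternative suggested in the comment.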

https://api.github.com/repos/huggingface/datasets/issues/1775 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1775/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1775/comments | https://api.github.com/repos/huggingface/datasets/issues/1775/events | https://github.com/huggingface/datasets/issues/1775 | 792,742,120 | MDU6SXNzdWU3OTI3NDIxMjA= | 1,775 | Efficient ways to iterate the dataset | [] | closed | false | null | 2 | 2021-01-24T07:54:31Z | 2021-01-24T09:50:39Z | 2021-01-24T09:50:39Z | null | For a large dataset that does not fit in memory, how can I select only a subset of features from each example?

If I iterate over the dataset and then select the subset of features one by one, the resulting memory usage will be huge. Is there a way to solve this?

Thanks | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1775/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1775/timeline | null | completed | null | null | false | [

"It seems that selecting a subset of columns directly from the dataset, i.e., dataset[\"column\"], is slow.",

"I was wrong, ```dataset[\"column\"]``` is fast."

] |

https://api.github.com/repos/huggingface/datasets/issues/291 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/291/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/291/comments | https://api.github.com/repos/huggingface/datasets/issues/291/events | https://github.com/huggingface/datasets/pull/291 | 642,688,450 | MDExOlB1bGxSZXF1ZXN0NDM3NjM1NjMy | 291 | break statement not required | [] | closed | false | null | 3 | 2020-06-22T01:40:55Z | 2020-06-23T17:57:58Z | 2020-06-23T09:37:02Z | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/291/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/291/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/291.diff",

"html_url": "https://github.com/huggingface/datasets/pull/291",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/291.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/291"

} | true | [

"I guess the test is failing due to a connection error?",

"We just fixed the other dataset on master. Could you rebase from master and push to rerun the CI ?",

"If I'm not wrong this function returns None if no main class was found.\r\nI think it makes things less clear not to have a return at the end of the function.\r\nI guess we can have one return in the for loop instead of the break statement, AND one return at the end to explicitly return None.\r\nWhat do you think ?"

] |

|
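The last comment in the record above suggests returning from inside the loop instead of using `break`, and keeping an explicit `return None` at the end. A minimal sketch of that shape follows. `Base` and `find_main_class` are hypothetical names chosen for illustration; the actual function under review is not shown in the record.

```python
import inspect
from typing import Optional, Type


class Base:
    """Hypothetical base class that a 'main class' must inherit from."""


def find_main_class(namespace: dict, base: Type = Base) -> Optional[Type]:
    """Return the first class in `namespace` that subclasses `base`, else None."""
    for obj in namespace.values():
        if inspect.isclass(obj) and issubclass(obj, base) and obj is not base:
            # Return directly from the loop instead of assigning and breaking.
            return obj
    # Explicit return keeps the "nothing found" case visible to the reader.
    return None
```

Both returns are kept on purpose: the one in the loop replaces the `break`, and the trailing one documents that `None` is an expected result rather than an accident of falling off the end of the function.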

https://api.github.com/repos/huggingface/datasets/issues/3042 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3042/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3042/comments | https://api.github.com/repos/huggingface/datasets/issues/3042/events | https://github.com/huggingface/datasets/pull/3042 | 1,020,047,289 | PR_kwDODunzps4s5Lxo | 3,042 | Improving elasticsearch integration | [] | open | false | null | 1 | 2021-10-07T13:28:35Z | 2022-07-06T15:19:48Z | null | null | - adding murmurhash signature to sample in index

- adding optional credentials for remote elasticsearch server

- enabling sample update in index

- upgrade the elasticsearch 7.10.1 python client

- adding ElasticsearchBulider to instantiate a dataset from an index and a filtering query | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3042/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3042/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3042.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3042",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/3042.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3042"

} | true | [

"@lhoestq @albertvillanova I was trying to fix the failing tests in CircleCI but is there a test elasticsearch instance somewhere? If not, can I launch a docker container to have one?"

] |

https://api.github.com/repos/huggingface/datasets/issues/5211 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5211/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5211/comments | https://api.github.com/repos/huggingface/datasets/issues/5211/events | https://github.com/huggingface/datasets/pull/5211 | 1,438,544,617 | PR_kwDODunzps5CVgBx | 5,211 | Update Overview.ipynb google colab | [] | closed | false | null | 3 | 2022-11-07T15:23:52Z | 2022-11-29T15:59:48Z | 2022-11-29T15:54:17Z | null | - removed metrics stuff

- added image example

- added audio example (with ffmpeg instructions)

- updated the "add a new dataset" section | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5211/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5211/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5211.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5211",

"merged_at": "2022-11-29T15:54:17Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5211.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5211"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._",

"WDYT @albertvillanova ?",

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_5211). All of your documentation changes will be reflected on that endpoint."

] |

https://api.github.com/repos/huggingface/datasets/issues/3601 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3601/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3601/comments | https://api.github.com/repos/huggingface/datasets/issues/3601/events | https://github.com/huggingface/datasets/pull/3601 | 1,108,207,131 | PR_kwDODunzps4xROtF | 3,601 | Add conll2003 licensing | [] | closed | false | null | 0 | 2022-01-19T15:00:41Z | 2022-01-19T17:17:28Z | 2022-01-19T17:17:28Z | null | Following https://github.com/huggingface/datasets/issues/3582, this PR updates the licensing section of the CoNLL2003 dataset. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3601/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3601/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3601.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3601",

"merged_at": "2022-01-19T17:17:28Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3601.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3601"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/5740 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5740/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5740/comments | https://api.github.com/repos/huggingface/datasets/issues/5740/events | https://github.com/huggingface/datasets/pull/5740 | 1,664,132,130 | PR_kwDODunzps5OHI08 | 5,740 | Fix CI mock filesystem fixtures | [] | closed | false | null | 5 | 2023-04-12T08:52:35Z | 2023-04-13T11:01:24Z | 2023-04-13T10:54:13Z | null | This PR fixes the fixtures of our CI mock filesystems.

Before, we had to pass `clobber=True` to `fsspec.register_implementation` to overwrite the previously added "mock" filesystem, which was still present. That meant the mock filesystem fixture was not working properly, because the fixture should have deleted the previously added "mock" filesystem.

This PR fixes the mock filesystem fixtures, so that the "mock" filesystem is properly deleted from the inner `fsspec` registry.

Tests were added to check the correct behavior of the mock filesystem fixtures.

Related to:

- #5733 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5740/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5740/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5740.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5740",

"merged_at": "2023-04-13T10:54:13Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5740.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5740"

} | true | [

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007003 / 0.011353 (-0.004350) | 0.004854 / 0.011008 (-0.006154) | 0.096982 / 0.038508 (0.058474) | 0.033218 / 0.023109 (0.010109) | 0.314088 / 0.275898 (0.038190) | 0.351315 / 0.323480 (0.027835) | 0.005679 / 0.007986 (-0.002307) | 0.005404 / 0.004328 (0.001075) | 0.071773 / 0.004250 (0.067522) | 0.044593 / 0.037052 (0.007540) | 0.323643 / 0.258489 (0.065154) | 0.357172 / 0.293841 (0.063331) | 0.036782 / 0.128546 (-0.091764) | 0.012146 / 0.075646 (-0.063501) | 0.334874 / 0.419271 (-0.084397) | 0.051475 / 0.043533 (0.007942) | 0.305949 / 0.255139 (0.050810) | 0.339326 / 0.283200 (0.056126) | 0.101509 / 0.141683 (-0.040174) | 1.458254 / 1.452155 (0.006099) | 1.535252 / 1.492716 (0.042535) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.264837 / 0.018006 (0.246831) | 0.441444 / 0.000490 (0.440955) | 0.003331 / 0.000200 (0.003131) | 0.000084 / 0.000054 (0.000030) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.026529 / 0.037411 (-0.010882) | 0.105924 / 0.014526 (0.091398) | 0.117191 / 0.176557 (-0.059365) | 0.176606 / 0.737135 (-0.560529) | 0.123452 / 0.296338 (-0.172887) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.412351 / 0.215209 (0.197142) | 4.135468 / 2.077655 (2.057813) | 1.912820 / 1.504120 (0.408700) | 1.738993 / 1.541195 (0.197798) | 1.754228 / 1.468490 (0.285738) | 0.692239 / 4.584777 (-3.892538) | 3.765672 / 3.745712 (0.019959) | 2.081141 / 5.269862 (-3.188720) | 1.425153 / 4.565676 (-3.140523) | 0.085055 / 0.424275 (-0.339220) | 0.011918 / 0.007607 (0.004311) | 0.517573 / 0.226044 (0.291529) | 5.179809 / 2.268929 (2.910881) | 2.471620 / 55.444624 (-52.973005) | 2.140634 / 6.876477 (-4.735843) | 2.200150 / 2.142072 (0.058077) | 0.831662 / 4.805227 (-3.973566) | 0.168828 / 6.500664 
(-6.331836) | 0.062755 / 0.075469 (-0.012714) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.196890 / 1.841788 (-0.644898) | 14.826423 / 8.074308 (6.752114) | 14.020782 / 10.191392 (3.829390) | 0.161275 / 0.680424 (-0.519149) | 0.017467 / 0.534201 (-0.516734) | 0.422278 / 0.579283 (-0.157005) | 0.424053 / 0.434364 (-0.010311) | 0.490768 / 0.540337 (-0.049570) | 0.584490 / 1.386936 (-0.802446) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007102 / 0.011353 (-0.004250) | 0.005145 / 
0.011008 (-0.005863) | 0.073823 / 0.038508 (0.035315) | 0.032947 / 0.023109 (0.009838) | 0.336978 / 0.275898 (0.061080) | 0.368961 / 0.323480 (0.045481) | 0.006052 / 0.007986 (-0.001934) | 0.003970 / 0.004328 (-0.000358) | 0.072925 / 0.004250 (0.068674) | 0.044502 / 0.037052 (0.007450) | 0.340849 / 0.258489 (0.082360) | 0.381487 / 0.293841 (0.087646) | 0.037207 / 0.128546 (-0.091339) | 0.012095 / 0.075646 (-0.063551) | 0.085206 / 0.419271 (-0.334065) | 0.056236 / 0.043533 (0.012703) | 0.334048 / 0.255139 (0.078909) | 0.360442 / 0.283200 (0.077242) | 0.104402 / 0.141683 (-0.037281) | 1.446907 / 1.452155 (-0.005248) | 1.542430 / 1.492716 (0.049713) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.238720 / 0.018006 (0.220714) | 0.445857 / 0.000490 (0.445367) | 0.009280 / 0.000200 (0.009080) | 0.000150 / 0.000054 (0.000095) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.028414 / 0.037411 (-0.008998) | 0.110506 / 0.014526 (0.095981) | 0.124593 / 0.176557 (-0.051964) | 0.170951 / 0.737135 (-0.566184) | 0.128033 / 0.296338 (-0.168305) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.426206 / 0.215209 (0.210997) | 4.267289 / 2.077655 (2.189634) | 2.026880 / 1.504120 (0.522760) | 1.844052 / 1.541195 (0.302858) | 1.897697 / 1.468490 (0.429207) | 0.713545 / 4.584777 (-3.871232) | 3.815052 / 3.745712 (0.069339) | 3.217091 / 5.269862 (-2.052770) | 1.790546 / 4.565676 (-2.775130) | 0.087501 / 0.424275 (-0.336774) | 0.012136 / 0.007607 (0.004529) | 0.534495 / 0.226044 (0.308451) | 5.325913 / 2.268929 (3.056984) | 2.484309 / 55.444624 (-52.960315) | 2.149721 / 6.876477 (-4.726756) | 2.158764 / 2.142072 (0.016692) | 0.855273 / 4.805227 (-3.949954) | 0.170374 / 6.500664 (-6.330290) | 0.064053 / 0.075469 (-0.011416) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.253171 / 1.841788 (-0.588617) | 15.254562 / 8.074308 (7.180254) | 14.242119 / 10.191392 (4.050727) | 0.159298 / 0.680424 (-0.521126) | 0.017504 / 0.534201 (-0.516696) | 0.419710 / 0.579283 (-0.159574) | 0.417879 / 0.434364 (-0.016485) | 0.486328 / 0.540337 (-0.054009) | 0.578933 / 1.386936 (-0.808003) |\n\n</details>\n</details>\n\n\n",

"_The documentation is not available anymore as the PR was closed or merged._",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008476 / 0.011353 (-0.002877) | 0.005745 / 0.011008 (-0.005263) | 0.115307 / 0.038508 (0.076799) | 0.039356 / 0.023109 (0.016247) | 0.367155 / 0.275898 (0.091257) | 0.422147 / 0.323480 (0.098667) | 0.006817 / 0.007986 (-0.001168) | 0.004652 / 0.004328 (0.000323) | 0.084045 / 0.004250 (0.079795) | 0.055483 / 0.037052 (0.018431) | 0.364249 / 0.258489 (0.105760) | 0.415975 / 0.293841 (0.122134) | 0.041322 / 0.128546 (-0.087224) | 0.014178 / 0.075646 (-0.061469) | 0.392658 / 0.419271 (-0.026614) | 0.060156 / 0.043533 (0.016623) | 0.373938 / 0.255139 (0.118799) | 0.397494 / 0.283200 (0.114294) | 0.113811 / 0.141683 (-0.027872) | 1.688581 / 1.452155 (0.236427) | 1.790374 / 1.492716 (0.297658) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.222203 / 0.018006 (0.204196) | 0.471109 / 0.000490 (0.470619) | 0.007071 / 0.000200 (0.006871) | 0.000156 / 0.000054 (0.000102) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.032112 / 0.037411 (-0.005299) | 0.118726 / 0.014526 (0.104200) | 0.134918 / 0.176557 (-0.041639) | 0.207766 / 0.737135 (-0.529369) | 0.139756 / 0.296338 (-0.156582) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.479858 / 0.215209 (0.264649) | 4.798428 / 2.077655 (2.720773) | 2.221573 / 1.504120 (0.717453) | 1.964956 / 1.541195 (0.423761) | 2.021763 / 1.468490 (0.553273) | 0.820401 / 4.584777 (-3.764376) | 4.533887 / 3.745712 (0.788175) | 4.121332 / 5.269862 (-1.148529) | 2.195807 / 4.565676 (-2.369869) | 0.103133 / 0.424275 (-0.321142) | 0.014620 / 0.007607 (0.007013) | 0.605012 / 0.226044 (0.378967) | 5.966623 / 2.268929 (3.697694) | 2.844118 / 55.444624 (-52.600506) | 2.463569 / 6.876477 (-4.412907) | 2.597177 / 2.142072 (0.455105) | 0.983201 / 4.805227 (-3.822026) | 0.199500 / 6.500664 
(-6.301164) | 0.078387 / 0.075469 (0.002918) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.401083 / 1.841788 (-0.440705) | 17.258725 / 8.074308 (9.184417) | 16.825992 / 10.191392 (6.634600) | 0.216762 / 0.680424 (-0.463662) | 0.021135 / 0.534201 (-0.513066) | 0.513688 / 0.579283 (-0.065595) | 0.488892 / 0.434364 (0.054529) | 0.566745 / 0.540337 (0.026408) | 0.688958 / 1.386936 (-0.697978) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007948 / 0.011353 (-0.003405) | 0.005981 / 
0.011008 (-0.005027) | 0.084474 / 0.038508 (0.045966) | 0.037952 / 0.023109 (0.014843) | 0.383359 / 0.275898 (0.107461) | 0.409324 / 0.323480 (0.085844) | 0.006641 / 0.007986 (-0.001344) | 0.004785 / 0.004328 (0.000456) | 0.083214 / 0.004250 (0.078964) | 0.053177 / 0.037052 (0.016125) | 0.393147 / 0.258489 (0.134658) | 0.438496 / 0.293841 (0.144655) | 0.042090 / 0.128546 (-0.086456) | 0.013373 / 0.075646 (-0.062273) | 0.097585 / 0.419271 (-0.321686) | 0.056359 / 0.043533 (0.012826) | 0.378113 / 0.255139 (0.122974) | 0.403874 / 0.283200 (0.120674) | 0.123503 / 0.141683 (-0.018180) | 1.639557 / 1.452155 (0.187403) | 1.759787 / 1.492716 (0.267071) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.242534 / 0.018006 (0.224528) | 0.459040 / 0.000490 (0.458550) | 0.000454 / 0.000200 (0.000254) | 0.000066 / 0.000054 (0.000011) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.031747 / 0.037411 (-0.005664) | 0.125823 / 0.014526 (0.111297) | 0.138985 / 0.176557 (-0.037571) | 0.194371 / 0.737135 (-0.542764) | 0.148905 / 0.296338 (-0.147433) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.508201 / 0.215209 (0.292992) | 5.007519 / 2.077655 (2.929865) | 2.412956 / 1.504120 (0.908836) | 2.143378 / 1.541195 (0.602183) | 2.192966 / 1.468490 (0.724476) | 0.828497 / 4.584777 (-3.756280) | 4.496457 / 3.745712 (0.750745) | 2.397546 / 5.269862 (-2.872315) | 1.522889 / 4.565676 (-3.042787) | 0.099904 / 0.424275 (-0.324371) | 0.014561 / 0.007607 (0.006954) | 0.627417 / 0.226044 (0.401373) | 6.296441 / 2.268929 (4.027512) | 2.962858 / 55.444624 (-52.481767) | 2.543083 / 6.876477 (-4.333394) | 2.711884 / 2.142072 (0.569811) | 0.997969 / 4.805227 (-3.807259) | 0.200283 / 6.500664 (-6.300382) | 0.075934 / 0.075469 (0.000465) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.541707 / 1.841788 (-0.300081) | 17.791559 / 8.074308 (9.717251) | 16.782877 / 10.191392 (6.591485) | 0.171954 / 0.680424 (-0.508470) | 0.020506 / 0.534201 (-0.513695) | 0.504189 / 0.579283 (-0.075094) | 0.501655 / 0.434364 (0.067291) | 0.583120 / 0.540337 (0.042782) | 0.694931 / 1.386936 (-0.692005) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007613 / 0.011353 (-0.003740) | 0.005057 / 0.011008 (-0.005951) | 0.099147 / 0.038508 (0.060639) | 0.035358 / 0.023109 (0.012249) | 0.303442 / 0.275898 (0.027544) | 0.336898 / 0.323480 (0.013418) | 0.006216 / 0.007986 (-0.001770) | 0.004085 / 0.004328 (-0.000244) | 0.074567 / 0.004250 (0.070317) | 0.050917 / 0.037052 (0.013865) | 0.301786 / 0.258489 (0.043297) | 0.341362 / 0.293841 (0.047521) | 0.037019 / 0.128546 (-0.091528) | 0.011977 / 0.075646 (-0.063669) | 0.334688 / 0.419271 (-0.084583) | 0.051326 / 0.043533 (0.007793) | 0.299878 / 0.255139 (0.044739) | 0.325571 / 0.283200 (0.042371) | 0.110744 / 0.141683 (-0.030939) | 1.480898 / 1.452155 (0.028743) | 1.566917 / 1.492716 (0.074201) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.253249 / 0.018006 (0.235242) | 0.558576 / 0.000490 (0.558086) | 0.003838 / 0.000200 (0.003638) | 0.000085 / 0.000054 (0.000030) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.028731 / 0.037411 (-0.008681) | 0.110643 / 0.014526 (0.096117) | 0.119560 / 0.176557 (-0.056996) | 0.178010 / 0.737135 (-0.559126) | 0.130286 / 0.296338 (-0.166053) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.400190 / 0.215209 (0.184981) | 3.999326 / 2.077655 (1.921672) | 1.797332 / 1.504120 (0.293212) | 1.610808 / 1.541195 (0.069613) | 1.679949 / 1.468490 (0.211459) | 0.696539 / 4.584777 (-3.888238) | 3.784766 / 3.745712 (0.039054) | 2.205008 / 5.269862 (-3.064854) | 1.501697 / 4.565676 (-3.063979) | 0.085553 / 0.424275 (-0.338723) | 0.012223 / 0.007607 (0.004616) | 0.494858 / 0.226044 (0.268813) | 4.968535 / 2.268929 (2.699606) | 2.258759 / 55.444624 (-53.185865) | 1.926236 / 6.876477 (-4.950241) | 2.072155 / 2.142072 (-0.069917) | 0.838354 / 4.805227 (-3.966873) | 0.168810 / 6.500664 
(-6.331854) | 0.064347 / 0.075469 (-0.011122) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.166696 / 1.841788 (-0.675091) | 14.721287 / 8.074308 (6.646979) | 14.319272 / 10.191392 (4.127880) | 0.144534 / 0.680424 (-0.535890) | 0.017502 / 0.534201 (-0.516699) | 0.422682 / 0.579283 (-0.156601) | 0.424426 / 0.434364 (-0.009938) | 0.493561 / 0.540337 (-0.046777) | 0.586765 / 1.386936 (-0.800171) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007764 / 0.011353 (-0.003589) | 0.005516 / 
0.011008 (-0.005492) | 0.074745 / 0.038508 (0.036237) | 0.034364 / 0.023109 (0.011255) | 0.344318 / 0.275898 (0.068420) | 0.374779 / 0.323480 (0.051299) | 0.005904 / 0.007986 (-0.002082) | 0.004323 / 0.004328 (-0.000005) | 0.073191 / 0.004250 (0.068941) | 0.051549 / 0.037052 (0.014496) | 0.341792 / 0.258489 (0.083303) | 0.387576 / 0.293841 (0.093735) | 0.037483 / 0.128546 (-0.091063) | 0.012410 / 0.075646 (-0.063237) | 0.086480 / 0.419271 (-0.332791) | 0.050035 / 0.043533 (0.006502) | 0.335475 / 0.255139 (0.080336) | 0.361436 / 0.283200 (0.078236) | 0.106890 / 0.141683 (-0.034792) | 1.464032 / 1.452155 (0.011877) | 1.563490 / 1.492716 (0.070774) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.268765 / 0.018006 (0.250758) | 0.563811 / 0.000490 (0.563321) | 0.004904 / 0.000200 (0.004704) | 0.000096 / 0.000054 (0.000041) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.029885 / 0.037411 (-0.007526) | 0.113885 / 0.014526 (0.099359) | 0.124283 / 0.176557 (-0.052274) | 0.173619 / 0.737135 (-0.563517) | 0.131781 / 0.296338 (-0.164557) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.420296 / 0.215209 (0.205087) | 4.167656 / 2.077655 (2.090001) | 1.982356 / 1.504120 (0.478237) | 1.792181 / 1.541195 (0.250986) | 1.871459 / 1.468490 (0.402969) | 0.707066 / 4.584777 (-3.877711) | 3.835922 / 3.745712 (0.090210) | 3.506796 / 5.269862 (-1.763066) | 1.857172 / 4.565676 (-2.708505) | 0.086219 / 0.424275 (-0.338056) | 0.012404 / 0.007607 (0.004796) | 0.512393 / 0.226044 (0.286348) | 5.111623 / 2.268929 (2.842695) | 2.493523 / 55.444624 (-52.951101) | 2.188220 / 6.876477 (-4.688257) | 2.319096 / 2.142072 (0.177024) | 0.844084 / 4.805227 (-3.961144) | 0.171130 / 6.500664 (-6.329534) | 0.065913 / 0.075469 (-0.009556) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.284768 / 1.841788 (-0.557020) | 15.334610 / 8.074308 (7.260301) | 14.724436 / 10.191392 (4.533044) | 0.188425 / 0.680424 (-0.491999) | 0.017984 / 0.534201 (-0.516217) | 0.428150 / 0.579283 (-0.151133) | 0.429013 / 0.434364 (-0.005351) | 0.500818 / 0.540337 (-0.039519) | 0.592879 / 1.386936 (-0.794057) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006870 / 0.011353 (-0.004483) | 0.004702 / 0.011008 (-0.006306) | 0.099258 / 0.038508 (0.060750) | 0.029008 / 0.023109 (0.005899) | 0.330599 / 0.275898 (0.054701) | 0.361163 / 0.323480 (0.037683) | 0.005020 / 0.007986 (-0.002965) | 0.003474 / 0.004328 (-0.000855) | 0.075902 / 0.004250 (0.071651) | 0.037462 / 0.037052 (0.000410) | 0.336213 / 0.258489 (0.077724) | 0.370645 / 0.293841 (0.076804) | 0.032435 / 0.128546 (-0.096111) | 0.011686 / 0.075646 (-0.063960) | 0.326040 / 0.419271 (-0.093232) | 0.043750 / 0.043533 (0.000217) | 0.332629 / 0.255139 (0.077490) | 0.353302 / 0.283200 (0.070102) | 0.090421 / 0.141683 (-0.051262) | 1.470097 / 1.452155 (0.017942) | 1.544908 / 1.492716 (0.052191) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.213418 / 0.018006 (0.195411) | 0.434808 / 0.000490 (0.434319) | 0.005949 / 0.000200 (0.005749) | 0.000072 / 0.000054 (0.000018) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.023085 / 0.037411 (-0.014327) | 0.098222 / 0.014526 (0.083696) | 0.104543 / 0.176557 (-0.072013) | 0.165423 / 0.737135 (-0.571713) | 0.108732 / 0.296338 (-0.187606) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.433933 / 0.215209 (0.218724) | 4.334358 / 2.077655 (2.256704) | 2.013984 / 1.504120 (0.509864) | 1.862981 / 1.541195 (0.321787) | 1.873936 / 1.468490 (0.405446) | 0.699857 / 4.584777 (-3.884920) | 3.417815 / 3.745712 (-0.327897) | 1.946403 / 5.269862 (-3.323459) | 1.308683 / 4.565676 (-3.256994) | 0.083297 / 0.424275 (-0.340978) | 0.012610 / 0.007607 (0.005003) | 0.540877 / 0.226044 (0.314832) | 5.408293 / 2.268929 (3.139365) | 2.529574 / 55.444624 (-52.915050) | 2.201047 / 6.876477 (-4.675429) | 2.392966 / 2.142072 (0.250894) | 0.812719 / 4.805227 (-3.992509) | 0.154013 / 6.500664 
(-6.346651) | 0.067614 / 0.075469 (-0.007855) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.228150 / 1.841788 (-0.613638) | 14.037090 / 8.074308 (5.962782) | 14.259416 / 10.191392 (4.068024) | 0.155554 / 0.680424 (-0.524870) | 0.016521 / 0.534201 (-0.517680) | 0.379615 / 0.579283 (-0.199668) | 0.421352 / 0.434364 (-0.013012) | 0.446512 / 0.540337 (-0.093825) | 0.531802 / 1.386936 (-0.855134) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006629 / 0.011353 (-0.004724) | 0.004432 / 
0.011008 (-0.006577) | 0.076662 / 0.038508 (0.038154) | 0.027674 / 0.023109 (0.004565) | 0.341667 / 0.275898 (0.065769) | 0.376493 / 0.323480 (0.053014) | 0.005076 / 0.007986 (-0.002910) | 0.004655 / 0.004328 (0.000326) | 0.075698 / 0.004250 (0.071448) | 0.036905 / 0.037052 (-0.000147) | 0.342394 / 0.258489 (0.083905) | 0.383330 / 0.293841 (0.089489) | 0.031729 / 0.128546 (-0.096817) | 0.011582 / 0.075646 (-0.064064) | 0.085721 / 0.419271 (-0.333551) | 0.042012 / 0.043533 (-0.001521) | 0.342063 / 0.255139 (0.086924) | 0.367335 / 0.283200 (0.084136) | 0.089641 / 0.141683 (-0.052042) | 1.520353 / 1.452155 (0.068198) | 1.643653 / 1.492716 (0.150937) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.178995 / 0.018006 (0.160989) | 0.436544 / 0.000490 (0.436055) | 0.002311 / 0.000200 (0.002111) | 0.000081 / 0.000054 (0.000026) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.025386 / 0.037411 (-0.012026) | 0.099717 / 0.014526 (0.085192) | 0.110809 / 0.176557 (-0.065747) | 0.162931 / 0.737135 (-0.574204) | 0.110430 / 0.296338 (-0.185909) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.438592 / 0.215209 (0.223382) | 4.372560 / 2.077655 (2.294905) | 2.069686 / 1.504120 (0.565567) | 1.860576 / 1.541195 (0.319382) | 1.898161 / 1.468490 (0.429671) | 0.698353 / 4.584777 (-3.886424) | 3.462440 / 3.745712 (-0.283272) | 1.868602 / 5.269862 (-3.401260) | 1.160498 / 4.565676 (-3.405179) | 0.082869 / 0.424275 (-0.341406) | 0.012690 / 0.007607 (0.005083) | 0.533278 / 0.226044 (0.307233) | 5.386214 / 2.268929 (3.117285) | 2.519243 / 55.444624 (-52.925382) | 2.171109 / 6.876477 (-4.705368) | 2.272617 / 2.142072 (0.130544) | 0.805843 / 4.805227 (-3.999384) | 0.152275 / 6.500664 (-6.348389) | 0.068038 / 0.075469 (-0.007431) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.291967 / 1.841788 (-0.549821) | 14.386474 / 8.074308 (6.312166) | 14.180693 / 10.191392 (3.989301) | 0.131714 / 0.680424 (-0.548710) | 0.016596 / 0.534201 (-0.517605) | 0.384293 / 0.579283 (-0.194990) | 0.404051 / 0.434364 (-0.030313) | 0.452167 / 0.540337 (-0.088170) | 0.542718 / 1.386936 (-0.844218) |\n\n</details>\n</details>\n\n\n"

] |

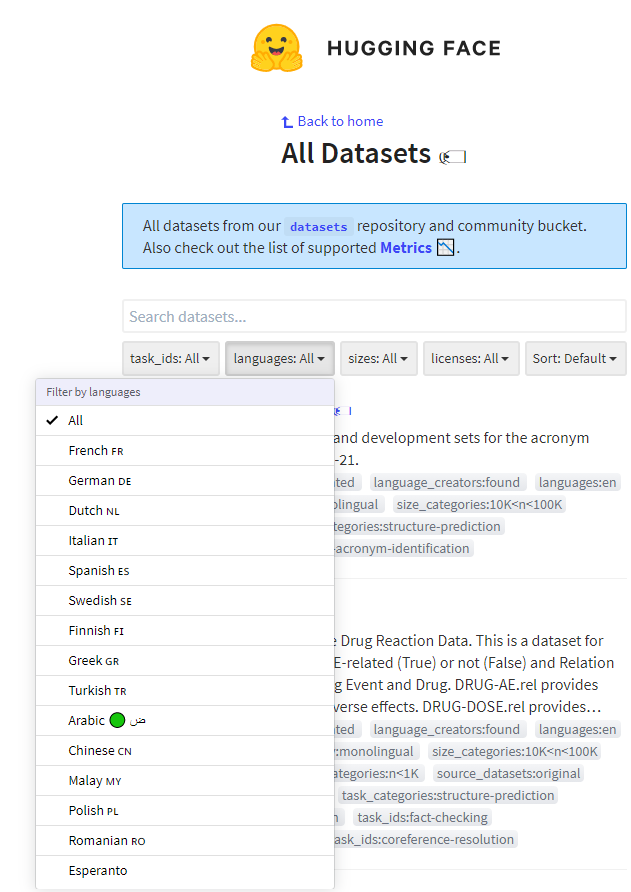

https://api.github.com/repos/huggingface/datasets/issues/1618 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1618/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1618/comments | https://api.github.com/repos/huggingface/datasets/issues/1618/events | https://github.com/huggingface/datasets/issues/1618 | 772,248,730 | MDU6SXNzdWU3NzIyNDg3MzA= | 1,618 | Can't filter language:EN on https://huggingface.co/datasets | [] | closed | false | null | 3 | 2020-12-21T15:23:23Z | 2020-12-22T17:17:00Z | 2020-12-22T17:16:09Z | null | When visiting https://huggingface.co/datasets, I don't see an obvious way to filter only English datasets. This is unexpected for me, am I missing something? I'd expect English to be selectable in the language widget. This problem reproduced on Mozilla Firefox and MS Edge:

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1618/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1618/timeline | null | completed | null | null | false | [

"cc'ing @mapmeld ",

"Full language list is now deployed to https://huggingface.co/datasets ! Recommend close",

"Cool @mapmeld ! My 2 cents (for a next iteration), it would be cool to have a small search widget in the filter dropdown as you have a ton of languages now here! Closing this in the meantime."

] |

https://api.github.com/repos/huggingface/datasets/issues/3516 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3516/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3516/comments | https://api.github.com/repos/huggingface/datasets/issues/3516/events | https://github.com/huggingface/datasets/pull/3516 | 1,092,657,738 | PR_kwDODunzps4weYhE | 3,516 | dataset `asset` - change to raw.githubusercontent.com URLs | [] | closed | false | null | 0 | 2022-01-03T16:43:57Z | 2022-01-03T17:39:02Z | 2022-01-03T17:39:01Z | null | Changed the URLs to the ones the old URLs were automatically redirecting to.

Previously, the download was failing. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3516/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3516/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3516.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3516",

"merged_at": "2022-01-03T17:39:01Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3516.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3516"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/5366 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5366/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5366/comments | https://api.github.com/repos/huggingface/datasets/issues/5366/events | https://github.com/huggingface/datasets/pull/5366 | 1,498,530,851 | PR_kwDODunzps5FjSFl | 5,366 | ExamplesIterable fixes | [] | closed | false | null | 1 | 2022-12-15T14:23:05Z | 2022-12-15T14:44:47Z | 2022-12-15T14:41:45Z | null | fix typing and ExamplesIterable.shard_data_sources | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5366/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5366/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/5366.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5366",

"merged_at": "2022-12-15T14:41:45Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5366.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5366"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._"

] |

https://api.github.com/repos/huggingface/datasets/issues/3244 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3244/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3244/comments | https://api.github.com/repos/huggingface/datasets/issues/3244/events | https://github.com/huggingface/datasets/pull/3244 | 1,048,675,741 | PR_kwDODunzps4uSgG5 | 3,244 | Fix filter method for batched=True | [] | closed | false | null | 0 | 2021-11-09T14:30:59Z | 2021-11-09T15:52:58Z | 2021-11-09T15:52:57Z | null | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3244/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3244/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3244.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3244",

"merged_at": "2021-11-09T15:52:57Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3244.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3244"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/2765 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2765/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2765/comments | https://api.github.com/repos/huggingface/datasets/issues/2765/events | https://github.com/huggingface/datasets/issues/2765 | 962,861,395 | MDU6SXNzdWU5NjI4NjEzOTU= | 2,765 | BERTScore Error | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 1 | 2021-08-06T15:58:57Z | 2021-08-09T11:16:25Z | 2021-08-09T11:16:25Z | null | ## Describe the bug

A clear and concise description of what the bug is.

## Steps to reproduce the bug

```python

from datasets import load_metric

predictions = ["hello there", "general kenobi"]

references = ["hello there", "general kenobi"]

bert = load_metric('bertscore')

bert.compute(predictions=predictions, references=references, lang='en')

```

# Bug

`TypeError: get_hash() missing 1 required positional argument: 'use_fast_tokenizer'`

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version:

- Platform: Colab

- Python version:

- PyArrow version:

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2765/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2765/timeline | null | completed | null | null | false | [

"Hi,\r\n\r\nThe `use_fast_tokenizer` argument has been recently added to the bert-score lib. I've opened a PR with the fix. In the meantime, you can try to downgrade the version of bert-score with the following command to make the code work:\r\n```\r\npip uninstall bert-score\r\npip install \"bert-score<0.3.10\"\r\n```"

] |
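The `TypeError` reported above is what Python raises when a caller was written against an older signature and the callee has since gained a required positional argument. The sketch below reproduces that mechanism and shows one defensive way to call such a helper. It is an illustration only: `get_hash_v1`, `get_hash_v2`, `call_get_hash`, and `reproduce_error` are hypothetical stand-ins, not the actual bert-score functions or the fix that was merged.

```python
import inspect


def get_hash_v1(model, num_layers, idf):
    """Hypothetical stand-in for a helper with the older signature."""
    return f"{model}_L{num_layers}_idf={idf}"


def get_hash_v2(model, num_layers, idf, use_fast_tokenizer):
    """Hypothetical stand-in for the same helper after a new required argument was added."""
    return f"{model}_L{num_layers}_idf={idf}_fast={use_fast_tokenizer}"


def call_get_hash(get_hash, model, num_layers, idf, use_fast_tokenizer=False):
    """Pass use_fast_tokenizer only when the given helper accepts it."""
    kwargs = {"model": model, "num_layers": num_layers, "idf": idf}
    if "use_fast_tokenizer" in inspect.signature(get_hash).parameters:
        kwargs["use_fast_tokenizer"] = use_fast_tokenizer
    return get_hash(**kwargs)


def reproduce_error():
    """Call the newer helper with the old argument list and return the error message."""
    try:
        get_hash_v2("roberta-large", 17, False)
    except TypeError as err:
        return str(err)
    return None
```

Inspecting the signature lets a single caller work with either version of the helper; pinning the dependency, as suggested in the comment above, is the simpler short-term workaround.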

https://api.github.com/repos/huggingface/datasets/issues/2955 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2955/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2955/comments | https://api.github.com/repos/huggingface/datasets/issues/2955/events | https://github.com/huggingface/datasets/pull/2955 | 1,003,999,469 | PR_kwDODunzps4sHuRu | 2,955 | Update legacy Python image for CI tests in Linux | [] | closed | false | null | 1 | 2021-09-22T08:25:27Z | 2021-09-24T10:36:05Z | 2021-09-24T10:36:05Z | null | Instead of legacy, use next-generation convenience images, built from the ground up with CI, efficiency, and determinism in mind. Here are some of the highlights:

- Faster spin-up time - In Docker terminology, these next-gen images will generally have fewer and smaller layers. Using these new images will lead to faster image downloads when a build starts, and a higher likelihood that the image is already cached on the host.

- Improved reliability and stability - The existing legacy convenience images are rebuilt practically every day with potential changes from upstream that we cannot always test fast enough. This leads to frequent breaking changes, which is not the best environment for stable, deterministic builds. Next-gen images will only be rebuilt for security and critical bug fixes, leading to more stable and deterministic images.

More info: https://circleci.com/docs/2.0/circleci-images | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2955/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2955/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2955.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2955",

"merged_at": "2021-09-24T10:36:05Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2955.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2955"

} | true | [

"There is an exception when running `pip install .[tests]`:\r\n```\r\nProcessing /home/circleci/datasets\r\nCollecting numpy>=1.17 (from datasets==1.12.2.dev0)\r\n Downloading https://files.pythonhosted.org/packages/45/b2/6c7545bb7a38754d63048c7696804a0d947328125d81bf12beaa692c3ae3/numpy-1.19.5-cp36-cp36m-manylinux1_x86_64.whl (13.4MB)\r\n 100% |████████████████████████████████| 13.4MB 3.9MB/s eta 0:00:011\r\n\r\n...\r\n\r\nCollecting faiss-cpu (from datasets==1.12.2.dev0)\r\n Downloading https://files.pythonhosted.org/packages/87/91/bf8ea0d42733cbb04f98d3bf27808e4919ceb5ec71102e21119398a97237/faiss-cpu-1.7.1.post2.tar.gz (41kB)\r\n 100% |████████████████████████████████| 51kB 30.9MB/s ta 0:00:01\r\n Complete output from command python setup.py egg_info:\r\n Traceback (most recent call last):\r\n File \"/home/circleci/.pyenv/versions/3.6.14/lib/python3.6/site-packages/setuptools/sandbox.py\", line 154, in save_modules\r\n yield saved\r\n File \"/home/circleci/.pyenv/versions/3.6.14/lib/python3.6/site-packages/setuptools/sandbox.py\", line 195, in setup_context\r\n yield\r\n File \"/home/circleci/.pyenv/versions/3.6.14/lib/python3.6/site-packages/setuptools/sandbox.py\", line 250, in run_setup\r\n _execfile(setup_script, ns)\r\n File \"/home/circleci/.pyenv/versions/3.6.14/lib/python3.6/site-packages/setuptools/sandbox.py\", line 45, in _execfile\r\n exec(code, globals, locals)\r\n File \"/tmp/easy_install-1pop4blm/numpy-1.21.2/setup.py\", line 34, in <module>\r\n method can be invoked.\r\n RuntimeError: Python version >= 3.7 required.\r\n```\r\n\r\nApparently, `numpy-1.21.2` tries to be installed in the temporary directory `/tmp/easy_install-1pop4blm` instead of the downloaded `numpy-1.19.5` (requirement of `datasets`).\r\n\r\nThis is caused because `pip` downloads the `.tar.gz` (instead of the `.whl`) and tries to build it in a tmp dir."

] |

https://api.github.com/repos/huggingface/datasets/issues/157 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/157/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/157/comments | https://api.github.com/repos/huggingface/datasets/issues/157/events | https://github.com/huggingface/datasets/issues/157 | 620,356,542 | MDU6SXNzdWU2MjAzNTY1NDI= | 157 | nlp.load_dataset() gives "TypeError: list_() takes exactly one argument (2 given)" | [] | closed | false | null | 11 | 2020-05-18T16:46:38Z | 2020-06-05T08:08:58Z | 2020-06-05T08:08:58Z | null | I'm trying to load datasets from nlp, but there seems to be an error saying

"TypeError: list_() takes exactly one argument (2 given)"

The gist can be found here:

https://gist.github.com/saahiluppal/c4b878f330b10b9ab9762bc0776c0a6a | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/157/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/157/timeline | null | completed | null | null | false | [

"You can just run: \r\n`val = nlp.load_dataset('squad')` \r\n\r\nIf you want just the validation split, you can also do:\r\n\r\n`val = nlp.load_dataset('squad', split=\"validation\")`",

"If you want to load a local dataset, make sure you include a `./` before the folder name. ",

"This happens by just doing run all cells on colab ... I assumed the colab example is broken. ",

"Oh I see, you might have the wrong version of pyarrow installed on the colab -> could you try the following? Add these lines to the beginning of your notebook, restart the runtime and run it again:\r\n```\r\n!pip uninstall -y -qq pyarrow\r\n!pip uninstall -y -qq nlp\r\n!pip install -qq git+https://github.com/huggingface/nlp.git\r\n```",

"> Oh I see, you might have the wrong version of pyarrow installed on the colab -> could you try the following? Add these lines to the beginning of your notebook, restart the runtime and run it again:\r\n> \r\n> ```\r\n> !pip uninstall -y -qq pyarrow\r\n> !pip uninstall -y -qq nlp\r\n> !pip install -qq git+https://github.com/huggingface/nlp.git\r\n> ```\r\n\r\nTried, having the same error.",

"Can you post a link here of your colab? I'll make a copy of it and see what's wrong",

"This should be fixed in the current version of the notebook. You can try it again",

"Also see: https://github.com/huggingface/nlp/issues/222",

"I am getting this error when running this command\r\n```\r\nval = nlp.load_dataset('squad', split=\"validation\")\r\n```\r\n\r\nFileNotFoundError: [Errno 2] No such file or directory: '/root/.cache/huggingface/datasets/squad/plain_text/1.0.0/dataset_info.json'\r\n\r\nCan anybody help?",

"It seems like your download was corrupted :-/ Can you run the following command: \r\n\r\n```\r\nrm -r /root/.cache/huggingface/datasets\r\n```\r\n\r\nto delete the cache completely and rerun the download? ",

"I tried the notebook again today and it worked without barfing. 👌 "

] |

https://api.github.com/repos/huggingface/datasets/issues/4833 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4833/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4833/comments | https://api.github.com/repos/huggingface/datasets/issues/4833/events | https://github.com/huggingface/datasets/pull/4833 | 1,336,946,965 | PR_kwDODunzps49E_Nk | 4,833 | Fix missing tags in dataset cards | [] | closed | false | null | 1 | 2022-08-12T09:04:52Z | 2022-09-22T14:41:23Z | 2022-08-12T09:45:55Z | null | Fix missing tags in dataset cards:

- boolq

- break_data

- definite_pronoun_resolution

- emo

- kor_nli

- pg19

- quartz

- sciq

- squad_es

- wmt14

- wmt15

- wmt16

- wmt17

- wmt18

- wmt19

- wmt_t2t

This PR partially fixes the missing tags in dataset cards. Subsequent PRs will follow to complete this task. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4833/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4833/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4833.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4833",

"merged_at": "2022-08-12T09:45:55Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4833.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4833"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._"

] |

https://api.github.com/repos/huggingface/datasets/issues/2643 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2643/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2643/comments | https://api.github.com/repos/huggingface/datasets/issues/2643/events | https://github.com/huggingface/datasets/issues/2643 | 944,220,273 | MDU6SXNzdWU5NDQyMjAyNzM= | 2,643 | Enum used in map functions will raise a RecursionError with dill. | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | open | false | null | 4 | 2021-07-14T09:16:08Z | 2021-11-02T09:51:11Z | null | null | ## Describe the bug

Enums used in functions passed to `map` will fail at pickling with a maximum recursion exception, as described here: https://github.com/uqfoundation/dill/issues/250#issuecomment-852566284

In my particular case, I use an enum to define an argument with fixed options, using the `TrainingArguments` dataclass as base class and the `HfArgumentParser`. In the same file I use a `ds.map`, which tries to pickle the content of the module, including the definition of the enum, and runs into the dill bug described above.

## Steps to reproduce the bug

```python
from datasets import load_dataset
from enum import Enum


class A(Enum):
    a = 'a'


def main():
    a = A.a

    def f(x):
        return {} if a == a.a else x

    ds = load_dataset('cnn_dailymail', '3.0.0')['test']
    ds = ds.map(f, num_proc=15)


if __name__ == "__main__":
    main()
```

## Expected results

The known problem with dill could be prevented as explained in the link above (see the workaround there). Since `HfArgumentParser` conveniently uses the enum class for choices, it makes sense to also handle this bug under the hood.
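
Until that is handled, a possible user-side stopgap, sketched here under the assumption that the mapped function only needs the enum's plain value, is to copy that value out before defining the function, so the closure holds a `str` and not an `Enum` member. `make_map_fn` is a hypothetical helper name, not part of `datasets`, and this is not the workaround from the linked dill comment:

```python
from enum import Enum


class A(Enum):
    a = 'a'


def make_map_fn(choice: A):
    # Hypothetical helper (not part of `datasets`): copy the member's plain
    # value out of the enum so the closure holds a str, not an Enum instance.
    choice_value = choice.value

    def f(x):
        # Compare plain strings; the function body never references `A`.
        return {} if choice_value == 'a' else x

    return f


f = make_map_fn(A.a)

# Inspect what the closure actually captured.
captured = [cell.cell_contents for cell in f.__closure__]
```

The resulting `f` could then be passed to `ds.map(f, num_proc=15)` as in the reproduction above. Whether this fully avoids the dill recursion in a given setup is an assumption and has not been verified against `datasets` 1.8.0.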

## Actual results

```python

File "/home/xxxx/miniconda3/lib/python3.8/site-packages/dill/_dill.py", line 1373, in save_type

pickler.save_reduce(_create_type, (type(obj), obj.__name__,

File "/home/xxxx/miniconda3/lib/python3.8/pickle.py", line 690, in save_reduce

save(args)

File "/home/xxxx/miniconda3/lib/python3.8/pickle.py", line 558, in save

f(self, obj) # Call unbound method with explicit self

File "/home/xxxx/miniconda3/lib/python3.8/pickle.py", line 899, in save_tuple

save(element)

File "/home/xxxx/miniconda3/lib/python3.8/pickle.py", line 534, in save

self.framer.commit_frame()

File "/home/xxxx/miniconda3/lib/python3.8/pickle.py", line 220, in commit_frame

if f.tell() >= self._FRAME_SIZE_TARGET or force:

RecursionError: maximum recursion depth exceeded while calling a Python object

```

## Environment info

- `datasets` version: 1.8.0

- Platform: Linux-5.9.0-4-amd64-x86_64-with-glibc2.10

- Python version: 3.8.5

- PyArrow version: 3.0.0

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2643/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2643/timeline | null | null | null | null | false | [

"I'm running into this as well. (Thank you so much for reporting @jorgeecardona — was staring at this massive stack trace and unsure what exactly was wrong!)",