[VLDB' 25] ChatTS-14B Model

[VLDB' 25] ChatTS: Aligning Time Series with LLMs via Synthetic Data for Enhanced Understanding and Reasoning

ChatTS focuses on understanding and reasoning about time series, much as vision, video, and audio MLLMs do for their modalities.

This repo provides the code, datasets, and model for ChatTS: Aligning Time Series with LLMs via Synthetic Data for Enhanced Understanding and Reasoning.

ChatTS natively supports multivariate time series of any length and value range. With ChatTS, you can easily understand and reason about both the shape features and the value features of a time series.
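The processor call in the usage example takes a list of series, which suggests that a multivariate query uses one `<ts><ts/>` placeholder per series, paired with the `timeseries` list in the same order. The sketch below assumes that pairing (check the README.md in the ChatTS repository to confirm it); `build_multivariate_prompt` and `TS_PLACEHOLDER` are hypothetical names, not part of the ChatTS API. It only builds the prompt text, so it runs without the model.

```python
import math
from typing import Sequence

# Placeholder string taken from the ChatTS usage example.
TS_PLACEHOLDER = "<ts><ts/>"


def build_multivariate_prompt(
    names: Sequence[str], series: Sequence[Sequence[float]], question: str
) -> str:
    """Hypothetical helper: one placeholder per variable, in list order.

    Assumes the processor matches the i-th placeholder to series[i].
    """
    if len(names) != len(series):
        raise ValueError("need exactly one name per time series")
    # Describe each variable with its own length, so mixed lengths are explicit.
    parts = [
        f"{name} is a time series of length {len(values)}: {TS_PLACEHOLDER}."
        for name, values in zip(names, series)
    ]
    return f"I have {len(series)} time series. " + " ".join(parts) + " " + question


# Usage: two variables with different lengths and very different value ranges.
cpu = [math.sin(i / 10) * 5.0 for i in range(256)]
mem = [1000.0 + 2.5 * i for i in range(128)]
prompt = build_multivariate_prompt(["cpu", "mem"], [cpu, mem], "Are their changes related?")
print(prompt)
```

Under the same assumption, the resulting prompt would be wrapped in the chat template and passed to the processor together with both series (as NumPy arrays, like in the usage example), in the same order as the placeholders.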

ChatTS can also be integrated into existing LLM pipelines for further time series applications, using existing inference frameworks such as vLLM.

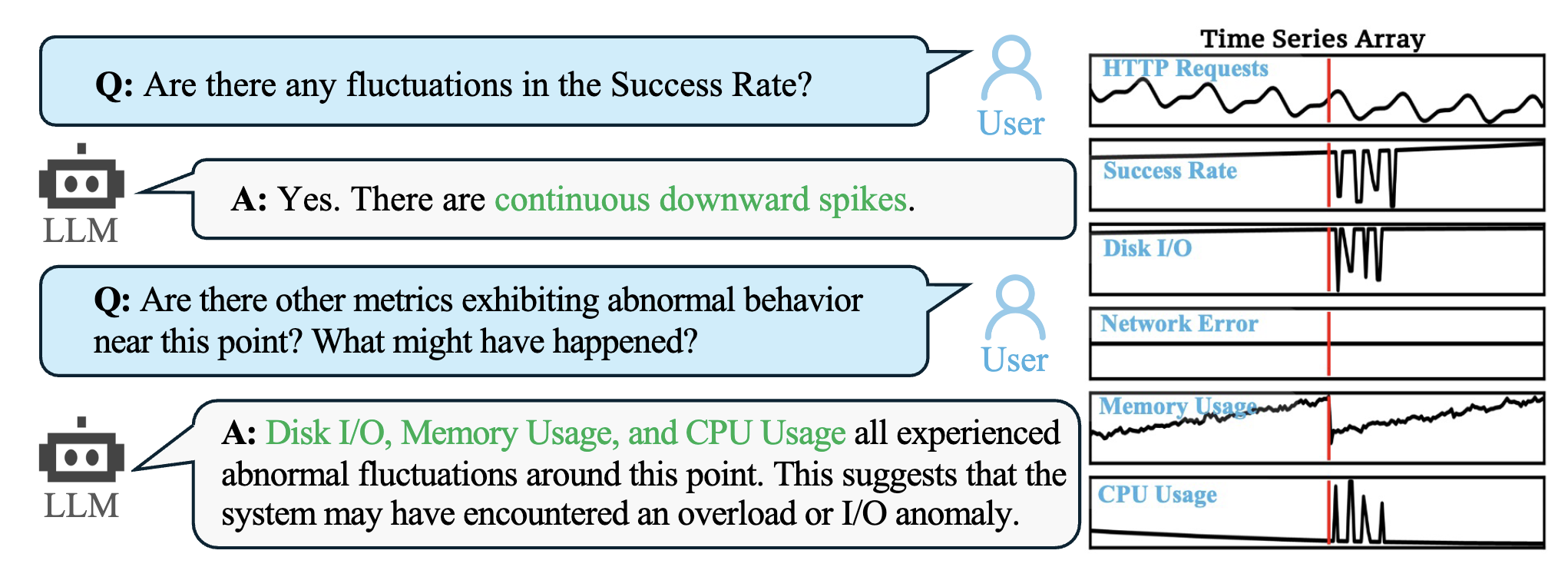

Here is an example of a ChatTS application, which allows users to interact with a LLM to understand and reason about time series data:

Usage

- This model is fine-tuned from the Qwen2.5-14B-Instruct (https://huggingface.co/Qwen/Qwen2.5-14B-Instruct) model. For more usage details, please refer to the README.md in the ChatTS repository.
- An example of using ChatTS with HuggingFace Transformers:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer, AutoProcessor
import torch
import numpy as np

# Load the model, tokenizer and processor ("./ckpt" is the local directory of the ChatTS-14B checkpoint)
model = AutoModelForCausalLM.from_pretrained("./ckpt", trust_remote_code=True, device_map="auto", torch_dtype=torch.float16)
tokenizer = AutoTokenizer.from_pretrained("./ckpt", trust_remote_code=True)
processor = AutoProcessor.from_pretrained("./ckpt", trust_remote_code=True, tokenizer=tokenizer)

# Create a time series (a sine wave with a downward level shift at index 100) and a prompt;
# <ts><ts/> marks where the time series goes in the prompt
timeseries = np.sin(np.arange(256) / 10) * 5.0
timeseries[100:] -= 10.0
prompt = "I have a time series length of 256: <ts><ts/>. Please analyze the local changes in this time series."

# Apply the chat template
prompt = f"<|im_start|>system\nYou are a helpful assistant.<|im_end|><|im_start|>user\n{prompt}<|im_end|><|im_start|>assistant\n"

# Convert to tensors and move them to the model's device
inputs = processor(text=[prompt], timeseries=[timeseries], padding=True, return_tensors="pt")
inputs = {k: v.to(model.device) for k, v in inputs.items()}

# Generate, then decode only the newly generated tokens (skipping the prompt tokens)
outputs = model.generate(**inputs, max_new_tokens=300)
print(tokenizer.decode(outputs[0][len(inputs['input_ids'][0]):], skip_special_tokens=True))
```
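The usage example builds the chat prompt by hand with ChatML-style markers (`<|im_start|>`, `<|im_end|>`), the format used by Qwen2.5 models. A small helper can make that wrapping reusable for other questions. The sketch below, with the hypothetical name `to_chatml`, reproduces the exact string from the example; it deliberately mirrors the example rather than the tokenizer's built-in chat template, which may format turns differently (for instance with newlines after `<|im_end|>` or a different default system prompt).

```python
SYSTEM_PROMPT = "You are a helpful assistant."


def to_chatml(user_message: str, system_message: str = SYSTEM_PROMPT) -> str:
    """Hypothetical helper: wrap one user turn exactly like the usage example.

    The trailing assistant header leaves the assistant turn open, so the
    model's generation continues from that point.
    """
    return (
        f"<|im_start|>system\n{system_message}<|im_end|>"
        f"<|im_start|>user\n{user_message}<|im_end|>"
        "<|im_start|>assistant\n"
    )


question = "I have a time series length of 256: <ts><ts/>. Please analyze the local changes in this time series."
print(to_chatml(question))
```

The returned string can be passed as `text=[...]` to the processor call in the usage example in place of the inline f-string.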

Reference

- Qwen2.5-14B-Instruct (https://huggingface.co/Qwen/Qwen2.5-14B-Instruct)

- transformers (https://github.com/huggingface/transformers.git)

- ChatTS paper (https://arxiv.org/abs/2412.03104)

License

This model is licensed under the Apache License 2.0.

Cite

```bibtex
@article{xie2024chatts,
  title={ChatTS: Aligning Time Series with LLMs via Synthetic Data for Enhanced Understanding and Reasoning},
  author={Xie, Zhe and Li, Zeyan and He, Xiao and Xu, Longlong and Wen, Xidao and Zhang, Tieying and Chen, Jianjun and Shi, Rui and Pei, Dan},
  journal={arXiv preprint arXiv:2412.03104},
  year={2024}
}
```
