Commit

·

93b4c8a

1

Parent(s):

8953ca0

Improved efficiency of review page navigation, especially for large documents. Updated user guide

Browse files- .dockerignore +3 -1

- .gitignore +3 -1

- README.md +224 -56

- app.py +6 -6

- tools/config.py +2 -2

- tools/file_conversion.py +605 -285

- tools/file_redaction.py +5 -30

- tools/redaction_review.py +518 -249

.dockerignore

CHANGED

|

@@ -17,4 +17,6 @@ dist/*

|

|

| 17 |

build_deps/*

|

| 18 |

logs/*

|

| 19 |

config/*

|

| 20 |

-

user_guide/*

|

|

|

|

|

|

|

|

|

| 17 |

build_deps/*

|

| 18 |

logs/*

|

| 19 |

config/*

|

| 20 |

+

user_guide/*

|

| 21 |

+

cdk/*

|

| 22 |

+

web/*

|

.gitignore

CHANGED

|

@@ -18,4 +18,6 @@ build_deps/*

|

|

| 18 |

logs/*

|

| 19 |

config/*

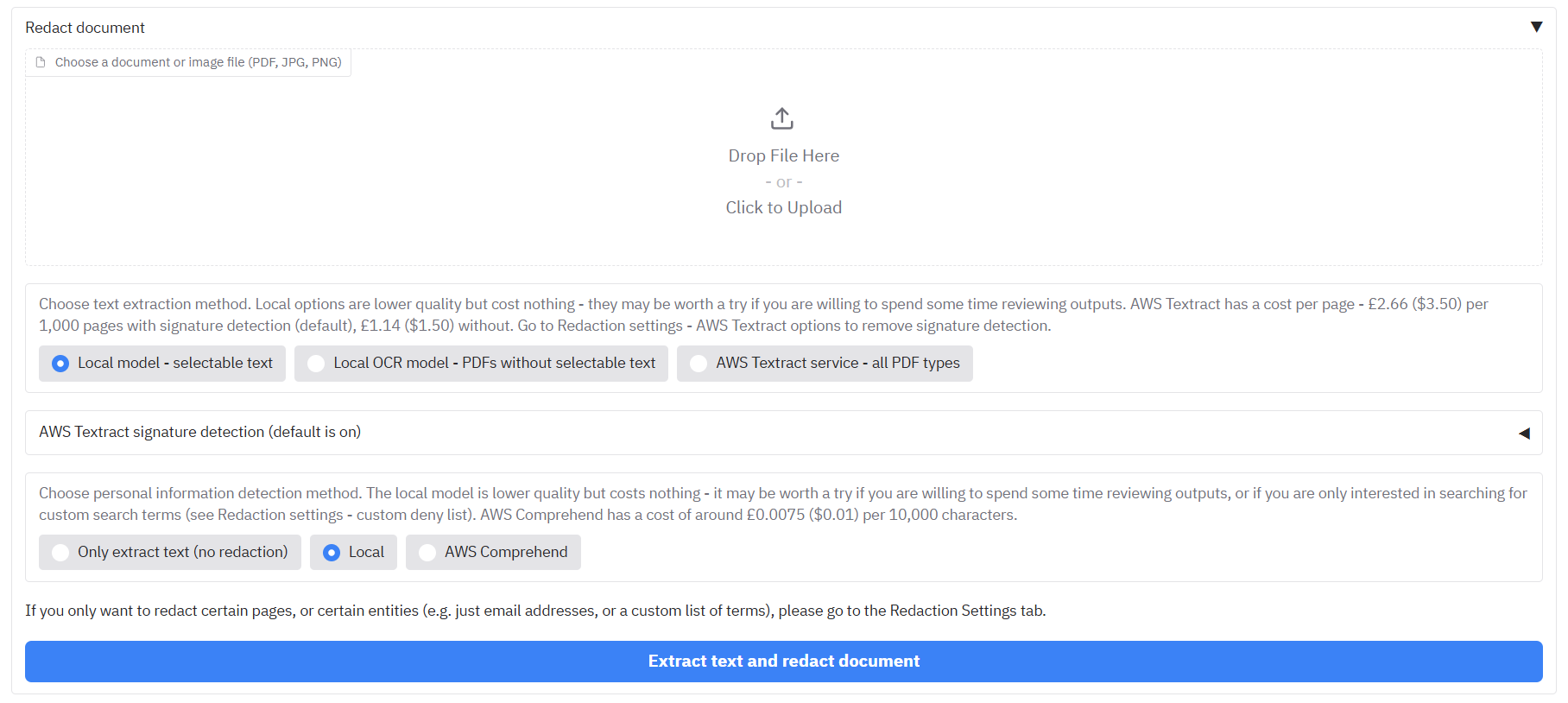

|

| 20 |

doc_redaction_amplify_app/*

|

| 21 |

-

user_guide/*

|

|

|

|

|

|

|

|

|

| 18 |

logs/*

|

| 19 |

config/*

|

| 20 |

doc_redaction_amplify_app/*

|

| 21 |

+

user_guide/*

|

| 22 |

+

cdk/*

|

| 23 |

+

web/*

|

README.md

CHANGED

|

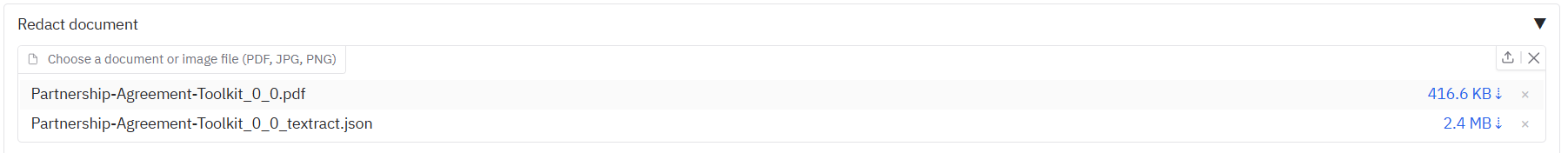

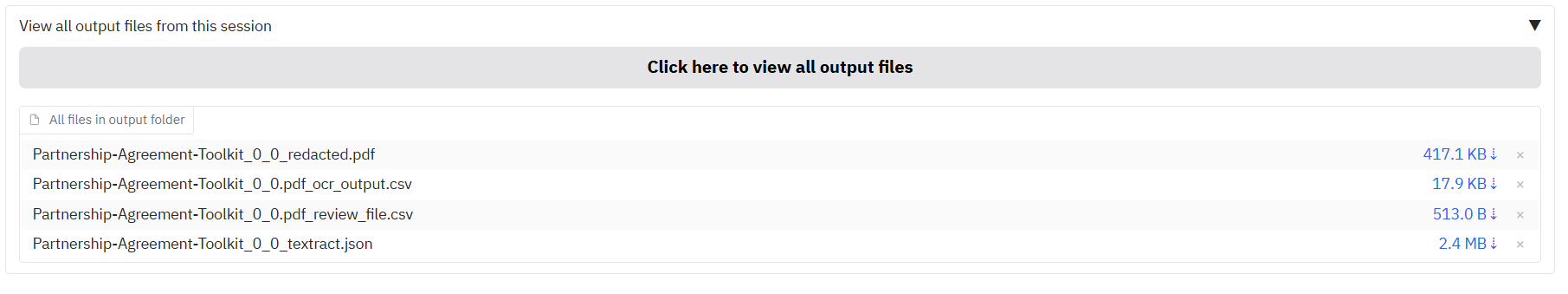

@@ -20,6 +20,12 @@ NOTE: The app is not 100% accurate, and it will miss some personal information.

|

|

| 20 |

|

| 21 |

# USER GUIDE

|

| 22 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 23 |

## Table of contents

|

| 24 |

|

| 25 |

- [Example data files](#example-data-files)

|

|

@@ -35,57 +41,97 @@ NOTE: The app is not 100% accurate, and it will miss some personal information.

|

|

| 35 |

- [Reviewing and modifying suggested redactions](#reviewing-and-modifying-suggested-redactions)

|

| 36 |

|

| 37 |

See the [advanced user guide here](#advanced-user-guide):

|

| 38 |

-

- [

|

| 39 |

-

- [Modifying existing redaction review files](#modifying-existing-redaction-review-files)

|

| 40 |

-

- [Merging existing redaction review files](#merging-existing-redaction-review-files)

|

| 41 |

- [Identifying and redacting duplicate pages](#identifying-and-redacting-duplicate-pages)

|

| 42 |

- [Fuzzy search and redaction](#fuzzy-search-and-redaction)

|

| 43 |

- [Export redactions to and import from Adobe Acrobat](#export-to-and-import-from-adobe)

|

| 44 |

- [Exporting to Adobe Acrobat](#exporting-to-adobe-acrobat)

|

| 45 |

- [Importing from Adobe Acrobat](#importing-from-adobe-acrobat)

|

|

|

|

| 46 |

- [Using AWS Textract and Comprehend when not running in an AWS environment](#using-aws-textract-and-comprehend-when-not-running-in-an-aws-environment)

|

|

|

|

| 47 |

|

| 48 |

## Example data files

|

| 49 |

|

| 50 |

-

Please

|

| 51 |

- [Example of files sent to a professor before applying](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/example_of_emails_sent_to_a_professor_before_applying.pdf)

|

| 52 |

- [Example complaint letter (jpg)](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/example_complaint_letter.jpg)

|

| 53 |

- [Partnership Agreement Toolkit (for signatures and more advanced usage)](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/Partnership-Agreement-Toolkit_0_0.pdf)

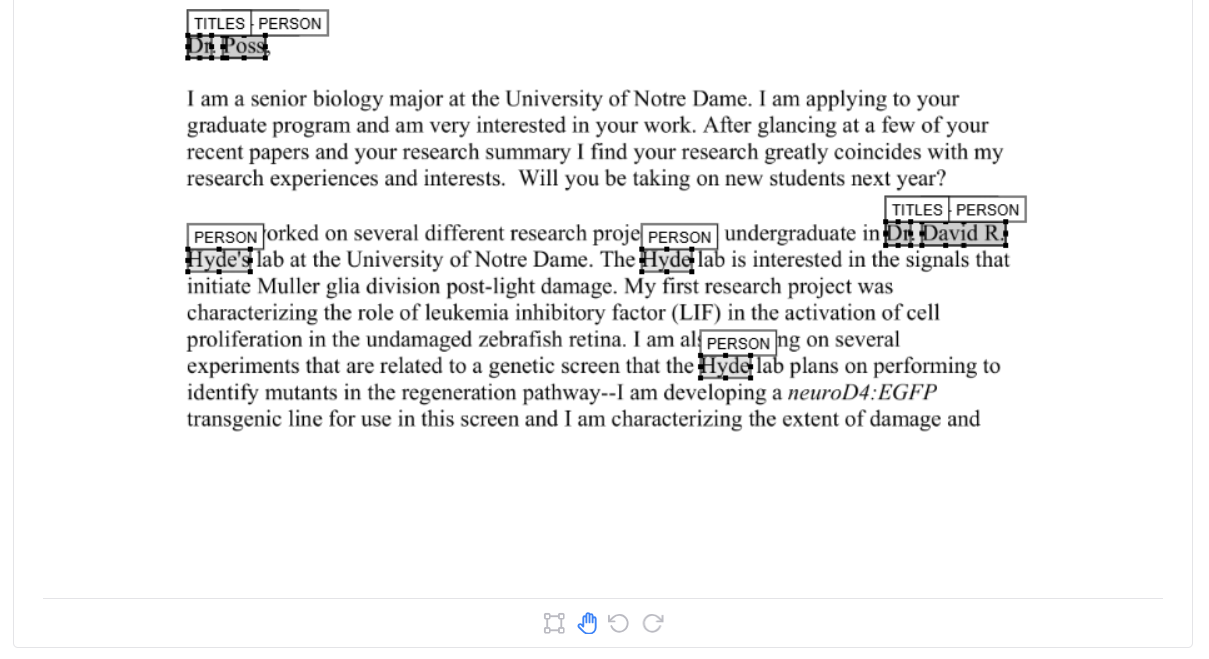

|

|

|

|

| 54 |

|

| 55 |

## Basic redaction

|

| 56 |

|

| 57 |

-

The document redaction app can detect personally-identifiable information (PII) in documents. Documents can be redacted directly, or suggested redactions can be reviewed and modified using a grapical user interface.

|

| 58 |

|

| 59 |

Download the example PDFs above to your computer. Open up the redaction app with the link provided by email.

|

| 60 |

|

| 61 |

|

| 62 |

|

| 63 |

-

|

|

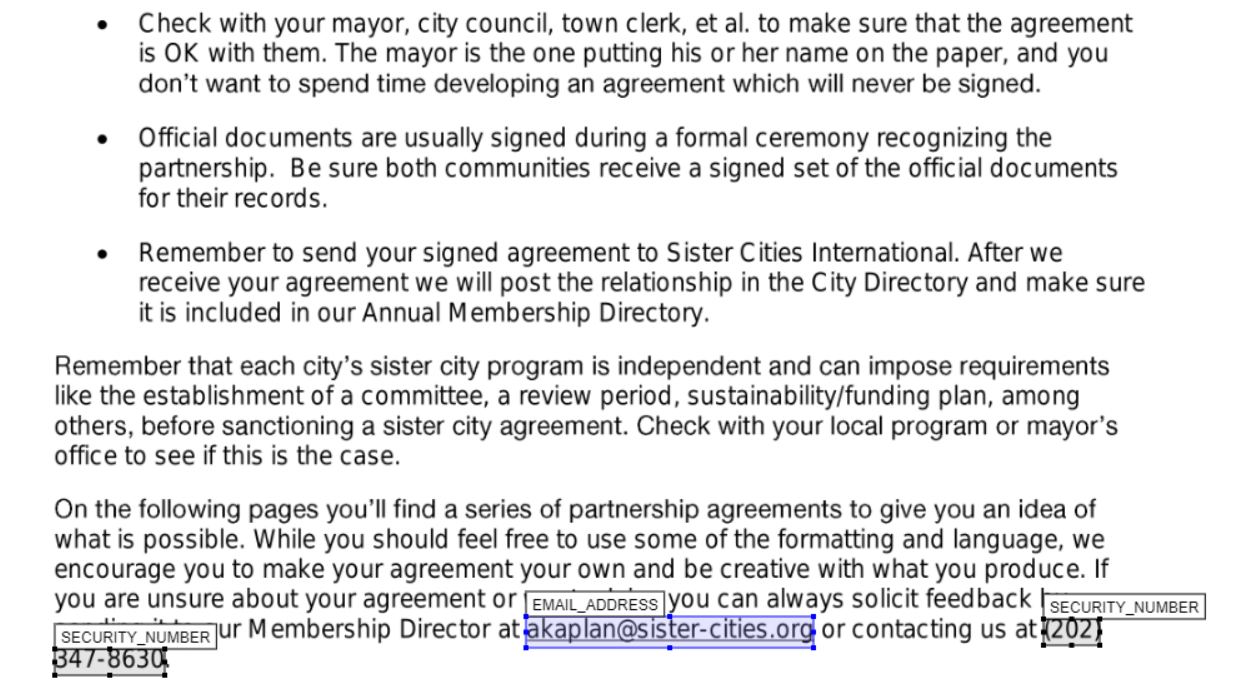

|

|

|

|

|

|

|

|

|

|

|

| 64 |

|

| 65 |

-

First, select one of the three text extraction options

|

| 66 |

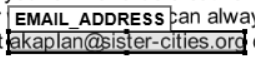

-

- 'Local model - selectable text' - This will read text directly from PDFs that have selectable text to redact (using PikePDF). This is fine for most PDFs, but will find nothing if the PDF does not have selectable text, and it is not good for handwriting or signatures. If it encounters an image file, it will send it onto the second option below.

|

| 67 |

-

- 'Local OCR model - PDFs without selectable text' - This option will use a simple Optical Character Recognition (OCR) model (Tesseract) to pull out text from a PDF/image that it 'sees'. This can handle most typed text in PDFs/images without selectable text, but struggles with handwriting/signatures. If you are interested in the latter, then you should use the third option if available.

|

| 68 |

-

- 'AWS Textract service - all PDF types' - Only available for instances of the app running on AWS. AWS Textract is a service that performs OCR on documents within their secure service. This is a more advanced version of OCR compared to the local option, and carries a (relatively small) cost. Textract excels in complex documents based on images, or documents that contain a lot of handwriting and signatures.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 69 |

|

| 70 |

If you are running with the AWS service enabled, here you will also have a choice for PII redaction method:

|

| 71 |

-

- '

|

| 72 |

-

- '

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 73 |

|

| 74 |

-

|

|

|

|

|

|

|

| 75 |

|

| 76 |

|

| 77 |

|

| 78 |

-

- '...redacted.pdf' files contain the original pdf with suggested redacted text deleted and replaced by a black box on top of the document.

|

| 79 |

-

- '...ocr_results.csv' files contain the line-by-line text outputs from the entire document. This file can be useful for later searching through for any terms of interest in the document (e.g. using Excel or a similar program).

|

| 80 |

-

- '...review_file.csv' files are the review files that contain details and locations of all of the suggested redactions in the document. This file is key to the [review process](#reviewing-and-modifying-suggested-redactions), and should be downloaded to use later for this.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 81 |

|

| 82 |

-

|

| 83 |

|

| 84 |

-

|

| 85 |

|

| 86 |

-

|

| 87 |

-

|

| 88 |

-

|

| 89 |

|

| 90 |

We have covered redacting documents with the default redaction options. The '...redacted.pdf' file output may be enough for your purposes. But it is very likely that you will need to customise your redaction options, which we will cover below.

|

| 91 |

|

|

@@ -126,6 +172,16 @@ There may be full pages in a document that you want to redact. The app also prov

|

|

| 126 |

|

| 127 |

Using the above approaches to allow, deny, and full page redaction lists will give you an output [like this](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/allow_list/Partnership-Agreement-Toolkit_0_0_redacted.pdf).

|

| 128 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

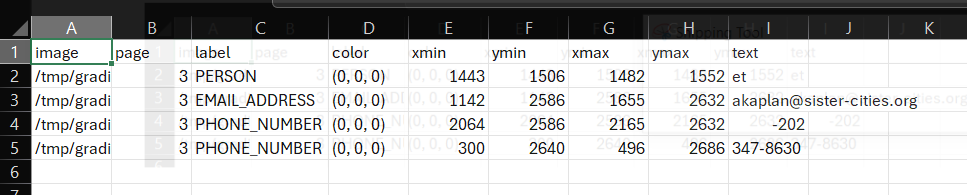

|

|

|

|

|

|

|

| 129 |

### Redacting additional types of personal information

|

| 130 |

|

| 131 |

You may want to redact additional types of information beyond the defaults, or you may not be interested in default suggested entity types. There are dates in the example complaint letter. Say we wanted to redact those dates also?

|

|

@@ -146,7 +202,9 @@ Say also we are only interested in redacting page 1 of the loaded documents. On

|

|

| 146 |

|

| 147 |

## Handwriting and signature redaction

|

| 148 |

|

| 149 |

-

The file [Partnership Agreement Toolkit (for signatures and more advanced usage)](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/Partnership-Agreement-Toolkit_0_0.pdf) is provided as an example document to test AWS Textract + redaction with a document that has signatures in. If you have access to AWS Textract in the app, try removing all entity types from redaction on the Redaction settings and clicking the big X to the right of 'Entities to redact'.

|

|

|

|

|

|

|

| 150 |

|

| 151 |

|

| 152 |

|

|

@@ -156,72 +214,135 @@ The outputs should show handwriting/signatures redacted (see pages 5 - 7), which

|

|

| 156 |

|

| 157 |

## Reviewing and modifying suggested redactions

|

| 158 |

|

| 159 |

-

|

|

|

|

|

|

|

| 160 |

|

| 161 |

-

On

|

| 162 |

|

| 163 |

|

| 164 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 165 |

You can change the page viewed either by clicking 'Previous page' or 'Next page', or by typing a specific page number in the 'Current page' box and pressing Enter on your keyboard. Each time you switch page, it will save redactions you have made on the page you are moving from, so you will not lose changes you have made.

|

| 166 |

|

| 167 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 168 |

|

| 169 |

-

|

| 170 |

|

| 171 |

|

| 172 |

|

| 173 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 174 |

|

| 175 |

-

|

| 176 |

|

| 177 |

-

|

| 178 |

|

| 179 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 180 |

|

| 181 |

|

| 182 |

|

| 183 |

-

|

| 184 |

|

| 185 |

-

|

| 186 |

|

| 187 |

-

|

| 188 |

|

| 189 |

-

|

| 190 |

|

| 191 |

-

|

| 192 |

-

- [Modifying existing redaction review files](#modifying-existing-redaction-review-files)

|

| 193 |

-

- [Merging existing redaction review files](#merging-existing-redaction-review-files)

|

| 194 |

-

- [Identifying and redacting duplicate pages](#identifying-and-redacting-duplicate-pages)

|

| 195 |

-

- [Fuzzy search and redaction](#fuzzy-search-and-redaction)

|

| 196 |

-

- [Export redactions to and import from Adobe Acrobat](#export-to-and-import-from-adobe)

|

| 197 |

-

- [Exporting to Adobe Acrobat](#exporting-to-adobe-acrobat)

|

| 198 |

-

- [Importing from Adobe Acrobat](#importing-from-adobe-acrobat)

|

| 199 |

|

|

|

|

| 200 |

|

| 201 |

-

|

|

|

|

|

|

|

| 202 |

|

| 203 |

-

|

| 204 |

|

| 205 |

-

|

|

|

|

| 206 |

|

| 207 |

-

|

| 208 |

-

If you open up a 'review_file' csv output using a spreadsheet software program such as Microsoft Excel you can easily modify redaction properties. Open the file '[Partnership-Agreement-Toolkit_0_0_redacted.pdf_review_file_local.csv](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/merge_review_files/Partnership-Agreement-Toolkit_0_0.pdf_review_file_local.csv)', and you should see a spreadshet with just four suggested redactions (see below). The following instructions are for using Excel.

|

| 209 |

|

| 210 |

-

|

| 211 |

|

| 212 |

-

|

| 213 |

|

| 214 |

-

|

| 215 |

|

| 216 |

-

|

| 217 |

|

| 218 |

-

|

| 219 |

|

| 220 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 221 |

|

| 222 |

-

|

| 223 |

|

| 224 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 225 |

|

| 226 |

Say you have run multiple redaction tasks on the same document, and you want to merge all of these redactions together. You could do this in your spreadsheet editor, but this could be fiddly especially if dealing with multiple review files or large numbers of redactions. The app has a feature to combine multiple review files together to create a 'merged' review file.

|

| 227 |

|

|

@@ -303,6 +424,30 @@ When you click the 'convert .xfdf comment file to review_file.csv' button, the a

|

|

| 303 |

|

| 304 |

|

| 305 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 306 |

## Using AWS Textract and Comprehend when not running in an AWS environment

|

| 307 |

|

| 308 |

AWS Textract and Comprehend give much better results for text extraction and document redaction than the local model options in the app. The most secure way to access them in the Redaction app is to run the app in a secure AWS environment with relevant permissions. Alternatively, you could run the app on your own system while logged in to AWS SSO with relevant permissions.

|

|

@@ -322,4 +467,27 @@ AWS_SECRET_KEY= your-secret-key

|

|

| 322 |

|

| 323 |

The app should then pick up these keys when trying to access the AWS Textract and Comprehend services during redaction.

|

| 324 |

|

| 325 |

-

Again, a lot can potentially go wrong with AWS solutions that are insecure, so before trying the above please consult with your AWS and data security teams.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 20 |

|

| 21 |

# USER GUIDE

|

| 22 |

|

| 23 |

+

## Experiment with the test (public) version of the app

|

| 24 |

+

You can test out many of the features described in this user guide at the [public test version of the app](https://huggingface.co/spaces/seanpedrickcase/document_redaction), which is free. AWS functions (e.g. Textract, Comprehend) are not enabled (unless you have valid API keys).

|

| 25 |

+

|

| 26 |

+

## Chat over this user guide

|

| 27 |

+

You can now [speak with a chat bot about this user guide](https://huggingface.co/spaces/seanpedrickcase/Light-PDF-Web-QA-Chatbot) (beta!)

|

| 28 |

+

|

| 29 |

## Table of contents

|

| 30 |

|

| 31 |

- [Example data files](#example-data-files)

|

|

|

|

| 41 |

- [Reviewing and modifying suggested redactions](#reviewing-and-modifying-suggested-redactions)

|

| 42 |

|

| 43 |

See the [advanced user guide here](#advanced-user-guide):

|

| 44 |

+

- [Merging redaction review files](#merging-redaction-review-files)

|

|

|

|

|

|

|

| 45 |

- [Identifying and redacting duplicate pages](#identifying-and-redacting-duplicate-pages)

|

| 46 |

- [Fuzzy search and redaction](#fuzzy-search-and-redaction)

|

| 47 |

- [Export redactions to and import from Adobe Acrobat](#export-to-and-import-from-adobe)

|

| 48 |

- [Exporting to Adobe Acrobat](#exporting-to-adobe-acrobat)

|

| 49 |

- [Importing from Adobe Acrobat](#importing-from-adobe-acrobat)

|

| 50 |

+

- [Using the AWS Textract document API](#using-the-aws-textract-document-api)

|

| 51 |

- [Using AWS Textract and Comprehend when not running in an AWS environment](#using-aws-textract-and-comprehend-when-not-running-in-an-aws-environment)

|

| 52 |

+

- [Modifying existing redaction review files](#modifying-existing-redaction-review-files)

|

| 53 |

|

| 54 |

## Example data files

|

| 55 |

|

| 56 |

+

Please try these example files to follow along with this guide:

|

| 57 |

- [Example of files sent to a professor before applying](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/example_of_emails_sent_to_a_professor_before_applying.pdf)

|

| 58 |

- [Example complaint letter (jpg)](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/example_complaint_letter.jpg)

|

| 59 |

- [Partnership Agreement Toolkit (for signatures and more advanced usage)](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/Partnership-Agreement-Toolkit_0_0.pdf)

|

| 60 |

+

- [Dummy case note data](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/combined_case_notes.csv)

|

| 61 |

|

| 62 |

## Basic redaction

|

| 63 |

|

| 64 |

+

The document redaction app can detect personally-identifiable information (PII) in documents. Documents can be redacted directly, or suggested redactions can be reviewed and modified using a grapical user interface. Basic document redaction can be performed quickly using the default options.

|

| 65 |

|

| 66 |

Download the example PDFs above to your computer. Open up the redaction app with the link provided by email.

|

| 67 |

|

| 68 |

|

| 69 |

|

| 70 |

+

### Upload files to the app

|

| 71 |

+

|

| 72 |

+

The 'Redact PDFs/images tab' currently accepts PDFs and image files (JPG, PNG) for redaction. Click on the 'Drop files here or Click to Upload' area of the screen, and select one of the three different [example files](#example-data-files) (they should all be stored in the same folder if you want them to be redacted at the same time).

|

| 73 |

+

|

| 74 |

+

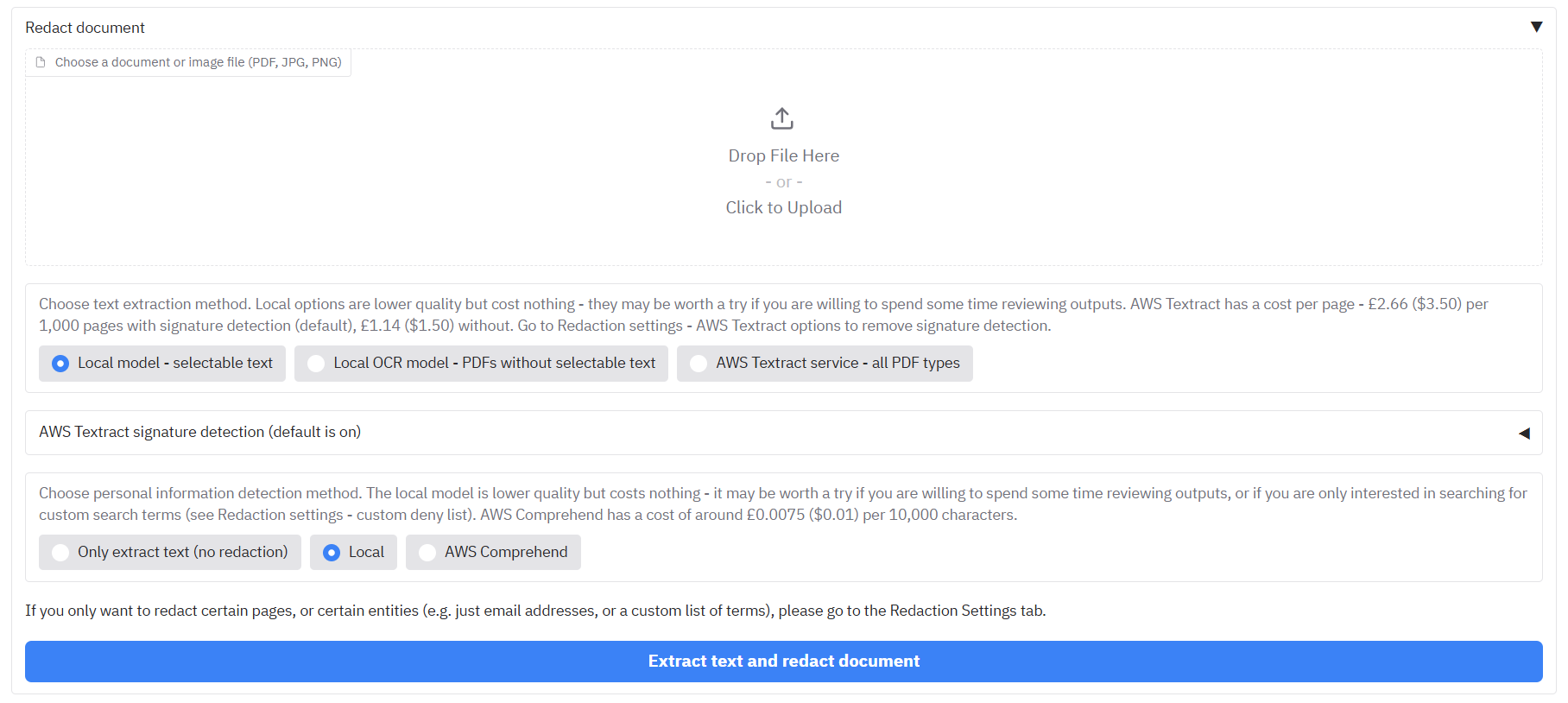

### Text extraction

|

| 75 |

|

| 76 |

+

First, select one of the three text extraction options:

|

| 77 |

+

- **'Local model - selectable text'** - This will read text directly from PDFs that have selectable text to redact (using PikePDF). This is fine for most PDFs, but will find nothing if the PDF does not have selectable text, and it is not good for handwriting or signatures. If it encounters an image file, it will send it onto the second option below.

|

| 78 |

+

- **'Local OCR model - PDFs without selectable text'** - This option will use a simple Optical Character Recognition (OCR) model (Tesseract) to pull out text from a PDF/image that it 'sees'. This can handle most typed text in PDFs/images without selectable text, but struggles with handwriting/signatures. If you are interested in the latter, then you should use the third option if available.

|

| 79 |

+

- **'AWS Textract service - all PDF types'** - Only available for instances of the app running on AWS. AWS Textract is a service that performs OCR on documents within their secure service. This is a more advanced version of OCR compared to the local option, and carries a (relatively small) cost. Textract excels in complex documents based on images, or documents that contain a lot of handwriting and signatures.

|

| 80 |

+

|

| 81 |

+

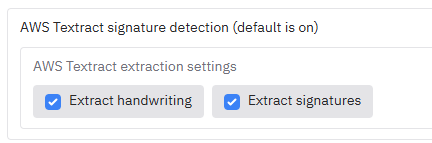

### Optional - select signature extraction

|

| 82 |

+

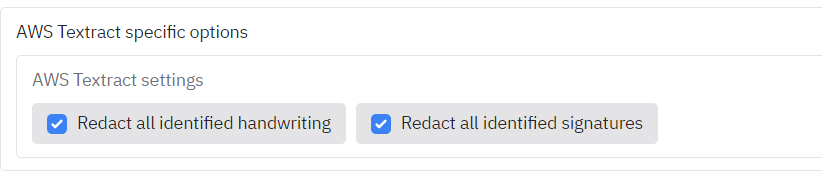

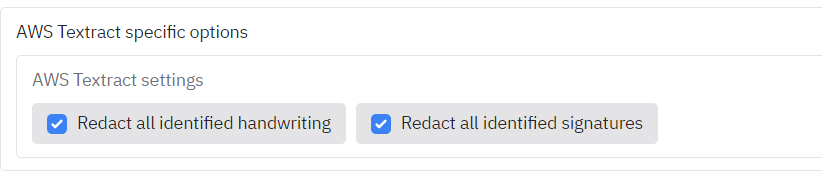

If you chose the AWS Textract service above, you can choose if you want handwriting and/or signatures redacted by default. Choosing signatures here will have a cost implication, as identifying signatures will cost ~£2.66 ($3.50) per 1,000 pages vs ~£1.14 ($1.50) per 1,000 pages without signature detection.

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

### PII redaction method

|

| 87 |

|

| 88 |

If you are running with the AWS service enabled, here you will also have a choice for PII redaction method:

|

| 89 |

+

- **'Only extract text - (no redaction)'** - If you are only interested in getting the text out of the document for further processing (e.g. to find duplicate pages, or to review text on the Review redactions page)

|

| 90 |

+

- **'Local'** - This uses the spacy package to rapidly detect PII in extracted text. This method is often sufficient if you are just interested in redacting specific terms defined in a custom list.

|

| 91 |

+

- **'AWS Comprehend'** - This method calls an AWS service to provide more accurate identification of PII in extracted text.

|

| 92 |

+

|

| 93 |

+

### Optional - costs and time estimation

|

| 94 |

+

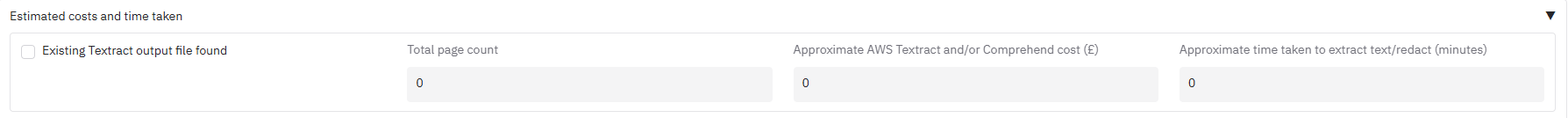

If the option is enabled (by your system admin, in the config file), you will see a cost and time estimate for the redaction process. 'Existing Textract output file found' will be checked automatically if previous Textract text extraction files exist in the output folder, or have been [previously uploaded by the user](#aws-textract-outputs) (saving time and money for redaction).

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

### Optional - cost code selection

|

| 99 |

+

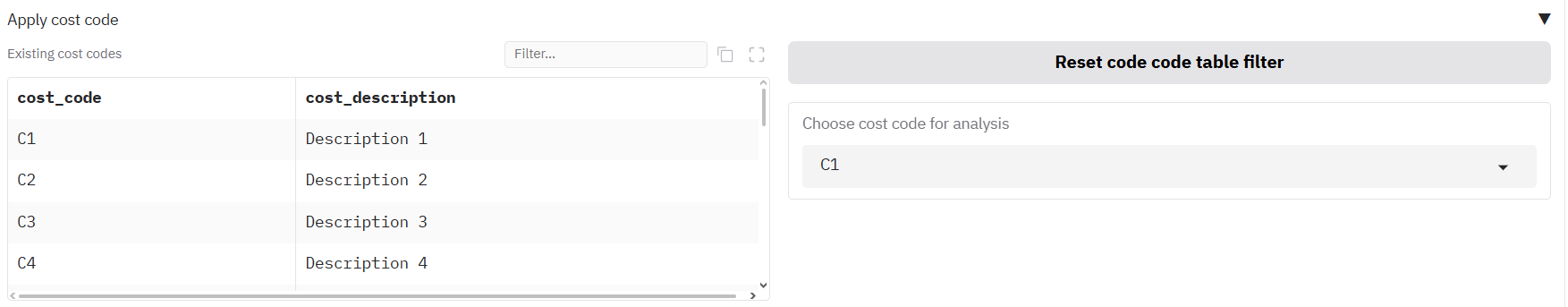

If the option is enabled (by your system admin, in the config file), you may be prompted to select a cost code before continuing with the redaction task.

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

The relevant cost code can be found either by: 1. Using the search bar above the data table to find relevant cost codes, then clicking on the relevant row, or 2. typing it directly into the dropdown to the right, where it should filter as you type.

|

| 104 |

+

|

| 105 |

+

### Optional - Submit whole documents to Textract API

|

| 106 |

+

If this option is enabled (by your system admin, in the config file), you will have the option to submit whole documents in quick succession to the AWS Textract service to get extracted text outputs quickly (faster than using the 'Redact document' process described here). This feature is described in more detail in the [advanced user guide](#using-the-aws-textract-document-api).

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

### Redact the document

|

| 111 |

|

| 112 |

+

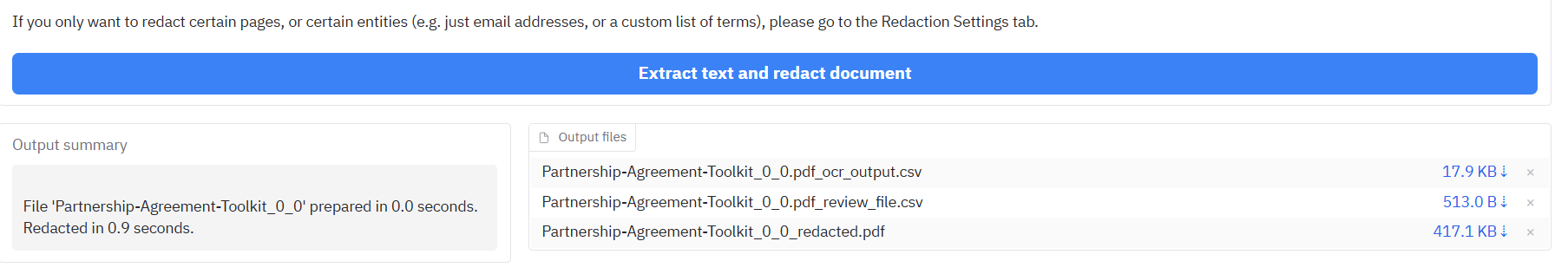

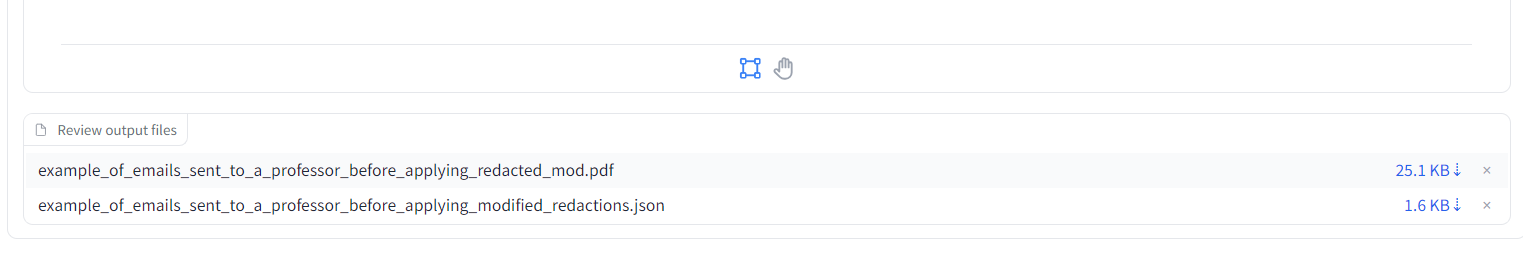

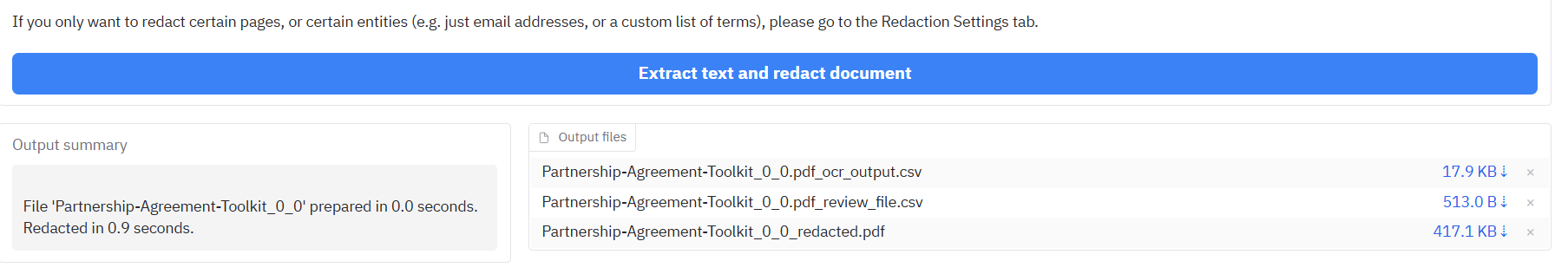

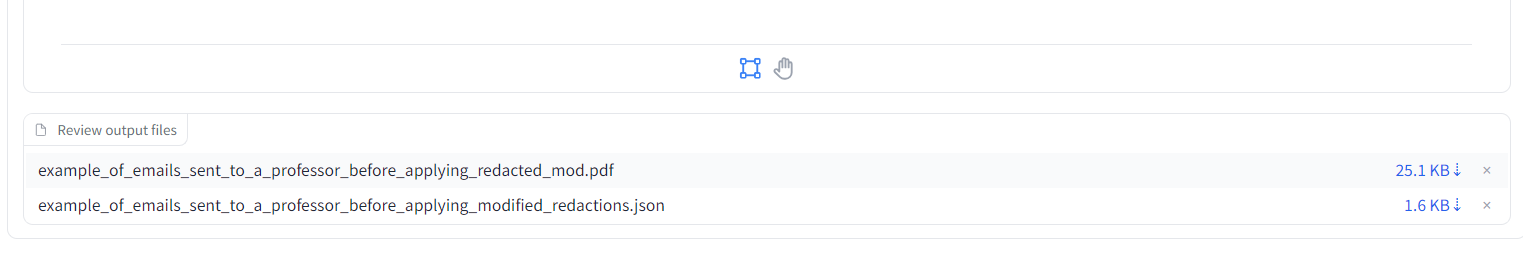

Click 'Redact document'. After loading in the document, the app should be able to process about 30 pages per minute (depending on redaction methods chose above). When ready, you should see a message saying that processing is complete, with output files appearing in the bottom right.

|

| 113 |

+

|

| 114 |

+

### Redaction outputs

|

| 115 |

|

| 116 |

|

| 117 |

|

| 118 |

+

- **'...redacted.pdf'** files contain the original pdf with suggested redacted text deleted and replaced by a black box on top of the document.

|

| 119 |

+

- **'...ocr_results.csv'** files contain the line-by-line text outputs from the entire document. This file can be useful for later searching through for any terms of interest in the document (e.g. using Excel or a similar program).

|

| 120 |

+

- **'...review_file.csv'** files are the review files that contain details and locations of all of the suggested redactions in the document. This file is key to the [review process](#reviewing-and-modifying-suggested-redactions), and should be downloaded to use later for this.

|

| 121 |

+

|

| 122 |

+

### Additional AWS Textract outputs

|

| 123 |

+

|

| 124 |

+

If you have used the AWS Textract option for extracting text, you may also see a '..._textract.json' file. This file contains all the relevant extracted text information that comes from the AWS Textract service. You can keep this file and upload it at a later date alongside your input document, which will enable you to skip calling AWS Textract every single time you want to do a redaction task, as follows:

|

| 125 |

+

|

| 126 |

+

|

| 127 |

|

| 128 |

+

### Downloading output files from previous redaction tasks

|

| 129 |

|

| 130 |

+

If you are logged in via AWS Cognito and you lose your app page for some reason (e.g. from a crash, reloading), it is possible recover your previous output files, provided the server has not been shut down since you redacted the document. Go to 'Redaction settings', then scroll to the bottom to see 'View all output files from this session'.

|

| 131 |

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

### Basic redaction summary

|

| 135 |

|

| 136 |

We have covered redacting documents with the default redaction options. The '...redacted.pdf' file output may be enough for your purposes. But it is very likely that you will need to customise your redaction options, which we will cover below.

|

| 137 |

|

|

|

|

| 172 |

|

| 173 |

Using the above approaches to allow, deny, and full page redaction lists will give you an output [like this](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/allow_list/Partnership-Agreement-Toolkit_0_0_redacted.pdf).

|

| 174 |

|

| 175 |

+

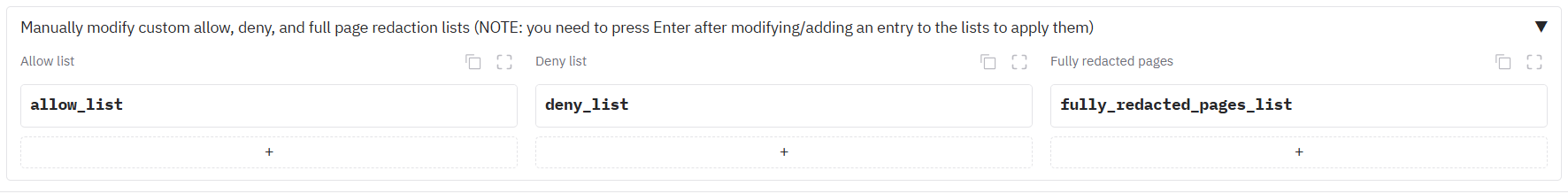

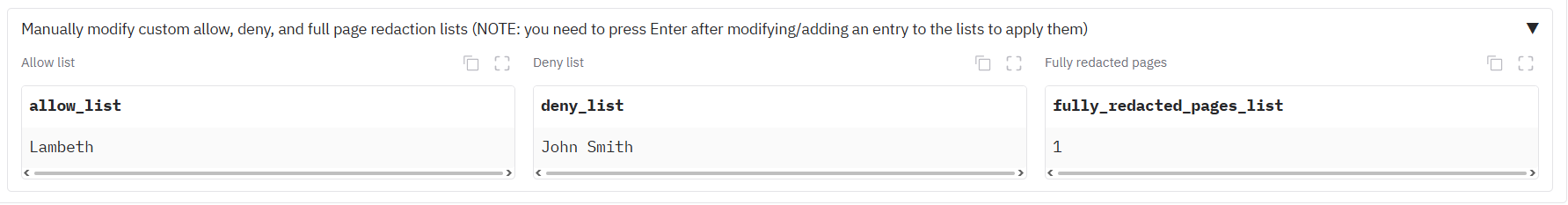

#### Adding to the loaded allow, deny, and whole page lists in-app

|

| 176 |

+

|

| 177 |

+

If you open the accordion below the allow list options called 'Manually modify custom allow...', you should be able to see a few tables with options to add new rows:

|

| 178 |

+

|

| 179 |

+

|

| 180 |

+

|

| 181 |

+

If the table is empty, you can add a new entry, you can add a new row by clicking on the '+' item below each table header. If there is existing data, you may need to click on the three dots to the right and select 'Add row below'. Type the item you wish to keep/remove in the cell, and then (important) press enter to add this new item to the allow/deny/whole page list. Your output tables should look something like below.

|

| 182 |

+

|

| 183 |

+

|

| 184 |

+

|

| 185 |

### Redacting additional types of personal information

|

| 186 |

|

| 187 |

You may want to redact additional types of information beyond the defaults, or you may not be interested in default suggested entity types. There are dates in the example complaint letter. Say we wanted to redact those dates also?

|

|

|

|

| 202 |

|

| 203 |

## Handwriting and signature redaction

|

| 204 |

|

| 205 |

+

The file [Partnership Agreement Toolkit (for signatures and more advanced usage)](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/Partnership-Agreement-Toolkit_0_0.pdf) is provided as an example document to test AWS Textract + redaction with a document that has signatures in. If you have access to AWS Textract in the app, try removing all entity types from redaction on the Redaction settings and clicking the big X to the right of 'Entities to redact'.

|

| 206 |

+

|

| 207 |

+

To ensure that handwriting and signatures are enabled (enabled by default), on the front screen go the 'AWS Textract signature detection' to enable/disable the following options :

|

| 208 |

|

| 209 |

|

| 210 |

|

|

|

|

| 214 |

|

| 215 |

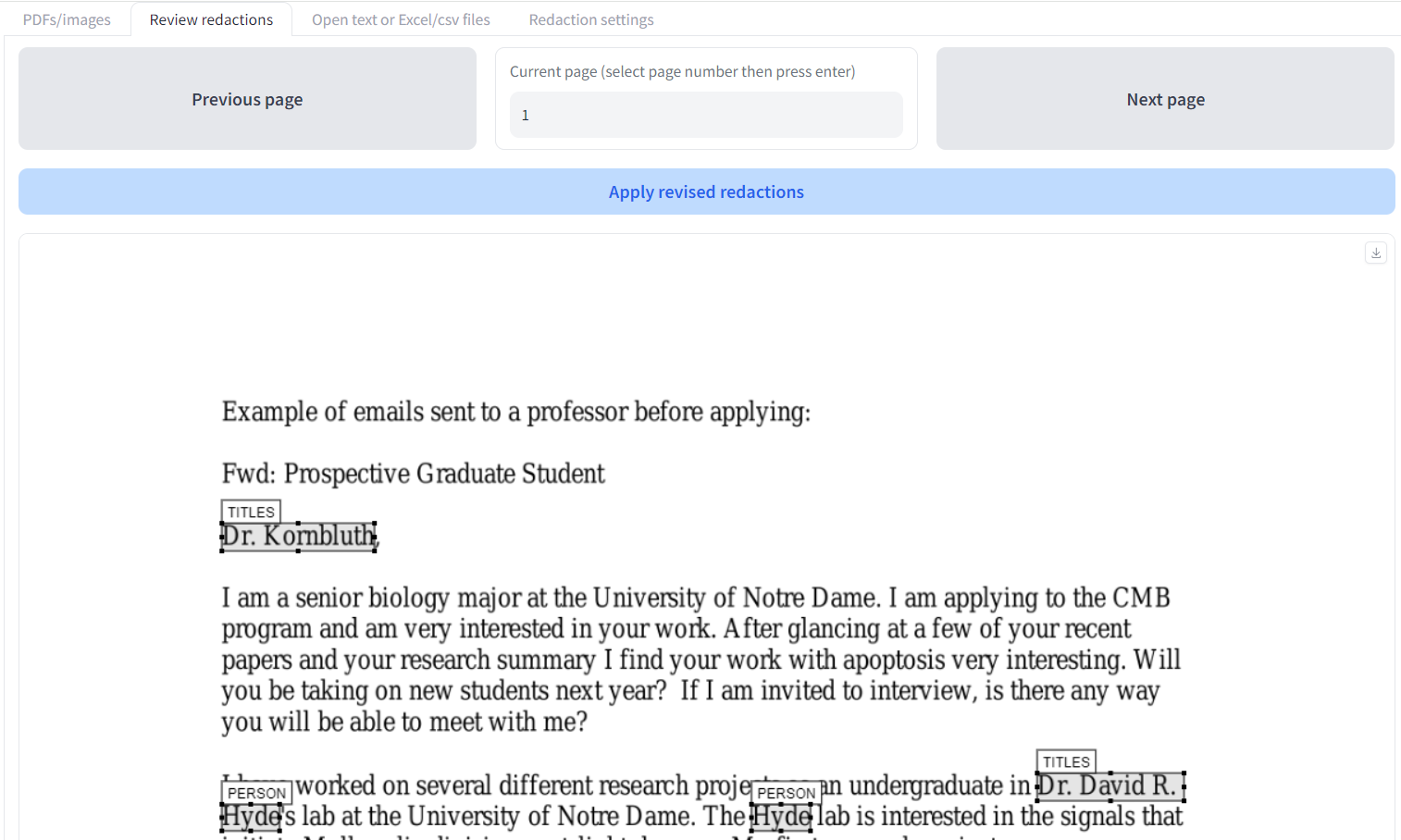

## Reviewing and modifying suggested redactions

|

| 216 |

|

| 217 |

+

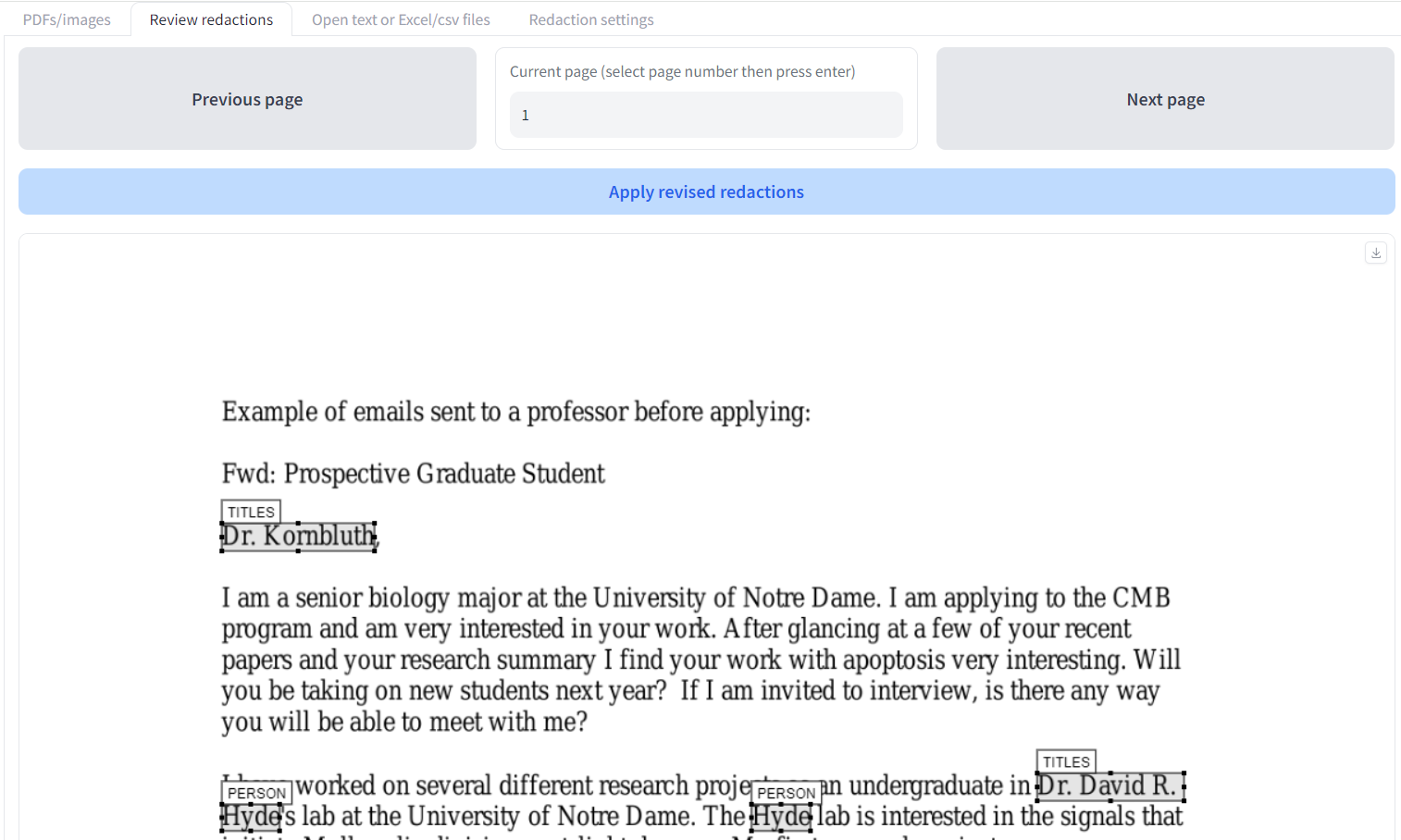

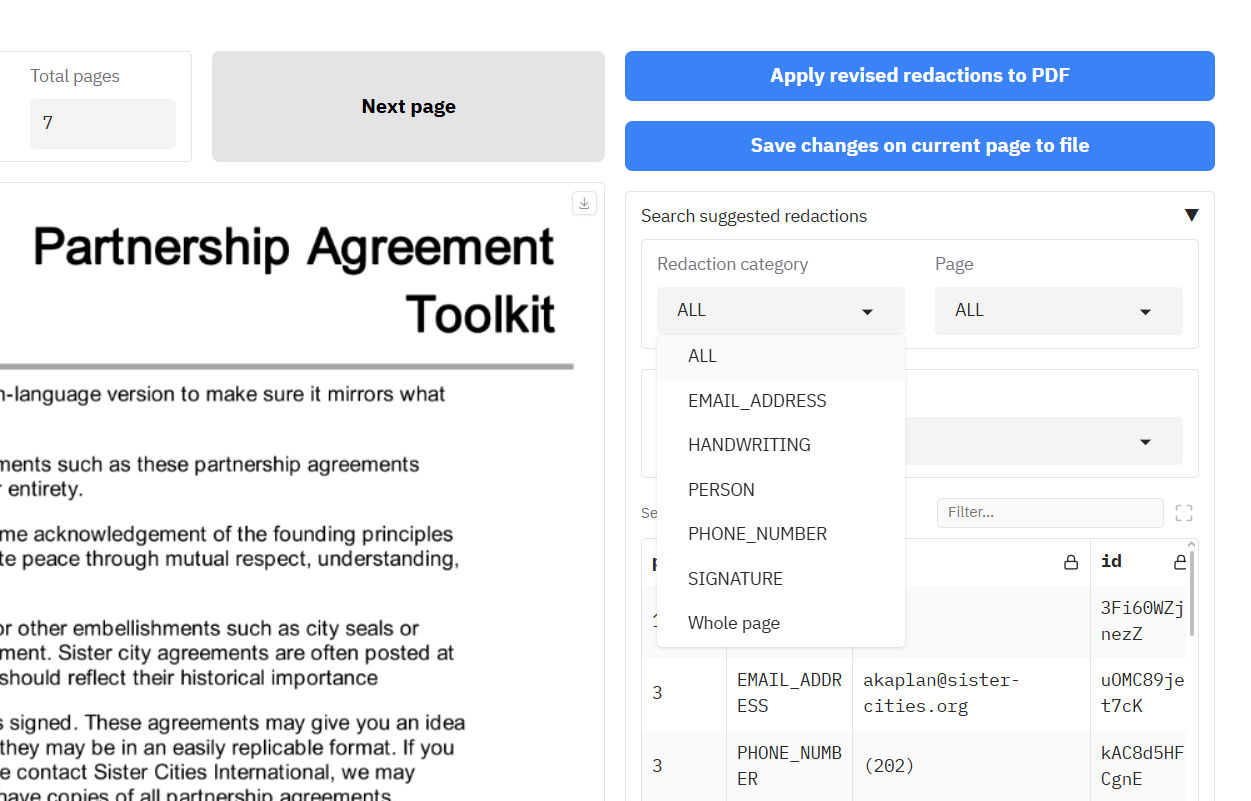

Sometimes the app will suggest redactions that are incorrect, or will miss personal information entirely. The app allows you to review and modify suggested redactions to compensate for this. You can do this on the 'Review redactions' tab.

|

| 218 |

+

|

| 219 |

+

We will go through ways to review suggested redactions with an example.On the first tab 'PDFs/images' upload the ['Example of files sent to a professor before applying.pdf'](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/example_of_emails_sent_to_a_professor_before_applying.pdf) file. Let's stick with the 'Local model - selectable text' option, and click 'Redact document'. Once the outputs are created, go to the 'Review redactions' tab.

|

| 220 |

|

| 221 |

+

On the 'Review redactions' tab you have a visual interface that allows you to inspect and modify redactions suggested by the app. There are quite a few options to look at, so we'll go from top to bottom.

|

| 222 |

|

| 223 |

|

| 224 |

|

| 225 |

+

### Uploading documents for review

|

| 226 |

+

|

| 227 |

+

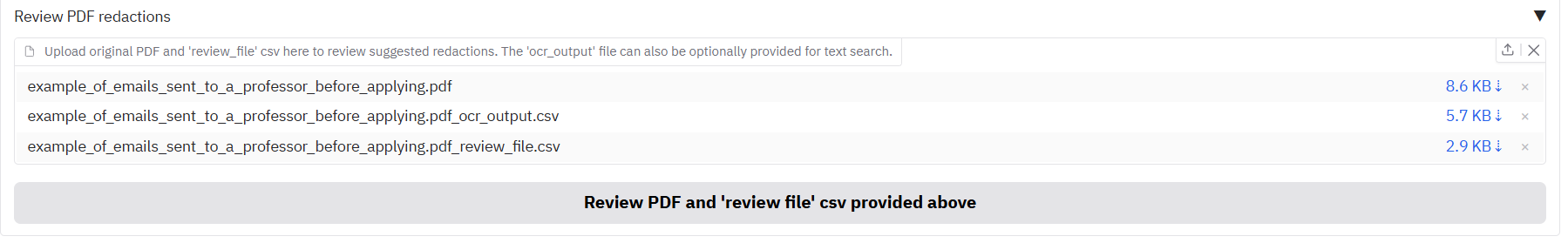

The top area has a file upload area where you can upload original, unredacted PDFs, alongside the '..._review_file.csv' that is produced by the redaction process. Once you have uploaded these two files, click the 'Review PDF...' button to load in the files for review. This will allow you to visualise and modify the suggested redactions using the interface below.

|

| 228 |

+

|

| 229 |

+

Optionally, you can also upload one of the '..._ocr_output.csv' files here that comes out of a redaction task, so that you can navigate the extracted text from the document.

|

| 230 |

+

|

| 231 |

+

|

| 232 |

+

|

| 233 |

+

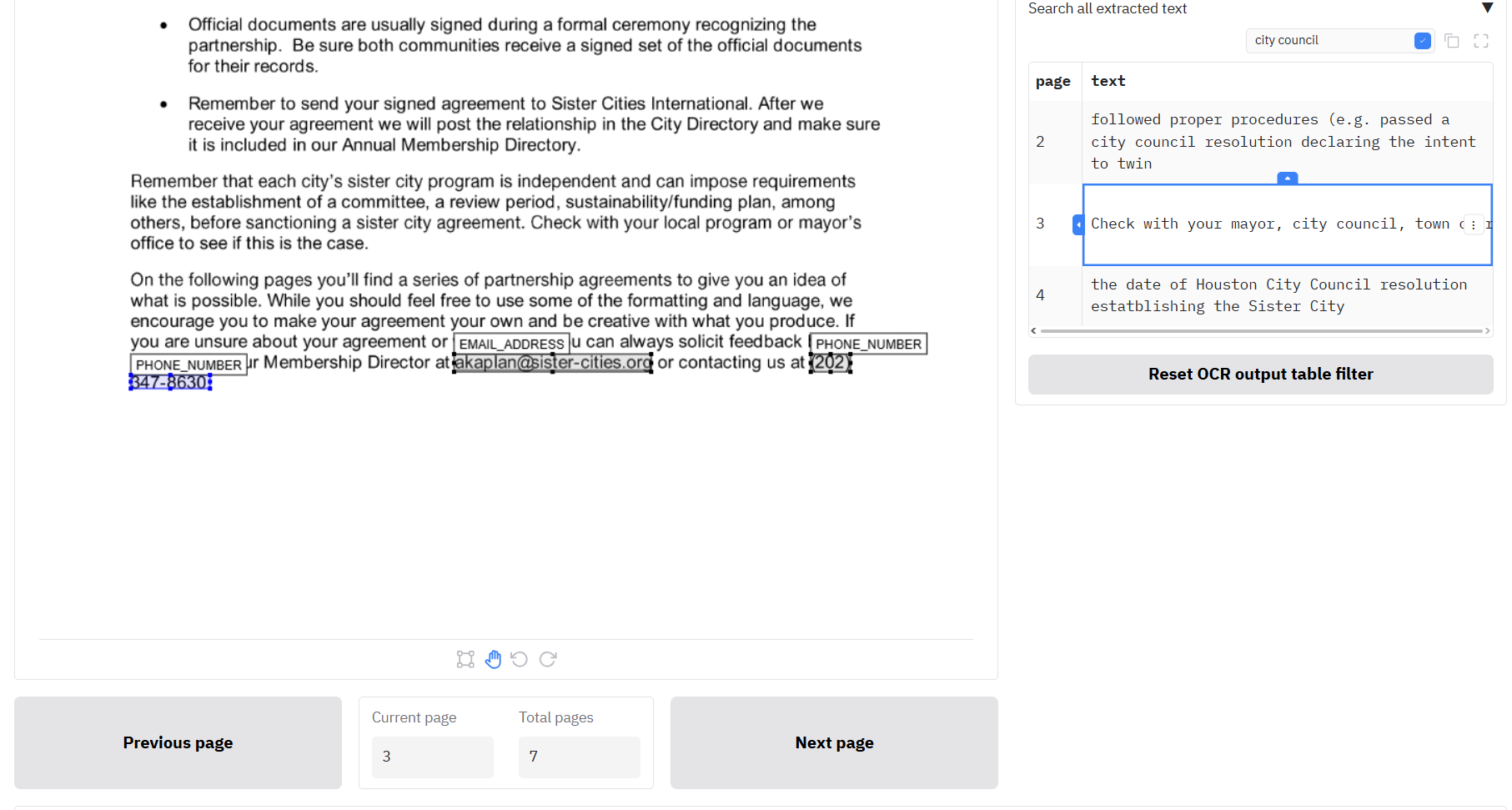

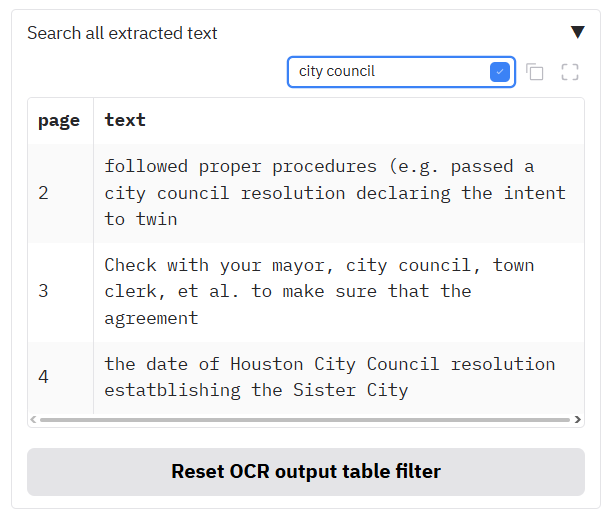

You can upload the three review files in the box (unredacted document, '..._review_file.csv' and '..._ocr_output.csv' file) before clicking 'Review PDF...', as in the image below:

|

| 234 |

+

|

| 235 |

+

|

| 236 |

+

|

| 237 |

+

**NOTE:** ensure you upload the ***unredacted*** document here and not the redacted version, otherwise you will be checking over a document that already has redaction boxes applied!

|

| 238 |

+

|

| 239 |

+

### Page navigation

|

| 240 |

+

|

| 241 |

You can change the page viewed either by clicking 'Previous page' or 'Next page', or by typing a specific page number in the 'Current page' box and pressing Enter on your keyboard. Each time you switch page, it will save redactions you have made on the page you are moving from, so you will not lose changes you have made.

|

| 242 |

|

| 243 |

+

You can also navigate to different pages by clicking on rows in the tables under 'Search suggested redactions' to the right, or 'search all extracted text' (if enabled) beneath that.

|

| 244 |

+

|

| 245 |

+

### The document viewer pane

|

| 246 |

+

|

| 247 |

+

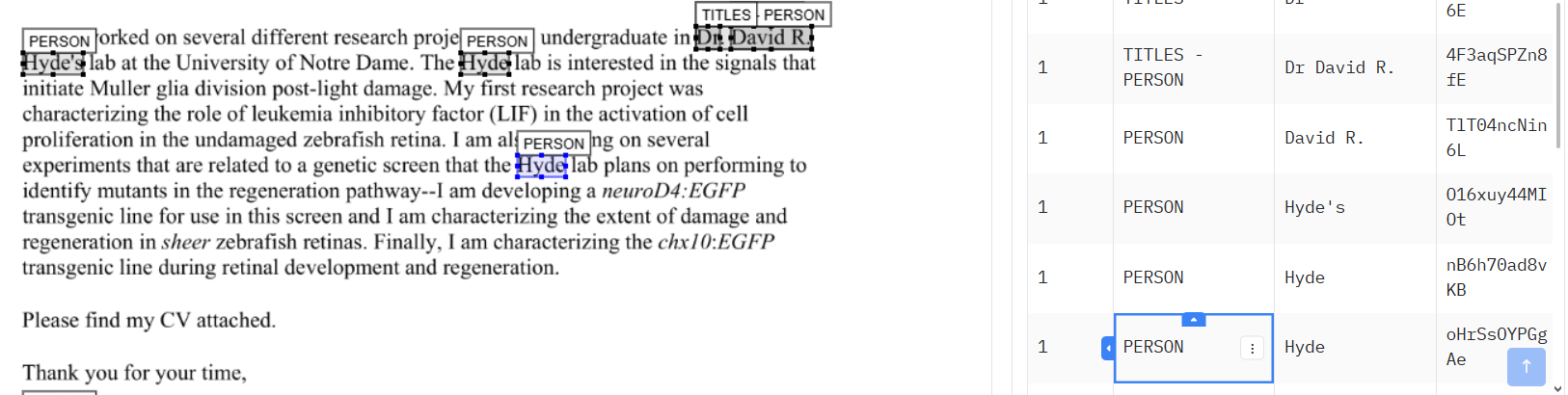

On the selected page, each redaction is highlighted with a box next to its suggested redaction label (e.g. person, email).

|

| 248 |

+

|

| 249 |

+

|

| 250 |

|

| 251 |

+

There are a number of different options to add and modify redaction boxes and page on the document viewer pane. To zoom in and out of the page, use your mouse wheel. To move around the page while zoomed, you need to be in modify mode. Scroll to the bottom of the document viewer to see the relevant controls. You should see a box icon, a hand icon, and two arrows pointing counter-clockwise and clockwise.

|

| 252 |

|

| 253 |

|

| 254 |

|

| 255 |

+

Click on the hand icon to go into modify mode. When you click and hold on the document viewer, This will allow you to move around the page when zoomed in. To rotate the page, you can click on either of the round arrow buttons to turn in that direction.

|

| 256 |

+

|

| 257 |

+

**NOTE:** When you switch page, the viewer will stay in your selected orientation, so if it looks strange, just rotate the page again and hopefully it will look correct!

|

| 258 |

+

|

| 259 |

+

#### Modify existing redactions (hand icon)

|

| 260 |

+

|

| 261 |

+

After clicking on the hand icon, the interface allows you to modify existing redaction boxes. When in this mode, you can click and hold on an existing box to move it.

|

| 262 |

+

|

| 263 |

+

|

| 264 |

+

|

| 265 |

+

Click on one of the small boxes at the edges to change the size of the box. To delete a box, click on it to highlight it, then press delete on your keyboard. Alternatively, double click on a box and click 'Remove' on the box that appears.

|

| 266 |

+

|

| 267 |

+

|

| 268 |

+

|

| 269 |

+

#### Add new redaction boxes (box icon)

|

| 270 |

|

| 271 |

+

To change to 'add redaction boxes' mode, scroll to the bottom of the page. Click on the box icon, and your cursor will change into a crosshair. Now you can add new redaction boxes where you wish. A popup will appear when you create a new box so you can select a label and colour for the new box.

|

| 272 |

|

| 273 |

+

#### 'Locking in' new redaction box format

|

| 274 |

|

| 275 |

+

It is possible to lock in a chosen format for new redaction boxes so that you don't have the popup appearing each time. When you make a new box, select the options for your 'locked' format, and then click on the lock icon on the left side of the popup, which should turn blue.

|

| 276 |

+

|

| 277 |

+

|

| 278 |

+

|

| 279 |

+

You can now add new redaction boxes without a popup appearing. If you want to change or 'unlock' the your chosen box format, you can click on the new icon that has appeared at the bottom of the document viewer pane that looks a little like a gift tag. You can then change the defaults, or click on the lock icon again to 'unlock' the new box format - then popups will appear again each time you create a new box.

|

| 280 |

+

|

| 281 |

+

|

| 282 |

+

|

| 283 |

+

### Apply redactions to PDF and Save changes on current page

|

| 284 |

+

|

| 285 |

+

Once you have reviewed all the redactions in your document and you are happy with the outputs, you can click 'Apply revised redactions to PDF' to create a new '_redacted.pdf' output alongside a new '_review_file.csv' output.

|

| 286 |

+

|

| 287 |

+

If you are working on a page and haven't saved for a while, you can click 'Save changes on current page to file' to ensure that they are saved to an updated 'review_file.csv' output.

|

| 288 |

|

| 289 |

|

| 290 |

|

| 291 |

+

### Selecting and removing redaction boxes using the 'Search suggested redactions' table

|

| 292 |

|

| 293 |

+

The table shows a list of all the suggested redactions in the document alongside the page, label, and text (if available).

|

| 294 |

|

| 295 |

+

|

| 296 |

|

| 297 |

+

If you click on one of the rows in this table, you will be taken to the page of the redaction. Clicking on a redaction row on the same page *should* change the colour of redaction box to blue to help you locate it in the document viewer (just in app, not in redaction output PDFs).

|

| 298 |

|

| 299 |

+

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 300 |

|

| 301 |

+

You can choose a specific entity type to see which pages the entity is present on. If you want to go to the page specified in the table, you can click on a cell in the table and the review page will be changed to that page.

|

| 302 |

|

| 303 |

+

To filter the 'Search suggested redactions' table you can:

|

| 304 |

+

1. Click on one of the dropdowns (Redaction category, Page, Text), and select an option, or

|

| 305 |

+

2. Write text in the 'Filter' box just above the table. Click the blue box to apply the filter to the table.

|

| 306 |

|

| 307 |

+

Once you have filtered the table, you have a few options underneath on what you can do with the filtered rows:

|

| 308 |

|

| 309 |

+

- Click the 'Exclude specific row from redactions' button to remove only the redaction from the last row you clicked on from the document.

|

| 310 |

+

- Click the 'Exclude all items in table from redactions' button to remove all redactions visible in the table from the document.

|

| 311 |

|

| 312 |

+

**NOTE**: After excluding redactions using either of the above options, click the 'Reset filters' button below to ensure that the dropdowns and table return to seeing all remaining redactions in the document.

|

|

|

|

| 313 |

|

| 314 |

+

If you made a mistake, click the 'Undo last element removal' button to restore the Search suggested redactions table to its previous state (can only undo the last action).

|

| 315 |

|

| 316 |

+

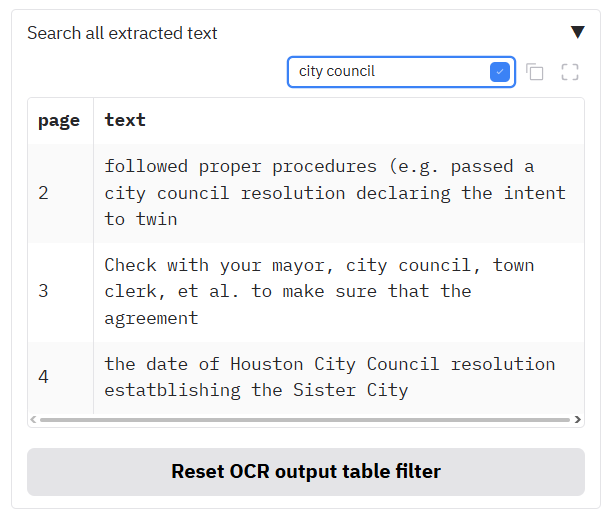

### Navigating through the document using the 'Search all extracted text'

|

| 317 |

|

| 318 |

+

The 'search all extracted text' table will contain text if you have just redacted a document, or if you have uploaded a '..._ocr_output.csv' file alongside a document file and review file on the Review redactions tab as [described above](#uploading-documents-for-review).

|

| 319 |

|

| 320 |

+

You can navigate through the document using this table. When you click on a row, the Document viewer pane to the left will change to the selected page.

|

| 321 |

|

| 322 |

+

|

| 323 |

|

| 324 |

+

You can search through the extracted text by using the search bar just above the table, which should filter as you type. To apply the filter and 'cut' the table, click on the blue tick inside the box next to your search term. To return the table to its original content, click the button below the table 'Reset OCR output table filter'.

|

| 325 |

+

|

| 326 |

+

|

| 327 |

+

|

| 328 |

+

# ADVANCED USER GUIDE

|

| 329 |

+

|

| 330 |

+

This advanced user guide will go over some of the features recently added to the app, including: modifying and merging redaction review files, identifying and redacting duplicate pages across multiple PDFs, 'fuzzy' search and redact, and exporting redactions to Adobe Acrobat.

|

| 331 |

|

| 332 |

+

## Table of contents

|

| 333 |

|

| 334 |

+

- [Merging redaction review files](#merging-redaction-review-files)

|

| 335 |

+

- [Identifying and redacting duplicate pages](#identifying-and-redacting-duplicate-pages)

|

| 336 |

+

- [Fuzzy search and redaction](#fuzzy-search-and-redaction)

|

| 337 |

+

- [Export redactions to and import from Adobe Acrobat](#export-to-and-import-from-adobe)

|

| 338 |

+

- [Exporting to Adobe Acrobat](#exporting-to-adobe-acrobat)

|

| 339 |

+

- [Importing from Adobe Acrobat](#importing-from-adobe-acrobat)

|

| 340 |

+

- [Using the AWS Textract document API](#using-the-aws-textract-document-api)

|

| 341 |

+

- [Using AWS Textract and Comprehend when not running in an AWS environment](#using-aws-textract-and-comprehend-when-not-running-in-an-aws-environment)

|

| 342 |

+

- [Modifying existing redaction review files](#modifying-existing-redaction-review-files)

|

| 343 |

+

|

| 344 |

+

|

| 345 |

+

## Merging redaction review files

|

| 346 |

|

| 347 |

Say you have run multiple redaction tasks on the same document, and you want to merge all of these redactions together. You could do this in your spreadsheet editor, but this could be fiddly especially if dealing with multiple review files or large numbers of redactions. The app has a feature to combine multiple review files together to create a 'merged' review file.

|

| 348 |

|

|

|

|

| 424 |

|

| 425 |

|

| 426 |

|

| 427 |

+

## Using the AWS Textract document API

|

| 428 |

+

|

| 429 |

+

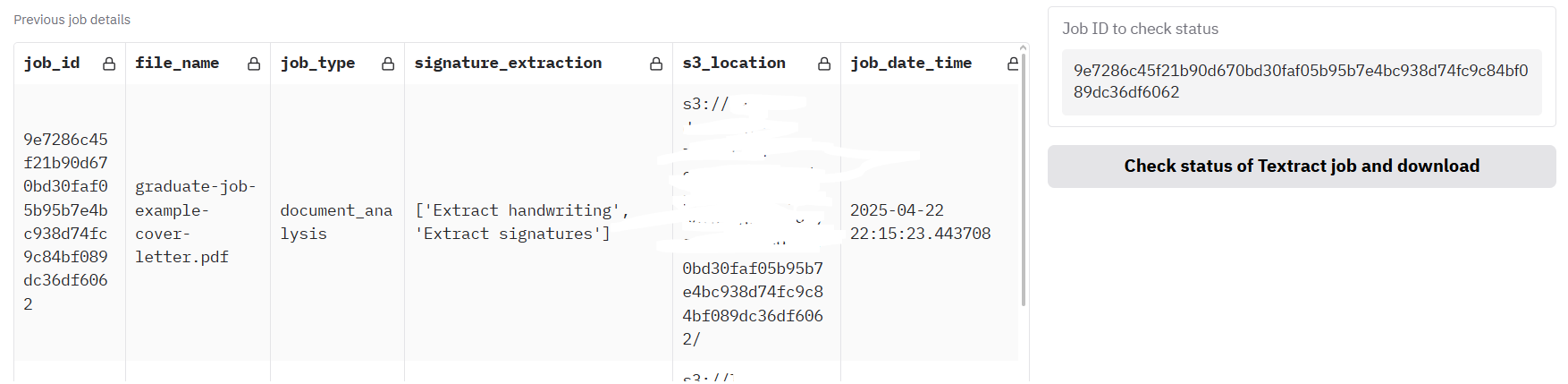

This option can be enabled by your system admin, in the config file ('SHOW_BULK_TEXTRACT_CALL_OPTIONS' environment variable, and subsequent variables). Using this, you will have the option to submit whole documents in quick succession to the AWS Textract service to get extracted text outputs quickly (faster than using the 'Redact document' process described here).

|

| 430 |

+

|

| 431 |

+

### Starting a new Textract API job

|

| 432 |

+

|

| 433 |

+

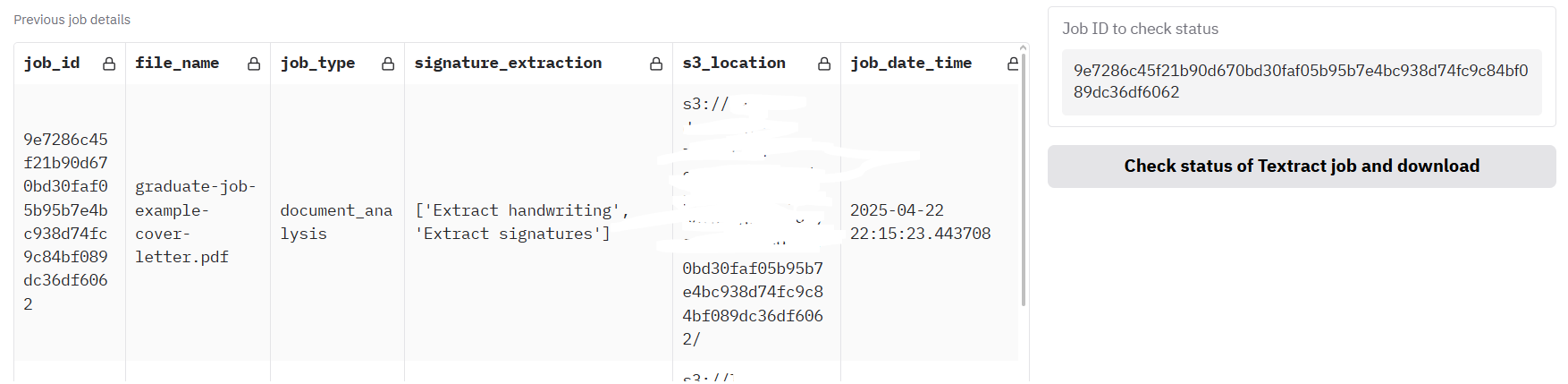

To use this feature, first upload a document file in the file input box [in the usual way](#upload-files-to-the-app) on the first tab of the app. Under AWS Textract signature detection you can select whether or not you would like to analyse signatures or not (with a [cost implication](#optional---select-signature-extraction)).

|

| 434 |

+

|

| 435 |

+

Then, open the section under the heading 'Submit whole document to AWS Textract API...'.

|

| 436 |

+

|

| 437 |

+

|

| 438 |

+

|

| 439 |

+

Click 'Analyse document with AWS Textract API call'. After a few seconds, the job should be submitted to the AWS Textract service. The box 'Job ID to check status' should now have an ID filled in. If it is not already filled with previous jobs (up to seven days old), the table should have a row added with details of the new API job.

|

| 440 |

+

|

| 441 |

+

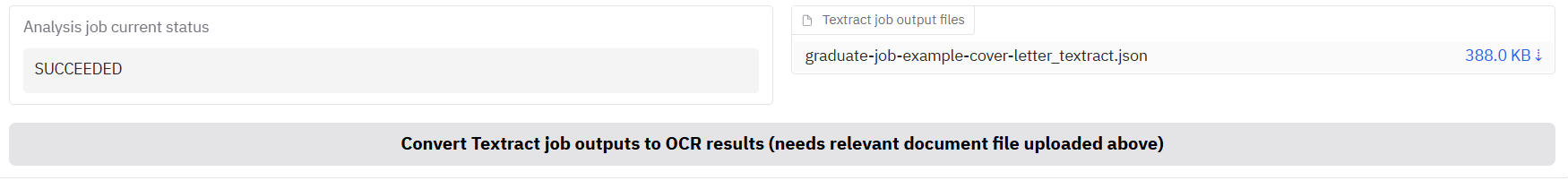

Click the button underneath, 'Check status of Textract job and download', to see progress on the job. Processing will continue in the background until the job is ready, so it is worth periodically clicking this button to see if the outputs are ready. In testing, and as a rough estimate, it seems like this process takes about five seconds per page. However, this has not been tested with very large documents. Once ready, the '_textract.json' output should appear below.

|

| 442 |

+

|

| 443 |

+

### Textract API job outputs

|

| 444 |

+

|

| 445 |

+

The '_textract.json' output can be used to speed up further redaction tasks as [described previously](#optional---costs-and-time-estimation), the 'Existing Textract output file found' flag should now be ticked.

|

| 446 |

+

|

| 447 |

+

|

| 448 |

+

|

| 449 |

+

You can now easily get the '..._ocr_output.csv' redaction output based on this '_textract.json' (described in [Redaction outputs](#redaction-outputs)) by clicking on the button 'Convert Textract job outputs to OCR results'. You can now use this file e.g. for [identifying duplicate pages](#identifying-and-redacting-duplicate-pages), or for redaction review.

|

| 450 |

+

|

| 451 |

## Using AWS Textract and Comprehend when not running in an AWS environment

|

| 452 |

|

| 453 |

AWS Textract and Comprehend give much better results for text extraction and document redaction than the local model options in the app. The most secure way to access them in the Redaction app is to run the app in a secure AWS environment with relevant permissions. Alternatively, you could run the app on your own system while logged in to AWS SSO with relevant permissions.

|

|

|

|

| 467 |

|

| 468 |

The app should then pick up these keys when trying to access the AWS Textract and Comprehend services during redaction.

|

| 469 |

|

| 470 |

+

Again, a lot can potentially go wrong with AWS solutions that are insecure, so before trying the above please consult with your AWS and data security teams.

|

| 471 |

+

|

| 472 |

+

## Modifying and merging redaction review files

|

| 473 |

+

|

| 474 |

+

You can find the folder containing the files discussed in this section [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/merge_review_files/).

|

| 475 |

+

|

| 476 |

+

As well as serving as inputs to the document redaction app's review function, the 'review_file.csv' output can be modified outside of the app, and also merged with others from multiple redaction attempts on the same file. This gives you the flexibility to change redaction details outside of the app.

|

| 477 |

+

|

| 478 |

+

### Modifying existing redaction review files

|

| 479 |

+

If you open up a 'review_file' csv output using a spreadsheet software program such as Microsoft Excel you can easily modify redaction properties. Open the file '[Partnership-Agreement-Toolkit_0_0_redacted.pdf_review_file_local.csv](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/merge_review_files/Partnership-Agreement-Toolkit_0_0.pdf_review_file_local.csv)', and you should see a spreadshet with just four suggested redactions (see below). The following instructions are for using Excel.

|

| 480 |

+

|

| 481 |

+

|

| 482 |

+

|

| 483 |

+

The first thing we can do is remove the first row - 'et' is suggested as a person, but is obviously not a genuine instance of personal information. Right click on the row number and select delete on this menu. Next, let's imagine that what the app identified as a 'phone number' was in fact another type of number and so we wanted to change the label. Simply click on the relevant label cells, let's change it to 'SECURITY_NUMBER'. You could also use 'Finad & Select' -> 'Replace' from the top ribbon menu if you wanted to change a number of labels simultaneously.

|

| 484 |

+

|

| 485 |

+

How about we wanted to change the colour of the 'email address' entry on the redaction review tab of the redaction app? The colours in a review file are based on an RGB scale with three numbers ranging from 0-255. [You can find suitable colours here](https://rgbcolorpicker.com). Using this scale, if I wanted my review box to be pure blue, I can change the cell value to (0,0,255).

|

| 486 |

+

|

| 487 |

+

Imagine that a redaction box was slightly too small, and I didn't want to use the in-app options to change the size. In the review file csv, we can modify e.g. the ymin and ymax values for any box to increase the extent of the redaction box. For the 'email address' entry, let's decrease ymin by 5, and increase ymax by 5.

|

| 488 |

+

|

| 489 |

+

I have saved an output file following the above steps as '[Partnership-Agreement-Toolkit_0_0_redacted.pdf_review_file_local_mod.csv](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/merge_review_files/outputs/Partnership-Agreement-Toolkit_0_0.pdf_review_file_local_mod.csv)' in the same folder that the original was found. Let's upload this file to the app along with the original pdf to see how the redactions look now.

|

| 490 |

+

|

| 491 |

+

|

| 492 |

+

|

| 493 |

+

We can see from the above that we have successfully removed a redaction box, changed labels, colours, and redaction box sizes.

|

app.py

CHANGED

|

@@ -4,11 +4,11 @@ import pandas as pd

|

|

| 4 |

import gradio as gr

|

| 5 |

from gradio_image_annotation import image_annotator

|

| 6 |

|

| 7 |

-

from tools.config import OUTPUT_FOLDER, INPUT_FOLDER, RUN_DIRECT_MODE, MAX_QUEUE_SIZE, DEFAULT_CONCURRENCY_LIMIT, MAX_FILE_SIZE, GRADIO_SERVER_PORT, ROOT_PATH, GET_DEFAULT_ALLOW_LIST, ALLOW_LIST_PATH, S3_ALLOW_LIST_PATH, FEEDBACK_LOGS_FOLDER, ACCESS_LOGS_FOLDER, USAGE_LOGS_FOLDER, TESSERACT_FOLDER, POPPLER_FOLDER, REDACTION_LANGUAGE, GET_COST_CODES, COST_CODES_PATH, S3_COST_CODES_PATH, ENFORCE_COST_CODES, DISPLAY_FILE_NAMES_IN_LOGS, SHOW_COSTS, RUN_AWS_FUNCTIONS, DOCUMENT_REDACTION_BUCKET, SHOW_BULK_TEXTRACT_CALL_OPTIONS, TEXTRACT_BULK_ANALYSIS_BUCKET, TEXTRACT_BULK_ANALYSIS_INPUT_SUBFOLDER, TEXTRACT_BULK_ANALYSIS_OUTPUT_SUBFOLDER, SESSION_OUTPUT_FOLDER, LOAD_PREVIOUS_TEXTRACT_JOBS_S3, TEXTRACT_JOBS_S3_LOC, TEXTRACT_JOBS_LOCAL_LOC, HOST_NAME, DEFAULT_COST_CODE, OUTPUT_COST_CODES_PATH, OUTPUT_ALLOW_LIST_PATH

|

| 8 |

from tools.helper_functions import put_columns_in_df, get_connection_params, reveal_feedback_buttons, custom_regex_load, reset_state_vars, load_in_default_allow_list, tesseract_ocr_option, text_ocr_option, textract_option, local_pii_detector, aws_pii_detector, no_redaction_option, reset_review_vars, merge_csv_files, load_all_output_files, update_dataframe, check_for_existing_textract_file, load_in_default_cost_codes, enforce_cost_codes, calculate_aws_costs, calculate_time_taken, reset_base_dataframe, reset_ocr_base_dataframe, update_cost_code_dataframe_from_dropdown_select

|

| 9 |

from tools.aws_functions import upload_file_to_s3, download_file_from_s3

|

| 10 |

from tools.file_redaction import choose_and_run_redactor

|

| 11 |

-

from tools.file_conversion import prepare_image_or_pdf, get_input_file_names

|

| 12 |

from tools.redaction_review import apply_redactions_to_review_df_and_files, update_all_page_annotation_object_based_on_previous_page, decrease_page, increase_page, update_annotator_object_and_filter_df, update_entities_df_recogniser_entities, update_entities_df_page, update_entities_df_text, df_select_callback, convert_df_to_xfdf, convert_xfdf_to_dataframe, reset_dropdowns, exclude_selected_items_from_redaction, undo_last_removal, update_selected_review_df_row_colour, update_all_entity_df_dropdowns, df_select_callback_cost, update_other_annotator_number_from_current, update_annotator_page_from_review_df, df_select_callback_ocr, df_select_callback_textract_api

|

| 13 |

from tools.data_anonymise import anonymise_data_files

|

| 14 |

from tools.auth import authenticate_user

|

|

@@ -572,9 +572,9 @@ with app:

|

|

| 572 |

text_entity_dropdown.select(update_entities_df_text, inputs=[text_entity_dropdown, recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown], outputs=[recogniser_entity_dataframe, recogniser_entity_dropdown, page_entity_dropdown])

|

| 573 |

|

| 574 |

# Clicking on a cell in the recogniser entity dataframe will take you to that page, and also highlight the target redaction box in blue

|

| 575 |

-

recogniser_entity_dataframe.select(df_select_callback, inputs=[recogniser_entity_dataframe], outputs=[

|

| 576 |

-

success(update_selected_review_df_row_colour, inputs=[selected_entity_dataframe_row, review_file_state, selected_entity_id, selected_entity_colour

|

| 577 |

-

success(update_annotator_page_from_review_df, inputs=[review_file_state, images_pdf_state, page_sizes,

|

| 578 |

|

| 579 |

reset_dropdowns_btn.click(reset_dropdowns, inputs=[recogniser_entity_dataframe_base], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown]).\

|

| 580 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state])

|

|

@@ -733,7 +733,7 @@ with app:

|

|

| 733 |

if __name__ == "__main__":

|

| 734 |

if RUN_DIRECT_MODE == "0":

|

| 735 |

|

| 736 |

-

if

|

| 737 |

app.queue(max_size=int(MAX_QUEUE_SIZE), default_concurrency_limit=int(DEFAULT_CONCURRENCY_LIMIT)).launch(show_error=True, inbrowser=True, auth=authenticate_user, max_file_size=MAX_FILE_SIZE, server_port=GRADIO_SERVER_PORT, root_path=ROOT_PATH)

|

| 738 |

else:

|

| 739 |

app.queue(max_size=int(MAX_QUEUE_SIZE), default_concurrency_limit=int(DEFAULT_CONCURRENCY_LIMIT)).launch(show_error=True, inbrowser=True, max_file_size=MAX_FILE_SIZE, server_port=GRADIO_SERVER_PORT, root_path=ROOT_PATH)

|

|

|

|

| 4 |

import gradio as gr

|

| 5 |

from gradio_image_annotation import image_annotator

|

| 6 |

|

| 7 |

+

from tools.config import OUTPUT_FOLDER, INPUT_FOLDER, RUN_DIRECT_MODE, MAX_QUEUE_SIZE, DEFAULT_CONCURRENCY_LIMIT, MAX_FILE_SIZE, GRADIO_SERVER_PORT, ROOT_PATH, GET_DEFAULT_ALLOW_LIST, ALLOW_LIST_PATH, S3_ALLOW_LIST_PATH, FEEDBACK_LOGS_FOLDER, ACCESS_LOGS_FOLDER, USAGE_LOGS_FOLDER, TESSERACT_FOLDER, POPPLER_FOLDER, REDACTION_LANGUAGE, GET_COST_CODES, COST_CODES_PATH, S3_COST_CODES_PATH, ENFORCE_COST_CODES, DISPLAY_FILE_NAMES_IN_LOGS, SHOW_COSTS, RUN_AWS_FUNCTIONS, DOCUMENT_REDACTION_BUCKET, SHOW_BULK_TEXTRACT_CALL_OPTIONS, TEXTRACT_BULK_ANALYSIS_BUCKET, TEXTRACT_BULK_ANALYSIS_INPUT_SUBFOLDER, TEXTRACT_BULK_ANALYSIS_OUTPUT_SUBFOLDER, SESSION_OUTPUT_FOLDER, LOAD_PREVIOUS_TEXTRACT_JOBS_S3, TEXTRACT_JOBS_S3_LOC, TEXTRACT_JOBS_LOCAL_LOC, HOST_NAME, DEFAULT_COST_CODE, OUTPUT_COST_CODES_PATH, OUTPUT_ALLOW_LIST_PATH, COGNITO_AUTH

|

| 8 |

from tools.helper_functions import put_columns_in_df, get_connection_params, reveal_feedback_buttons, custom_regex_load, reset_state_vars, load_in_default_allow_list, tesseract_ocr_option, text_ocr_option, textract_option, local_pii_detector, aws_pii_detector, no_redaction_option, reset_review_vars, merge_csv_files, load_all_output_files, update_dataframe, check_for_existing_textract_file, load_in_default_cost_codes, enforce_cost_codes, calculate_aws_costs, calculate_time_taken, reset_base_dataframe, reset_ocr_base_dataframe, update_cost_code_dataframe_from_dropdown_select

|

| 9 |

from tools.aws_functions import upload_file_to_s3, download_file_from_s3

|

| 10 |

from tools.file_redaction import choose_and_run_redactor

|

| 11 |

+

from tools.file_conversion import prepare_image_or_pdf, get_input_file_names

|

| 12 |

from tools.redaction_review import apply_redactions_to_review_df_and_files, update_all_page_annotation_object_based_on_previous_page, decrease_page, increase_page, update_annotator_object_and_filter_df, update_entities_df_recogniser_entities, update_entities_df_page, update_entities_df_text, df_select_callback, convert_df_to_xfdf, convert_xfdf_to_dataframe, reset_dropdowns, exclude_selected_items_from_redaction, undo_last_removal, update_selected_review_df_row_colour, update_all_entity_df_dropdowns, df_select_callback_cost, update_other_annotator_number_from_current, update_annotator_page_from_review_df, df_select_callback_ocr, df_select_callback_textract_api

|

| 13 |

from tools.data_anonymise import anonymise_data_files

|

| 14 |

from tools.auth import authenticate_user

|

|

|

|

| 572 |

text_entity_dropdown.select(update_entities_df_text, inputs=[text_entity_dropdown, recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown], outputs=[recogniser_entity_dataframe, recogniser_entity_dropdown, page_entity_dropdown])

|

| 573 |

|

| 574 |

# Clicking on a cell in the recogniser entity dataframe will take you to that page, and also highlight the target redaction box in blue

|

| 575 |

+

recogniser_entity_dataframe.select(df_select_callback, inputs=[recogniser_entity_dataframe], outputs=[selected_entity_dataframe_row]).\

|

| 576 |

+

success(update_selected_review_df_row_colour, inputs=[selected_entity_dataframe_row, review_file_state, selected_entity_id, selected_entity_colour], outputs=[review_file_state, selected_entity_id, selected_entity_colour]).\

|

| 577 |

+

success(update_annotator_page_from_review_df, inputs=[review_file_state, images_pdf_state, page_sizes, all_image_annotations_state, annotator, selected_entity_dataframe_row, input_folder_textbox, doc_full_file_name_textbox], outputs=[annotator, all_image_annotations_state, annotate_current_page, page_sizes, review_file_state, annotate_previous_page])

|

| 578 |

|

| 579 |

reset_dropdowns_btn.click(reset_dropdowns, inputs=[recogniser_entity_dataframe_base], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown]).\

|

| 580 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state])

|

|

|

|

| 733 |

if __name__ == "__main__":

|

| 734 |

if RUN_DIRECT_MODE == "0":

|

| 735 |

|

| 736 |

+

if COGNITO_AUTH == "1":

|

| 737 |

app.queue(max_size=int(MAX_QUEUE_SIZE), default_concurrency_limit=int(DEFAULT_CONCURRENCY_LIMIT)).launch(show_error=True, inbrowser=True, auth=authenticate_user, max_file_size=MAX_FILE_SIZE, server_port=GRADIO_SERVER_PORT, root_path=ROOT_PATH)

|

| 738 |

else:

|

| 739 |

app.queue(max_size=int(MAX_QUEUE_SIZE), default_concurrency_limit=int(DEFAULT_CONCURRENCY_LIMIT)).launch(show_error=True, inbrowser=True, max_file_size=MAX_FILE_SIZE, server_port=GRADIO_SERVER_PORT, root_path=ROOT_PATH)

|

tools/config.py

CHANGED

|

@@ -237,7 +237,7 @@ else: OUTPUT_ALLOW_LIST_PATH = 'config/default_allow_list.csv'

|

|

| 237 |

|

| 238 |

SHOW_COSTS = get_or_create_env_var('SHOW_COSTS', 'False')

|

| 239 |

|

| 240 |

-

GET_COST_CODES = get_or_create_env_var('GET_COST_CODES', '

|

| 241 |

|

| 242 |

DEFAULT_COST_CODE = get_or_create_env_var('DEFAULT_COST_CODE', '')

|

| 243 |

|

|

@@ -246,7 +246,7 @@ COST_CODES_PATH = get_or_create_env_var('COST_CODES_PATH', '') # 'config/COST_CE

|

|

| 246 |

S3_COST_CODES_PATH = get_or_create_env_var('S3_COST_CODES_PATH', '') # COST_CENTRES.csv # This is a path within the DOCUMENT_REDACTION_BUCKET

|

| 247 |

|

| 248 |

if COST_CODES_PATH: OUTPUT_COST_CODES_PATH = COST_CODES_PATH

|

| 249 |

-

else: OUTPUT_COST_CODES_PATH = '

|

| 250 |

|

| 251 |

ENFORCE_COST_CODES = get_or_create_env_var('ENFORCE_COST_CODES', 'False') # If you have cost codes listed, is it compulsory to choose one before redacting?

|

| 252 |

|

|

|

|

| 237 |

|

| 238 |

SHOW_COSTS = get_or_create_env_var('SHOW_COSTS', 'False')

|

| 239 |

|

| 240 |

+

GET_COST_CODES = get_or_create_env_var('GET_COST_CODES', 'True')

|

| 241 |

|

| 242 |

DEFAULT_COST_CODE = get_or_create_env_var('DEFAULT_COST_CODE', '')

|

| 243 |

|

|

|

|

| 246 |

S3_COST_CODES_PATH = get_or_create_env_var('S3_COST_CODES_PATH', '') # COST_CENTRES.csv # This is a path within the DOCUMENT_REDACTION_BUCKET

|

| 247 |

|

| 248 |

if COST_CODES_PATH: OUTPUT_COST_CODES_PATH = COST_CODES_PATH

|

| 249 |

+

else: OUTPUT_COST_CODES_PATH = ''

|

| 250 |

|

| 251 |

ENFORCE_COST_CODES = get_or_create_env_var('ENFORCE_COST_CODES', 'False') # If you have cost codes listed, is it compulsory to choose one before redacting?

|

| 252 |

|

tools/file_conversion.py

CHANGED

|

@@ -21,6 +21,7 @@ from PIL import Image

|

|

| 21 |

from scipy.spatial import cKDTree

|

| 22 |

import random

|

| 23 |

import string

|

|

|

|

| 24 |

|

| 25 |

IMAGE_NUM_REGEX = re.compile(r'_(\d+)\.png$')

|

| 26 |

|

|

@@ -617,11 +618,10 @@ def prepare_image_or_pdf(

|

|

| 617 |

|

| 618 |

elif file_extension in ['.csv']:

|

| 619 |

if '_review_file' in file_path_without_ext:

|

| 620 |

-

#print("file_path:", file_path)

|

| 621 |

review_file_csv = read_file(file_path)

|

| 622 |

all_annotations_object = convert_review_df_to_annotation_json(review_file_csv, image_file_paths, page_sizes)

|

| 623 |

json_from_csv = True

|

| 624 |

-

print("Converted CSV review file to image annotation object")

|

| 625 |

elif '_ocr_output' in file_path_without_ext:

|

| 626 |

all_line_level_ocr_results_df = read_file(file_path)

|

| 627 |

json_from_csv = False

|

|

@@ -850,121 +850,246 @@ def remove_duplicate_images_with_blank_boxes(data: List[dict]) -> List[dict]:

|

|

| 850 |

|

| 851 |

return result

|

| 852 |

|

| 853 |

-

def divide_coordinates_by_page_sizes(

|

| 854 |

-

|

| 855 |

-

|

| 856 |

-

|

| 857 |

-

|

| 858 |

-

|

| 859 |

-

|

| 860 |

-

coord_cols = [xmin, xmax, ymin, ymax]

|

| 861 |

-

for col in coord_cols:

|

| 862 |

-

review_file_df.loc[:, col] = pd.to_numeric(review_file_df[col], errors="coerce")

|

| 863 |

-

|

| 864 |

-

review_file_df_orig = review_file_df.copy().loc[(review_file_df[xmin] <= 1) & (review_file_df[xmax] <= 1) & (review_file_df[ymin] <= 1) & (review_file_df[ymax] <= 1),:]

|

| 865 |

-

|

| 866 |

-

#print("review_file_df_orig:", review_file_df_orig)

|

| 867 |

-

|

| 868 |

-

review_file_df_div = review_file_df.loc[(review_file_df[xmin] > 1) & (review_file_df[xmax] > 1) & (review_file_df[ymin] > 1) & (review_file_df[ymax] > 1),:]

|

| 869 |

-

|

| 870 |

-

#print("review_file_df_div:", review_file_df_div)

|

| 871 |

-

|

| 872 |

-

review_file_df_div.loc[:, "page"] = pd.to_numeric(review_file_df_div["page"], errors="coerce")

|

| 873 |

|

| 874 |

-

|

|

|

|

| 875 |

|

| 876 |

-

|

| 877 |

-

|

| 878 |

-

|

|

|

|

|

|

|

| 879 |

|

| 880 |

-

|

| 881 |

-

|

| 882 |

-

|

| 883 |

-

|

|

|

|

| 884 |

|

| 885 |

-

|

| 886 |

-

|

|

|

|

|

|

|

| 887 |

|

| 888 |

-

|

| 889 |

-

|

| 890 |

-

|

| 891 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 892 |

|

| 893 |

-

|

| 894 |

-

|

| 895 |

-

|

| 896 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 897 |

else:

|

| 898 |

-

|

| 899 |

|

| 900 |

-

# Only sort if the DataFrame is not empty and contains the required columns

|

| 901 |

-

required_sort_columns = {"page", xmin, ymin}

|

| 902 |

-

if not review_file_df_out.empty and required_sort_columns.issubset(review_file_df_out.columns):

|

| 903 |

-

review_file_df_out.sort_values(["page", ymin, xmin], inplace=True)

|

| 904 |

|

| 905 |

-

|

|

|

|

| 906 |

|

| 907 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 908 |

|

| 909 |

-

def multiply_coordinates_by_page_sizes(review_file_df: pd.DataFrame, page_sizes_df: pd.DataFrame, xmin="xmin", xmax="xmax", ymin="ymin", ymax="ymax"):

|

| 910 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 911 |

|

| 912 |

-

if xmin in review_file_df.columns and not review_file_df.empty:

|

| 913 |

|

| 914 |

-

|

| 915 |

-

|

| 916 |

-

|

|

|

|

| 917 |

|

| 918 |

-

|

| 919 |

-

review_file_df_orig = review_file_df.loc[

|

| 920 |

-

(review_file_df[xmin] > 1) & (review_file_df[xmax] > 1) &

|

| 921 |

-

(review_file_df[ymin] > 1) & (review_file_df[ymax] > 1), :].copy()

|

| 922 |

|

| 923 |

-

|

| 924 |

-

|

| 925 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

| 926 |

|

| 927 |

-

|

| 928 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 929 |

|

| 930 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 931 |

|

| 932 |

-

|

| 933 |

-

|

| 934 |

-

|

|

|

|

| 935 |

|

| 936 |

-

|

| 937 |

-

|

| 938 |

-

|

| 939 |

-

review_file_df_na = review_file_df.loc[review_file_df["image_width"].isna()].copy()

|

| 940 |

|

| 941 |

-

|

| 942 |

-

|

| 943 |

-

|

|

|

|

| 944 |

|

| 945 |

-

# Multiply coordinates by image sizes

|

| 946 |

-

review_file_df_not_na[xmin] *= review_file_df_not_na["image_width"]

|

| 947 |

-

review_file_df_not_na[xmax] *= review_file_df_not_na["image_width"]

|

| 948 |

-

review_file_df_not_na[ymin] *= review_file_df_not_na["image_height"]

|

| 949 |

-