Spaces:

Running

Running

fifth commit

Browse files- README.md +26 -1

- Screenshots.md +27 -0

- app/database.py +162 -1

- app/evaluation.py +134 -21

- app/generate_ground_truth.py +113 -38

- app/main.py +88 -42

- app/rag_evaluation.py +7 -5

- data/evaluation_results.csv +181 -0

- data/ground-truth-retrieval.csv +25 -0

- data/sqlite.db +0 -0

- docker-compose.yaml +11 -2

- grafana/dashboards/rag_evaluation.json +172 -0

- grafana/provisioning/dashboards/dashboards.yaml +14 -0

- grafana/provisioning/dashboards/rag_evaluation.json +0 -129

- grafana/provisioning/datasources/sqlite.yaml +18 -3

- image-1.png +0 -0

- image-10.png +0 -0

- image-11.png +0 -0

- image-2.png +0 -0

- image-3.png +0 -0

- image-4.png +0 -0

- image-5.png +0 -0

- image-6.png +0 -0

- image-7.png +0 -0

- image-8.png +0 -0

- image-9.png +0 -0

- image.png +0 -0

- run-docker-compose.sh +43 -16

README.md

CHANGED

|

@@ -100,7 +100,32 @@ youtube-rag-app/

|

|

| 100 |

## Getting Started

|

| 101 |

|

| 102 |

git clone [email protected]:ganesh3/rag-youtube-assistant.git

|

| 103 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

| 104 |

|

| 105 |

## License

|

| 106 |

GPL v3

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 100 |

## Getting Started

|

| 101 |

|

| 102 |

git clone [email protected]:ganesh3/rag-youtube-assistant.git

|

| 103 |

+

cd rag-youtube-assistant

|

| 104 |

+

docker-compose build app

|

| 105 |

+

docker-compose up -d

|

| 106 |

+

|

| 107 |

+

You need to have Docker Desktop installed on your laptop/workstation along with WSL2 on windows machine.

|

| 108 |

|

| 109 |

## License

|

| 110 |

GPL v3

|

| 111 |

+

|

| 112 |

+

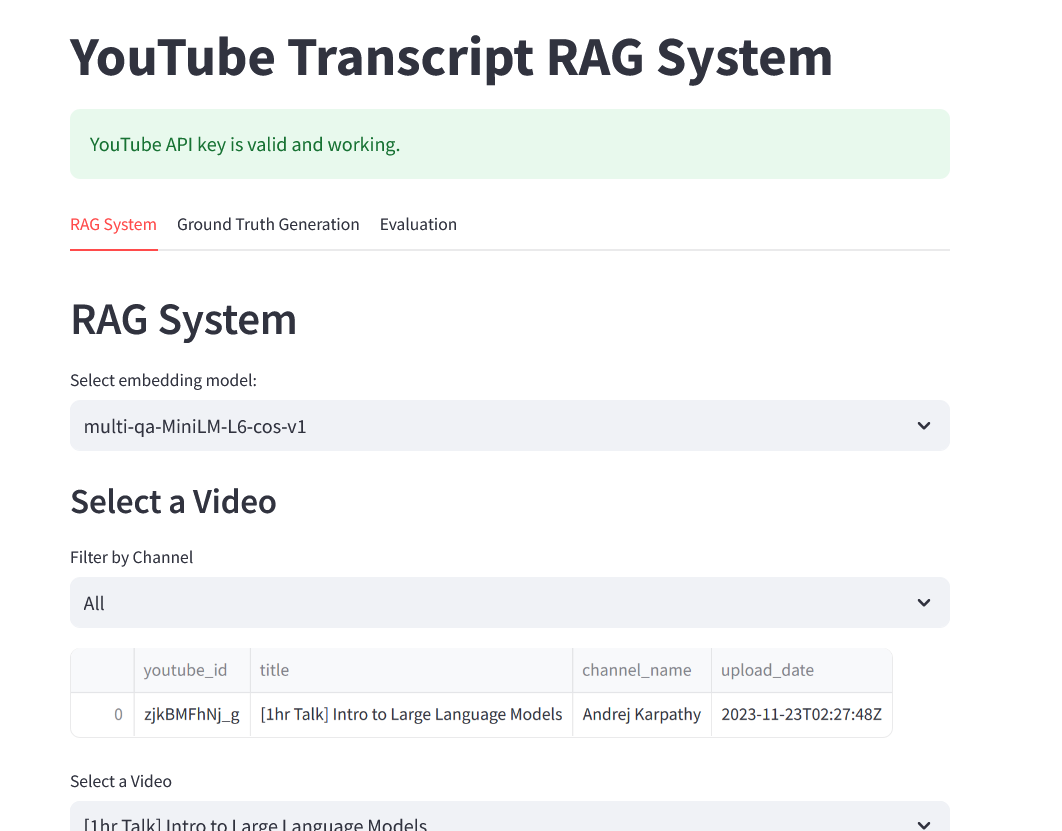

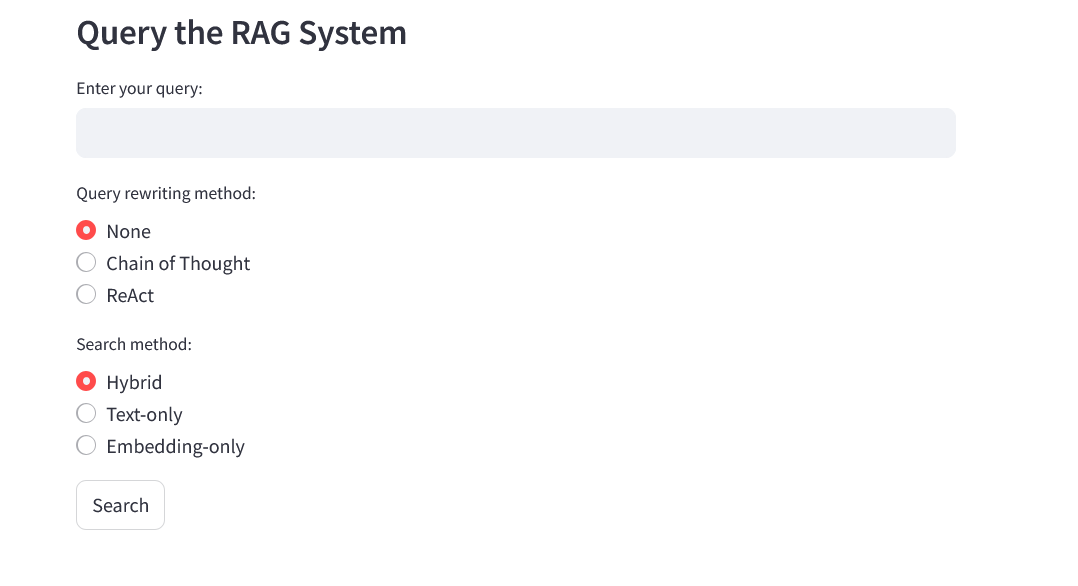

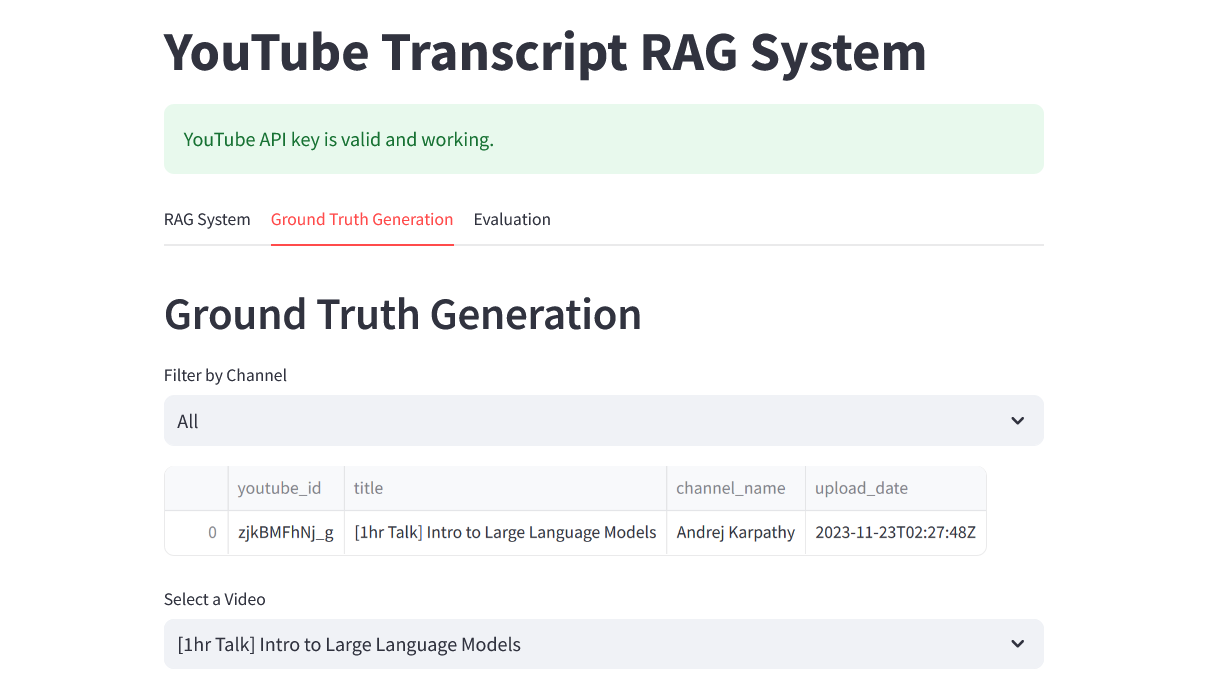

### Interface

|

| 113 |

+

|

| 114 |

+

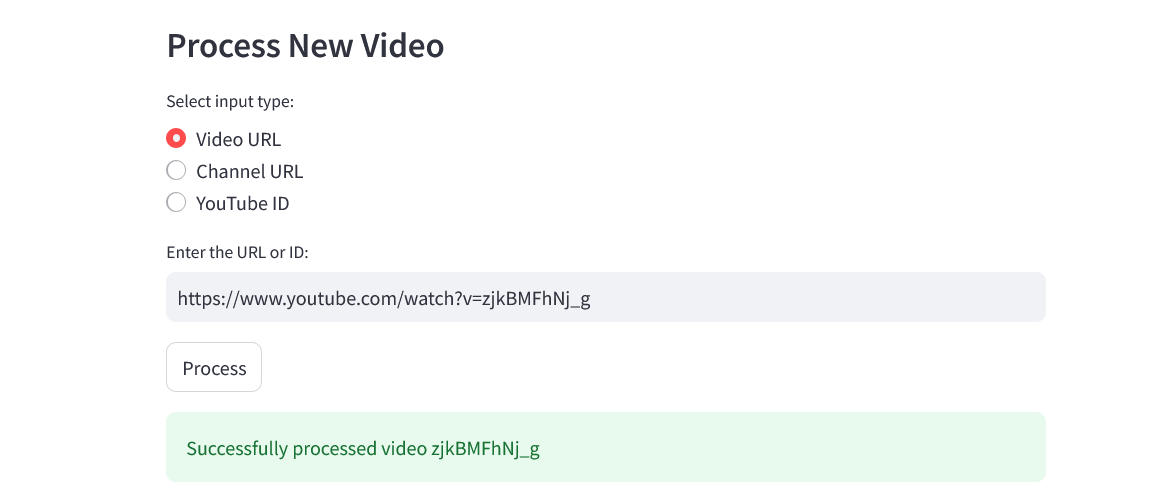

I use Streamlit to ingest the youtube transcripts, query the transcripts uing LLM & RAG, generate ground truth and evaluate the ground truth.

|

| 115 |

+

|

| 116 |

+

### Ingestion

|

| 117 |

+

|

| 118 |

+

I am ingesting Youtube transcripts using Youtube Data API v3 and Youtube Transcript package and the code is in transcript_extractor.py and it is run on the Streamlit app using main.py.

|

| 119 |

+

|

| 120 |

+

### Retrieval

|

| 121 |

+

|

| 122 |

+

"hit_rate":1, "mrr":1

|

| 123 |

+

|

| 124 |

+

### RAG Flow

|

| 125 |

+

|

| 126 |

+

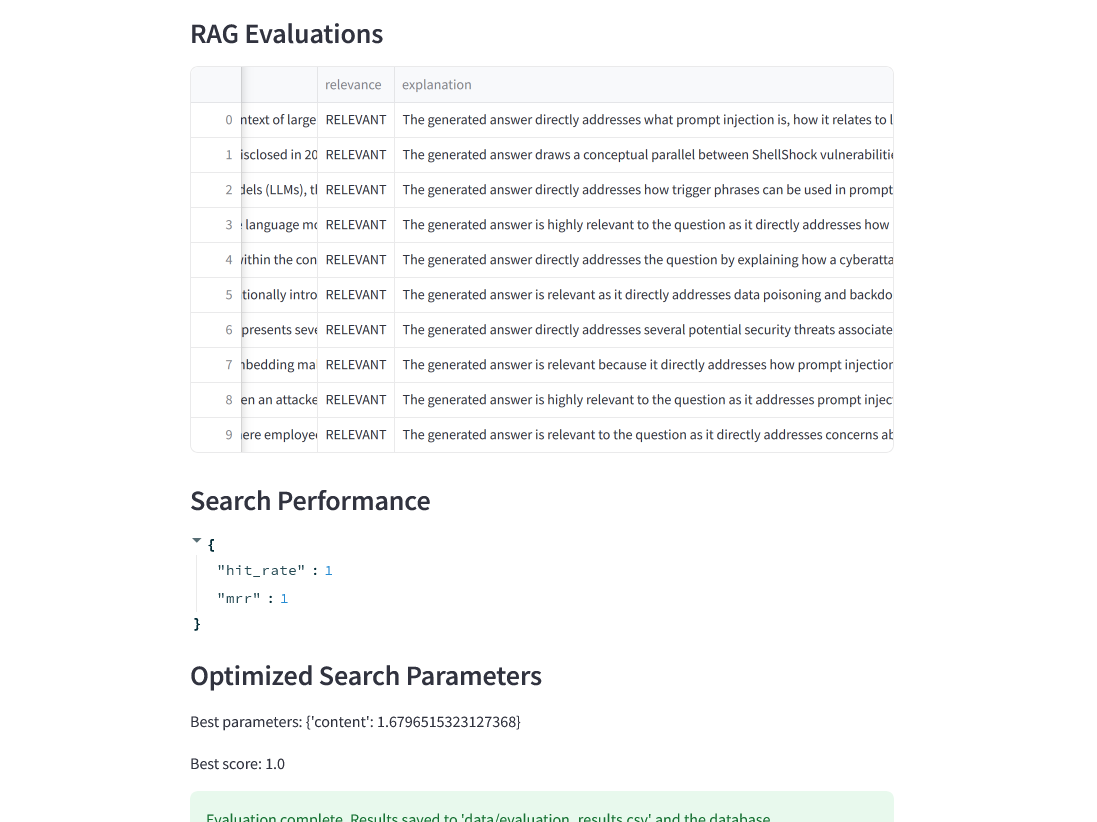

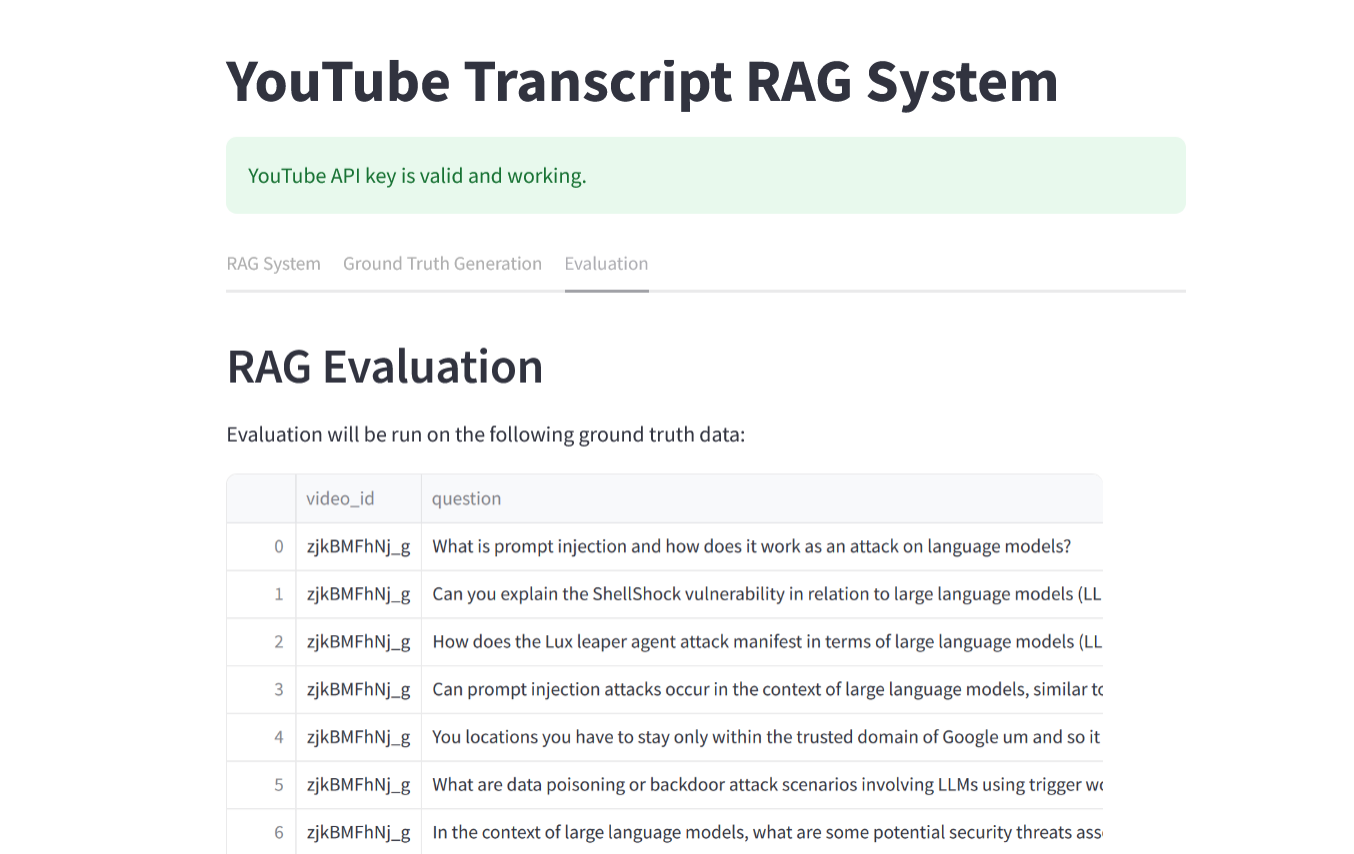

I used the LLM as a Judge metric to evaluate the quality of our RAG Flow on my local machine with CPU and hence the total records evaluated are pretty low (12).

|

| 127 |

+

|

| 128 |

+

* RELEVANT - 12 (100%)

|

| 129 |

+

* PARTLY_RELEVANT - 0 (0%)

|

| 130 |

+

* NON RELEVANT - 0 (0%)

|

| 131 |

+

|

Screenshots.md

ADDED

|

@@ -0,0 +1,27 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

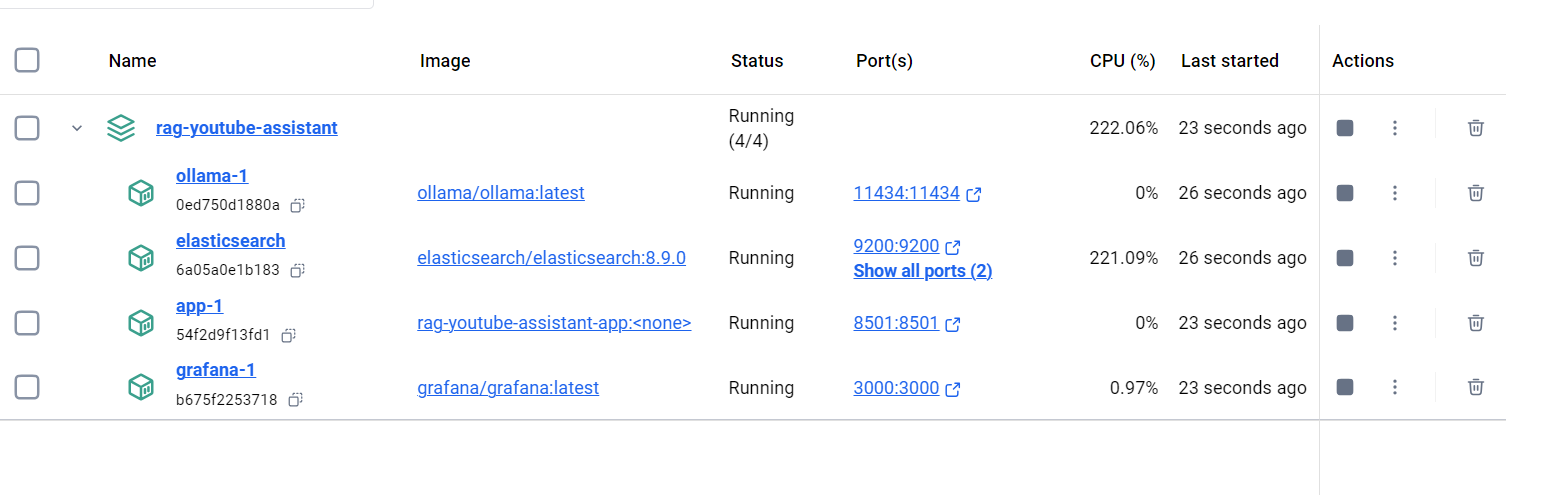

### Docker deployment

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

### Ingestion

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

### RAG

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

### Ground Truth Generation

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

### RAG Evaluation

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

|

app/database.py

CHANGED

|

@@ -11,6 +11,7 @@ class DatabaseHandler:

|

|

| 11 |

def create_tables(self):

|

| 12 |

with sqlite3.connect(self.db_path) as conn:

|

| 13 |

cursor = conn.cursor()

|

|

|

|

| 14 |

cursor.execute('''

|

| 15 |

CREATE TABLE IF NOT EXISTS videos (

|

| 16 |

id INTEGER PRIMARY KEY AUTOINCREMENT,

|

|

@@ -53,6 +54,50 @@ class DatabaseHandler:

|

|

| 53 |

FOREIGN KEY (embedding_model_id) REFERENCES embedding_models (id)

|

| 54 |

)

|

| 55 |

''')

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 56 |

conn.commit()

|

| 57 |

|

| 58 |

def update_schema(self):

|

|

@@ -186,4 +231,120 @@ class DatabaseHandler:

|

|

| 186 |

# SET transcript_content = ?

|

| 187 |

# WHERE youtube_id = ?

|

| 188 |

# ''', (transcript_content, youtube_id))

|

| 189 |

-

# conn.commit()

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 11 |

def create_tables(self):

|

| 12 |

with sqlite3.connect(self.db_path) as conn:

|

| 13 |

cursor = conn.cursor()

|

| 14 |

+

# Existing tables

|

| 15 |

cursor.execute('''

|

| 16 |

CREATE TABLE IF NOT EXISTS videos (

|

| 17 |

id INTEGER PRIMARY KEY AUTOINCREMENT,

|

|

|

|

| 54 |

FOREIGN KEY (embedding_model_id) REFERENCES embedding_models (id)

|

| 55 |

)

|

| 56 |

''')

|

| 57 |

+

|

| 58 |

+

# New tables for ground truth and evaluation

|

| 59 |

+

cursor.execute('''

|

| 60 |

+

CREATE TABLE IF NOT EXISTS ground_truth (

|

| 61 |

+

id INTEGER PRIMARY KEY AUTOINCREMENT,

|

| 62 |

+

video_id TEXT,

|

| 63 |

+

question TEXT,

|

| 64 |

+

generation_date TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

|

| 65 |

+

UNIQUE(video_id, question)

|

| 66 |

+

)

|

| 67 |

+

''')

|

| 68 |

+

|

| 69 |

+

cursor.execute('''

|

| 70 |

+

CREATE TABLE IF NOT EXISTS search_performance (

|

| 71 |

+

id INTEGER PRIMARY KEY AUTOINCREMENT,

|

| 72 |

+

video_id TEXT,

|

| 73 |

+

hit_rate REAL,

|

| 74 |

+

mrr REAL,

|

| 75 |

+

evaluation_date TIMESTAMP DEFAULT CURRENT_TIMESTAMP

|

| 76 |

+

)

|

| 77 |

+

''')

|

| 78 |

+

|

| 79 |

+

cursor.execute('''

|

| 80 |

+

CREATE TABLE IF NOT EXISTS search_parameters (

|

| 81 |

+

id INTEGER PRIMARY KEY AUTOINCREMENT,

|

| 82 |

+

video_id TEXT,

|

| 83 |

+

parameter_name TEXT,

|

| 84 |

+

parameter_value REAL,

|

| 85 |

+

score REAL,

|

| 86 |

+

evaluation_date TIMESTAMP DEFAULT CURRENT_TIMESTAMP

|

| 87 |

+

)

|

| 88 |

+

''')

|

| 89 |

+

|

| 90 |

+

cursor.execute('''

|

| 91 |

+

CREATE TABLE IF NOT EXISTS rag_evaluations (

|

| 92 |

+

id INTEGER PRIMARY KEY AUTOINCREMENT,

|

| 93 |

+

video_id TEXT,

|

| 94 |

+

question TEXT,

|

| 95 |

+

answer TEXT,

|

| 96 |

+

relevance TEXT,

|

| 97 |

+

explanation TEXT,

|

| 98 |

+

evaluation_date TIMESTAMP DEFAULT CURRENT_TIMESTAMP

|

| 99 |

+

)

|

| 100 |

+

''')

|

| 101 |

conn.commit()

|

| 102 |

|

| 103 |

def update_schema(self):

|

|

|

|

| 231 |

# SET transcript_content = ?

|

| 232 |

# WHERE youtube_id = ?

|

| 233 |

# ''', (transcript_content, youtube_id))

|

| 234 |

+

# conn.commit()

|

| 235 |

+

|

| 236 |

+

def add_ground_truth_questions(self, video_id, questions):

|

| 237 |

+

with sqlite3.connect(self.db_path) as conn:

|

| 238 |

+

cursor = conn.cursor()

|

| 239 |

+

for question in questions:

|

| 240 |

+

try:

|

| 241 |

+

cursor.execute('''

|

| 242 |

+

INSERT OR IGNORE INTO ground_truth (video_id, question)

|

| 243 |

+

VALUES (?, ?)

|

| 244 |

+

''', (video_id, question))

|

| 245 |

+

except sqlite3.IntegrityError:

|

| 246 |

+

continue # Skip duplicate questions

|

| 247 |

+

conn.commit()

|

| 248 |

+

|

| 249 |

+

def get_ground_truth_by_video(self, video_id):

|

| 250 |

+

with sqlite3.connect(self.db_path) as conn:

|

| 251 |

+

cursor = conn.cursor()

|

| 252 |

+

cursor.execute('''

|

| 253 |

+

SELECT gt.*, v.channel_name

|

| 254 |

+

FROM ground_truth gt

|

| 255 |

+

JOIN videos v ON gt.video_id = v.youtube_id

|

| 256 |

+

WHERE gt.video_id = ?

|

| 257 |

+

ORDER BY gt.generation_date DESC

|

| 258 |

+

''', (video_id,))

|

| 259 |

+

return cursor.fetchall()

|

| 260 |

+

|

| 261 |

+

def get_ground_truth_by_channel(self, channel_name):

|

| 262 |

+

with sqlite3.connect(self.db_path) as conn:

|

| 263 |

+

cursor = conn.cursor()

|

| 264 |

+

cursor.execute('''

|

| 265 |

+

SELECT gt.*, v.channel_name

|

| 266 |

+

FROM ground_truth gt

|

| 267 |

+

JOIN videos v ON gt.video_id = v.youtube_id

|

| 268 |

+

WHERE v.channel_name = ?

|

| 269 |

+

ORDER BY gt.generation_date DESC

|

| 270 |

+

''', (channel_name,))

|

| 271 |

+

return cursor.fetchall()

|

| 272 |

+

|

| 273 |

+

def get_all_ground_truth(self):

|

| 274 |

+

with sqlite3.connect(self.db_path) as conn:

|

| 275 |

+

cursor = conn.cursor()

|

| 276 |

+

cursor.execute('''

|

| 277 |

+

SELECT gt.*, v.channel_name

|

| 278 |

+

FROM ground_truth gt

|

| 279 |

+

JOIN videos v ON gt.video_id = v.youtube_id

|

| 280 |

+

ORDER BY gt.generation_date DESC

|

| 281 |

+

''')

|

| 282 |

+

return cursor.fetchall()

|

| 283 |

+

|

| 284 |

+

def save_search_performance(self, video_id, hit_rate, mrr):

|

| 285 |

+

with sqlite3.connect(self.db_path) as conn:

|

| 286 |

+

cursor = conn.cursor()

|

| 287 |

+

cursor.execute('''

|

| 288 |

+

INSERT INTO search_performance (video_id, hit_rate, mrr)

|

| 289 |

+

VALUES (?, ?, ?)

|

| 290 |

+

''', (video_id, hit_rate, mrr))

|

| 291 |

+

conn.commit()

|

| 292 |

+

|

| 293 |

+

def save_search_parameters(self, video_id, parameters, score):

|

| 294 |

+

with sqlite3.connect(self.db_path) as conn:

|

| 295 |

+

cursor = conn.cursor()

|

| 296 |

+

for param_name, param_value in parameters.items():

|

| 297 |

+

cursor.execute('''

|

| 298 |

+

INSERT INTO search_parameters (video_id, parameter_name, parameter_value, score)

|

| 299 |

+

VALUES (?, ?, ?, ?)

|

| 300 |

+

''', (video_id, param_name, param_value, score))

|

| 301 |

+

conn.commit()

|

| 302 |

+

|

| 303 |

+

def save_rag_evaluation(self, evaluation_data):

|

| 304 |

+

with sqlite3.connect(self.db_path) as conn:

|

| 305 |

+

cursor = conn.cursor()

|

| 306 |

+

cursor.execute('''

|

| 307 |

+

INSERT INTO rag_evaluations

|

| 308 |

+

(video_id, question, answer, relevance, explanation)

|

| 309 |

+

VALUES (?, ?, ?, ?, ?)

|

| 310 |

+

''', (

|

| 311 |

+

evaluation_data['video_id'],

|

| 312 |

+

evaluation_data['question'],

|

| 313 |

+

evaluation_data['answer'],

|

| 314 |

+

evaluation_data['relevance'],

|

| 315 |

+

evaluation_data['explanation']

|

| 316 |

+

))

|

| 317 |

+

conn.commit()

|

| 318 |

+

|

| 319 |

+

def get_latest_evaluation_results(self, video_id=None):

|

| 320 |

+

with sqlite3.connect(self.db_path) as conn:

|

| 321 |

+

cursor = conn.cursor()

|

| 322 |

+

if video_id:

|

| 323 |

+

cursor.execute('''

|

| 324 |

+

SELECT * FROM rag_evaluations

|

| 325 |

+

WHERE video_id = ?

|

| 326 |

+

ORDER BY evaluation_date DESC

|

| 327 |

+

''', (video_id,))

|

| 328 |

+

else:

|

| 329 |

+

cursor.execute('''

|

| 330 |

+

SELECT * FROM rag_evaluations

|

| 331 |

+

ORDER BY evaluation_date DESC

|

| 332 |

+

''')

|

| 333 |

+

return cursor.fetchall()

|

| 334 |

+

|

| 335 |

+

def get_latest_search_performance(self, video_id=None):

|

| 336 |

+

with sqlite3.connect(self.db_path) as conn:

|

| 337 |

+

cursor = conn.cursor()

|

| 338 |

+

if video_id:

|

| 339 |

+

cursor.execute('''

|

| 340 |

+

SELECT * FROM search_performance

|

| 341 |

+

WHERE video_id = ?

|

| 342 |

+

ORDER BY evaluation_date DESC

|

| 343 |

+

LIMIT 1

|

| 344 |

+

''', (video_id,))

|

| 345 |

+

else:

|

| 346 |

+

cursor.execute('''

|

| 347 |

+

SELECT * FROM search_performance

|

| 348 |

+

ORDER BY evaluation_date DESC

|

| 349 |

+

''')

|

| 350 |

+

return cursor.fetchall()

|

app/evaluation.py

CHANGED

|

@@ -3,6 +3,10 @@ import numpy as np

|

|

| 3 |

import pandas as pd

|

| 4 |

import json

|

| 5 |

import ollama

|

|

|

|

|

|

|

|

|

|

|

|

|

| 6 |

|

| 7 |

class EvaluationSystem:

|

| 8 |

def __init__(self, data_processor, database_handler):

|

|

@@ -42,7 +46,7 @@ class EvaluationSystem:

|

|

| 42 |

|

| 43 |

relevance_scores.append(self.relevance_scoring(query, retrieved_docs))

|

| 44 |

similarity_scores.append(self.answer_similarity(generated_answer, reference))

|

| 45 |

-

human_scores.append(self.human_evaluation(index_name, query))

|

| 46 |

|

| 47 |

return {

|

| 48 |

"avg_relevance_score": np.mean(relevance_scores),

|

|

@@ -64,17 +68,16 @@ class EvaluationSystem:

|

|

| 64 |

print(f"Error in LLM evaluation: {str(e)}")

|

| 65 |

return None

|

| 66 |

|

| 67 |

-

def evaluate_rag(self, rag_system, ground_truth_file,

|

| 68 |

try:

|

| 69 |

ground_truth = pd.read_csv(ground_truth_file)

|

| 70 |

except FileNotFoundError:

|

| 71 |

print("Ground truth file not found. Please generate ground truth data first.")

|

| 72 |

return None

|

| 73 |

|

| 74 |

-

sample = ground_truth.sample(n=min(sample_size, len(ground_truth)), random_state=1)

|

| 75 |

evaluations = []

|

| 76 |

|

| 77 |

-

for _, row in

|

| 78 |

question = row['question']

|

| 79 |

video_id = row['video_id']

|

| 80 |

|

|

@@ -93,22 +96,132 @@ class EvaluationSystem:

|

|

| 93 |

if prompt_template:

|

| 94 |

evaluation = self.llm_as_judge(question, answer_llm, prompt_template)

|

| 95 |

if evaluation:

|

| 96 |

-

evaluations.append(

|

| 97 |

-

str(video_id),

|

| 98 |

-

str(question),

|

| 99 |

-

str(answer_llm),

|

| 100 |

-

str(evaluation.get('Relevance', 'UNKNOWN')),

|

| 101 |

-

str(evaluation.get('Explanation', 'No explanation provided'))

|

| 102 |

-

)

|

| 103 |

else:

|

| 104 |

-

# Fallback to cosine similarity if no prompt template is provided

|

| 105 |

similarity = self.answer_similarity(answer_llm, row.get('reference_answer', ''))

|

| 106 |

-

evaluations.append(

|

| 107 |

-

str(video_id),

|

| 108 |

-

str(question),

|

| 109 |

-

str(answer_llm),

|

| 110 |

-

f"Similarity: {similarity}",

|

| 111 |

-

"Cosine similarity used for evaluation"

|

| 112 |

-

)

|

| 113 |

-

|

| 114 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3 |

import pandas as pd

|

| 4 |

import json

|

| 5 |

import ollama

|

| 6 |

+

import requests

|

| 7 |

+

import sqlite3

|

| 8 |

+

from tqdm import tqdm

|

| 9 |

+

import csv

|

| 10 |

|

| 11 |

class EvaluationSystem:

|

| 12 |

def __init__(self, data_processor, database_handler):

|

|

|

|

| 46 |

|

| 47 |

relevance_scores.append(self.relevance_scoring(query, retrieved_docs))

|

| 48 |

similarity_scores.append(self.answer_similarity(generated_answer, reference))

|

| 49 |

+

human_scores.append(self.human_evaluation(index_name, query))

|

| 50 |

|

| 51 |

return {

|

| 52 |

"avg_relevance_score": np.mean(relevance_scores),

|

|

|

|

| 68 |

print(f"Error in LLM evaluation: {str(e)}")

|

| 69 |

return None

|

| 70 |

|

| 71 |

+

def evaluate_rag(self, rag_system, ground_truth_file, prompt_template=None):

|

| 72 |

try:

|

| 73 |

ground_truth = pd.read_csv(ground_truth_file)

|

| 74 |

except FileNotFoundError:

|

| 75 |

print("Ground truth file not found. Please generate ground truth data first.")

|

| 76 |

return None

|

| 77 |

|

|

|

|

| 78 |

evaluations = []

|

| 79 |

|

| 80 |

+

for _, row in tqdm(ground_truth.iterrows(), total=len(ground_truth)):

|

| 81 |

question = row['question']

|

| 82 |

video_id = row['video_id']

|

| 83 |

|

|

|

|

| 96 |

if prompt_template:

|

| 97 |

evaluation = self.llm_as_judge(question, answer_llm, prompt_template)

|

| 98 |

if evaluation:

|

| 99 |

+

evaluations.append({

|

| 100 |

+

'video_id': str(video_id),

|

| 101 |

+

'question': str(question),

|

| 102 |

+

'answer': str(answer_llm),

|

| 103 |

+

'relevance': str(evaluation.get('Relevance', 'UNKNOWN')),

|

| 104 |

+

'explanation': str(evaluation.get('Explanation', 'No explanation provided'))

|

| 105 |

+

})

|

| 106 |

else:

|

|

|

|

| 107 |

similarity = self.answer_similarity(answer_llm, row.get('reference_answer', ''))

|

| 108 |

+

evaluations.append({

|

| 109 |

+

'video_id': str(video_id),

|

| 110 |

+

'question': str(question),

|

| 111 |

+

'answer': str(answer_llm),

|

| 112 |

+

'relevance': f"Similarity: {similarity}",

|

| 113 |

+

'explanation': "Cosine similarity used for evaluation"

|

| 114 |

+

})

|

| 115 |

+

|

| 116 |

+

# Save evaluations to CSV

|

| 117 |

+

csv_path = 'data/evaluation_results.csv'

|

| 118 |

+

with open(csv_path, 'w', newline='', encoding='utf-8') as csvfile:

|

| 119 |

+

fieldnames = ['video_id', 'question', 'answer', 'relevance', 'explanation']

|

| 120 |

+

writer = csv.DictWriter(csvfile, fieldnames=fieldnames)

|

| 121 |

+

writer.writeheader()

|

| 122 |

+

for eval_data in evaluations:

|

| 123 |

+

writer.writerow(eval_data)

|

| 124 |

+

|

| 125 |

+

print(f"Evaluation results saved to {csv_path}")

|

| 126 |

+

|

| 127 |

+

# Save evaluations to database

|

| 128 |

+

self.save_evaluations_to_db(evaluations)

|

| 129 |

+

|

| 130 |

+

return evaluations

|

| 131 |

+

|

| 132 |

+

def save_evaluations_to_db(self, evaluations):

|

| 133 |

+

with sqlite3.connect(self.db_handler.db_path) as conn:

|

| 134 |

+

cursor = conn.cursor()

|

| 135 |

+

cursor.execute('''

|

| 136 |

+

CREATE TABLE IF NOT EXISTS rag_evaluations (

|

| 137 |

+

id INTEGER PRIMARY KEY AUTOINCREMENT,

|

| 138 |

+

video_id TEXT,

|

| 139 |

+

question TEXT,

|

| 140 |

+

answer TEXT,

|

| 141 |

+

relevance TEXT,

|

| 142 |

+

explanation TEXT

|

| 143 |

+

)

|

| 144 |

+

''')

|

| 145 |

+

for eval_data in evaluations:

|

| 146 |

+

cursor.execute('''

|

| 147 |

+

INSERT INTO rag_evaluations (video_id, question, answer, relevance, explanation)

|

| 148 |

+

VALUES (?, ?, ?, ?, ?)

|

| 149 |

+

''', (eval_data['video_id'], eval_data['question'], eval_data['answer'],

|

| 150 |

+

eval_data['relevance'], eval_data['explanation']))

|

| 151 |

+

conn.commit()

|

| 152 |

+

print("Evaluation results saved to database")

|

| 153 |

+

|

| 154 |

+

def run_full_evaluation(self, rag_system, ground_truth_file, prompt_template=None):

|

| 155 |

+

# Load ground truth

|

| 156 |

+

ground_truth = pd.read_csv(ground_truth_file)

|

| 157 |

+

|

| 158 |

+

# Evaluate RAG

|

| 159 |

+

rag_evaluations = self.evaluate_rag(rag_system, ground_truth_file, prompt_template)

|

| 160 |

+

|

| 161 |

+

# Evaluate search performance

|

| 162 |

+

def search_function(query, video_id):

|

| 163 |

+

index_name = self.db_handler.get_elasticsearch_index_by_youtube_id(video_id)

|

| 164 |

+

if index_name:

|

| 165 |

+

return rag_system.data_processor.search(query, num_results=10, method='hybrid', index_name=index_name)

|

| 166 |

+

return []

|

| 167 |

+

|

| 168 |

+

search_performance = self.evaluate_search(ground_truth, search_function)

|

| 169 |

+

|

| 170 |

+

# Optimize search parameters

|

| 171 |

+

param_ranges = {'content': (0.0, 3.0)} # Example parameter range

|

| 172 |

+

|

| 173 |

+

def objective_function(params):

|

| 174 |

+

def parameterized_search(query, video_id):

|

| 175 |

+

index_name = self.db_handler.get_elasticsearch_index_by_youtube_id(video_id)

|

| 176 |

+

if index_name:

|

| 177 |

+

return rag_system.data_processor.search(query, num_results=10, method='hybrid', index_name=index_name, boost_dict=params)

|

| 178 |

+

return []

|

| 179 |

+

return self.evaluate_search(ground_truth, parameterized_search)['mrr']

|

| 180 |

+

|

| 181 |

+

best_params, best_score = self.simple_optimize(param_ranges, objective_function)

|

| 182 |

+

|

| 183 |

+

return {

|

| 184 |

+

"rag_evaluations": rag_evaluations,

|

| 185 |

+

"search_performance": search_performance,

|

| 186 |

+

"best_params": best_params,

|

| 187 |

+

"best_score": best_score

|

| 188 |

+

}

|

| 189 |

+

|

| 190 |

+

|

| 191 |

+

def hit_rate(self, relevance_total):

|

| 192 |

+

return sum(any(line) for line in relevance_total) / len(relevance_total)

|

| 193 |

+

|

| 194 |

+

def mrr(self, relevance_total):

|

| 195 |

+

scores = []

|

| 196 |

+

for line in relevance_total:

|

| 197 |

+

for rank, relevant in enumerate(line, 1):

|

| 198 |

+

if relevant:

|

| 199 |

+

scores.append(1 / rank)

|

| 200 |

+

break

|

| 201 |

+

else:

|

| 202 |

+

scores.append(0)

|

| 203 |

+

return sum(scores) / len(scores)

|

| 204 |

+

|

| 205 |

+

def simple_optimize(self, param_ranges, objective_function, n_iterations=10):

|

| 206 |

+

best_params = None

|

| 207 |

+

best_score = float('-inf')

|

| 208 |

+

for _ in range(n_iterations):

|

| 209 |

+

current_params = {param: np.random.uniform(min_val, max_val)

|

| 210 |

+

for param, (min_val, max_val) in param_ranges.items()}

|

| 211 |

+

current_score = objective_function(current_params)

|

| 212 |

+

if current_score > best_score:

|

| 213 |

+

best_score = current_score

|

| 214 |

+

best_params = current_params

|

| 215 |

+

return best_params, best_score

|

| 216 |

+

|

| 217 |

+

def evaluate_search(self, ground_truth, search_function):

|

| 218 |

+

relevance_total = []

|

| 219 |

+

for _, row in tqdm(ground_truth.iterrows(), total=len(ground_truth)):

|

| 220 |

+

video_id = row['video_id']

|

| 221 |

+

results = search_function(row['question'], video_id)

|

| 222 |

+

relevance = [d['video_id'] == video_id for d in results]

|

| 223 |

+

relevance_total.append(relevance)

|

| 224 |

+

return {

|

| 225 |

+

'hit_rate': self.hit_rate(relevance_total),

|

| 226 |

+

'mrr': self.mrr(relevance_total),

|

| 227 |

+

}

|

app/generate_ground_truth.py

CHANGED

|

@@ -46,13 +46,13 @@ def get_transcript_from_sqlite(db_path, video_id):

|

|

| 46 |

logger.error(f"Error retrieving transcript from SQLite: {str(e)}")

|

| 47 |

return None

|

| 48 |

|

| 49 |

-

def generate_questions(transcript):

|

| 50 |

prompt_template = """

|

| 51 |

You are an AI assistant tasked with generating questions based on a YouTube video transcript.

|

| 52 |

-

Formulate

|

| 53 |

Make the questions specific to the content of the transcript.

|

| 54 |

The questions should be complete and not too short. Use as few words as possible from the transcript.

|

| 55 |

-

|

| 56 |

|

| 57 |

The transcript:

|

| 58 |

|

|

@@ -63,60 +63,121 @@ def generate_questions(transcript):

|

|

| 63 |

{{"questions": ["question1", "question2", ..., "question10"]}}

|

| 64 |

""".strip()

|

| 65 |

|

| 66 |

-

|

|

|

|

| 67 |

|

| 68 |

-

|

| 69 |

-

|

| 70 |

-

|

| 71 |

-

|

| 72 |

-

|

| 73 |

-

|

| 74 |

-

|

| 75 |

-

|

| 76 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 77 |

|

| 78 |

def generate_ground_truth(db_handler, data_processor, video_id):

|

| 79 |

es = Elasticsearch([f'http://{os.getenv("ELASTICSEARCH_HOST", "localhost")}:{os.getenv("ELASTICSEARCH_PORT", "9200")}'])

|

| 80 |

|

| 81 |

-

# Get

|

| 82 |

-

|

| 83 |

-

|

| 84 |

-

if not index_name:

|

| 85 |

-

logger.error(f"No Elasticsearch index found for video {video_id}")

|

| 86 |

-

return None

|

| 87 |

-

|

| 88 |

-

# Extract the model name from the index name

|

| 89 |

-

model_name = extract_model_name(index_name)

|

| 90 |

-

|

| 91 |

-

if not model_name:

|

| 92 |

-

logger.error(f"Could not extract model name from index name: {index_name}")

|

| 93 |

-

return None

|

| 94 |

|

| 95 |

transcript = None

|

|

|

|

|

|

|

| 96 |

if index_name:

|

| 97 |

transcript = get_transcript_from_elasticsearch(es, index_name, video_id)

|

| 98 |

-

logger.info(f"Transcript to generate questions using elasticsearch is {transcript}")

|

| 99 |

|

| 100 |

if not transcript:

|

| 101 |

transcript = db_handler.get_transcript_content(video_id)

|

| 102 |

-

logger.info(f"Transcript to generate questions using textual data is {transcript}")

|

| 103 |

|

| 104 |

if not transcript:

|

| 105 |

logger.error(f"Failed to retrieve transcript for video {video_id}")

|

| 106 |

return None

|

| 107 |

|

| 108 |

-

questions

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 109 |

|

| 110 |

-

if

|

| 111 |

-

|

| 112 |

-

|

| 113 |

-

|

| 114 |

df.to_csv(csv_path, index=False)

|

| 115 |

-

|

| 116 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 117 |

else:

|

| 118 |

-

|

| 119 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 120 |

|

| 121 |

def generate_ground_truth_for_all_videos(db_handler, data_processor):

|

| 122 |

videos = db_handler.get_all_videos()

|

|

@@ -136,4 +197,18 @@ def generate_ground_truth_for_all_videos(db_handler, data_processor):

|

|

| 136 |

return df

|

| 137 |

else:

|

| 138 |

logger.error("Failed to generate questions for any video.")

|

| 139 |

-

return None

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 46 |

logger.error(f"Error retrieving transcript from SQLite: {str(e)}")

|

| 47 |

return None

|

| 48 |

|

| 49 |

+

def generate_questions(transcript, max_retries=3):

|

| 50 |

prompt_template = """

|

| 51 |

You are an AI assistant tasked with generating questions based on a YouTube video transcript.

|

| 52 |

+

Formulate EXACTLY 10 questions that a user might ask based on the provided transcript.

|

| 53 |

Make the questions specific to the content of the transcript.

|

| 54 |

The questions should be complete and not too short. Use as few words as possible from the transcript.

|

| 55 |

+

Ensure that all 10 questions are unique and not repetitive.

|

| 56 |

|

| 57 |

The transcript:

|

| 58 |

|

|

|

|

| 63 |

{{"questions": ["question1", "question2", ..., "question10"]}}

|

| 64 |

""".strip()

|

| 65 |

|

| 66 |

+

all_questions = set()

|

| 67 |

+

retries = 0

|

| 68 |

|

| 69 |

+

while len(all_questions) < 10 and retries < max_retries:

|

| 70 |

+

prompt = prompt_template.format(transcript=transcript)

|

| 71 |

+

try:

|

| 72 |

+

response = ollama.chat(

|

| 73 |

+

model='phi3.5',

|

| 74 |

+

messages=[{"role": "user", "content": prompt}]

|

| 75 |

+

)

|

| 76 |

+

questions = json.loads(response['message']['content'])['questions']

|

| 77 |

+

all_questions.update(questions)

|

| 78 |

+

except Exception as e:

|

| 79 |

+

logger.error(f"Error generating questions: {str(e)}")

|

| 80 |

+

retries += 1

|

| 81 |

+

|

| 82 |

+

if len(all_questions) < 10:

|

| 83 |

+

logger.warning(f"Could only generate {len(all_questions)} unique questions after {max_retries} attempts.")

|

| 84 |

+

|

| 85 |

+

return {"questions": list(all_questions)[:10]}

|

| 86 |

|

| 87 |

def generate_ground_truth(db_handler, data_processor, video_id):

|

| 88 |

es = Elasticsearch([f'http://{os.getenv("ELASTICSEARCH_HOST", "localhost")}:{os.getenv("ELASTICSEARCH_PORT", "9200")}'])

|

| 89 |

|

| 90 |

+

# Get existing questions for this video to avoid duplicates

|

| 91 |

+

existing_questions = set(q[1] for q in db_handler.get_ground_truth_by_video(video_id))

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 92 |

|

| 93 |

transcript = None

|

| 94 |

+

index_name = db_handler.get_elasticsearch_index_by_youtube_id(video_id)

|

| 95 |

+

|

| 96 |

if index_name:

|

| 97 |

transcript = get_transcript_from_elasticsearch(es, index_name, video_id)

|

|

|

|

| 98 |

|

| 99 |

if not transcript:

|

| 100 |

transcript = db_handler.get_transcript_content(video_id)

|

|

|

|

| 101 |

|

| 102 |

if not transcript:

|

| 103 |

logger.error(f"Failed to retrieve transcript for video {video_id}")

|

| 104 |

return None

|

| 105 |

|

| 106 |

+

# Generate questions until we have 10 unique ones

|

| 107 |

+

all_questions = set()

|

| 108 |

+

max_attempts = 3

|

| 109 |

+

attempts = 0

|

| 110 |

+

|

| 111 |

+

while len(all_questions) < 10 and attempts < max_attempts:

|

| 112 |

+

questions = generate_questions(transcript)

|

| 113 |

+

if questions and 'questions' in questions:

|

| 114 |

+

new_questions = set(questions['questions']) - existing_questions

|

| 115 |

+

all_questions.update(new_questions)

|

| 116 |

+

attempts += 1

|

| 117 |

+

|

| 118 |

+

if not all_questions:

|

| 119 |

+

logger.error("Failed to generate any unique questions.")

|

| 120 |

+

return None

|

| 121 |

+

|

| 122 |

+

# Store questions in database

|

| 123 |

+

db_handler.add_ground_truth_questions(video_id, all_questions)

|

| 124 |

+

|

| 125 |

+

# Create DataFrame and save to CSV

|

| 126 |

+

df = pd.DataFrame([(video_id, q) for q in all_questions], columns=['video_id', 'question'])

|

| 127 |

+

csv_path = 'data/ground-truth-retrieval.csv'

|

| 128 |

|

| 129 |

+

# Append to existing CSV if it exists, otherwise create new

|

| 130 |

+

if os.path.exists(csv_path):

|

| 131 |

+

df.to_csv(csv_path, mode='a', header=False, index=False)

|

| 132 |

+

else:

|

| 133 |

df.to_csv(csv_path, index=False)

|

| 134 |

+

|

| 135 |

+

logger.info(f"Ground truth data saved to {csv_path}")

|

| 136 |

+

return df

|

| 137 |

+

|

| 138 |

+

def get_ground_truth_display_data(db_handler, video_id=None, channel_name=None):

|

| 139 |

+

"""Get ground truth data from both database and CSV file"""

|

| 140 |

+

import pandas as pd

|

| 141 |

+

|

| 142 |

+

# Try to get data from database first

|

| 143 |

+

if video_id:

|

| 144 |

+

data = db_handler.get_ground_truth_by_video(video_id)

|

| 145 |

+

elif channel_name:

|

| 146 |

+

data = db_handler.get_ground_truth_by_channel(channel_name)

|

| 147 |

else:

|

| 148 |

+

data = []

|

| 149 |

+

|

| 150 |

+

# Create DataFrame from database data

|

| 151 |

+

if data:

|

| 152 |

+

db_df = pd.DataFrame(data, columns=['id', 'video_id', 'question', 'generation_date', 'channel_name'])

|

| 153 |

+

else:

|

| 154 |

+

db_df = pd.DataFrame()

|

| 155 |

+

|

| 156 |

+

# Try to get data from CSV

|

| 157 |

+

try:

|

| 158 |

+

csv_df = pd.read_csv('data/ground-truth-retrieval.csv')

|

| 159 |

+

if video_id:

|

| 160 |

+

csv_df = csv_df[csv_df['video_id'] == video_id]

|

| 161 |

+

elif channel_name:

|

| 162 |

+

# Join with videos table to get channel information

|

| 163 |

+

videos_df = pd.DataFrame(db_handler.get_all_videos(),

|

| 164 |

+

columns=['youtube_id', 'title', 'channel_name', 'upload_date'])

|

| 165 |

+

csv_df = csv_df.merge(videos_df, left_on='video_id', right_on='youtube_id')

|

| 166 |

+

csv_df = csv_df[csv_df['channel_name'] == channel_name]

|

| 167 |

+

except FileNotFoundError:

|

| 168 |

+

csv_df = pd.DataFrame()

|

| 169 |

+

|

| 170 |

+

# Combine data from both sources

|

| 171 |

+

if not db_df.empty and not csv_df.empty:

|

| 172 |

+

combined_df = pd.concat([db_df, csv_df]).drop_duplicates(subset=['video_id', 'question'])

|

| 173 |

+

elif not db_df.empty:

|

| 174 |

+

combined_df = db_df

|

| 175 |

+

elif not csv_df.empty:

|

| 176 |

+

combined_df = csv_df

|

| 177 |

+

else:

|

| 178 |

+

combined_df = pd.DataFrame()

|

| 179 |

+

|

| 180 |

+

return combined_df

|

| 181 |

|

| 182 |

def generate_ground_truth_for_all_videos(db_handler, data_processor):

|

| 183 |

videos = db_handler.get_all_videos()

|

|

|

|

| 197 |

return df

|

| 198 |

else:

|

| 199 |

logger.error("Failed to generate questions for any video.")

|

| 200 |

+

return None

|

| 201 |

+

|

| 202 |

+

def get_evaluation_display_data(video_id=None):

|

| 203 |

+

"""Get evaluation data from both database and CSV file"""

|

| 204 |

+

import pandas as pd

|

| 205 |

+

|

| 206 |

+

# Try to get data from CSV

|

| 207 |

+

try:

|

| 208 |

+

csv_df = pd.read_csv('data/evaluation_results.csv')

|

| 209 |

+

if video_id:

|

| 210 |

+

csv_df = csv_df[csv_df['video_id'] == video_id]

|

| 211 |

+

except FileNotFoundError:

|

| 212 |

+

csv_df = pd.DataFrame()

|

| 213 |

+

|

| 214 |

+

return csv_df

|

app/main.py

CHANGED

|

@@ -6,7 +6,7 @@ from database import DatabaseHandler

|

|

| 6 |

from rag import RAGSystem

|

| 7 |

from query_rewriter import QueryRewriter

|

| 8 |

from evaluation import EvaluationSystem

|

| 9 |

-

from generate_ground_truth import generate_ground_truth, generate_ground_truth_for_all_videos

|

| 10 |

from sentence_transformers import SentenceTransformer

|

| 11 |

import os

|

| 12 |

import sys

|

|

@@ -311,38 +311,46 @@ def main():

|

|

| 311 |

else:

|

| 312 |

video_df = pd.DataFrame(videos, columns=['youtube_id', 'title', 'channel_name', 'upload_date'])

|

| 313 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 314 |

st.dataframe(video_df)

|

| 315 |

selected_video_id = st.selectbox("Select a Video", video_df['youtube_id'].tolist(),

|

| 316 |

-

|

| 317 |

-

|

| 318 |

|

| 319 |

-

|

| 320 |

-

|

| 321 |

-

|

| 322 |

-

|

| 323 |

-

|

| 324 |

-

|

| 325 |

-

|

| 326 |

-

|

| 327 |

-

|

| 328 |

-

|

| 329 |

-

|

| 330 |

-

|

| 331 |

-

|

| 332 |

-

|

| 333 |

-

with st.spinner("Processing videos and generating ground truth..."):

|

| 334 |

-

for video_id in video_df['youtube_id']:

|

| 335 |

-

ensure_video_processed(db_handler, data_processor, video_id, embedding_model)

|

| 336 |

-

ground_truth_df = generate_ground_truth_for_all_videos(db_handler, data_processor)

|

| 337 |

-

if ground_truth_df is not None:

|

| 338 |

-

st.dataframe(ground_truth_df)

|

| 339 |

-

csv = ground_truth_df.to_csv(index=False)

|

| 340 |

-

st.download_button(

|

| 341 |

-

label="Download Ground Truth CSV (All Videos)",

|

| 342 |

-

data=csv,

|

| 343 |

-

file_name="ground_truth_all_videos.csv",

|

| 344 |

-

mime="text/csv",

|

| 345 |

-

)

|

| 346 |

|

| 347 |

with tab3:

|

| 348 |

st.header("RAG Evaluation")

|

|

@@ -350,22 +358,60 @@ def main():

|

|

| 350 |

try:

|

| 351 |

ground_truth_df = pd.read_csv('data/ground-truth-retrieval.csv')

|

| 352 |

ground_truth_available = True

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 353 |

except FileNotFoundError:

|

| 354 |

ground_truth_available = False

|

| 355 |

|

| 356 |

if ground_truth_available:

|

| 357 |

-

st.

|

| 358 |

-

|

| 359 |

-

|

| 360 |

-

|

| 361 |

-

|

| 362 |

-

|

| 363 |

-

|

| 364 |

-

|

| 365 |

-

|

| 366 |

-

|

| 367 |

-

|

| 368 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 369 |

else:

|

| 370 |

st.warning("No ground truth data available. Please generate ground truth data first.")

|

| 371 |

st.button("Run Evaluation", disabled=True)

|

|

|

|

| 6 |

from rag import RAGSystem

|

| 7 |

from query_rewriter import QueryRewriter

|

| 8 |

from evaluation import EvaluationSystem

|

| 9 |

+

from generate_ground_truth import generate_ground_truth, generate_ground_truth_for_all_videos, get_ground_truth_display_data, get_evaluation_display_data

|

| 10 |

from sentence_transformers import SentenceTransformer

|

| 11 |

import os

|

| 12 |

import sys

|

|

|

|

| 311 |

else:

|

| 312 |

video_df = pd.DataFrame(videos, columns=['youtube_id', 'title', 'channel_name', 'upload_date'])

|

| 313 |

|

| 314 |

+

# Add channel filter

|

| 315 |

+

channels = sorted(video_df['channel_name'].unique())

|

| 316 |

+

selected_channel = st.selectbox("Filter by Channel", ["All"] + channels, key="gt_channel_select")

|

| 317 |

+

|

| 318 |

+

if selected_channel != "All":

|

| 319 |

+

video_df = video_df[video_df['channel_name'] == selected_channel]

|

| 320 |

+

# Display existing ground truth for selected channel

|

| 321 |

+

gt_data = get_ground_truth_display_data(db_handler, channel_name=selected_channel)

|

| 322 |

+

if not gt_data.empty:

|

| 323 |

+

st.subheader("Existing Ground Truth Questions for Channel")

|

| 324 |

+

st.dataframe(gt_data)

|

| 325 |

+

|

| 326 |

+

# Add download button for channel ground truth

|

| 327 |

+

csv = gt_data.to_csv(index=False)

|

| 328 |

+

st.download_button(

|

| 329 |

+

label="Download Channel Ground Truth CSV",

|

| 330 |

+

data=csv,

|

| 331 |

+

file_name=f"ground_truth_{selected_channel}.csv",

|

| 332 |

+

mime="text/csv",

|

| 333 |

+

)

|

| 334 |

+

|

| 335 |

st.dataframe(video_df)

|

| 336 |

selected_video_id = st.selectbox("Select a Video", video_df['youtube_id'].tolist(),

|

| 337 |

+

format_func=lambda x: video_df[video_df['youtube_id'] == x]['title'].iloc[0],

|

| 338 |

+

key="gt_video_select")

|

| 339 |

|

| 340 |

+

# Display existing ground truth for selected video

|

| 341 |

+

gt_data = get_ground_truth_display_data(db_handler, video_id=selected_video_id)

|

| 342 |

+

if not gt_data.empty:

|

| 343 |

+

st.subheader("Existing Ground Truth Questions")

|

| 344 |

+

st.dataframe(gt_data)

|

| 345 |

+

|

| 346 |

+

# Add download button for video ground truth

|

| 347 |

+

csv = gt_data.to_csv(index=False)

|

| 348 |

+

st.download_button(

|

| 349 |

+

label="Download Video Ground Truth CSV",

|

| 350 |

+

data=csv,

|

| 351 |

+

file_name=f"ground_truth_{selected_video_id}.csv",

|

| 352 |

+

mime="text/csv",

|

| 353 |

+

)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 354 |

|

| 355 |

with tab3:

|

| 356 |

st.header("RAG Evaluation")

|

|

|

|

| 358 |

try:

|

| 359 |

ground_truth_df = pd.read_csv('data/ground-truth-retrieval.csv')

|

| 360 |

ground_truth_available = True

|

| 361 |

+

|

| 362 |

+

# Display existing evaluations

|

| 363 |

+

existing_evaluations = get_evaluation_display_data()

|

| 364 |

+

if not existing_evaluations.empty:

|

| 365 |

+

st.subheader("Existing Evaluation Results")

|

| 366 |

+

st.dataframe(existing_evaluations)

|

| 367 |

+

|

| 368 |

+

# Add download button for evaluation results

|

| 369 |

+

csv = existing_evaluations.to_csv(index=False)

|

| 370 |

+

st.download_button(

|

| 371 |

+

label="Download Evaluation Results CSV",

|

| 372 |

+

data=csv,

|

| 373 |

+

file_name="evaluation_results.csv",

|

| 374 |

+

mime="text/csv",

|

| 375 |

+

)

|

| 376 |

+

|

| 377 |

except FileNotFoundError:

|

| 378 |

ground_truth_available = False

|

| 379 |

|

| 380 |

if ground_truth_available:

|

| 381 |

+

if st.button("Run Full Evaluation"):

|

| 382 |

+

with st.spinner("Running full evaluation..."):

|

| 383 |

+

evaluation_results = evaluation_system.run_full_evaluation(rag_system, 'data/ground-truth-retrieval.csv', prompt_template)

|

| 384 |

+

|

| 385 |

+

st.subheader("RAG Evaluations")

|

| 386 |

+

rag_eval_df = pd.DataFrame(evaluation_results["rag_evaluations"])

|

| 387 |

+

st.dataframe(rag_eval_df)

|

| 388 |

+

|

| 389 |

+

st.subheader("Search Performance")

|

| 390 |

+

search_perf_df = pd.DataFrame([evaluation_results["search_performance"]])

|

| 391 |

+

st.dataframe(search_perf_df)

|

| 392 |

+

|

| 393 |

+

st.subheader("Optimized Search Parameters")

|

| 394 |

+

params_df = pd.DataFrame([{

|

| 395 |

+

'parameter': k,

|

| 396 |

+

'value': v,

|

| 397 |

+

'score': evaluation_results['best_score']

|

| 398 |

+

} for k, v in evaluation_results['best_params'].items()])

|

| 399 |

+

st.dataframe(params_df)

|

| 400 |

+

|

| 401 |

+

# Save to database

|

| 402 |

+

for video_id in rag_eval_df['video_id'].unique():

|

| 403 |

+

db_handler.save_search_performance(

|

| 404 |

+

video_id,

|

| 405 |

+

evaluation_results["search_performance"]['hit_rate'],

|

| 406 |

+

evaluation_results["search_performance"]['mrr']

|

| 407 |

+

)

|

| 408 |

+

db_handler.save_search_parameters(

|

| 409 |

+

video_id,

|

| 410 |

+

evaluation_results['best_params'],

|

| 411 |

+

evaluation_results['best_score']

|

| 412 |

+

)

|

| 413 |

+

|

| 414 |

+

st.success("Evaluation complete. Results saved to database and CSV.")

|

| 415 |

else:

|

| 416 |

st.warning("No ground truth data available. Please generate ground truth data first.")

|

| 417 |

st.button("Run Evaluation", disabled=True)

|

app/rag_evaluation.py

CHANGED

|

@@ -1,3 +1,4 @@

|

|

|

|

|

| 1 |

import pandas as pd

|

| 2 |

import numpy as np

|

| 3 |

from tqdm import tqdm

|

|

@@ -42,7 +43,7 @@ def search(query):

|

|

| 42 |

)

|

| 43 |

return results

|

| 44 |

|

| 45 |

-

prompt_template =

|

| 46 |

You're an AI assistant for YouTube video transcripts. Answer the QUESTION based on the CONTEXT from our transcript database.

|

| 47 |

Use only the facts from the CONTEXT when answering the QUESTION.

|

| 48 |

|

|

@@ -50,7 +51,7 @@ QUESTION: {question}

|

|

| 50 |

|

| 51 |

CONTEXT:

|

| 52 |

{context}

|

| 53 |

-

|

| 54 |

|

| 55 |

def build_prompt(query, search_results):

|

| 56 |

context = "\n\n".join([f"Segment {i+1}: {result['content']}" for i, result in enumerate(search_results)])

|

|

@@ -125,7 +126,7 @@ def objective(boost_params):

|

|

| 125 |

return results['mrr']

|

| 126 |

|

| 127 |

# RAG evaluation

|

| 128 |

-

prompt2_template =

|

| 129 |

You are an expert evaluator for a Youtube transcript assistant.

|

| 130 |

Your task is to analyze the relevance of the generated answer to the given question.

|

| 131 |

Based on the relevance of the generated answer, you will classify it

|

|

@@ -143,7 +144,7 @@ and provide your evaluation in parsable JSON without using code blocks:

|

|

| 143 |

"Relevance": "NON_RELEVANT" | "PARTLY_RELEVANT" | "RELEVANT",

|

| 144 |

"Explanation": "[Provide a brief explanation for your evaluation]"

|

| 145 |

}}

|

| 146 |

-

|

| 147 |

|

| 148 |

def evaluate_rag(sample_size=200):

|

| 149 |

sample = ground_truth.sample(n=sample_size, random_state=1)

|

|

@@ -190,4 +191,5 @@ if __name__ == "__main__":

|

|

| 190 |

print("Evaluation complete. Results stored in the database.")

|

| 191 |

|

| 192 |

# Close the database connection

|

| 193 |

-

conn.close()

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

import pandas as pd

|

| 3 |

import numpy as np

|

| 4 |

from tqdm import tqdm

|

|

|

|

| 43 |

)

|

| 44 |

return results

|

| 45 |

|

| 46 |

+

prompt_template = '''

|

| 47 |

You're an AI assistant for YouTube video transcripts. Answer the QUESTION based on the CONTEXT from our transcript database.

|

| 48 |

Use only the facts from the CONTEXT when answering the QUESTION.

|

| 49 |

|

|

|

|

| 51 |

|

| 52 |

CONTEXT:

|

| 53 |

{context}

|

| 54 |

+

'''.strip()

|

| 55 |

|

| 56 |

def build_prompt(query, search_results):

|

| 57 |

context = "\n\n".join([f"Segment {i+1}: {result['content']}" for i, result in enumerate(search_results)])

|

|

|

|

| 126 |

return results['mrr']

|

| 127 |

|

| 128 |

# RAG evaluation

|

| 129 |

+

prompt2_template = '''

|

| 130 |

You are an expert evaluator for a Youtube transcript assistant.

|

| 131 |

Your task is to analyze the relevance of the generated answer to the given question.

|

| 132 |

Based on the relevance of the generated answer, you will classify it

|

|

|

|

| 144 |

"Relevance": "NON_RELEVANT" | "PARTLY_RELEVANT" | "RELEVANT",

|

| 145 |

"Explanation": "[Provide a brief explanation for your evaluation]"

|

| 146 |

}}

|

| 147 |

+

'''.strip()

|

| 148 |

|

| 149 |

def evaluate_rag(sample_size=200):

|

| 150 |

sample = ground_truth.sample(n=sample_size, random_state=1)

|

|

|

|

| 191 |

print("Evaluation complete. Results stored in the database.")

|

| 192 |

|

| 193 |

# Close the database connection

|

| 194 |

+

conn.close()

|

| 195 |

+

"""

|

data/evaluation_results.csv

ADDED

|

@@ -0,0 +1,181 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|