Update README.md

Browse files

README.md

CHANGED

|

@@ -1,7 +1,173 @@

|

|

| 1 |

---

|

| 2 |

license: mit

|

|

|

|

|

|

|

|

|

|

| 3 |

language:

|

| 4 |

- en

|

| 5 |

size_categories:

|

| 6 |

-

- n<1K

|

| 7 |

-

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

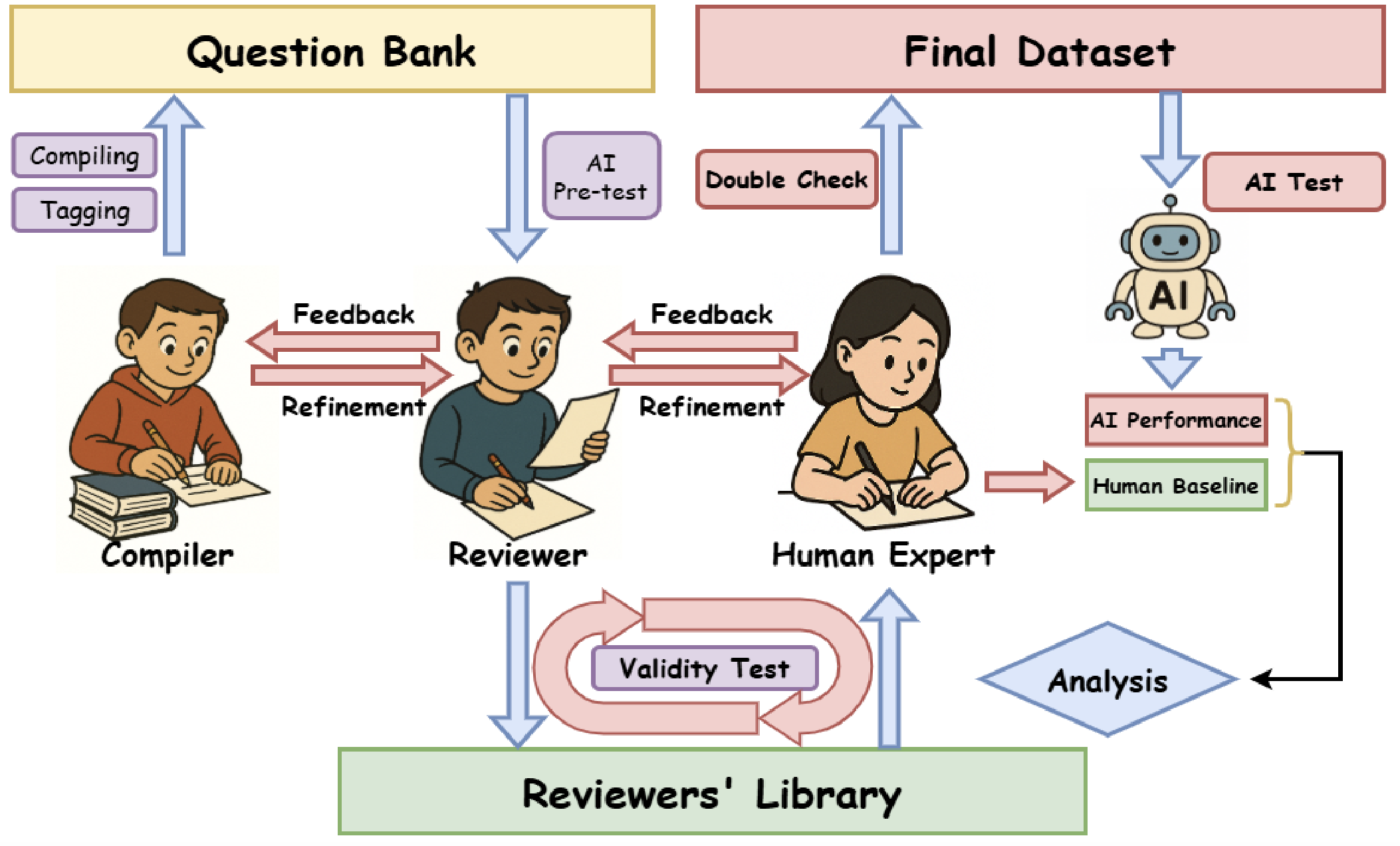

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

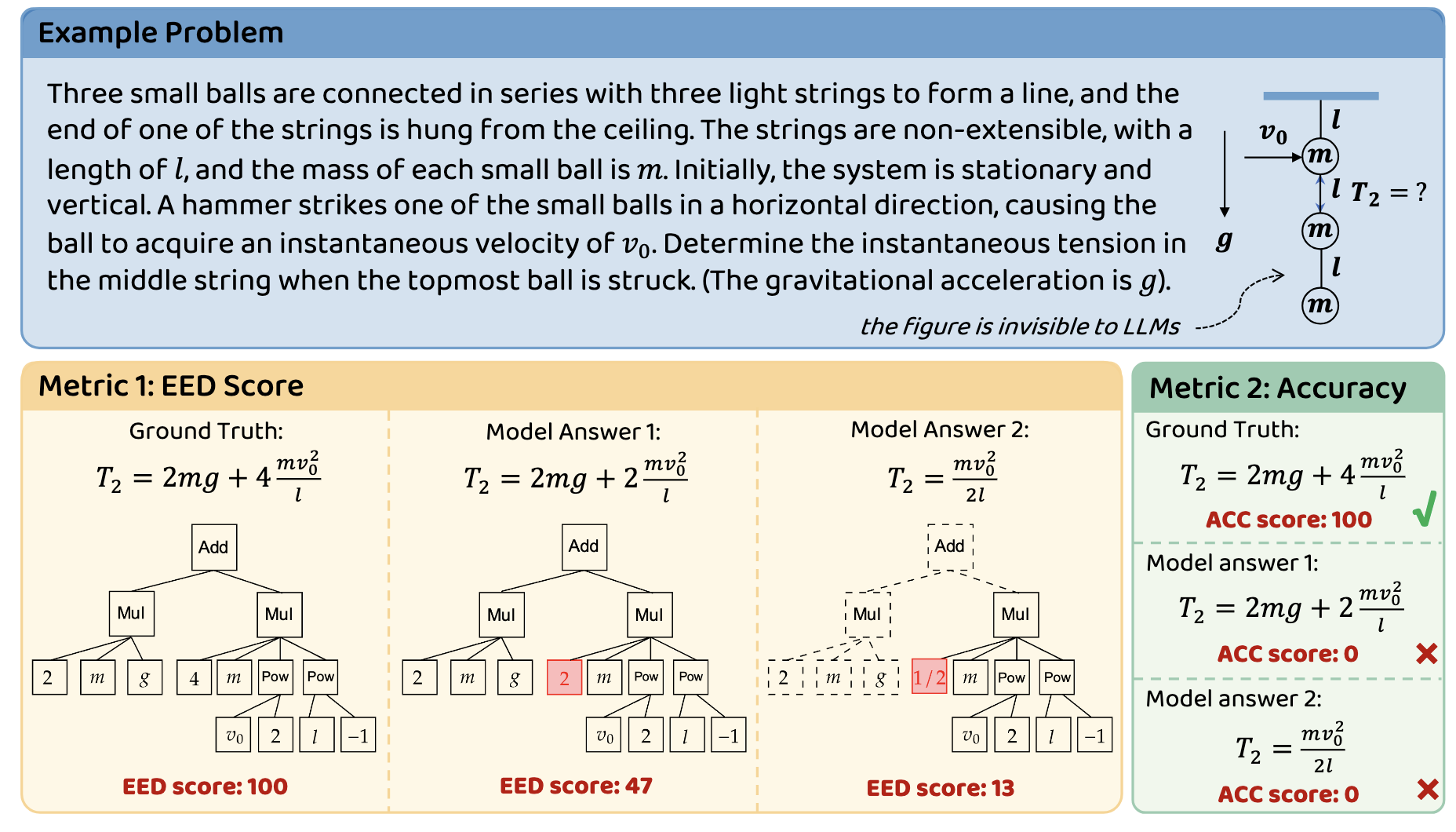

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

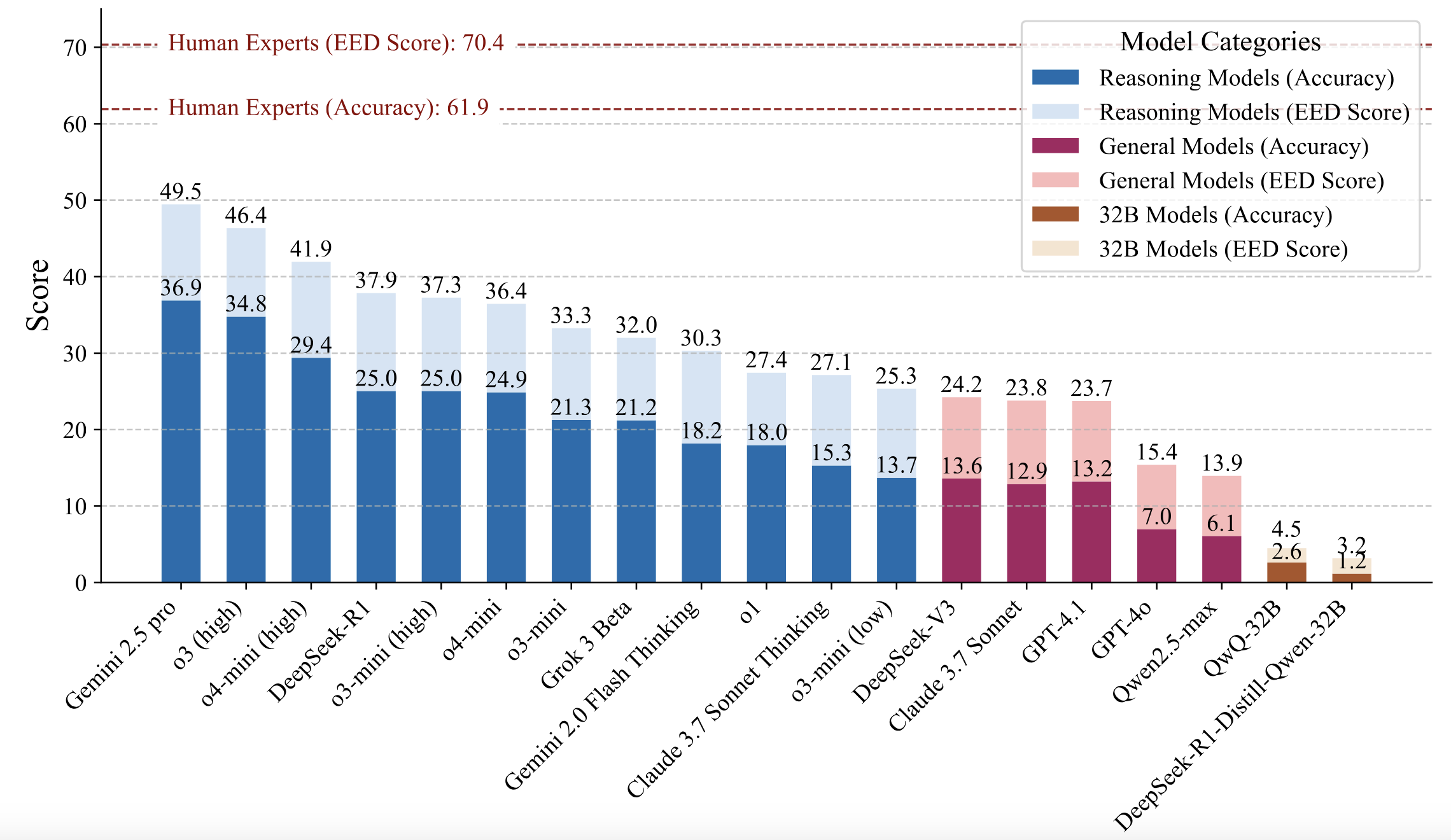

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

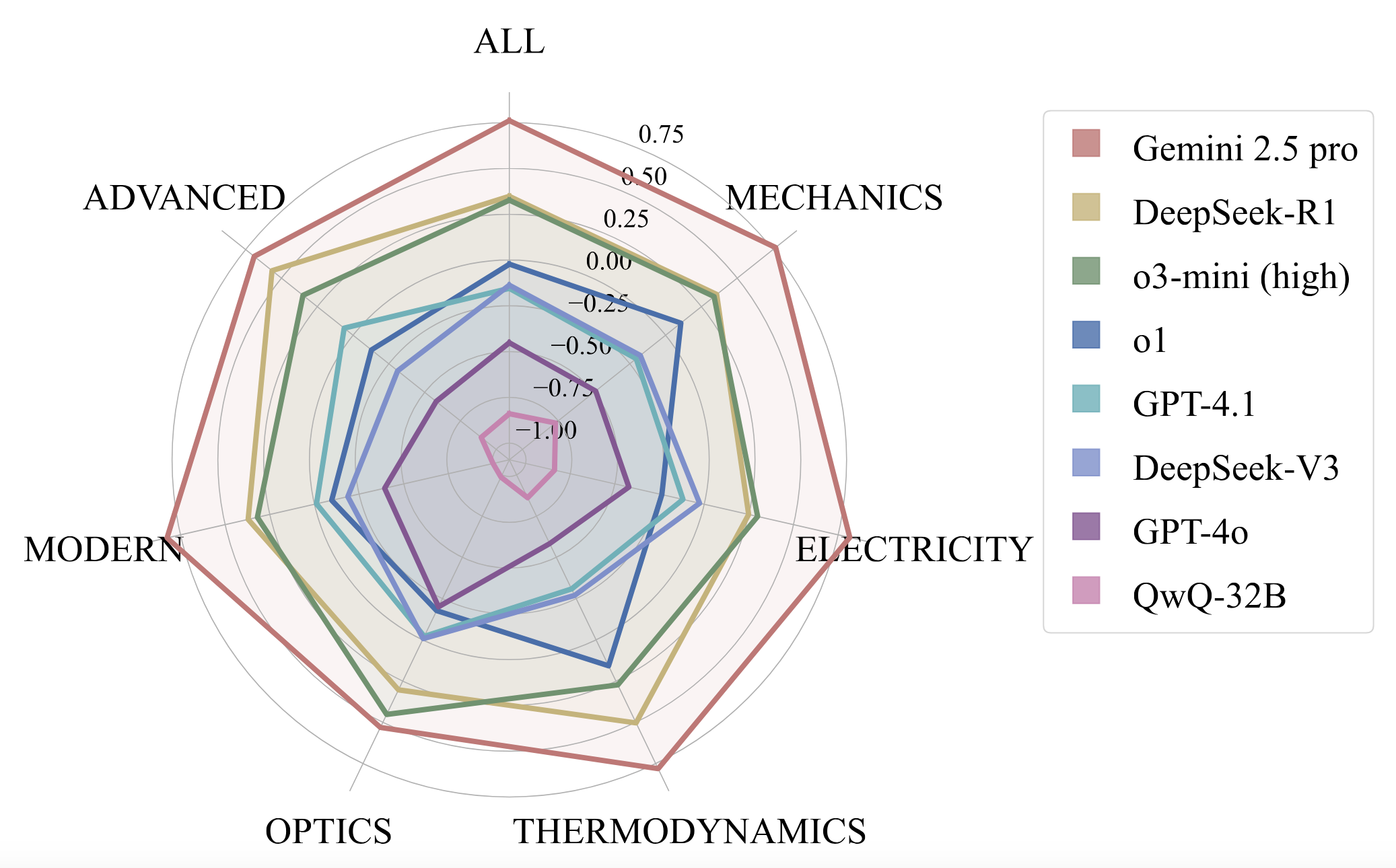

|

|

|

|

|

|

|

|

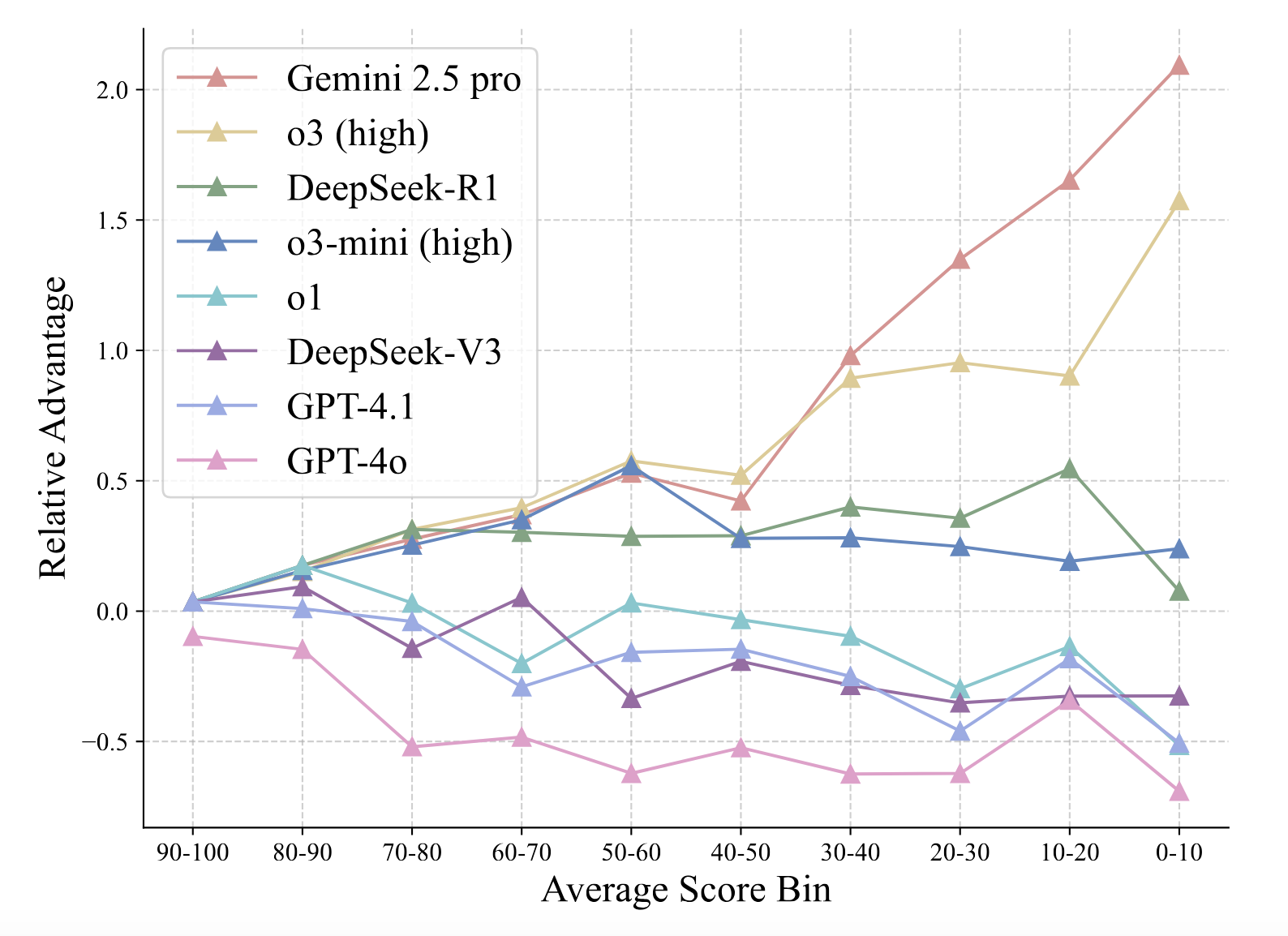

|

|

|

|

|

|

|

|

|

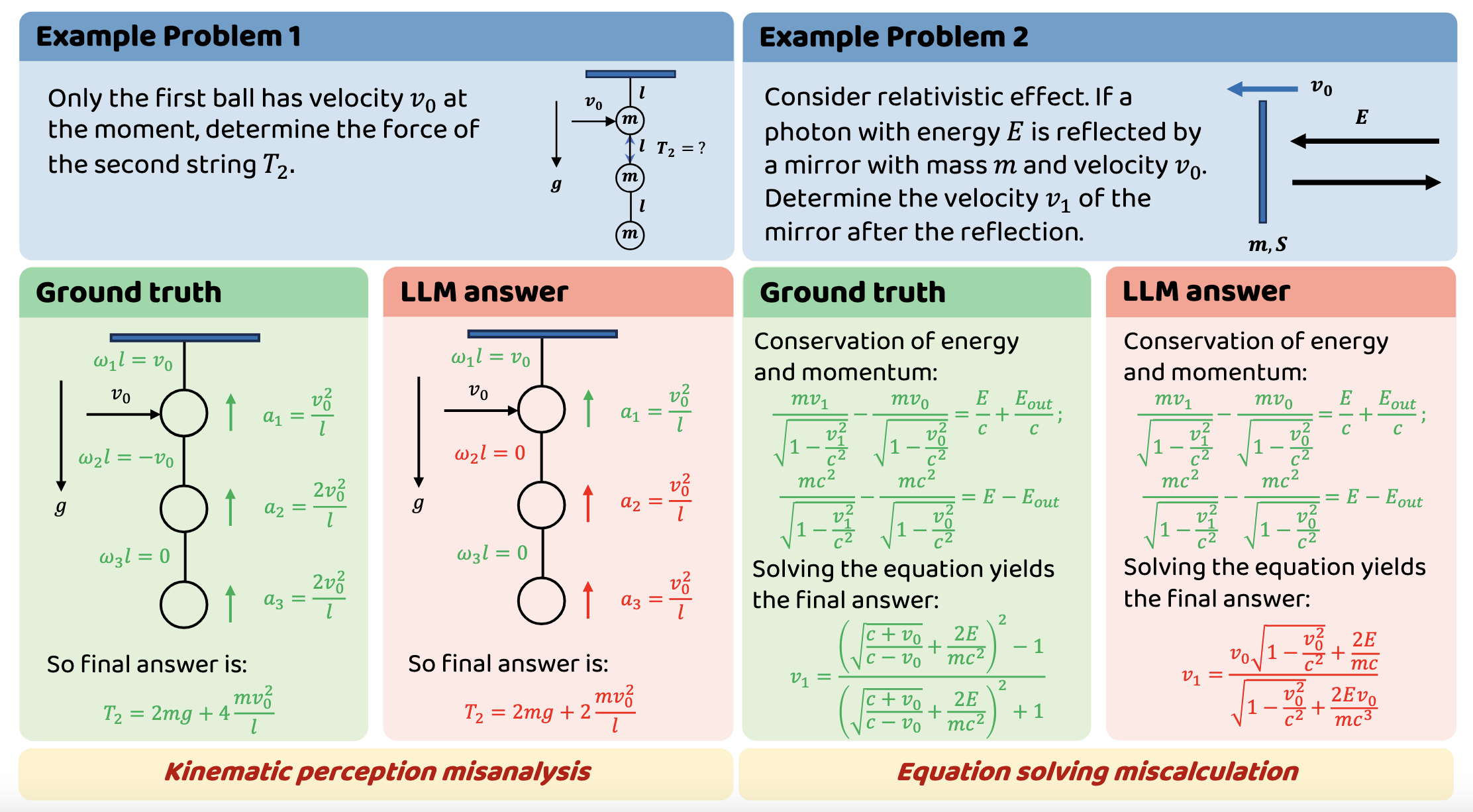

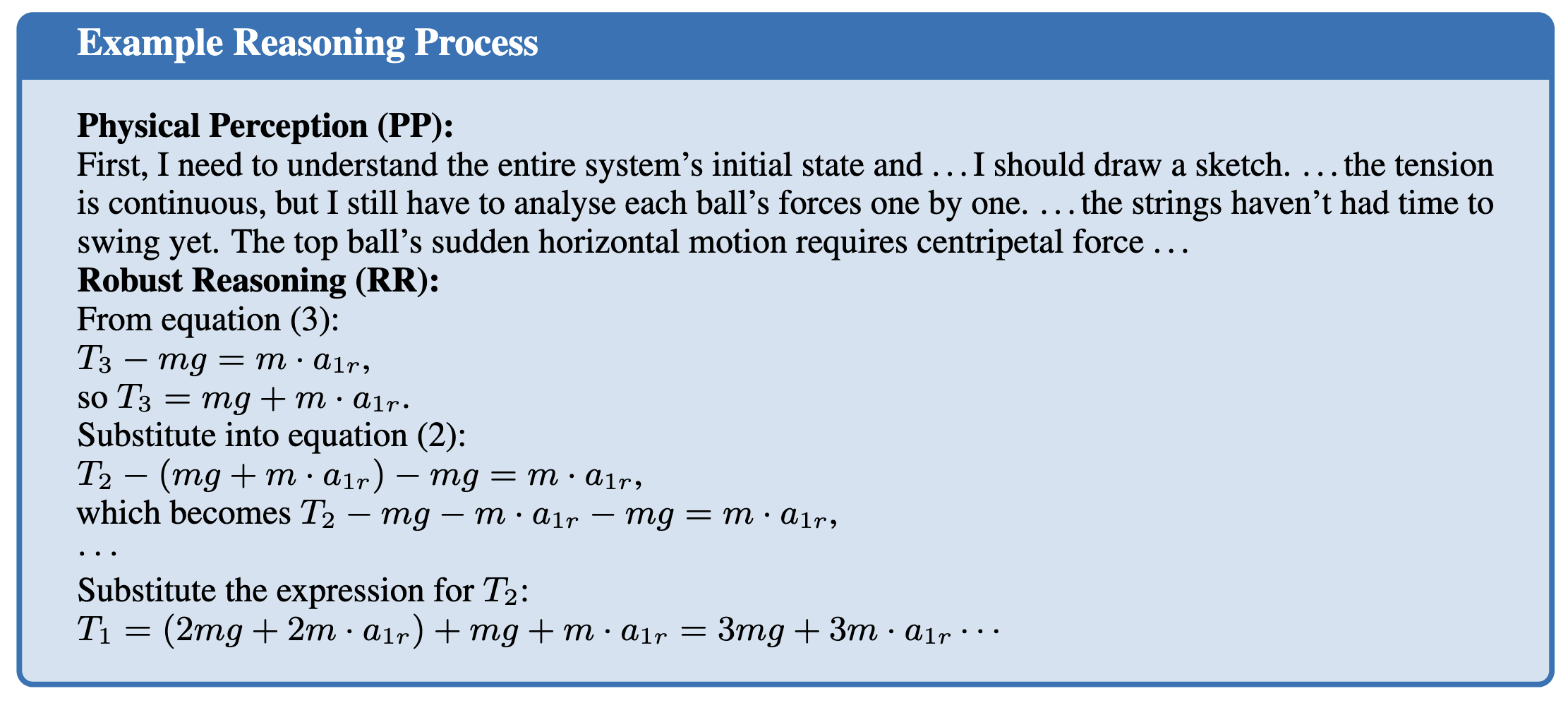

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

license: mit

|

| 3 |

+

task_categories:

|

| 4 |

+

- question-answering

|

| 5 |

+

- mathematical-reasoning

|

| 6 |

language:

|

| 7 |

- en

|

| 8 |

size_categories:

|

| 9 |

+

- 500<n<1K

|

| 10 |

+

---

|

| 11 |

+

<div align="center">

|

| 12 |

+

|

| 13 |

+

<p align="center" style="font-size:28px"><b>PHYBench: Holistic Evaluation of Physical Perception and Reasoning in Large Language Models</b></p>

|

| 14 |

+

<p align="center">

|

| 15 |

+

<a href="https://phybench.ai">[🌐 Project]</a>

|

| 16 |

+

<a href="https://arxiv.org/abs/2504.16074">[📄 Paper]</a>

|

| 17 |

+

<a href="https://github.com/PHYBench/PHYBench">[💻 Code]</a>

|

| 18 |

+

<a href="#-overview">[🌟 Overview]</a>

|

| 19 |

+

<a href="#-data-details">[🔧 Data Details]</a>

|

| 20 |

+

<a href="#-citation">[🚩 Citation]</a>

|

| 21 |

+

</p>

|

| 22 |

+

|

| 23 |

+

[](https://opensource.org/license/mit)

|

| 24 |

+

|

| 25 |

+

---

|

| 26 |

+

|

| 27 |

+

</div>

|

| 28 |

+

|

| 29 |

+

## Acknowledgement and Progress

|

| 30 |

+

|

| 31 |

+

We have released **100 examples** complete with handwritten solutions, questions, tags, and reference answers, along with **another 400 examples** that include questions and tags.

|

| 32 |

+

|

| 33 |

+

For full-dataset evaluation and the real-time leaderboard, we are actively working on their finalization and will release them as soon as possible. Thank you for your patience and support!

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

## 🌟 Overview

|

| 38 |

+

|

| 39 |

+

PHYBench is the first large-scale benchmark specifically designed to evaluate **physical perception** and **robust reasoning** capabilities in Large Language Models (LLMs). With **500 meticulously curated physics problems** spanning mechanics, electromagnetism, thermodynamics, optics, modern physics, and advanced physics, it challenges models to demonstrate:

|

| 40 |

+

|

| 41 |

+

- **Real-world grounding**: Problems based on tangible physical scenarios (e.g., ball inside a bowl, pendulum dynamics)

|

| 42 |

+

- **Multi-step reasoning**: Average solution length of 3,000 characters requiring 10+ intermediate steps

|

| 43 |

+

- **Symbolic precision**: Strict evaluation of LaTeX-formulated expressions through novel **Expression Edit Distance (EED) Score**

|

| 44 |

+

|

| 45 |

+

Key innovations:

|

| 46 |

+

|

| 47 |

+

- 🎯 **EED Metric**: Smoother measurement based on the edit-distance on expression tree

|

| 48 |

+

- 🏋️ **Difficulty Spectrum**: High school, undergraduate, Olympiad-level physics problems

|

| 49 |

+

- 🔍 **Error Taxonomy**: Explicit evaluation of Physical Perception (PP) vs Robust Reasoning (RR) failures

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

## 🔧 Example Problems

|

| 54 |

+

|

| 55 |

+

**Put some problem cards here**

|

| 56 |

+

|

| 57 |

+

**Answer Types**:

|

| 58 |

+

🔹 Strict symbolic expressions (e.g., `\sqrt{\frac{2g}{3R}}`)

|

| 59 |

+

🔹 Multiple equivalent forms accepted

|

| 60 |

+

🔹 No numerical approximations or equation chains

|

| 61 |

+

|

| 62 |

+

## 🛠️ Data Curation

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

### 3-Stage Rigorous Validation Pipeline

|

| 67 |

+

|

| 68 |

+

1. **Expert Creation & Strict Screening**

|

| 69 |

+

|

| 70 |

+

- 178 PKU physics students contributed problems that are:

|

| 71 |

+

- Almost entirely original/custom-created

|

| 72 |

+

- None easily found through direct internet searches or standard reference materials

|

| 73 |

+

- Strict requirements:

|

| 74 |

+

- Single unambiguous symbolic answer (e.g., `T=2mg+4mv₀²/l`)

|

| 75 |

+

- Text-only solvability (no diagrams/multimodal inputs)

|

| 76 |

+

- Rigorously precise statements to avoid ambiguity

|

| 77 |

+

- Solvable using only basic physics principles (no complex specialized knowledge required)

|

| 78 |

+

- No requirements on AI test to avoid filtering for AI weaknesses

|

| 79 |

+

2. **Multi-Round Academic Review**

|

| 80 |

+

|

| 81 |

+

- Dedicated internal platform for peer review:

|

| 82 |

+

|

| 83 |

+

- 3-tier verification process:

|

| 84 |

+

- Initial filtering: Reviewers assessed format validity and appropriateness (not filtering for AI weaknesses)

|

| 85 |

+

- Ambiguity detection and revision: Reviewers analyzed LLM-generated solutions to identify potential ambiguities in problem statements

|

| 86 |

+

- Iterative improvement cycle: Questions refined repeatedly until all LLMs can understand the question and follow the instructions to produce the expressions it believes to be right.

|

| 87 |

+

3. **Human Expert Finalization**

|

| 88 |

+

|

| 89 |

+

- **81 PKU students participated:**

|

| 90 |

+

- Each student independently solved 8 problems from the dataset

|

| 91 |

+

- Evaluate question clarity, statement rigor, and answer correctness

|

| 92 |

+

- Establish of human baseline performance meanwhile

|

| 93 |

+

|

| 94 |

+

## 📊 Evaluation Protocol

|

| 95 |

+

|

| 96 |

+

### Machine Evaluation

|

| 97 |

+

|

| 98 |

+

**Dual Metrics**:

|

| 99 |

+

|

| 100 |

+

1. **Accuracy**: Binary correctness (expression equivalence via SymPy simplification)

|

| 101 |

+

2. **EED Score**: Continuous assessment of expression tree similarity

|

| 102 |

+

|

| 103 |

+

The EED Score evaluates the similarity between the model-generated answer and the ground truth by leveraging the concept of expression tree edit distance. The process involves the following steps:

|

| 104 |

+

|

| 105 |

+

1. **Simplification of Expressions**:Both the ground truth (`gt`) and the model-generated answer (`gen`) are first converted into simplified symbolic expressions using the `sympy.simplify()` function. This step ensures that equivalent forms of the same expression are recognized as identical.

|

| 106 |

+

2. **Equivalence Check**:If the simplified expressions of `gt` and `gen` are identical, the EED Score is assigned a perfect score of 100, indicating complete correctness.

|

| 107 |

+

3. **Tree Conversion and Edit Distance Calculation**:If the expressions are not identical, they are converted into tree structures. The edit distance between these trees is then calculated using an extended version of the Zhang-Shasha algorithm. This distance represents the minimum number of node-level operations (insertions, deletions, and updates) required to transform one tree into the other.

|

| 108 |

+

4. **Relative Edit Distance and Scoring**:The relative edit distance \( r \) is computed as the ratio of the edit distance to the size of the ground truth tree. The EED Score is then determined based on this relative distance:

|

| 109 |

+

|

| 110 |

+

- If \( r = 0 \) (i.e., the expressions are identical), the score is 100.

|

| 111 |

+

- If \( 0 < r < 0.6 \), the score is calculated as \( 60 - 100r \).

|

| 112 |

+

- If \( r \geq 0.6 \), the score is 0, indicating a significant discrepancy between the model-generated answer and the ground truth.

|

| 113 |

+

|

| 114 |

+

This scoring mechanism provides a continuous measure of similarity, allowing for a nuanced evaluation of the model's reasoning capabilities beyond binary correctness.

|

| 115 |

+

|

| 116 |

+

**Key Advantages**:

|

| 117 |

+

|

| 118 |

+

- 204% higher sample efficiency vs binary metrics

|

| 119 |

+

- Distinguishes coefficient errors (30<EED score<60) vs structural errors (EED score<30)

|

| 120 |

+

|

| 121 |

+

### Human Baseline

|

| 122 |

+

|

| 123 |

+

- **Participants**: 81 PKU physics students

|

| 124 |

+

- **Protocol**:

|

| 125 |

+

- **8 problems per student**: Each student solved a set of 8 problems from PHYBench dataset

|

| 126 |

+

- **Time-constrained solving**: 3 hours

|

| 127 |

+

- **Performance metrics**:

|

| 128 |

+

- **61.9±2.1% average accuracy**

|

| 129 |

+

- **70.4±1.8 average EED Score**

|

| 130 |

+

- Top quartile reached 71.4% accuracy and 80.4 EED Score

|

| 131 |

+

- Significant outperformance vs LLMs: Human experts outperformed all evaluated LLMs at 99% confidence level

|

| 132 |

+

- Human experts significantly outperformed all evaluated LLMs (99.99% confidence level)

|

| 133 |

+

|

| 134 |

+

## 📝 Main Results

|

| 135 |

+

|

| 136 |

+

The results of the evaluation are shown in the following figure:

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

1. **Significant Performance Gap**: Even state-of-the-art LLMs significantly lag behind human experts in physical reasoning. The highest-performing model, Gemini 2.5 Pro, achieved only a 36.9% accuracy, compared to the human baseline of 61.9%.

|

| 140 |

+

2. **EED Score Advantages**: The EED Score provides a more nuanced evaluation of model performance compared to traditional binary scoring methods.

|

| 141 |

+

3. **Domain-Specific Strengths**: Different models exhibit varying strengths in different domains of physics:

|

| 142 |

+

|

| 143 |

+

|

| 144 |

+

* Gemini 2.5 Pro shows strong performance across most domains

|

| 145 |

+

* DeepSeek-R1 and o3-mini (high) shows comparable performance in mechanics and electricity

|

| 146 |

+

* Most models struggle with advanced physics and modern physics

|

| 147 |

+

4. **Difficulty Handling**: Comparing the advantage across problem difficulties, Gemini 2.5 Pro gains a pronounced edge on harder problems, followed by o3 (high).

|

| 148 |

+

|

| 149 |

+

|

| 150 |

+

## 😵💫 Error Analysis

|

| 151 |

+

|

| 152 |

+

|

| 153 |

+

|

| 154 |

+

We categorize the capabilities assessed by the PHYBench benchmark into two key dimensions: Physical Perception (PP) and Robust Reasoning (RR):

|

| 155 |

+

|

| 156 |

+

1. **Physical Perception (PP) Errors**: During this phase, models engage in intensive semantic reasoning, expending significant cognitive effort to identify relevant physical objects, variables, and dynamics. Models make qualitative judgments about which physical effects are significant and which can be safely ignored. PP manifests as critical decision nodes in the reasoning chain. An example of a PP error is shown in Example Problem 1.

|

| 157 |

+

2. **Robust Reasoning (RR) Errors**: In this phase, models produce numerous lines of equations and perform symbolic reasoning. This process forms the connecting chains between perception nodes. RR involves consistent mathematical derivation, equation solving, and proper application of established conditions. An example of a RR error is shown in Example Problem 2.

|

| 158 |

+

|

| 159 |

+

|

| 160 |

+

|

| 161 |

+

## 🚩 Citation

|

| 162 |

+

|

| 163 |

+

```bibtex

|

| 164 |

+

@misc{qiu2025phybenchholisticevaluationphysical,

|

| 165 |

+

title={PHYBench: Holistic Evaluation of Physical Perception and Reasoning in Large Language Models},

|

| 166 |

+

author={Shi Qiu and Shaoyang Guo and Zhuo-Yang Song and Yunbo Sun and Zeyu Cai and Jiashen Wei and Tianyu Luo and Yixuan Yin and Haoxu Zhang and Yi Hu and Chenyang Wang and Chencheng Tang and Haoling Chang and Qi Liu and Ziheng Zhou and Tianyu Zhang and Jingtian Zhang and Zhangyi Liu and Minghao Li and Yuku Zhang and Boxuan Jing and Xianqi Yin and Yutong Ren and Zizhuo Fu and Weike Wang and Xudong Tian and Anqi Lv and Laifu Man and Jianxiang Li and Feiyu Tao and Qihua Sun and Zhou Liang and Yushu Mu and Zhongxuan Li and Jing-Jun Zhang and Shutao Zhang and Xiaotian Li and Xingqi Xia and Jiawei Lin and Zheyu Shen and Jiahang Chen and Qiuhao Xiong and Binran Wang and Fengyuan Wang and Ziyang Ni and Bohan Zhang and Fan Cui and Changkun Shao and Qing-Hong Cao and Ming-xing Luo and Muhan Zhang and Hua Xing Zhu},

|

| 167 |

+

year={2025},

|

| 168 |

+

eprint={2504.16074},

|

| 169 |

+

archivePrefix={arXiv},

|

| 170 |

+

primaryClass={cs.CL},

|

| 171 |

+

url={https://arxiv.org/abs/2504.16074},

|

| 172 |

+

}

|

| 173 |

+

```

|